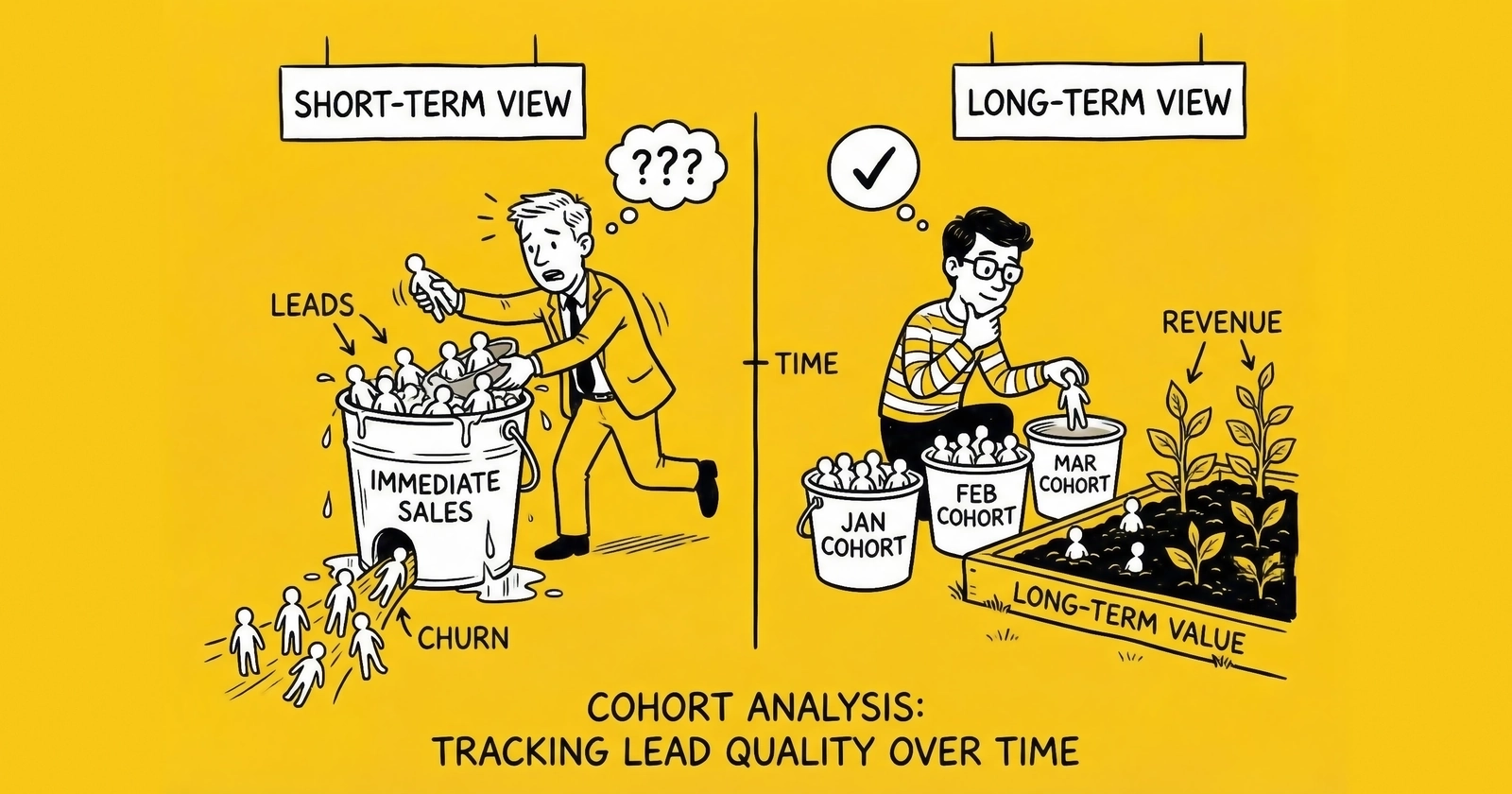

Stop evaluating lead sources with point-in-time snapshots that hide the true story. Cohort analysis reveals whether your leads are actually improving, degrading, or costing more than they earn across their complete lifecycle.

The dashboard shows your August leads converting at 12%. Your team celebrates. Budget flows to the sources that delivered those numbers. Three months later, the same sources are producing leads that convert at 6%, but you do not discover this until your buyer calls to cancel.

This is not a measurement problem. It is a methodology problem.

Point-in-time metrics tell you what happened yesterday. Cohort analysis tells you what will happen tomorrow. The difference between these two approaches separates operators who scale profitably from those who optimize their way into crisis.

Lead quality is not static. A traffic source that delivered exceptional leads in Q1 may have exhausted its best audience segments by Q3. A landing page change that boosted conversion rate may have diluted intent signals that buyers depend on. A new affiliate partner may have started strong before their traffic quality degraded. None of these patterns appear in aggregate metrics. All of them become visible through cohort analysis.

This guide provides the framework for implementing cohort analysis in lead generation operations. We will cover the methodology, the metrics that matter, the tools that work, and the interpretation skills that transform data into decisions. By the end, you will know exactly how to track lead quality over time – and more importantly, how to act on what you find.

What Is Cohort Analysis and Why Does It Matter for Leads

Cohort analysis is a method of evaluating groups of leads that share a common characteristic over a defined time period. Rather than looking at all leads in aggregate, you segment them into cohorts based on when they were generated, which source produced them, or what campaign attracted them – then track their performance as each cohort ages.

The power of cohort analysis lies in its ability to reveal patterns that aggregate metrics obscure. When you calculate your overall conversion rate, you are blending leads generated yesterday with leads generated six months ago. You are mixing traffic from sources that have fundamentally different quality profiles. You are averaging outcomes that should be compared, not combined.

The Aggregate Metrics Trap

Consider this scenario. Your blended lead conversion rate is 10%. This looks stable month over month, so you assume quality is consistent. But the reality underneath that number tells a different story:

- Leads from January converted at 14%

- Leads from February converted at 12%

- Leads from March converted at 10%

- Leads from April converted at 8%

- Leads from May converted at 6%

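Your aggregate rate stayed at 10% only because older, stronger cohorts such as January kept converting and propped up the blended number while newer, weaker cohorts such as May added volume. Averaged together, 14%, 12%, 10%, 8%, and 6% come out to 10% (assuming similar volume each month), which hides the decline entirely. By the time the full picture emerges, you have already purchased three months of leads at the degraded quality level.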

Cohort analysis would have revealed the downward trend by March, giving you 60-90 days of lead time to investigate and intervene. Depending on your volume and lead prices, that early warning can be worth tens of thousands of dollars in avoided bad inventory.
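To make the trap concrete, here is a minimal sketch of the arithmetic. The conversion rates are the ones listed above; the equal volume of 1,000 leads per month and the function names are assumptions chosen only to keep the example simple.

```python
# Hypothetical monthly cohorts: (label, leads, conversions).
# Equal volumes are an assumption; the rates match the scenario above.
cohorts = [
    ("Jan", 1000, 140),
    ("Feb", 1000, 120),
    ("Mar", 1000, 100),
    ("Apr", 1000, 80),
    ("May", 1000, 60),
]


def blended_rate(rows):
    """Conversion rate across all cohorts combined."""
    leads = sum(n for _, n, _ in rows)
    conversions = sum(c for _, _, c in rows)
    return conversions / leads


def cohort_rates(rows):
    """Conversion rate per cohort, keyed by cohort label."""
    return {label: c / n for label, n, c in rows}


print(f"Blended: {blended_rate(cohorts):.1%}")
for label, rate in cohort_rates(cohorts).items():
    print(f"{label}: {rate:.1%}")
```

The blended figure looks healthy, while the per-cohort view shows a steady drop from 14% to 6%.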

The Time Dimension Most Operators Miss

Lead quality has a temporal dimension that most measurement systems ignore. A lead captured today will not reach final disposition for weeks or months. The buyer needs time to contact, qualify, and convert that lead. Returns take 7-30 days to surface. Customer churn may not appear for 90-180 days.

This means today’s quality metrics actually describe leads from the past. When you celebrate a high conversion rate, you are celebrating the quality of leads generated 30-60 days ago – a reality that affects everything from lead return policies to source optimization. When you panic about declining performance, you are reacting to problems that began weeks before.

Cohort analysis aligns your measurement with this reality. Instead of asking “what is our conversion rate today,” it asks “what is the conversion rate of leads generated in Week 12, measured at 30, 60, and 90 days after generation.” This seemingly small shift in framing changes everything about how you identify problems, measure interventions, and optimize sources.
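As a rough illustration of measuring one cohort at fixed maturity points, the sketch below counts only conversions that happened within a given number of days of generation. The record layout (a generation timestamp paired with an optional conversion timestamp) and the sample dates are assumptions, not a prescribed schema.

```python
from datetime import datetime, timedelta


def conversion_rate_at(leads, maturity_days):
    """Share of leads that converted within `maturity_days` of generation.

    `leads` is a list of (generated_at, converted_at) tuples, where
    converted_at is None for leads that have not converted.
    """
    if not leads:
        return 0.0
    cutoff = timedelta(days=maturity_days)
    converted = sum(
        1
        for generated_at, converted_at in leads
        if converted_at is not None and converted_at - generated_at <= cutoff
    )
    return converted / len(leads)


# Hypothetical cohort of four leads generated on the same day.
generated = datetime(2025, 3, 17)
cohort = [
    (generated, datetime(2025, 4, 1)),   # converted after 15 days
    (generated, datetime(2025, 5, 20)),  # converted after 64 days
    (generated, None),
    (generated, None),
]

for days in (30, 60, 90):
    print(days, conversion_rate_at(cohort, days))
```

A figure for a maturity point the cohort has not yet reached is provisional, so it is worth tracking cohort age alongside each rate.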

Lead Quality Degradation Patterns

Across thousands of lead generation operations, several quality degradation patterns appear consistently. Understanding these patterns helps you know what to look for in your cohort analysis.

Audience Exhaustion Pattern: A traffic source starts strong, capturing the most motivated segment of its audience. Over weeks or months, it progressively taps into less motivated segments. Quality declines gradually and steadily.

Algorithm Fatigue Pattern: Advertising platforms optimize for the metrics you give them. Initially, they find high-quality prospects. As those segments saturate, the algorithm expands to broader audiences that convert on your form but not on buyer outcomes.

Seasonal Shift Pattern: Many verticals have quality variations tied to seasons. Auto insurance leads generated in December (during renewal season) often convert differently than leads generated in March. Without cohort analysis, you cannot distinguish seasonal patterns from source problems.

Fraud Escalation Pattern: When bad actors discover a profitable scheme, they start small. If undetected, they scale. Early cohorts show normal quality; later cohorts show progressively worse outcomes as fraud volume increases. Implementing click fraud detection technology helps identify these patterns before they cause significant damage.

Landing Page Drift Pattern: Conversion rate optimization often trades form completions for lead quality. Early cohorts (before the optimization) convert better downstream than later cohorts (after forms became easier).

Cohort analysis reveals each of these patterns through the simple act of comparing like to like – leads from the same origin, tracked through the same lifecycle, measured at the same maturity points.

Essential Metrics for Lead Cohort Analysis

Effective cohort analysis requires tracking the right metrics at the right time intervals. Not every metric matters equally, and tracking too many creates noise that obscures signal. Focus on these core measurements.

Primary Quality Indicators

Contact Rate by Cohort Age

Contact rate measures the percentage of leads successfully reached by the buyer’s sales team. This metric deserves priority because it reveals data quality issues and intent problems before downstream conversion data becomes available.

Track contact rate at 24 hours, 48 hours, 7 days, and 14 days post-delivery. A healthy cohort should achieve 50-70% contact within 7 days for most verticals. Contact rates below 40% at 7 days indicate data quality issues, timing problems, or intent mismatches – and may signal the need for improved lead validation services.

Conversion Rate by Cohort Age

Track the percentage of leads that convert to the buyer’s desired outcome – typically a sale, appointment, or quote. The timeline for this measurement varies by vertical:

- Auto insurance: 7-30 days to policy bind

- Medicare: 14-60 days (enrollment windows affect timing)

- Solar: 30-90 days (longer sales cycle)

- Mortgage: 30-120 days (rate shopping and processing)

- Legal: 30-180 days (case evaluation and engagement)

For each cohort, calculate conversion rate at multiple intervals: 30 days, 60 days, 90 days, and final. The curve between these points reveals as much as the final number.

Return Rate by Cohort

Returns – leads rejected by buyers after initial acceptance – provide one of the earliest quality signals available. A lead returned at day 10 tells you something about leads generated 10 days ago, not 60 days ago.

Track return rates by cohort at 7 days, 14 days, and 30 days post-delivery. Return rates above 15% typically indicate systematic quality problems. More importantly, watch for changes from one cohort to the next. A source that historically ran 8% returns and suddenly spikes to 18% requires immediate investigation.

Return Reason Distribution by Cohort

Raw return rates tell you something is wrong. Return reason distribution tells you what is wrong. Track the percentage breakdown of returns by reason code:

- Invalid phone (wrong number, disconnected): Data quality problem

- Cannot contact (rings, no answer): Intent or timing problem

- Not shopping (changed mind, already purchased): Intent problem

- Duplicate (already received from another source): Source problem

- Incorrect information: Form design or fraud problem

When you see return reasons shift between cohorts – for example, “not shopping” returns doubling from 15% to 30% of returns – you have identified a specific problem to address.
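One way to spot this kind of shift is to compute each reason's share of returns per cohort and look at the largest change. The sketch below assumes returns arrive as a flat list of reason codes; the code names and counts are hypothetical.

```python
from collections import Counter


def reason_distribution(return_reasons):
    """Map each reason code to its share of all returns in one cohort."""
    counts = Counter(return_reasons)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {reason: n / total for reason, n in counts.items()}


def largest_shift(earlier, later):
    """Return (reason, change in share) for the reason whose share moved most."""
    reasons = set(earlier) | set(later)
    deltas = {r: later.get(r, 0.0) - earlier.get(r, 0.0) for r in reasons}
    reason = max(deltas, key=lambda r: abs(deltas[r]))
    return reason, deltas[reason]


# Hypothetical reason codes for 20 returns in each of two cohorts.
earlier = (["invalid_phone"] * 8 + ["cannot_contact"] * 6
           + ["not_shopping"] * 3 + ["duplicate"] * 3)
later = (["invalid_phone"] * 6 + ["cannot_contact"] * 5
         + ["not_shopping"] * 6 + ["duplicate"] * 3)

reason, change = largest_shift(reason_distribution(earlier),
                               reason_distribution(later))
print(reason, f"{change:+.0%}")
```

Here "not shopping" moves from 15% to 30% of returns, which points at intent rather than data quality.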

Secondary Quality Indicators

Speed to Sale by Cohort

This metric measures the average time from lead delivery to closed sale. Faster conversion typically indicates higher intent. When successive cohorts take longer to reach a sale, the leads are less motivated, less qualified, or both.

Revenue Per Lead by Cohort

Beyond conversion rate, track the actual revenue generated per lead in each cohort. This matters especially in verticals where transaction sizes vary. A cohort might convert at the same rate but produce smaller policies, shorter contracts, or lower-value purchases.

Calculate revenue per lead at 30, 60, and 90 days post-delivery. Compare cohorts on equivalent timelines – a Week 12 cohort at 60 days versus a Week 8 cohort at 60 days, not versus Week 8 at 90 days.

Customer Lifetime Value by Cohort

For operations with visibility into downstream customer behavior, track retention and lifetime value by acquisition cohort. This extends the analysis timeline significantly but reveals quality dimensions invisible in conversion metrics.

A lead cohort with average conversion rate but exceptional retention (low churn, high upsell) may be more valuable than a cohort with high conversion but poor retention. This distinction only emerges through extended cohort tracking – the same principle that drives customer lifetime value analysis for lead buyers.

Financial Metrics That Reveal Hidden Quality

True Cost Per Acquisition by Cohort

Calculate the fully-loaded cost to acquire a customer from each cohort. This includes lead cost, return costs, validation costs, sales labor, and any other variable expenses.

Compare true CPA across cohorts on equivalent maturity timelines. Rising CPA across sequential cohorts often indicates quality degradation before conversion metrics show it – you are working harder (more contacts, more sales effort) to achieve the same outcomes. Understanding true cost per lead calculation helps you capture all the factors that affect profitability.

Net Revenue Per Lead by Cohort

Net revenue is gross revenue minus returns, refunds, chargebacks, and variable costs. A cohort that generates $50,000 gross revenue but $8,000 in returns and $3,000 in chargebacks (a net of $39,000) has very different economics than a cohort generating $48,000 gross with $2,000 in returns and $500 in chargebacks (a net of $45,500).

Return on Lead Investment by Cohort

ROLI measures net revenue relative to lead acquisition cost. Calculate this at consistent intervals: 30-day ROLI, 60-day ROLI, 90-day ROLI, and final ROLI. This single metric summarizes unit economics by cohort better than any other.

Target ROLI benchmarks vary by vertical and business model, but sustainable operations typically need 2.0x-4.0x final ROLI (generating $2-4 in net revenue for every $1 spent on lead acquisition).
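The net revenue and ROLI definitions above reduce to a few lines of arithmetic. In this sketch the gross, return, and chargeback figures come from the earlier example, while the $15,000 lead cost per cohort is a hypothetical number added for illustration.

```python
def net_revenue(gross, returns=0.0, refunds=0.0, chargebacks=0.0,
                variable_costs=0.0):
    """Gross revenue minus returns, refunds, chargebacks, and variable costs."""
    return gross - returns - refunds - chargebacks - variable_costs


def roli(net, lead_cost):
    """Return on lead investment: net revenue per dollar of lead spend."""
    if lead_cost <= 0:
        raise ValueError("lead_cost must be positive")
    return net / lead_cost


LEAD_COST = 15_000  # hypothetical acquisition cost for each cohort

cohort_a = net_revenue(50_000, returns=8_000, chargebacks=3_000)
cohort_b = net_revenue(48_000, returns=2_000, chargebacks=500)

print(f"Cohort A: net ${cohort_a:,.0f}, ROLI {roli(cohort_a, LEAD_COST):.2f}x")
print(f"Cohort B: net ${cohort_b:,.0f}, ROLI {roli(cohort_b, LEAD_COST):.2f}x")
```

Under these assumptions the cohort with lower gross revenue ends up with the higher ROLI, which is the point of netting out returns and chargebacks before comparing.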

Designing Your Cohort Analysis Framework

Building an effective cohort analysis capability requires intentional design. You need to choose the right cohort definitions, establish appropriate time intervals, and create systems that track leads through their complete lifecycle.

Cohort Definition Strategies

Time-Based Cohorts

The most common approach groups leads by generation date – daily, weekly, or monthly cohorts depending on volume. Weekly cohorts work well for most operations, providing enough volume for statistical significance while maintaining granularity for trend detection.

High-volume operations (1,000+ leads daily) benefit from daily cohorts. Lower-volume operations may need monthly cohorts to accumulate meaningful sample sizes.

Source-Based Cohorts

Segment leads by acquisition source or traffic channel. This reveals which sources maintain quality over time and which degrade. Create cohorts for each significant traffic source: Google Ads, Facebook Ads, organic search, email, affiliates, and partnerships.

For affiliate networks, create cohorts by individual affiliate when possible. One degrading affiliate within a network can poison aggregate metrics while remaining invisible at the network level.

Campaign-Based Cohorts

Group leads by the specific campaign or creative that attracted them. This granular approach identifies not just which channels work but which messages within channels attract quality leads.

Campaign cohorts are especially valuable for testing. When you launch new creative, track its cohort separately from established campaigns. New creative may convert on forms at high rates but produce leads that perform poorly downstream.

Combined Dimensions

The most sophisticated implementations use multi-dimensional cohorts: source plus time, campaign plus source, or all three dimensions combined. This reveals patterns like “Facebook leads from Q1 converted better than Facebook leads from Q2” or “Affiliate X leads from Campaign A outperform Affiliate X leads from Campaign B.”

The trade-off: more dimensions mean smaller cohort sizes and longer times to statistical significance. Balance granularity against sample size requirements.

Optimal Cohort Time Windows

Generation Window

Choose a consistent time period for grouping leads into cohorts. Options include:

- Daily cohorts: Best for high-volume operations (1,000+ leads/day) needing rapid trend detection

- Weekly cohorts: Optimal for most operations, balancing granularity with sample size

- Monthly cohorts: Appropriate for lower-volume operations or long-cycle verticals

Maintain consistent windows across your analysis. Mixing weekly and monthly cohorts makes comparison difficult.
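Assigning a lead to a generation window can be as simple as deriving a label from its timestamp. The sketch below uses ISO week numbering for weekly cohorts, which is one reasonable convention rather than the only one, and assumes timestamps are timezone-aware.

```python
from datetime import datetime, timezone


def _as_utc(generated_at):
    """Require a timezone-aware timestamp and express it in UTC."""
    if generated_at.tzinfo is None:
        raise ValueError("generated_at must be timezone-aware")
    return generated_at.astimezone(timezone.utc)


def weekly_cohort(generated_at):
    """Label a lead with its ISO year and week number, e.g. '2025-W12'."""
    iso = _as_utc(generated_at).isocalendar()
    return f"{iso[0]}-W{iso[1]:02d}"


def monthly_cohort(generated_at):
    """Label a lead with its calendar year and month, e.g. '2025-03'."""
    utc = _as_utc(generated_at)
    return f"{utc.year}-{utc.month:02d}"


lead_time = datetime(2025, 3, 17, 14, 5, tzinfo=timezone.utc)
print(weekly_cohort(lead_time), monthly_cohort(lead_time))
```

Note that ISO weeks can straddle a year boundary: a lead generated on December 30, 2024 belongs to ISO week 1 of 2025, so weekly and monthly labels should not be mixed within one analysis.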

Measurement Windows

Define the intervals at which you will evaluate cohort performance. Align these with your business cycle:

- Short-term metrics (7-14 days): Contact rate, return rate, initial quality signals

- Medium-term metrics (30-60 days): Conversion rate, revenue per lead, cost per acquisition

- Long-term metrics (90-180 days): Lifetime value, retention, final ROLI

Document these windows in your measurement protocol. Consistency matters more than precision – comparing the Week 12 cohort at 60 days to the Week 8 cohort at 60 days requires both measurements to use the same definition of “60 days.”

Sample Size and Statistical Significance

Cohort analysis requires sufficient volume for conclusions to be meaningful. A cohort of 20 leads with 15% conversion (3 leads) is not statistically different from a cohort with 10% conversion (2 leads). Random variation dominates at small sample sizes.
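A quick way to check whether two cohorts really differ is a two-proportion z-test. The sketch below uses the pooled normal approximation, which is rough at very small counts, so treat the small-sample result as directional only. The function name and the larger 2,000-lead comparison are illustrative assumptions.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates,
    using a pooled two-proportion z-test (normal approximation)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # both cohorts converted at exactly 0% or 100%
    z = (p_a - p_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# 15% vs 10% on 20 leads each (3 vs 2 conversions)
p_small = two_proportion_p_value(3, 20, 2, 20)
# The same rates on 2,000 leads each (300 vs 200 conversions)
p_large = two_proportion_p_value(300, 2000, 200, 2000)

print(f"20 leads per cohort:    p = {p_small:.2f}")
print(f"2,000 leads per cohort: p = {p_large:.6f}")
```

The same five-point gap is indistinguishable from noise at 20 leads per cohort and very unlikely to be noise at 2,000.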

Minimum Sample Size Guidelines

For conversion rate comparisons, plan for at least 100 leads per cohort at minimum, with 200-500 preferred. This allows detection of meaningful differences (5+ percentage point changes) with reasonable confidence.

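For return rate analysis, smaller samples can still provide an early directional signal. A cohort of 50 leads with 16% returns versus 50 leads with 8% returns (8 returns versus 4) is worth a closer look, but a gap that size can still arise by chance, so confirm it against the next cohort before acting.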

For revenue metrics, sample size requirements depend on revenue variance. High-variance verticals (legal, solar) need larger samples than low-variance verticals (auto insurance, Medicare).

Handling Low-Volume Cohorts

When individual cohorts lack sufficient volume:

- Extend the cohort window (from weekly to bi-weekly or monthly)

- Combine similar sources into source categories

- Use rolling cohort aggregation (Week 10-12 combined versus Week 7-9 combined)

- Apply Bayesian methods that incorporate prior knowledge

Never draw conclusions from underpowered cohorts. Flag small sample sizes in your reports and wait for sufficient data before acting.
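For the Bayesian option mentioned in the list above, one simple form is a Beta-Binomial estimate that pulls a small cohort's observed rate toward a prior. This is a sketch under stated assumptions: the prior rate of 10% and the prior strength of 100 pseudo-leads are hypothetical values you would replace with your own baseline.

```python
def shrunk_rate(conversions, leads, prior_rate, prior_strength):
    """Posterior mean conversion rate under a Beta prior.

    The prior behaves like `prior_strength` extra leads that converted
    at `prior_rate`, so small cohorts stay close to the prior and large
    cohorts are dominated by their own data.
    """
    alpha = prior_rate * prior_strength + conversions
    beta = (1 - prior_rate) * prior_strength + (leads - conversions)
    return alpha / (alpha + beta)


# Both cohorts observed 15% conversion; only the sample size differs.
small = shrunk_rate(3, 20, prior_rate=0.10, prior_strength=100)
large = shrunk_rate(300, 2000, prior_rate=0.10, prior_strength=100)

print(f"20-lead cohort:    {small:.1%}")
print(f"2,000-lead cohort: {large:.1%}")
```

The 20-lead cohort's estimate stays near the prior, while the 2,000-lead cohort's estimate stays near its observed 15%. This tempers overreaction to small cohorts but does not replace waiting for sufficient data.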

Implementing Cohort Tracking Systems

Moving from concept to implementation requires data infrastructure, tracking protocols, and reporting systems. Most operations already have the raw data – the challenge is organizing it for cohort analysis.

Data Requirements and Sources

Lead Generation Data

Capture and retain: lead ID, generation timestamp, source/channel, campaign, creative, landing page, all form fields, validation results, and consent metadata.

Critical: The generation timestamp must be accurate and preserved through all downstream systems. Rounding timestamps to days or losing timezone information destroys your ability to create precise cohorts.

Lead Delivery Data

Track: delivery timestamp, buyer ID, delivery method, acceptance/rejection status, rejection reason, price, and any buyer-provided quality scores.

Outcome Data

Collect from buyers: contact attempts, contact success, conversion status, conversion timestamp, revenue amount, returns (with reason codes and timestamps), and any downstream quality feedback.

This is often the hardest data to obtain. Buyers may be reluctant to share detailed outcome data. Build data sharing into your buyer agreements. Consider offering volume incentives or quality guarantees in exchange for outcome reporting.

Financial Data

Track: lead acquisition cost (fully loaded), validation costs, return credit amounts, and net revenue by lead.

Building the Tracking Infrastructure

Unique Lead Identifiers

Every lead needs a persistent unique identifier that survives through the entire lifecycle. This ID links generation data to delivery data to outcome data to financial data.

Use UUIDs or a similar format for which collisions are practically impossible. Do not use sequential integers, which can duplicate across systems, or source-specific IDs that cannot be joined later.
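As a small illustration, the sketch below generates a random UUID at capture time and uses it to attach outcome records to generation records. The field names (`lead_id`, `source`, `converted`) and the in-memory join are assumptions for demonstration; a real pipeline would do the join in the warehouse.

```python
import uuid


def new_lead_id():
    """Random (version 4) UUID as a string, created once at capture time."""
    return str(uuid.uuid4())


def join_by_lead_id(generation_rows, outcome_rows):
    """Attach each outcome record to its generation record via lead_id.

    Leads with no outcome reported yet get outcome=None.
    """
    outcomes = {row["lead_id"]: row for row in outcome_rows}
    return [{**g, "outcome": outcomes.get(g["lead_id"])} for g in generation_rows]


# Hypothetical records from two separate systems.
first_id, second_id = new_lead_id(), new_lead_id()
generation = [
    {"lead_id": first_id, "source": "affiliate_x"},
    {"lead_id": second_id, "source": "google_ads"},
]
outcomes = [{"lead_id": first_id, "converted": True}]

for row in join_by_lead_id(generation, outcomes):
    print(row["source"], row["outcome"])
```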

Timestamp Standardization

Normalize all timestamps to a single timezone (UTC recommended). Convert incoming timestamps at ingestion. Store generation time, delivery time, and all event times in consistent format.

Millisecond precision matters for real-time operations but is optional for cohort analysis. Minute-level precision suffices for most cohort purposes.
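The sketch below shows one way to normalize at ingestion, assuming incoming timestamps are ISO 8601 strings that carry a UTC offset. It also shows why lost timezone information matters: the same lead can land in a different weekly cohort depending on which clock you read.

```python
from datetime import datetime, timezone


def to_utc(timestamp_with_offset):
    """Parse an ISO 8601 timestamp that carries a UTC offset; return it in UTC."""
    parsed = datetime.fromisoformat(timestamp_with_offset)
    if parsed.tzinfo is None:
        raise ValueError("timestamp has no timezone information")
    return parsed.astimezone(timezone.utc)


# Hypothetical lead captured on a Sunday evening, five hours behind UTC.
raw = "2025-03-16T21:30:00-05:00"
local = datetime.fromisoformat(raw)
utc = to_utc(raw)

print("local:", local.date(), "ISO week", local.isocalendar()[1])
print("UTC:  ", utc.date(), "ISO week", utc.isocalendar()[1])
```

Whichever convention you pick, applying it at ingestion keeps every downstream cohort boundary consistent.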

Data Warehouse Architecture

For meaningful cohort analysis, leads must be stored in a queryable data warehouse – not just production databases. Modern options include:

- Snowflake, BigQuery, or Redshift for cloud-native data warehousing

- PostgreSQL or MySQL for simpler implementations

- Lead distribution platform analytics (boberdoo, LeadsPedia) for built-in reporting

The warehouse must support: time-series queries, source-based filtering, aggregation functions, and joins across generation/delivery/outcome tables.

ETL Pipelines

Automated pipelines should move data from source systems to the warehouse on regular schedules. Outcome data from buyers, which often arrives via reports or manual uploads, needs standardized ingestion processes.

Design ETL pipelines for eventual consistency rather than real-time updates. Cohort analysis does not require real-time data – daily or weekly refreshes suffice.

Reporting and Visualization

Cohort Tables

The classic cohort visualization displays cohorts as rows, time periods as columns, with metric values in cells. This format makes patterns immediately visible:

| Cohort | Week 1 | Week 2 | Week 4 | Week 8 |
|---|---|---|---|---|
| Jan W1 | 8% | 11% | 14% | 15% |
| Jan W2 | 7% | 10% | 13% | 14% |
| Jan W3 | 5% | 8% | 11% | 12% |
| Jan W4 | 4% | 6% | 9% | n/a |
| Feb W1 | 3% | 5% | n/a | n/a |

This table shows conversion rate by cohort at different maturity points. The pattern is immediately visible: later cohorts are converting at lower rates at equivalent ages. Something changed between early January and late January.
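A table like this can be built from lead-level records with a small amount of code. In the sketch below each record is a cohort label plus the number of weeks the lead took to convert (or `None`); this layout, the cohort ages, and the lead counts are hypothetical, chosen so that two rows reproduce values from the table above.

```python
from collections import defaultdict


def cohort_table(leads, checkpoints, cohort_age):
    """Cumulative conversion rate per cohort at each checkpoint (in weeks).

    leads: iterable of (cohort_label, weeks_to_convert or None)
    cohort_age: {cohort_label: current age in weeks}
    Cells the cohort is too young to fill are set to None.
    """
    by_cohort = defaultdict(list)
    for cohort, weeks_to_convert in leads:
        by_cohort[cohort].append(weeks_to_convert)

    table = {}
    for cohort, outcomes in by_cohort.items():
        row = {}
        for cp in checkpoints:
            if cohort_age[cohort] < cp:
                row[cp] = None  # not mature enough to measure yet
            else:
                hits = sum(1 for w in outcomes if w is not None and w <= cp)
                row[cp] = hits / len(outcomes)
        table[cohort] = row
    return table


# Hypothetical data: 100 leads per cohort.
leads = (
    [("Jan W1", 1)] * 8 + [("Jan W1", 2)] * 3 + [("Jan W1", 4)] * 3
    + [("Jan W1", 8)] * 1 + [("Jan W1", None)] * 85
    + [("Feb W1", 1)] * 3 + [("Feb W1", 2)] * 2 + [("Feb W1", None)] * 95
)
table = cohort_table(leads, [1, 2, 4, 8], {"Jan W1": 8, "Feb W1": 3})

for cohort, row in table.items():
    cells = ["n/a" if v is None else f"{v:.0%}" for v in row.values()]
    print(cohort, cells)
```

Leaving immature cells empty, rather than filling them with a partial number, keeps comparisons down each column like for like.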

Trend Charts

Line charts showing the same metric across cohorts at a fixed maturity point reveal trends clearly. Plot Week 8 conversion rate for each cohort on a timeline. Upward or downward slopes indicate improving or degrading quality.

Heatmaps

Color-coded heatmaps highlight high and low performance visually. Use traffic light colors (red/yellow/green) or gradient scales. This visualization works especially well for multi-source cohort comparisons.

Dashboard Integration

Embed cohort visualizations in operational dashboards, but at the right frequency. Cohort metrics change slowly – updating daily creates noise, not signal. Update short-term cohort metrics weekly and long-term metrics monthly.

Analyzing Cohort Data: What to Look For

Raw cohort data requires interpretation. The patterns that matter, the anomalies that demand investigation, and the trends that predict future performance all require trained analysis.

Pattern Recognition

Quality Degradation Curves

When plotting conversion rate (or any quality metric) by cohort over time, look for:

- Steady decline: Each successive cohort performs worse than prior cohorts at the same maturity

- Step-function decline: Performance drops suddenly between specific cohorts (indicating a discrete event like source change or algorithm shift)

- Cyclical patterns: Quality rises and falls in repeating patterns (indicating seasonal effects)

- Random variation: No discernible pattern (indicating noise rather than signal)

Each pattern has different implications. Steady decline suggests audience exhaustion or algorithmic drift – interventions might include new creative, new targeting, or source replacement. Step-function decline demands investigation of what changed between the cohorts. Cyclical patterns require seasonal adjustment in your expectations.

Cohort Maturation Curves

Track how quickly cohorts reach their ultimate conversion rate. A healthy cohort might show: Week 1 at 5%, Week 2 at 9%, Week 4 at 13%, Week 8 at 15%, with minimal further conversion after Week 8.

When maturation slows – the same progression taking 12 weeks instead of 8 – something in the buyer’s sales process or lead quality has changed. Slower maturation often precedes conversion rate declines.
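To compare maturation speed across cohorts, you can express each checkpoint as a share of the cohort's final conversion rate. The healthy curve below uses the figures from the example above; the slower curve and the 85% milestone are hypothetical choices for illustration.

```python
def maturation_curve(cumulative_rates):
    """Express each checkpoint as a share of the latest (final) rate.

    cumulative_rates: {week: cumulative conversion rate}
    """
    final = cumulative_rates[max(cumulative_rates)]
    if final == 0:
        return {week: 0.0 for week in cumulative_rates}
    return {week: rate / final for week, rate in cumulative_rates.items()}


def weeks_to_reach(cumulative_rates, share):
    """First checkpoint at which the cohort reached `share` of its final rate."""
    curve = maturation_curve(cumulative_rates)
    for week in sorted(curve):
        if curve[week] >= share:
            return week
    return None


healthy = {1: 0.05, 2: 0.09, 4: 0.13, 8: 0.15}
slower = {1: 0.03, 2: 0.06, 4: 0.09, 8: 0.12, 12: 0.15}  # hypothetical

print("healthy:", weeks_to_reach(healthy, 0.85), "weeks to 85% of final")
print("slower: ", weeks_to_reach(slower, 0.85), "weeks to 85% of final")
```

Both cohorts end at 15%, but the second takes three times as long to get most of the way there, which is the early signal described above.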

Source Comparison Patterns

Compare the same time-period cohorts across different sources. If the Week 12 cohort from Source A converts at 16% while the Week 12 cohort from Source B converts at 9%, and this pattern persists across multiple weeks, Source A delivers consistently higher quality.

More valuable: compare the trajectories. Source A might start at 18% and decline to 14%. Source B might start at 8% and improve to 11%. Which source would you rather scale? The answer depends on your confidence in the trajectory continuing.

Establishing Baselines and Thresholds

Historical Baselines

Before declaring a cohort underperforming, establish what “normal” looks like. Calculate the average and standard deviation of your key metrics across a baseline period (typically 90-180 days of cohorts).

A conversion rate of 11% is not good or bad in isolation. It is only meaningful relative to your historical baseline. If your baseline is 14% with a standard deviation of 2 percentage points, an 11% cohort is 1.5 standard deviations below average ((14 - 11) / 2 = 1.5) – worth investigating.

Alert Thresholds

Set automated alerts for cohort metrics that cross these thresholds:

- Contact rate below baseline minus 1.5 standard deviations

- Return rate above baseline plus 2 standard deviations

- Conversion rate below baseline minus 2 standard deviations at any maturity point

These thresholds catch problems earlier than aggregate metrics. A cohort underperforming at Week 2 provides warning before it drags down your monthly average at Week 8.
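The threshold rules above translate directly into a small check. In this sketch the rule table mirrors the list above, while the metric names, the baseline values, and the sample cohort are hypothetical.

```python
from statistics import mean, stdev

# (direction, number of standard deviations), mirroring the rules above.
ALERT_RULES = {
    "contact_rate": ("below", 1.5),
    "return_rate": ("above", 2.0),
    "conversion_rate": ("below", 2.0),
}


def baseline(history):
    """Mean and sample standard deviation of a metric across baseline cohorts."""
    return mean(history), stdev(history)


def check_alerts(cohort_metrics, baselines):
    """Return the metrics that breach their threshold.

    baselines: {metric: (mean, std_dev)}
    """
    alerts = []
    for metric, (direction, k) in ALERT_RULES.items():
        if metric not in cohort_metrics or metric not in baselines:
            continue
        mu, sigma = baselines[metric]
        value = cohort_metrics[metric]
        if direction == "below" and value < mu - k * sigma:
            alerts.append(metric)
        elif direction == "above" and value > mu + k * sigma:
            alerts.append(metric)
    return alerts


# Hypothetical baselines and one cohort to evaluate.
baselines = {
    "contact_rate": (0.60, 0.05),
    "return_rate": (0.08, 0.02),
    "conversion_rate": baseline([0.12, 0.14, 0.16]),
}
cohort = {"contact_rate": 0.50, "return_rate": 0.11, "conversion_rate": 0.11}

print(check_alerts(cohort, baselines))
```

In this example only the contact rate trips an alert. The 11% conversion rate sits 1.5 standard deviations below baseline, which merits a look but does not cross the two-standard-deviation rule.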

Control Charts

Statistical process control charts display cohort metrics with upper and lower control limits. Values within limits represent normal variation. Values outside limits require investigation.

Control charts work best for mature operations with stable historical performance. New operations with limited history may need simpler threshold approaches.
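One common form of control chart for rates is the p-chart, which sets limits a fixed number of standard errors around the historical rate and widens them for smaller cohorts. The article does not prescribe a specific chart type, so treat this as one possible sketch; the 14% center line, the three-sigma width, and the cohort sizes are assumptions.

```python
from math import sqrt


def p_chart_limits(center_rate, cohort_size, sigmas=3.0):
    """Lower and upper control limits for a rate observed on `cohort_size` leads."""
    spread = sigmas * sqrt(center_rate * (1 - center_rate) / cohort_size)
    return max(0.0, center_rate - spread), min(1.0, center_rate + spread)


def in_control(observed_rate, center_rate, cohort_size, sigmas=3.0):
    """True when the observed rate falls within the control limits."""
    lower, upper = p_chart_limits(center_rate, cohort_size, sigmas)
    return lower <= observed_rate <= upper


for size in (100, 400):
    lower, upper = p_chart_limits(0.14, size)
    print(f"{size} leads: {lower:.1%} to {upper:.1%}")

print(in_control(0.11, 0.14, 400))  # within limits for a 400-lead cohort
print(in_control(0.08, 0.14, 400))  # outside limits for a 400-lead cohort
```

Because the limits depend on cohort size, the same observed rate can be normal variation for a small cohort and a genuine signal for a large one.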

Distinguishing Signal from Noise

Sample Size Awareness

Always check sample sizes before interpreting cohort differences. A 50% relative difference based on 20 leads means little. A 15% relative difference based on 2,000 leads deserves attention.

Report confidence intervals alongside point estimates. “Conversion rate of 12% plus or minus 3%” communicates uncertainty better than “conversion rate of 12%.”
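There are several ways to compute such an interval. The sketch below uses the Wilson score interval, which behaves better than the simple normal approximation at small sample sizes; the choice of method and the sample counts are assumptions, not something the analysis requires.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(conversions, leads, confidence=0.95):
    """Wilson score interval for a conversion rate."""
    if leads <= 0:
        raise ValueError("leads must be positive")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = conversions / leads
    denom = 1 + z * z / leads
    center = (p + z * z / (2 * leads)) / denom
    half = z * sqrt(p * (1 - p) / leads + z * z / (4 * leads * leads)) / denom
    return center - half, center + half


# Hypothetical cohorts: 12% on 450 leads, and 15% on 20 leads.
for conversions, leads in ((54, 450), (3, 20)):
    low, high = wilson_interval(conversions, leads)
    print(f"{conversions}/{leads}: {low:.1%} to {high:.1%}")
```

A 12% rate on roughly 450 leads carries a margin of about three points either way. The Wilson interval is not exactly symmetric around the observed rate, so "plus or minus" is shorthand. The 20-lead cohort's interval is wide enough to make its point estimate nearly uninformative.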

Multiple Comparison Correction

When analyzing many cohorts simultaneously, some will appear unusually high or low by chance alone. If you examine 52 weekly cohorts, 2-3 will likely fall outside two-standard-deviation limits even with no actual quality change.

Apply Bonferroni correction or similar methods when scanning for anomalies. Alternatively, require persistence – act only when two or more consecutive cohorts show the same pattern.
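The arithmetic behind both points can be checked in a few lines. This sketch assumes cohort metrics are roughly normally distributed around the baseline and independent of each other, which is a simplification; the 5% family-wise error rate is a conventional choice, not a requirement.

```python
from statistics import NormalDist


def expected_false_alarms(n_cohorts, sigma_limit=2.0):
    """Expected number of cohorts outside +/- sigma_limit by chance alone."""
    tail_probability = 2 * (1 - NormalDist().cdf(sigma_limit))
    return n_cohorts * tail_probability


def bonferroni_sigma_limit(n_cohorts, family_alpha=0.05):
    """Two-sided sigma limit after splitting family_alpha across all cohorts."""
    per_test_alpha = family_alpha / n_cohorts
    return NormalDist().inv_cdf(1 - per_test_alpha / 2)


print(f"Expected chance outliers in 52 cohorts: {expected_false_alarms(52):.1f}")
print(f"Bonferroni-adjusted limit: {bonferroni_sigma_limit(52):.2f} sigma")
```

With 52 cohorts, the adjusted limit lands above three standard deviations, so a cohort has to be far more extreme before it counts as an anomaly on its own. The persistence rule is often the more practical option because it needs no adjustment.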

Regression to Mean

Extreme cohorts (very high or very low quality) tend to be followed by less extreme cohorts. An unusually good cohort does not mean you solved quality problems forever. An unusually bad cohort does not necessarily indicate permanent degradation.

Look for sustained patterns across multiple cohorts before concluding that something fundamental changed.

Cohort Analysis by Vertical: Specific Considerations

Different verticals have different conversion cycles, quality indicators, and analysis requirements. Calibrate your cohort approach to your specific market.

Insurance Leads

Insurance leads typically convert within 14-45 days for auto, 7-30 days for Medicare (during enrollment periods), and 30-90 days for life. Track cohorts at these intervals:

- 7-day: Contact rate, initial return rate

- 14-day: Conversion rate (auto), quote rate

- 30-day: Conversion rate (Medicare, life), final return rate

- 90-day: Retention rate for policies bound

Medicare cohorts require special handling during the Annual Enrollment Period (October 15 - December 7). AEP leads behave differently than off-season leads. Analyze these cohorts separately.

Insurance carrier feedback often includes policy size, premium amount, and retention data. Incorporate these into cohort analysis for true lifetime value assessment.

Mortgage Leads

Mortgage leads have extended conversion cycles – 30-120 days from lead to closed loan. Interest rate environments significantly affect cohort behavior.

Track cohorts by rate environment, not just calendar time. A cohort generated when rates were 6.5% will behave differently than a cohort generated at 7.5%. Segment accordingly.

Key metrics for mortgage cohorts:

- 7-day: Contact rate, preliminary qualification rate

- 30-day: Application rate

- 60-day: Approval rate

- 90-day: Close rate

- 120-day: Final close rate and loan amount

Rate lock timing affects when conversion finalizes. A lead that locks at day 45 may not close until day 90. Design measurement windows accordingly.

Solar Leads

Solar leads have some of the longest conversion cycles in consumer lead generation – 60-180 days from initial inquiry to installation completion. This requires patience in cohort analysis.

Solar cohorts should be segmented by geography (state incentive programs vary), property type (homeowner versus renter verification), and utility (different rate structures affect buyer appetite).

Measurement intervals for solar:

- 14-day: Contact rate, site assessment scheduled

- 30-day: Proposal delivered rate

- 60-day: Contract signed rate

- 90-day: Installation scheduled rate

- 180-day: Installation complete (final conversion)

Solar leads have high return rates (15-25% typical) due to property qualification issues. Return reason analysis is especially important for solar cohort evaluation.

Legal Leads

Legal leads vary dramatically by practice area. Personal injury leads may take 12-24 months to reach final disposition. Mass tort leads can take years. Bankruptcy and family law leads convert within 30-60 days.

For longer-cycle practice areas, track interim metrics:

- 7-day: Contact rate, initial consultation scheduled

- 30-day: Consultation completed, case evaluation status

- 90-day: Engagement decision (signed retainer or declined)

- 180-day: Case status (active, settled, pending)

- 12-24 months: Resolution and revenue (personal injury)

Legal cohort analysis often requires tracking beyond the typical lead lifecycle. Build systems that can maintain cohort integrity over multi-year periods.

Home Services

Home services leads (HVAC, roofing, plumbing, electrical) typically convert within 14-30 days. Speed matters more than extended analysis.

Home services cohort metrics:

- 24-48 hours: Contact rate, appointment scheduled

- 7-day: Appointment completed rate

- 14-day: Quote accepted, job scheduled

- 30-day: Job completed, revenue collected

Seasonality affects home services dramatically. HVAC demand spikes in summer and winter extremes. Roofing follows storm patterns. Compare cohorts to same-season prior year, not just prior month.

Common Cohort Analysis Mistakes and How to Avoid Them

Even sophisticated practitioners make predictable errors in cohort analysis. Avoiding these mistakes accelerates your path to actionable insights.

Mistake 1: Insufficient Maturation Time

Evaluating cohorts before they reach maturity leads to premature conclusions. A cohort at Week 2 might show 6% conversion while the same cohort at Week 8 shows 14%. Judging at Week 2 would miss more than half the ultimate conversion.

Solution: Define minimum maturation periods by vertical and metric. Do not compare immature cohorts to mature cohorts. Wait for sufficient data before acting.

Mistake 2: Ignoring Seasonality

Comparing a February cohort to an August cohort without seasonal adjustment produces misleading conclusions. Many verticals have strong seasonal patterns that affect lead quality.

Solution: Compare year-over-year cohorts (February 2025 vs. February 2024) in addition to sequential cohorts. Build seasonal adjustment factors into your baselines.

Mistake 3: Conflating Source Changes with Quality Changes

When you shift traffic mix – reducing one source while increasing another – aggregate cohort metrics can change even if individual source quality remains stable.

Solution: Analyze source-level cohorts separately from aggregate cohorts. Attribute changes to source mix shifts versus true quality changes.
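One way to make that attribution explicit is to split the change in the aggregate rate into a mix effect (shares moved between sources) and a rate effect (sources themselves changed). The sketch below assumes the same sources appear in both periods; the volumes are hypothetical, and the 16% and 9% rates are held constant to isolate the mix effect.

```python
def aggregate_rate(period):
    """Blended conversion rate for {source: (leads, conversions)}."""
    leads = sum(n for n, _ in period.values())
    conversions = sum(c for _, c in period.values())
    return conversions / leads


def decompose_change(before, after):
    """Split the change in aggregate rate into (mix_effect, rate_effect).

    Assumes both periods contain the same sources with non-zero volume.
    """
    total_before = sum(n for n, _ in before.values())
    total_after = sum(n for n, _ in after.values())
    mix_effect = 0.0
    rate_effect = 0.0
    for source in before:
        n0, c0 = before[source]
        n1, c1 = after[source]
        share0, share1 = n0 / total_before, n1 / total_after
        rate0, rate1 = c0 / n0, c1 / n1
        mix_effect += (share1 - share0) * rate0   # volume shifted between sources
        rate_effect += share1 * (rate1 - rate0)   # source quality itself changed
    return mix_effect, rate_effect


# Source A converts at 16% and Source B at 9% in both periods.
before = {"A": (700, 112), "B": (300, 27)}
after = {"A": (400, 64), "B": (600, 54)}

mix, rate = decompose_change(before, after)
print(f"Aggregate: {aggregate_rate(before):.1%} -> {aggregate_rate(after):.1%}")
print(f"Mix effect: {mix:+.1%}, rate effect: {rate:+.1%}")
```

The aggregate rate falls by about two points even though neither source got worse, which is exactly the case this mistake describes.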

Mistake 4: Acting on Noise

A single underperforming cohort does not necessarily indicate a problem. Random variation produces occasional outliers even in healthy operations.

Solution: Require persistence (two or more consecutive cohorts) or statistical significance before acting. Use control charts to distinguish signal from noise.

Mistake 5: Analysis Without Action

Cohort analysis is only valuable if it drives decisions. Many operations build sophisticated reporting but never actually change behavior based on findings.

Solution: Build action triggers into your analysis framework. When a source shows three consecutive cohorts with declining conversion, the trigger might be “reduce volume by 25% and investigate.” Tie analysis to decisions.
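A trigger like this is easy to encode. The sketch below reads "three consecutive cohorts with declining conversion" as three cohort-over-cohort declines ending at the most recent cohort, which is one interpretation; the rates are hypothetical and should all be measured at the same maturity point.

```python
def consecutive_declines(rates):
    """Number of cohort-over-cohort declines in a row, ending at the latest cohort.

    rates: conversion rates in chronological order, all at the same maturity.
    """
    run = 0
    for previous, current in zip(reversed(rates[:-1]), reversed(rates[1:])):
        if current < previous:
            run += 1
        else:
            break
    return run


def action_for(rates, trigger=3):
    """Map the decline streak to the example action from the text."""
    if consecutive_declines(rates) >= trigger:
        return "reduce volume by 25% and investigate"
    return "no action"


source_rates = [0.14, 0.15, 0.13, 0.12, 0.10]  # hypothetical source history
print(consecutive_declines(source_rates), action_for(source_rates))
```

Writing the rule down as code forces you to decide in advance what counts as a decline and what happens next, which is the discipline this mistake is about.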

Mistake 6: Neglecting Data Quality

Cohort analysis inherits all the problems in your underlying data. Missing timestamps, duplicate records, incorrect source attribution, and incomplete outcome data all compromise your conclusions.

Solution: Audit data quality regularly. Validate timestamp accuracy. Reconcile lead counts between systems. Fix data problems before investing heavily in analysis.

Building a Cohort Analysis Practice

Implementing cohort analysis is not a one-time project. It is an ongoing practice that requires discipline, resources, and organizational commitment.

Getting Started: The Minimum Viable Approach

For operations new to cohort analysis, start simple:

- Define weekly cohorts based on lead generation date

- Track three metrics: contact rate (7-day), return rate (14-day), conversion rate (30-day)

- Build a cohort table in spreadsheet format (cohorts as rows, weeks as columns)

- Update weekly with new data

- Compare each cohort to the three prior cohorts at equivalent maturity

This minimal approach takes 1-2 hours per week and provides immediate value. Expand scope as you develop capability.
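If you prefer to script the table rather than maintain it by hand, the steps above can be sketched as follows. The input format (a list of `(created, converted_on)` date pairs) and the function names are assumptions; the sample leads are invented.

```python
from collections import defaultdict
from datetime import date, timedelta

def week_start(d):
    """Monday of the week containing d; used as the cohort label."""
    return d - timedelta(days=d.weekday())

def cohort_table(leads, max_weeks=4):
    """Rows are cohort weeks; columns are cumulative conversion rate by the end of each week of age."""
    sizes = defaultdict(int)
    converted = defaultdict(lambda: [0] * max_weeks)
    for created, converted_on in leads:
        cohort = week_start(created)
        sizes[cohort] += 1
        if converted_on is not None:
            age_weeks = (converted_on - created).days // 7
            # A conversion counts in its own week column and every later one.
            for week in range(age_weeks, max_weeks):
                converted[cohort][week] += 1
    return {c: [n / sizes[c] for n in converted[c]] for c in sorted(sizes)}

# Hypothetical leads: (generation date, conversion date or None).
leads = [
    (date(2025, 2, 3), date(2025, 2, 5)),
    (date(2025, 2, 4), date(2025, 2, 20)),
    (date(2025, 2, 6), None),
    (date(2025, 2, 7), None),
    (date(2025, 2, 10), date(2025, 2, 12)),
    (date(2025, 2, 11), None),
]

table = cohort_table(leads)
```

This sketch does not blank out cells for cohorts that have not yet reached a given age. In practice, leave those cells empty so an immature cohort is never compared against a mature one.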

Scaling the Practice

As your practice matures:

- Add source-level cohort segmentation

- Extend metrics to include revenue and lifetime value

- Implement automated data pipelines

- Build dashboard visualizations

- Create alert systems for threshold breaches

- Develop predictive models based on early cohort signals

Organizational Integration

Cohort analysis findings must reach decision-makers. Build regular review cadences:

- Weekly: Operations review of current cohort performance versus baseline

- Monthly: Source-level cohort analysis with traffic team

- Quarterly: Strategic review of cohort trends with leadership

Document findings and actions. Create a cohort analysis log that tracks: what you found, what you concluded, what action you took, and what result you observed. This institutional memory improves analysis quality over time.

Resource Requirements

Realistic resource allocation for cohort analysis:

- Analyst time: 4-8 hours per week for data preparation, analysis, and reporting

- Technology: Data warehouse capability, visualization tools, automation infrastructure

- Data access: Outcome data from buyers, financial data from accounting

Operations spending less than 2-3% of marketing budget on analytics typically under-invest in measurement capability. Cohort analysis should be part of that investment.

Frequently Asked Questions

What is cohort analysis for lead generation?

Cohort analysis for lead generation is a method of grouping leads by common characteristics – typically the time period when they were generated – and tracking their performance over time. Instead of measuring all leads in aggregate, you segment them into cohorts and compare how each cohort performs at equivalent points in their lifecycle. This approach reveals quality trends, source degradation, and seasonal patterns that aggregate metrics obscure.

How do you calculate lead quality over time?

Calculate lead quality over time by creating cohorts (typically weekly or monthly based on generation date), then measuring key quality indicators at consistent intervals. Track contact rate at 7 days, conversion rate at 30 and 60 days, return rate at 14 and 30 days, and revenue per lead at 60 and 90 days. Compare each cohort’s metrics at the same maturity point – for example, Week 12 cohort at 60 days versus Week 8 cohort at 60 days – to identify trends.

What metrics should I track in lead cohort analysis?

The essential metrics for lead cohort analysis are: contact rate (percentage of leads successfully reached), conversion rate (percentage of leads becoming customers), return rate (percentage of leads returned by buyers for quality issues), and revenue per lead (actual revenue generated). Secondary metrics include speed to sale, cost per acquisition, lifetime value, and return reason distribution. Track each metric at consistent intervals aligned with your vertical’s conversion cycle.

How often should I review lead cohort data?

Review cohort data at three frequencies: weekly for operational monitoring of short-term metrics like contact rate and early returns, monthly for conversion and revenue analysis with sufficient maturation, and quarterly for strategic assessment of long-term trends and source performance. Daily reviews rarely add value because cohort metrics change slowly – leads need time to mature before their cohort performance becomes meaningful.

What cohort size is needed for statistical significance?

For reliable cohort analysis, aim for 200-500 leads per cohort minimum when analyzing conversion rates. Toward the upper end of that range, the sample size allows detection of meaningful differences (5+ percentage point changes) with statistical confidence. For return rate analysis, smaller cohorts of 50-100 leads can provide useful early signals, but estimates at that size are noisy, so confirm them across consecutive cohorts before acting. When cohort volume is insufficient, extend the cohort window (from weekly to bi-weekly) or combine similar sources to build adequate sample sizes.
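To see why cohort size matters, here is a sketch of a standard two-proportion z-test using only the Python standard library. The cohort counts are invented, and the test is one common choice for comparing two conversion rates, not the only valid one.

```python
from math import erf, sqrt

def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates (pooled standard error)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal CDF.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value

# Hypothetical cohorts with similar gaps in conversion rate at two sizes.
z_small, p_small = two_proportion_z(7, 50, 4, 50)      # 14% vs. 8%, 50 leads each
z_large, p_large = two_proportion_z(70, 500, 45, 500)  # 14% vs. 9%, 500 leads each
```

With 50 leads per cohort the gap is indistinguishable from chance (p well above 0.05). With 500 leads per cohort a five-point gap clears the conventional 0.05 threshold.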

How do I identify degrading lead sources using cohort analysis?

Identify degrading sources by tracking conversion rate and revenue per lead for each source’s cohorts over time. Create a trend chart showing, for example, Facebook cohort conversion rates from Week 1 through Week 12, all measured at 60-day maturity. A downward slope indicates degradation. Compare this trend to other sources – if Source A declines while Sources B and C remain stable, the problem is source-specific rather than market-wide.

What causes lead quality to decline over time?

Lead quality typically declines due to audience exhaustion (the best prospects in a source have already been captured), algorithm drift (advertising platforms progressively expand to less qualified audiences), creative fatigue (target audiences develop ad blindness), competitive pressure (more advertisers competing for the same prospects), and fraud escalation (bad actors scale successful schemes). Cohort analysis reveals these patterns by showing progressive quality decline across sequential cohorts from the same source.

How do I set baselines and thresholds for cohort analysis?

Establish baselines by calculating the average and standard deviation of your key metrics across a stable historical period – typically 90-180 days of cohorts. Set alert thresholds at 1.5-2 standard deviations from the mean. For example, if your historical conversion rate averages 14% with 2% standard deviation, set an alert threshold at 10% (two standard deviations below mean). Review and adjust baselines quarterly to account for market changes.
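The baseline calculation is short enough to sketch directly. The three-value history below is deliberately tiny so the arithmetic matches the 14% mean and 2% standard deviation in the example; a real baseline would use 90-180 days of cohorts. Function names and the "warn" tier are assumptions.

```python
from statistics import mean, stdev

def thresholds(history, warn_sigmas=1.5, alert_sigmas=2.0):
    """Warning and alert thresholds below the historical mean."""
    center, spread = mean(history), stdev(history)
    return center - warn_sigmas * spread, center - alert_sigmas * spread

def classify(rate, warn, alert):
    """Label a cohort's rate against the two thresholds."""
    if rate < alert:
        return "alert"
    if rate < warn:
        return "warn"
    return "ok"

# Illustrative history: mean 14%, sample standard deviation 2%.
history = [0.12, 0.14, 0.16]
warn, alert = thresholds(history)  # roughly 11% and 10%

statuses = [classify(rate, warn, alert) for rate in (0.13, 0.105, 0.09)]
```

The three sample cohorts classify as "ok", "warn", and "alert" respectively.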

Should I use cohort analysis for A/B testing landing pages?

Cohort analysis is essential for landing page A/B testing because form conversion rate does not capture downstream quality. A landing page variant might increase form completions by 20% while decreasing buyer conversion rate by 15%. Customer volume stays roughly flat (1.20 × 0.85 ≈ 1.02) while each lead you sell converts 15% less often for the buyer – a quality loss that form metrics never show. Track cohorts from each variant through the full conversion cycle. Only declare winners after cohorts reach maturity (typically 60-90 days), comparing not just form conversion but contact rate, return rate, and buyer conversion.

How does cohort analysis differ from standard lead tracking?

Standard lead tracking focuses on aggregate metrics – total conversion rate, overall CPL, monthly revenue. Cohort analysis segments by time period and tracks each segment through its lifecycle. This reveals trends invisible in aggregates. Your overall conversion rate might appear stable at 12% while individual cohorts show decline from 15% to 9% over six months – the aggregate masks the problem because older, higher-quality cohorts offset newer, lower-quality cohorts until those older cohorts mature out of the calculation.
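The masking effect is easy to reproduce with invented numbers. The sketch assumes six equal-sized monthly cohorts, each measured at the same maturity, declining steadily from 15% to 9%.

```python
# Hypothetical monthly cohorts, oldest first: (leads, conversions at equal maturity).
cohorts = [(1000, 150), (1000, 138), (1000, 126), (1000, 114), (1000, 102), (1000, 90)]

# Aggregate view: one pooled rate across the whole six-month window.
aggregate = sum(conv for _, conv in cohorts) / sum(n for n, _ in cohorts)

# Cohort view: one rate per cohort.
per_cohort = [conv / n for n, conv in cohorts]

oldest, newest = per_cohort[0], per_cohort[-1]
```

The pooled rate comes out at 12%, which looks healthy, while the newest cohort is converting at 9% and the trend is still heading down.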

Key Takeaways

- Aggregate metrics hide quality degradation. A stable overall conversion rate can mask severe cohort-level decline. By the time aggregate metrics reflect the problem, you have already purchased weeks or months of poor-quality leads.

- Track cohorts at consistent maturity intervals. Compare Week 12 cohort at 60 days to Week 8 cohort at 60 days – not Week 8 at 90 days. Inconsistent comparison windows produce misleading conclusions.

- Start with three core metrics. Contact rate at 7 days, return rate at 14 days, and conversion rate at 30-60 days (depending on vertical) provide the foundation for quality monitoring. Add revenue and lifetime value metrics as your practice matures.

- Wait for statistical significance. Small cohorts with few leads do not support reliable conclusions. Require 200+ leads per cohort for conversion analysis and two or more consecutive cohorts showing the same pattern before acting.

- Segment by source, not just time. Source-level cohort analysis identifies which traffic channels maintain quality and which degrade. A declining source hidden in aggregate numbers can contaminate your entire operation.

- Build action triggers, not just reports. Cohort analysis without action is academic. Define specific decisions tied to specific thresholds: if three consecutive cohorts show conversion below baseline minus two standard deviations, reduce source volume and investigate.

- Match measurement windows to your vertical’s conversion cycle. Insurance cohorts need 30-60 day maturity. Solar cohorts need 90-180 days. Legal cohorts for personal injury may need 12-24 months. Evaluating before maturity produces premature conclusions.

- Data quality determines analysis quality. Cohort analysis inherits every flaw in your underlying data – inaccurate timestamps, missing source attribution, incomplete outcome data. Audit and fix data quality before investing heavily in cohort analytics.

Those who build sustainable lead businesses share one trait: they measure what matters, not what is easy to measure. Cohort analysis takes more work than aggregate reporting. It requires discipline, patience, and infrastructure. But it reveals the truth that aggregate metrics hide – and the truth, however inconvenient, is always more valuable than comfortable delusion.