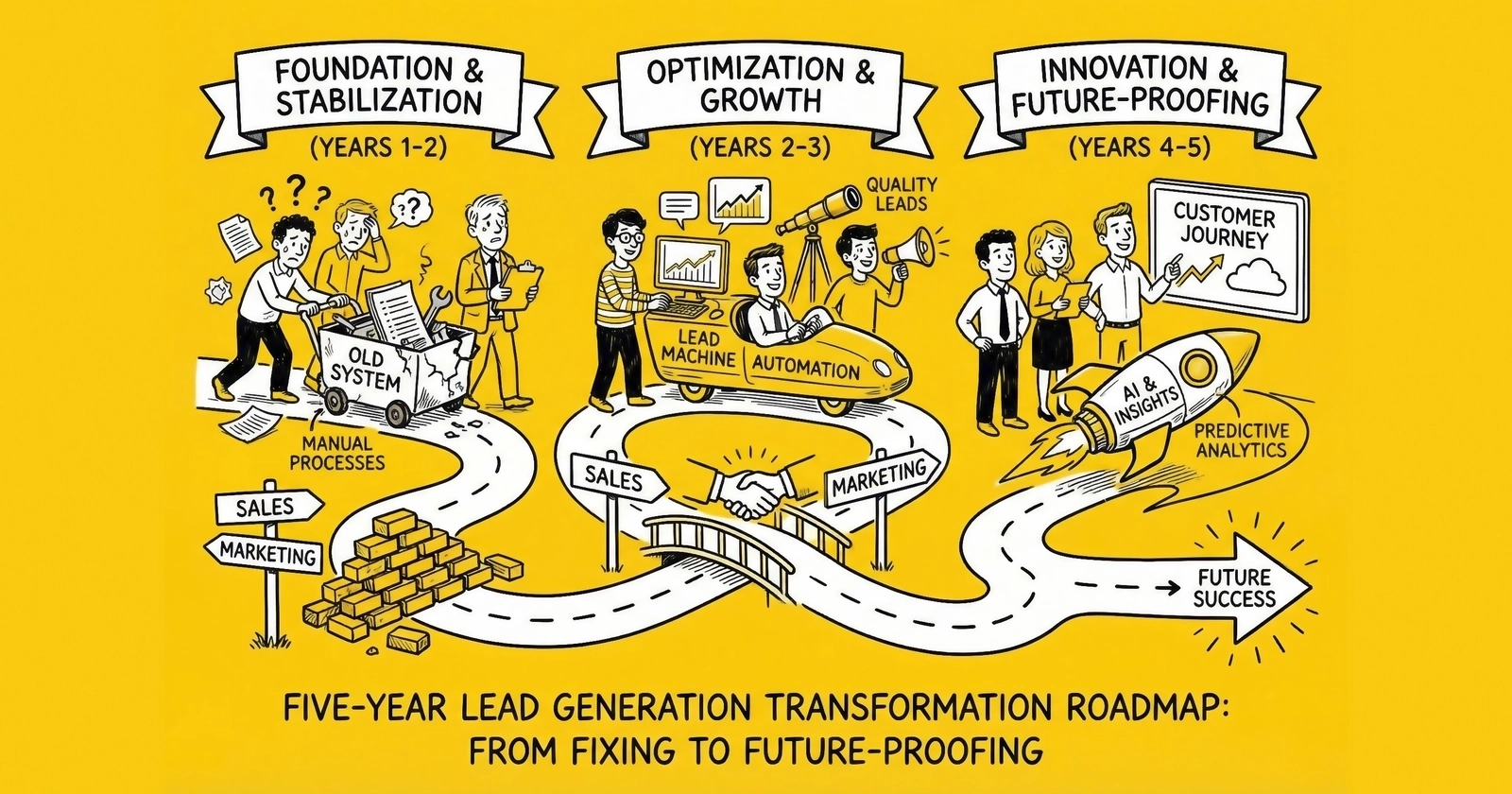

The infrastructure you spent years mastering is being rebuilt from the foundation. Not optimized. Rebuilt. Here’s the phased approach that separates the operators who will thrive from those who will wonder where their business went.

The lead generation industry is entering its most significant transformation since the shift from yellow pages to digital. Third-party cookies are disappearing. AI agents are becoming autonomous buyers. Privacy regulation is proliferating. Those who recognize this transformation and prepare methodically will capture disproportionate value while competitors scramble to catch up.

The transformation will not happen all at once. That is both the good news and the challenge.

The good news: You do not need to rebuild everything simultaneously. Those who survive do it methodically – foundation first, capabilities second, differentiation third. Trying to leap to AI agents without the data infrastructure is like building the penthouse before pouring the foundation.

The challenge: Phased transformation requires discipline when markets reward urgency. The temptation to skip steps is constant. Those who rush past foundational work end up rebuilding later at higher cost.

The stakes are substantial. The global lead generation software market is projected to expand from $5.11 billion in 2024 to $12.37 billion by 2033 – a compound annual growth rate of 10.32%. The broader lead generation services market presents even more impressive figures, with projections ranging up to $32.1 billion by 2035. McKinsey projects agentic commerce – where AI agents act as autonomous buyers – could reach $3-5 trillion globally by 2030. This is not incremental growth. This is the creation of an entirely new commercial architecture.
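As a quick sanity check on the growth figure quoted above, the compound annual growth rate can be recomputed from the two endpoints. This is a minimal sketch; the function name `cagr` is illustrative, and the nine-year span assumes the 2024 and 2033 values are both year-end figures.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $5.11B in 2024 growing to $12.37B by 2033.
rate = cagr(5.11, 12.37, 2033 - 2024)
print(f"Implied CAGR: {rate:.2%}")
```

The result lands at roughly 10.3%, which agrees with the quoted rate.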

This roadmap provides the transformation framework – three phases spanning 2025 to 2030. Each phase builds on the previous, creating compounding capability that positions you for the agentic commerce future while generating returns along the way.

Understanding the Transformation Forces

Before diving into the roadmap, you need to understand what is driving this transformation. Three simultaneous forces are dismantling the infrastructure that made modern lead generation possible.

The End of Third-Party Surveillance Infrastructure

For twenty years, third-party cookies enabled the cross-site tracking that powered behavioral targeting, retargeting, and attribution. That capability is collapsing – not because of a single event, but because the entire ecosystem has turned against it.

Safari and Firefox blocked third-party cookies years ago. Chrome, which controls roughly 67% of global browser share as of 2025, has repeatedly delayed full deprecation, but the trajectory is clear. In April 2025, Google announced that it would keep third-party cookies enabled by default with user toggle options rather than forcing deprecation. Even so, the UK Competition and Markets Authority’s June 2025 report revealed an uncomfortable truth about the proposed replacements: per-impression publisher revenue runs approximately 30% lower under Privacy Sandbox alternatives than with traditional cookies.

The practical reality is that ad blockers and browser restrictions now block up to 30% of client-side tracking data, regardless of cookie policy. When a decision-maker visits your pricing page but their browser blocks the tracking pixel, your retargeting loop breaks and your attribution model fails. Server-side tracking addresses this signal loss, recovering 20-40% of previously invisible conversions.

AI Content Saturation and Channel Dilution

Generative AI has democratized content creation at scale, and the consequences are profound. Every channel you rely on for lead generation is being flooded with machine-generated content. By 2027, Gartner projects that AI will handle 30% of traditional marketing tasks, including SEO, content optimization, customer data analysis, segmentation, lead scoring, and hyper-personalization.

The saturation is measurable. When every company can generate unlimited “thought leadership,” the concept loses meaning. When every email is “personalized,” personalization becomes background static. The result is fundamental degradation of the channels themselves.

The Shift to Agentic Commerce

In September 2025, OpenAI launched instant checkout capabilities in ChatGPT, starting with Etsy integrations for U.S. users. Shopify support and further expansions followed, including grocery giant Instacart adding full end-to-end shopping and Instant Checkout by December. More than one million merchants are expected to follow.

ChatGPT now has more than 800 million weekly users. Adobe data shows AI-driven traffic to U.S. retail sites surged 4,700% year-over-year in July 2025. When an AI agent conducts research on behalf of a buyer, traditional lead capture mechanisms – forms, gated content, nurture sequences – become irrelevant. The agent evaluates options programmatically, comparing structured data, cross-referencing reviews, and making recommendations without ever “converting” in the traditional sense.

Phase 1: The Data Foundation (2025-2027)

The first phase establishes the infrastructure that everything else depends on. Without clean data, accurate tracking, and documented consent, the AI and automation investments in later phases produce garbage outputs from garbage inputs. The industry leaders of 2030 will be those who invested in data foundations while competitors chased AI buzzwords.

This phase is not glamorous. Data warehouses, server-side tracking, and consent management do not generate LinkedIn posts about innovation. But they generate the reliable data flows that make innovation possible. Treat this phase as the essential groundwork that enables future differentiation.

Priority Investment 1: Establish Data Sovereignty

Your organization’s data is currently scattered across platforms – CRM, marketing automation, ad platforms, analytics tools, lead distribution systems. Each platform has its own data model, its own definitions, its own retention policies. “Lead” means something different in your MAP than in your CRM. Conversion metrics do not reconcile between Google and your internal systems.

This fragmentation becomes fatal as AI enters the picture. AI systems trained on inconsistent data produce inconsistent outputs. Models that predict conversion based on marketing automation data will not align with models trained on CRM data.

The solution: establish a data warehouse (Snowflake, BigQuery, Databricks, or similar) as the Single Source of Truth. All systems feed data into the warehouse. All analysis draws from the warehouse. When marketing and sales disagree about pipeline metrics, the warehouse resolves the dispute.

Implementation priorities include:

Data model design: Before loading data, define your data model. What is a “lead” in your organization? What stages does it pass through? How do you define conversion? These definitions must be consistent across all source systems.

ETL/ELT pipeline construction: Build automated pipelines that extract data from source systems, transform it to match your unified data model, and load it into the warehouse. Modern tools like Fivetran, Airbyte, or dbt simplify this, but the work is not trivial.

Identity resolution: Prospects interact through multiple channels before converting. The person who clicked your ad, visited your site anonymously, then filled out a form might appear as three different records. Identity resolution stitches these together, creating unified views that enable accurate attribution.

Data quality monitoring: Establish alerts for data quality issues – missing fields, impossible values, failed pipeline runs. Data quality degrades without active maintenance.
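The identity resolution step described above can be sketched as a grouping problem: any two records that share an identifier belong to the same person. The example below is a minimal illustration using a union-find structure. The record shape and the identifier keys (`email`, `phone`, `cookie_id`) are assumptions for illustration, and production systems typically add fuzzy matching and confidence scoring on top of this kind of deterministic matching.

```python
from collections import defaultdict


def resolve_identities(records):
    """Group record ids that share any identifier value (union-find)."""
    parent = {r["id"]: r["id"] for r in records}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_seen = {}  # (identifier key, normalized value) -> first record id
    for r in records:
        for key in ("email", "phone", "cookie_id"):  # hypothetical identifier keys
            value = r.get(key)
            if not value:
                continue
            token = (key, value.strip().lower())
            if token in first_seen:
                union(first_seen[token], r["id"])
            else:
                first_seen[token] = r["id"]

    groups = defaultdict(list)
    for r in records:
        groups[find(r["id"])].append(r["id"])
    return sorted(sorted(group) for group in groups.values())


records = [
    {"id": "ad_click_1", "cookie_id": "c-123"},
    {"id": "site_visit_7", "cookie_id": "c-123", "email": None},
    {"id": "form_fill_3", "cookie_id": "c-123", "email": "Pat@Example.com"},
    {"id": "crm_contact_9", "email": "pat@example.com"},
    {"id": "form_fill_4", "email": "sam@example.com"},
]
print(resolve_identities(records))
```

Here the ad click, anonymous visit, form fill, and CRM contact collapse into one profile because they are linked through a shared cookie and a shared (case-normalized) email, while the unrelated form fill stays separate.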

The timeline for warehouse implementation varies by organizational scale:

| Organization Size | Timeline | Complexity Factors |
|---|---|---|
| Small/MVP | 2-4 months | Single source systems, simple data models |
| Mid-size enterprise | 3-6 months | Multiple sources, moderate identity resolution |
| Large enterprise | 6-12 months | Complex integrations, full identity resolution |
| Multi-source complex | 9-18+ months | Legacy systems, regulatory requirements, global data |

Most lead generation businesses fall in the 3-6 month range for basic infrastructure, extending to 12-18 months for comprehensive coverage with mature identity resolution.

Priority Investment 2: Hire Revenue Data Architect

Data infrastructure at this scale requires dedicated expertise. The Revenue Data Architect role sits between technical data engineering and commercial operations – understanding both database architecture and revenue process well enough to design systems that serve business needs.

Key responsibilities include data dictionary ownership (ensuring “MQL” means the same thing across all reports), pipeline architecture (balancing current needs with future AI scalability), cross-functional diplomacy (translating between technical and commercial teams), and data product management (treating internal data as a product).

Compensation typically ranges $150,000-250,000+ depending on market and experience.

Priority Investment 3: Migrate to Server-Side Tracking

Client-side tracking is failing. Ad blockers, browser privacy features, and intelligent tracking prevention now block 30%+ of tracking pixels. Every blocked pixel is a conversion you cannot measure, an optimization signal you lose, a dollar spent without attribution.

Server-side tracking recovers 20-40% of this lost signal by routing data through your own servers before forwarding to ad platforms. Current industry adoption sits at 20-25% of SMBs, with projections showing 70% adoption by 2027 across data-driven organizations.

The documented improvements are substantial:

| Implementation Type | Signal Improvement |
|---|---|
| General SST implementation | 20-40% more conversions |
| Ad blocker bypass (custom loader) | Up to 40% more accurate data |
| Meta Conversions API optimization | 22% more purchases recorded |
| Meta-specific performance lift | Up to 38% improvement |
| Google Enhanced Conversions (Search) | +5% average |
| Google Enhanced Conversions (YouTube) | +17% average |

Meta Event Match Quality (EMQ) benchmarks, scored on a 10-point scale, define success thresholds: 0-4 is poor, 5-6 is below optimal, 6+ is the acceptable minimum, and 8+ is the target for optimal performance. Organizations moving from pixel-only tracking (40-60% match rate) to an optimized Conversions API setup (80-90%+ match rate) see corresponding EMQ improvements from 4-6/10 to 8-9/10.

Implementation priorities include:

Infrastructure setup: Deploy server-side containers (Google Tag Manager Server-Side, or custom implementations) on your own infrastructure or cloud platforms.

Platform integration: Configure server-side connections to major ad platforms – Meta Conversions API, Google Enhanced Conversions, TikTok Events API, and others relevant to your traffic mix.

Data enrichment: Use the server-side layer to enrich events with first-party data before forwarding. A conversion event that includes customer value, product category, or lead quality enables platform optimization unavailable from basic pixel data.

Validation and testing: Verify that server-side events match client-side events, monitor match quality scores, and continuously optimize data completeness.
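The enrichment step above can be sketched as a small function that runs on your own server before an event is forwarded. This is an illustration only: the payload field names (`hashed_email`, `custom_data`, `lead_quality`) are hypothetical, and each ad platform defines its own schema and normalization rules, so check the platform documentation before mapping fields. The sketch builds the payload and makes no network calls.

```python
import hashlib
import time


def hash_identifier(value: str) -> str:
    """Trim and lowercase an identifier, then SHA-256 hash it."""
    return hashlib.sha256(value.strip().lower().encode("utf-8")).hexdigest()


def build_server_event(event_name, email, lead, event_time=None):
    """Assemble an enriched conversion event (hypothetical field names)."""
    return {
        "event_name": event_name,
        "event_time": int(event_time if event_time is not None else time.time()),
        # Only the hashed identifier is forwarded, never the raw email.
        "user_data": {"hashed_email": hash_identifier(email)},
        # First-party enrichment that a browser pixel would not carry.
        "custom_data": {
            "value": lead["expected_value"],
            "currency": lead["currency"],
            "lead_quality": lead["quality_tier"],
        },
    }


event = build_server_event(
    "lead_submitted",
    " Pat@Example.com ",
    {"expected_value": 45.0, "currency": "USD", "quality_tier": "A"},
    event_time=1_700_000_000,
)
print(event["custom_data"])
```

Normalizing before hashing matters because the receiving platform can only match a hash if both sides applied the same trimming and casing.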

Timeline typically runs 2-4 months for initial implementation, with ongoing optimization extending indefinitely.

Priority Investment 4: Launch Data Clean Room Pilots

Data clean rooms enable collaboration with partners – matching customer lists, measuring campaign overlap, identifying shared opportunities – without exposing raw data. As privacy regulation tightens and third-party data becomes less reliable, clean rooms become essential infrastructure for partnership-driven lead generation.

The adoption curve has accelerated faster than many realize. Forrester’s Q4 2024 B2C Marketing CMO Pulse Survey found 90% of B2C marketers now use clean rooms for marketing use cases. The 2025 State of Retail Media Report shows 66% adoption in retail media networks specifically. IDC predicts 60% of enterprises will collaborate through clean rooms by 2028.

The investment reality exceeds vendor marketing. Average company investment runs $879,000 according to Funnel.io research, with 62% of users spending $200,000+ and 23% at $500,000+. Enterprise implementations can reach $10 million. Timeline expectations require calibration: vendors promise 8-12 weeks to basic production, but the reality is closer to 6 months, with 12 months for full activation capabilities, and 30% of organizations take up to 2 years to see meaningful results.

Phase 1 pilot priorities include:

- Provider selection: Evaluate clean room providers (Snowflake, AWS Clean Rooms, InfoSum, LiveRamp) based on your existing infrastructure and partner ecosystem.

- Partner identification: Identify 2-3 partners for initial pilots – companies with complementary customer bases where collaboration creates mutual value.

- Use case definition: Start with simple use cases: audience overlap analysis, suppression, or measurement.

- Governance framework: Establish policies governing clean room participation – what data can be shared, for what purposes, with what retention limits.
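To make the simplest pilot use case concrete, the sketch below computes an audience overlap from hashed identifiers and reports only aggregates. It is a conceptual illustration, not how any particular clean room provider works: real platforms run the match inside a governed environment with their own privacy controls. The shared salt and the `min_reportable` threshold are assumptions standing in for whatever your governance framework specifies.

```python
import hashlib


def hash_emails(emails, shared_salt):
    """Normalize and hash emails so raw addresses are never compared directly."""
    return {
        hashlib.sha256((shared_salt + e.strip().lower()).encode("utf-8")).hexdigest()
        for e in emails
    }


def overlap_report(ours, theirs, min_reportable=3):
    """Return aggregate overlap only; suppress counts below a minimum threshold."""
    shared = len(ours & theirs)
    if shared < min_reportable:
        return {"overlap": None, "note": "below reporting threshold"}
    return {
        "overlap": shared,
        "share_of_ours": shared / len(ours),
        "share_of_theirs": shared / len(theirs),
    }


salt = "pilot-2025"  # hypothetical value agreed with the partner
ours = hash_emails(["a@x.com", "b@x.com", "c@x.com", "d@x.com", "e@x.com"], salt)
theirs = hash_emails(["B@x.com", "c@x.com ", "d@x.com", "z@x.com"], salt)
print(overlap_report(ours, theirs))
```

The suppression threshold reflects a common governance choice: very small overlaps can identify individuals, so they are withheld rather than reported.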

Pilot timelines typically span 3-6 months from partner agreement to initial insights. The goal in Phase 1 is not comprehensive clean room deployment – it is learning the capabilities and limitations so Phase 2 can scale appropriately.

Priority Investment 5: Implement First-Party Data Strategy

Third-party data is degrading. First-party data – information you collect directly from prospects and customers – becomes the most reliable foundation for targeting, personalization, and AI training.

Strategy priorities include value exchange design (offer value in return for data sharing), progressive profiling (collect essential information first, additional data through subsequent interactions), zero-party data capture (information prospects explicitly share), and data activation infrastructure (connect your warehouse to activation platforms).

Phase 1 Budget Allocation

Phase 1 investment should emphasize infrastructure over experimentation:

| Investment Area | % of Tech Budget | Rationale |
|---|---|---|
| Data infrastructure | 30% | Foundation for everything else; warehouse, pipelines, quality |
| Server-side tracking | 20% | Signal recovery provides immediate ROI |
| Compliance technology | 25% | Consent management, documentation, verification |
| Analytics/BI | 15% | Reporting and visualization on new infrastructure |
| Testing/emerging | 10% | Reserved for evaluating Phase 2 technologies |

This allocation deliberately under-invests in AI and automation relative to hype cycles. The 10% testing budget allows exploration without committing significant resources before the foundation is ready.

Allocate 15-20% of Phase 1 budget as contingency. Data infrastructure projects frequently encounter scope expansion as hidden data quality issues surface.

Phase 1 Key Metrics

Data Quality Score (Target: 85%+): Develop a composite score measuring data health across dimensions including completeness, accuracy, timeliness, and consistency.

SST Coverage Percentage (Target: 80%+): Measure the proportion of conversions captured through server-side tracking versus client-side only.

First-Party Data Percentage (Target: 60%+): Track the proportion of targeting and personalization powered by first-party versus third-party data.

Consent Capture Rate (Target: 95%+): Measure compliance infrastructure effectiveness including opt-in rates, verification coverage, and consent completeness.
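One way to track the four Phase 1 metrics together is a small scorecard that compares observed values against the targets listed above. The sketch below assumes the Data Quality Score is an equally weighted average of its dimensions, which is only one possible definition; the observed numbers are invented sample values.

```python
def data_quality_score(dimensions, weights=None):
    """Weighted average of dimension scores (each 0..1); equal weights by default."""
    weights = weights or {name: 1.0 for name in dimensions}
    total_weight = sum(weights[name] for name in dimensions)
    return sum(dimensions[name] * weights[name] for name in dimensions) / total_weight


def coverage(part, whole):
    """Simple proportion, guarding against an empty denominator."""
    return part / whole if whole else 0.0


# Targets taken from the Phase 1 metric definitions above.
TARGETS = {
    "data_quality": 0.85,
    "sst_coverage": 0.80,
    "first_party_share": 0.60,
    "consent_capture": 0.95,
}

# Sample observations (illustrative values only).
observed = {
    "data_quality": data_quality_score(
        {"completeness": 0.92, "accuracy": 0.88, "timeliness": 0.80, "consistency": 0.84}
    ),
    "sst_coverage": coverage(820, 1000),       # conversions seen server-side / all conversions
    "first_party_share": coverage(540, 1000),  # first-party-powered audiences / all audiences
    "consent_capture": coverage(970, 1000),    # leads with documented consent / all leads
}

report = {name: observed[name] >= TARGETS[name] for name in TARGETS}
for name, met in report.items():
    print(f"{name}: {observed[name]:.0%} (target {TARGETS[name]:.0%}) {'OK' if met else 'BELOW'}")
```

In this sample, three metrics clear their targets and the first-party share does not, which is the kind of gap the scorecard is meant to surface before Phase 2 planning begins.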

Phase 2: The Cognitive Layer (2027-2028)

With the data foundation established, Phase 2 adds intelligence. AI augmentation, real-time coaching, ecosystem orchestration – the capabilities that transform data into competitive advantage. This phase builds on Phase 1 infrastructure; attempting it without the foundation produces AI systems that hallucinate, coaches that mislead, and partners who cannot trust your data quality.

Phase 2 also introduces organizational change. Moving from “leads” to “buying groups” is not just a technology shift – it requires new metrics, new incentives, and new ways of working.

Priority Investment 1: AI Augmentation for Lead Scoring

Traditional lead scoring assigns static points based on demographic and firmographic attributes. A CEO gets more points than a manager. A company with 500 employees scores higher than one with 50. This approach fails to capture the behavioral signals that actually predict conversion.

AI-augmented lead scoring analyzes patterns across thousands of conversions to identify the signals that matter. The model might discover that time spent on pricing pages predicts conversion better than job title, or that prospects who return within 48 hours convert at 3x the rate of those who do not.

Implementation priorities include:

Model training: Use your Phase 1 data warehouse to train models on historical conversion data. The quality of your training data directly determines model quality.

Feature engineering: Identify the signals available for scoring – engagement patterns, content consumption, timing, source characteristics – and make them available to models in real-time.

Score integration: Connect model outputs to lead routing and sales prioritization.

Continuous learning: Establish feedback loops that update models as new conversion data arrives.

The target outcome: prediction accuracy that materially exceeds rule-based scoring, with conversion rates on high-scored leads 3-5x those on low-scored leads.
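The target outcome above can be measured directly once leads have both a model score and a known outcome. The following sketch ranks leads by score, compares the conversion rate of the top fifth with that of the bottom fifth, and returns the ratio. The function name and the toy data are illustrative; real evaluation should use a held-out period the model was not trained on.

```python
def quintile_lift(scored_leads):
    """Ratio of top-quintile to bottom-quintile conversion rate.

    scored_leads: list of (score, converted) pairs, where converted is 0 or 1.
    """
    ranked = sorted(scored_leads, key=lambda pair: pair[0], reverse=True)
    size = len(ranked) // 5
    if size == 0:
        raise ValueError("need at least 5 scored leads")
    top_rate = sum(converted for _, converted in ranked[:size]) / size
    bottom_rate = sum(converted for _, converted in ranked[-size:]) / size
    if bottom_rate == 0:
        return float("inf")  # no conversions at all in the bottom quintile
    return top_rate / bottom_rate


# Toy data: 20 leads with scores 20..1; the ids listed below converted.
converted_scores = {20, 19, 18, 14, 11, 4}
leads = [(score, 1 if score in converted_scores else 0) for score in range(20, 0, -1)]
print(f"Top vs bottom quintile lift: {quintile_lift(leads):.1f}x")
```

In the toy data, three of the four top-scored leads convert against one of the four bottom-scored leads, so the lift is 3x, at the low end of the 3-5x range named above.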

Priority Investment 2: Real-Time Cognitive Coaching Tools

AI systems like Cogito and Salesken analyze sales conversations in real-time, detecting emotional shifts and providing live coaching prompts. These systems transform average performers into high performers by extending human perception into domains humans cannot access alone.

Implementation requires both technology and culture change:

- Platform selection: Evaluate cognitive coaching platforms based on your sales motion – inside sales, field sales, call center – and integration requirements.

- Pilot deployment: Start with a subset of the sales team, measuring performance against a control group.

- Training and adoption: Salespeople need to learn how to receive and act on coaching prompts without breaking conversational flow.

- Ethical framework: Establish policies governing what data is captured, how it is used, and what crosses the line from coaching to manipulation.

Priority Investment 3: Ecosystem Orchestration (Nearbound)

Trust flows through relationships, not advertising. Phase 2 operationalizes this insight by integrating partner data into CRM workflows and building systematic “introduction request” processes.

Partner-influenced revenue can grow to 80% of new business in mature organizations, with win rates increasing by 40% and deal sizes by 50%. These are not marginal improvements – they represent a fundamental shift in how high-performing companies acquire customers.

Implementation priorities include:

Partner data integration: Connect ecosystem platforms (Crossbeam, Reveal) to your CRM, enabling sales reps to see which prospects have relationships with your partners.

Introduction request process: Design the operational workflow for requesting and tracking partner introductions.

Attribution framework: Measure partner influence on pipeline and revenue.

Partner portfolio development: Identify gaps in your partner ecosystem and systematically recruit partners who serve your target accounts.

Target: 40%+ of qualified pipeline influenced by partner relationships by end of Phase 2.
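Measuring progress toward that target requires an agreed definition of "influenced". The sketch below uses a deliberately simple one: an opportunity counts as partner-influenced if it has at least one documented partner touch. The record fields (`amount`, `partner_touches`) are hypothetical and would map to whatever your CRM and ecosystem platform actually expose.

```python
def partner_influenced_share(opportunities):
    """Share of qualified pipeline value with at least one documented partner touch."""
    total = sum(o["amount"] for o in opportunities)
    if total == 0:
        return 0.0
    influenced = sum(o["amount"] for o in opportunities if o["partner_touches"])
    return influenced / total


pipeline = [
    {"account": "Acme", "amount": 50_000, "partner_touches": ["intro:PartnerA"]},
    {"account": "Globex", "amount": 30_000, "partner_touches": []},
    {"account": "Initech", "amount": 20_000, "partner_touches": ["co-sell:PartnerB"]},
]
share = partner_influenced_share(pipeline)
print(f"{share:.0%} of pipeline is partner-influenced; 40% target met: {share >= 0.40}")
```

Weighting by opportunity value rather than by count is a design choice; a count-based version would report two of three opportunities instead.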

Priority Investment 4: Buying Group Scoring Implementation

Traditional lead scoring evaluates individuals. Buying group scoring evaluates the collective – how many stakeholders are engaged, which roles are represented, what is the overall account activity level.

The performance differential makes this shift essential. Forrester research documents that organizations embracing buying group approaches see 20-50% improvement in conversion rates when delivering verified buying groups to sales. Top performers achieve a 17x increase in conversion rates and a 4x improvement in win rates. Compare this to traditional lead-centric processes, which show a 99% failure rate from inquiry to close, with only 7% of marketing-sourced leads rated as high quality by sales.

Implementation requires rethinking your data model:

Buying group detection: Use AI to identify when multiple contacts from an account are researching simultaneously.

Group-level scoring: Develop scores that aggregate individual engagement into account-level metrics.

Scoring model evolution: Train models that predict account outcomes, not just individual lead outcomes.

Sales enablement: Surface buying group intelligence to sales reps in actionable form.
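Group-level scoring, as described above, aggregates individual engagement into one account-level number. The sketch below combines three signals: how many of the expected roles are engaged, how strongly the engaged contacts are interacting, and how many people are involved. The role names, the 0.3 engagement threshold, and the 40/40/20 weighting are all assumptions chosen for illustration and should be calibrated against your own closed-won data.

```python
from dataclasses import dataclass

# Hypothetical roles expected in a complete buying group.
REQUIRED_ROLES = {"economic_buyer", "champion", "technical_evaluator"}


@dataclass
class Contact:
    account: str
    role: str
    engagement: float  # normalized 0..1 engagement for the scoring window


def buying_group_score(contacts, engaged_threshold=0.3):
    """Score an account 0-100 from role coverage, engagement depth, and breadth."""
    engaged = [c for c in contacts if c.engagement >= engaged_threshold]
    if not engaged:
        return 0.0
    role_coverage = len({c.role for c in engaged} & REQUIRED_ROLES) / len(REQUIRED_ROLES)
    avg_engagement = sum(c.engagement for c in engaged) / len(engaged)
    breadth = min(len(engaged), 5) / 5  # cap so very large groups do not dominate
    return round(100 * (0.4 * role_coverage + 0.4 * avg_engagement + 0.2 * breadth), 1)


account_contacts = [
    Contact("Acme", "economic_buyer", 0.6),
    Contact("Acme", "champion", 0.8),
    Contact("Acme", "end_user", 0.1),  # below threshold, so not counted as engaged
]
print(buying_group_score(account_contacts))
```

A score like this makes gaps visible to sales: in the example, no technical evaluator is engaged yet, which lowers role coverage and suggests who to reach next.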

Phase 2 Organizational Changes

Shift from “Leads” to “Buying Groups” Reporting: Replace lead volume metrics with account engagement metrics, buying group coverage scores, and multi-contact conversion rates.

Marketing Compensation Tied to Revenue: Marketing compensation includes metrics on pipeline creation and influence, not just lead delivery. Marketing and sales share portions of compensation tied to identical revenue targets.

AI Skills Development Program: Hands-on instruction in specific AI tools deployed, training on prompt engineering, developing judgment about when to trust AI recommendations and when to override them.

Phase 2 Budget Allocation

| Investment Area | % of Tech Budget | Change from Phase 1 |
|---|---|---|
| Data infrastructure | 20% | -10% (maintenance mode) |
| AI/ML tools | 30% | +20% (from testing budget) |
| Ecosystem platforms | 15% | New allocation |
| Compliance technology | 15% | -10% (maintenance mode) |
| Training/change mgmt | 10% | New allocation |
| Testing/emerging | 10% | Maintained |

Note the new allocation for training and change management. AI tools only produce value when people use them effectively.

Phase 2 Key Metrics

AI Adoption Rate (Target: 70%+): Percentage of licensed users actively using AI tools.

Prediction Accuracy (Target: 3x+ conversion rate differential): Conversion rate difference between top and bottom score quintiles.

Response Time (Target: Sub-5-minute): Time from lead capture to initial sales contact for high-intent leads.

Partner-Influenced Revenue Percentage (Target: 40%+): Closed revenue with documented partner influence.

Phase 3: The Agentic Future (2028-2030)

Phase 3 positions you for the industry transformation that will define the next decade. AI agents as buyers. Agentic commerce at scale. Generative engine optimization. The capabilities that seem speculative today become competitive necessities by 2028.

Phase 3 investments carry more uncertainty than earlier phases. Agent protocols are still maturing. GEO best practices are emerging. The specific technologies that dominate may differ from those prominent today. The strategy is to build adaptive capability rather than bet on specific implementations.

Priority Investment 1: Structure Product Data for AI Agents

When AI agents request quotes, evaluate vendors, and execute purchases on behalf of human decision-makers, structured data becomes essential. Agents cannot interpret your beautifully designed website the way humans can. They need machine-readable data in standard formats.

Implementation priorities include:

Schema.org implementation: Implement comprehensive structured data markup – Product, Service, Offer, Organization, FAQ schemas at minimum.

API development: Build APIs that agents can query directly for pricing, availability, and specifications.

Catalog standardization: Organize product and service information in formats that support automated comparison.

Agent testing: Regularly test how AI systems (ChatGPT, Claude, Perplexity, Google AI) represent your products and services.
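As an illustration of the structured markup described above, the sketch below generates a Schema.org `Service` description with a nested `Offer` as JSON-LD. The property names (`provider`, `offers`, `price`, `priceCurrency`) come from the Schema.org vocabulary; the company name, URL, and price are placeholder values, and which types and properties fit your catalog is a judgment call.

```python
import json


def service_jsonld(name, description, provider, price, currency, url):
    """Build a Schema.org Service with a single Offer as a JSON-LD dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Service",
        "name": name,
        "description": description,
        "provider": {"@type": "Organization", "name": provider},
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "url": url,
        },
    }


markup = service_jsonld(
    name="Exclusive Auto Insurance Leads",          # placeholder product
    description="Real-time, consent-verified leads delivered via API.",
    provider="Example Leads Inc.",                   # placeholder company
    price=25,
    currency="USD",
    url="https://example.com/pricing/auto-insurance",
)
# Embed the output in a <script type="application/ld+json"> tag on the page.
print(json.dumps(markup, indent=2))
```

Generating markup from the same source that feeds your pricing API helps keep what agents read on the page consistent with what they get when they query you directly.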

Priority Investment 2: Implement Agent Protocols (MCP, A2A, ACP)

The agent protocol landscape consolidated rapidly in 2025, with clear patterns emerging. Anthropic’s Model Context Protocol (MCP) achieved industry-standard status within one year of its November 2024 launch, now powering 10,000+ published servers with adoption by OpenAI, Microsoft, Google, and virtually every major AI provider.

Three frameworks are emerging as complementary standards:

Model Context Protocol (MCP): Standardizes how AI agents connect to tools and data sources. It is the dominant protocol for agent-to-tool communication (vertical integration).

Agent-to-Agent Protocol (A2A): Developed by Google with Microsoft collaboration, enables agents from different platforms to communicate and coordinate (horizontal integration). Now at v0.3 production-ready status with 100+ partners.

Agent Communication Protocol (ACP): Developed by IBM through the BeeAI platform, focuses on local-first agent coordination with minimal network overhead.

The enterprise adoption statistics confirm urgency: 79% of organizations have implemented AI agents at some level, 96% of IT leaders plan to expand agent implementations in 2025, and Gartner predicts 40% of enterprise apps will feature task-specific AI agents by 2026 – up from less than 5% in 2025.

Implementation strategy for Phase 3:

- Multi-protocol fluency: Build capability to support multiple protocols rather than betting on one winner.

- MCP priority: Given current adoption momentum, prioritize MCP implementation for your core systems.

- A2A readiness: As enterprise agent ecosystems mature, A2A capability enables participation in multi-agent workflows.

- Swappable architecture: Design systems with protocol abstraction layers that can adapt as standards evolve.
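The swappable architecture idea can be sketched as a thin adapter layer: business logic lives in one place, and each protocol gets a small adapter that translates its message shape. The adapters below are placeholders only. They do not implement the real MCP or A2A wire formats, and the message shapes, catalog, and names are invented for illustration.

```python
from typing import Protocol


def get_quote(product_id: str, quantity: int) -> dict:
    """Core business logic, independent of any agent protocol."""
    unit_price = {"lead-pack-100": 25.0}.get(product_id)  # hypothetical catalog
    if unit_price is None:
        return {"ok": False, "error": "unknown product"}
    return {"ok": True, "product_id": product_id, "total": unit_price * quantity}


class ProtocolAdapter(Protocol):
    def handle(self, message: dict) -> dict: ...


class ToolCallAdapter:
    """Placeholder for a tool-call style protocol; NOT a real MCP implementation."""

    def handle(self, message: dict) -> dict:
        args = message["arguments"]
        return {"result": get_quote(args["product_id"], args["quantity"])}


class AgentMessageAdapter:
    """Placeholder for an agent-to-agent style protocol; NOT a real A2A implementation."""

    def handle(self, message: dict) -> dict:
        body = message["payload"]
        return {
            "reply_to": message["sender"],
            "payload": get_quote(body["product_id"], body["quantity"]),
        }


# Adding or replacing a protocol means registering a new adapter, nothing more.
ADAPTERS: dict[str, ProtocolAdapter] = {
    "tool_call": ToolCallAdapter(),
    "agent_message": AgentMessageAdapter(),
}


def dispatch(protocol: str, message: dict) -> dict:
    return ADAPTERS[protocol].handle(message)


print(dispatch("tool_call", {"arguments": {"product_id": "lead-pack-100", "quantity": 10}}))
```

Because `get_quote` never sees a protocol-specific message, a change in standards touches only the adapter for that protocol.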

Priority Investment 3: GEO Strategy Implementation

Generative Engine Optimization – optimizing content for citation and representation by AI systems – becomes essential as AI agents increasingly mediate information discovery.

The business case is compelling. Princeton and Georgia Tech research documented 40% visibility improvements for optimized content, with adding statistics achieving 30-40% improvement and citing authoritative sources boosting visibility up to 115% for lower-ranked sites. More importantly, AI search visitors are 4.4x more valuable than traditional organic – with 7:35 average session duration (vs. 4:41 for Google organic), 23% lower bounce rates, and 41% more time on site.

Expert projections place the GEO-SEO crossover by end of 2027, when AI search will drive equal economic value to traditional search. By 2028, AI-powered search is projected to capture 30-40% of informational queries, with organic search traffic down 50% from the AI shift. McKinsey projects $750 billion in US revenue flowing through AI search by 2028.

Yet only 16% of brands systematically track AI search performance, creating massive first-mover advantage.

Implementation priorities include:

Citation optimization: Structure content so AI systems can cite and quote you accurately. Clear claims, attributed statistics, and quotable statements improve citation probability.

Entity establishment: Build clear “entity” presence that AI systems recognize – comprehensive Wikipedia entries, consistent information across authoritative sources, structured data that confirms identity.

Content architecture: Organize information for AI extraction, not just human reading.

Monitoring and iteration: Regularly test how AI systems represent your organization.

Priority Investment 4: Build Algorithmic Trust Framework

AI agents do not evaluate vendors the way humans do. They assess structured signals – verified reviews, documented credentials, consistent data, technical reliability.

Framework components include:

Reputation infrastructure: Ensure your presence on review platforms (G2, Capterra, TrustRadius, industry-specific sites) is comprehensive, accurate, and actively managed.

Credential documentation: Make certifications, compliance attestations, and qualifications available in structured formats that agents can verify programmatically.

Technical reliability: API uptime, response time, and consistency become trust signals.

Content verification: As synthetic content proliferates, verifiable authenticity becomes differentiating. Implement content provenance standards (C2PA) that prove your content is genuine.

Phase 3 Budget Allocation

| Investment Area | % of Tech Budget | Purpose |
|---|---|---|
| Agent infrastructure | 25% | API development, protocol support, structured data |
| AI/ML tools | 25% | Continued from Phase 2 (down from 30%); expanded capabilities |
| Spatial/AR-VR | 15% | Digital twins, visualization tools |
| GEO optimization | 10% | Content optimization for AI visibility |
| Ecosystem platforms | 10% | Continued from Phase 2 (down from 15%) |
| Testing/emerging | 15% | Increased from 10% for rapid technology evolution |

The increased testing budget reflects higher uncertainty in Phase 3.

Phase 3 Key Metrics

API Query Volume: Number of API queries from identified AI agents.

Agent Conversion Rate (Target: 10%+ by 2030): Qualified leads originating from AI agent interactions.

Algorithmic Trust Score: Positive, accurate representation in major AI systems.

GEO Citation Rate (Target: 50%+ of relevant queries): Percentage of relevant queries where AI systems mention you.
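The GEO Citation Rate can be tracked with a simple tally once you have collected AI answers for a fixed set of relevant queries. The sketch below assumes those answers were gathered separately, by hand or by a monitoring tool, and counts the share that mention any of your brand terms. The queries, answers, and brand terms are invented sample data.

```python
def citation_rate(answers, brand_terms):
    """Share of queries whose AI answer mentions any brand term (case-insensitive)."""
    if not answers:
        return 0.0
    terms = [term.lower() for term in brand_terms]
    cited = sum(
        1 for text in answers.values() if any(term in text.lower() for term in terms)
    )
    return cited / len(answers)


# query -> answer text collected from an AI system (sample data)
answers = {
    "best auto insurance lead vendors": "Options include ExampleLeads and two others.",
    "how to buy solar leads": "Several marketplaces exist; compare return policies.",
    "exclusive vs shared leads": "According to exampleleads.com, exclusive leads cost more.",
    "lead vendor compliance checklist": "Check consent records and certificates.",
}
rate = citation_rate(answers, ["ExampleLeads", "exampleleads.com"])
print(f"Citation rate: {rate:.0%} (target 50%+)")
```

Keeping the query set fixed over time is what makes the trend meaningful; substring matching is crude, so treat the number as directional rather than exact.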

Transformation by Business Model

The transformation phases apply differently depending on your position in the lead economy.

For Lead Generators/Publishers

Compliance Infrastructure Is Existential: The regulatory environment becomes more stringent, not less. Publishers without robust compliance infrastructure face existential risk. Phase 1 priorities include TrustedForm implementation on all lead capture, consent language that survives regulatory scrutiny, documentation systems that retain evidence for 5+ years, and audit-ready processes.

Quality Over Quantity: Whether the FCC’s one-to-one consent rule returns or state mini-TCPAs proliferate, the trajectory points toward more restrictive consent requirements. Phase 2 priorities include quality scoring that predicts buyer acceptance rates, return rate reduction through better pre-qualification, buyer feedback loops, and premium positioning based on verified quality metrics.

Technology Investment Non-Negotiable: Publishers still operating on manual processes – Excel tracking, email-based delivery, relationship-based quality management – will not survive the transformation. The operational efficiency gap becomes insurmountable.

Prepare for AI Agents as Future Buyers: Phase 3 priorities include API-first lead delivery capability, structured data that agents can evaluate, real-time inventory and pricing availability, and agent protocol support (MCP, A2A).

First-Party Data Development: Publishers who capture email addresses, phone numbers, and declared preferences own assets. Publishers who drive traffic to forms and pass leads immediately own nothing.

GEO Optimization for LLM Visibility: When consumers ask AI assistants for recommendations, publishers who establish GEO visibility early will capture this emerging traffic.

For Lead Buyers

Speed-to-Contact as Primary Differentiator: Response within 5 minutes converts at 8x the rate of response within 30 minutes. Implementing the five-minute rule is fundamental to competitive success. Phase 1-2 priorities include automated routing, dialer infrastructure, AI-powered prioritization, and 24/7 coverage capability.

First-Party Data Development: The most profitable leads are those you generate yourself. Every buyer should invest in first-party data strategies alongside purchased lead channels.

Vendor Compliance Verification: Buying leads from non-compliant generators creates liability. Phase 1 priorities include generator compliance questionnaires and audits, TrustedForm/Jornaya certificate verification, consent language review processes, and contract protections.

CRM Modernization for AI: Legacy CRM systems will not support AI augmentation. Phase 1-2 priorities include modern CRM with AI integration capabilities, data quality that supports model training, workflow automation infrastructure, and integration readiness for cognitive tools.

Buying Group Identification: B2B buyers need the capability to identify and engage buying groups, not just individual leads.

Agent Integration Preparation: When AI agents evaluate and recommend vendors, your presence in their consideration set matters. Phase 3 priorities include structured data for AI comprehension, review platform presence, API capability, and algorithmic trust framework development.

For Platforms

API-First Architecture: Platforms must be accessible to AI agents through APIs, not just humans through interfaces.

Built-In Privacy Compliance: Platforms that simplify compliance win customers.

Real-Time Processing: The future requires real-time routing, validation, and optimization. Batch processing becomes a competitive disadvantage.

Agent Protocol Support: Platforms become infrastructure for AI agent ecosystems. Protocol support determines participation.

Implementation Sequencing

Phase 1 Sequencing (2025-2027)

Months 1-6: Begin data warehouse implementation and hire a Revenue Data Architect. Launch SST implementation on the highest-volume traffic sources. Complete data model design.

Months 6-12: Expand SST coverage. Begin clean room pilots. Complete warehouse deployment. Establish baseline metrics.

Months 12-18: Refine Phase 1 systems. Begin Phase 2 planning. Validate foundation readiness.

Phase 2 Sequencing (2027-2028)

Months 1-6: Begin AI lead scoring development. Pilot cognitive coaching. Launch ecosystem platform integration.

Months 6-12: Roll out AI scoring across full lead flow. Implement buying group reporting. Scale ecosystem orchestration.

Months 12-18: Optimize Phase 2 systems. Begin Phase 3 planning. Validate cognitive layer readiness.

Phase 3 Sequencing (2028-2030)

Year 1 (2028): Implement structured data for agent accessibility. Add MCP support. Launch GEO optimization.

Year 2 (2029): Expand API capabilities. Add A2A support. Iterate GEO strategy.

Year 3 (2030): Full agent-ready infrastructure. Comprehensive algorithmic trust framework. Continuous adaptation to protocol evolution.

Risk Considerations and Uncertainty Management

Phase 1 Risks: Data infrastructure projects frequently encounter scope expansion as hidden data quality issues surface. SST implementations may require additional integration work. A budget contingency of 15-20% protects against cutting corners on foundation quality when that happens.

Phase 2 Risks: AI performance variance requires iteration cycles. Adoption resistance demands change management investment. Shifting from leads to buying groups creates metric discontinuity – prepare leadership for apparent performance declines during the transition.

Phase 3 Risks: Technology evolution, market timing uncertainty, and regulatory changes require building adaptive capability rather than betting on specific implementations. The strategy is developing skills and infrastructure that transfer across multiple possible futures.

The Failure Rate Reality

The stakes are high, and the failure rates are sobering. An MIT NANDA Report found 95% of generative AI pilots fail to achieve rapid revenue acceleration. S&P Global documented that 42% of companies abandoned most AI initiatives in 2025 – up from 17% in 2024. RAND Corporation research shows AI project failure rates exceeding 80%, double that of non-AI IT projects.

The primary culprits: data quality issues (43% of failures), lack of technical maturity (43%), integration difficulties (48%), and budget constraints (50%).

One pattern stands out from this wreckage: purchased AI solutions succeed 67% of the time versus roughly 22% for internal builds. This has driven a dramatic shift – 76% of AI use cases are now purchased rather than built internally, up from 53% in 2024.

The transformation roadmap accounts for these realities, emphasizing foundation-building over experimentation and vendor partnerships over custom development.

Frequently Asked Questions

1. What is the timeline for the lead generation transformation?

The transformation unfolds in three phases: Phase 1 (Data Foundation, 2025-2027) focuses on establishing data sovereignty and recovering lost tracking signal. Phase 2 (Cognitive Layer, 2027-2028) deploys AI augmentation across the revenue process. Phase 3 (Agentic Future, 2028-2030) positions companies for AI agents as autonomous buyers. Each phase builds on the previous, and attempting to skip phases typically results in costly rebuilding.

2. How much should I budget for the transformation?

Phase 1 emphasizes infrastructure (30% data infrastructure, 20% server-side tracking, 25% compliance technology, 15% analytics, 10% testing). Phase 2 shifts toward AI tools (30%) with new allocations for ecosystem platforms (15%) and training (10%). Phase 3 adds agent infrastructure (25%), spatial computing (15%), and GEO optimization (10%). Total investment depends on organization size, but plan for 15-20% contingency in Phase 1 as data quality issues frequently expand scope.
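To make the Phase 1 arithmetic concrete, here is a minimal sketch that applies the percentages above to a budget and sets aside a contingency reserve. The $500,000 figure and the `allocate_phase1` function are hypothetical. The sketch also assumes contingency is held on top of the base budget rather than carved out of it, which the roadmap does not specify.

```python
from typing import Dict

# Phase 1 allocation percentages as stated in the answer above.
PHASE1_SPLIT: Dict[str, float] = {
    "data_infrastructure": 0.30,
    "server_side_tracking": 0.20,
    "compliance_technology": 0.25,
    "analytics": 0.15,
    "testing": 0.10,
}


def allocate_phase1(base_budget: float, contingency_rate: float = 0.15) -> Dict[str, float]:
    """Split a base budget by the Phase 1 percentages and add a contingency line.

    Assumption (not from the roadmap): contingency sits on top of the base
    budget instead of being deducted from it.
    """
    if not 0.15 <= contingency_rate <= 0.20:
        raise ValueError("the roadmap suggests a 15-20% contingency for Phase 1")
    plan = {name: round(base_budget * share, 2) for name, share in PHASE1_SPLIT.items()}
    plan["contingency"] = round(base_budget * contingency_rate, 2)
    return plan


if __name__ == "__main__":
    # Hypothetical $500,000 base budget.
    for line_item, amount in allocate_phase1(500_000).items():
        print(f"{line_item:>22}: ${amount:,.0f}")
```

Swapping in your own base budget and a contingency rate anywhere in the 15-20% range gives a first-pass plan to pressure-test with finance.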

3. What is server-side tracking and why does it matter?

Server-side tracking routes conversion data through your own servers before forwarding to ad platforms, bypassing browser restrictions that now block 30%+ of client-side tracking. Implementation recovers 20-40% of lost conversion signals, with documented Meta Conversions API improvements of 22-38% and Google Enhanced Conversions delivering +5-17% average lifts. Current adoption sits at 20-25% of SMBs, with 70% projected by 2027.
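The core idea, building the conversion event on your own server before anything is forwarded, can be sketched in a few lines. This is an illustration under stated assumptions, not any ad platform's actual schema: the field names and the `build_server_event` helper are hypothetical. The sketch normalizes and SHA-256 hashes the email before it leaves your server. Conversion APIs commonly require this, but each platform's documentation defines the exact rules.

```python
import hashlib
import time
from typing import Any, Dict, Optional


def hash_identifier(value: str) -> str:
    """Normalize (trim, lowercase) an identifier, then SHA-256 hash it."""
    normalized = value.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def build_server_event(
    event_name: str,
    email: str,
    consent_given: bool,
    event_time: Optional[int] = None,
) -> Optional[Dict[str, Any]]:
    """Build a conversion event server-side, ready to forward to an ad platform.

    Returns None when consent is missing, so nothing gets forwarded.
    Field names are illustrative placeholders, not a real platform schema.
    """
    if not consent_given:
        return None
    return {
        "event_name": event_name,
        "event_time": event_time if event_time is not None else int(time.time()),
        "hashed_email": hash_identifier(email),
        "source": "server",
    }
```

Because the event is assembled on infrastructure you control, the consent check and the hashing happen before any third party sees the data.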

4. What are data clean rooms and when should I implement them?

Data clean rooms enable secure collaboration with partners – matching customer lists, measuring campaign overlap, identifying opportunities – without exposing raw data. Ninety percent of B2C marketers now use clean rooms. Average investment runs $879,000, with 62% spending $200,000+. Start with pilot programs in Phase 1 (3-6 months to initial insights), then scale in Phase 2.

5. What is agentic commerce and how will it affect my business?

Agentic commerce refers to AI agents acting as autonomous buyers – shopping, negotiating, and transacting without human intervention. McKinsey projects $3-5 trillion globally by 2030. For lead generation, agents will bypass forms entirely, querying APIs directly. The “conversion moment” migrates from your landing page to the algorithm’s decision logic. Companies without machine-readable data and API infrastructure become invisible to agent-mediated commerce.

6. What is the difference between buying group scoring and traditional lead scoring?

Traditional lead scoring evaluates individuals with static point values. Buying group scoring evaluates the collective – how many stakeholders are engaged, which roles are represented, overall account activity. Forrester documents 20-50% conversion improvement from buying group approaches, with top performers achieving a 17x increase in conversion rates and a 4x improvement in win rates.
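The difference is easier to see side by side. The sketch below is illustrative only: the role names, the 40/40/20 weights, and the caps are assumptions made for the example, not a Forrester model or a recommended formula.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Contact:
    role: str               # e.g. "economic_buyer", "technical_evaluator"
    engagement_points: int  # static points, as in traditional lead scoring


# Hypothetical set of roles a complete buying group should cover.
REQUIRED_ROLES: Set[str] = {"economic_buyer", "technical_evaluator", "end_user"}


def lead_score(contact: Contact) -> int:
    """Traditional scoring: one individual, one static point value."""
    return contact.engagement_points


def buying_group_score(contacts: List[Contact]) -> float:
    """Collective scoring on a 0-100 scale.

    Combines the three signals named above: engaged stakeholders,
    role coverage, and overall account activity. Weights are assumptions.
    """
    engaged = [c for c in contacts if c.engagement_points > 0]
    if not engaged:
        return 0.0
    stakeholder_part = min(len(engaged), 5) / 5  # capped at 5 engaged people
    roles_covered = {c.role for c in engaged} & REQUIRED_ROLES
    role_part = len(roles_covered) / len(REQUIRED_ROLES)
    activity_part = min(sum(c.engagement_points for c in engaged), 100) / 100
    return round(100 * (0.4 * stakeholder_part + 0.4 * role_part + 0.2 * activity_part), 1)
```

Under these assumed weights, one highly engaged end user scores well as an individual lead but poorly as a buying group, while three moderately engaged contacts covering all three roles score far higher as an account.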

7. What is Generative Engine Optimization (GEO)?

GEO optimizes content for AI citation rather than search ranking. As discovery shifts to AI platforms like ChatGPT (800 million weekly users), being cited in AI-generated answers becomes as important as search ranking. Princeton research documented 40% visibility improvements for optimized content. AI search visitors are 4.4x more valuable than traditional organic traffic. Expert projections place the GEO-SEO crossover by the end of 2027.

8. What protocols should I prepare for in the agentic future?

Three complementary protocols are emerging: Model Context Protocol (MCP) for agent-to-tool communication (industry standard with 10,000+ servers), Agent-to-Agent Protocol (A2A) for cross-platform agent coordination (v0.3 production-ready with 100+ partners), and Agent Communication Protocol (ACP) for local-first deployment. Prioritize MCP given current adoption, but build with protocol abstraction layers for future flexibility.
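A protocol abstraction layer can be as simple as keeping business logic protocol-neutral and pushing each protocol's envelope into its own adapter. The sketch below shows that shape only. Every class name is hypothetical, and the adapters return placeholder dictionaries. A real adapter would delegate to the relevant protocol SDK, which is deliberately not shown here.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict


class AgentProtocolAdapter(ABC):
    """Hypothetical seam: business logic depends on this, not on a protocol."""

    name: str

    @abstractmethod
    def wrap_response(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        """Wrap a protocol-neutral payload in a protocol-specific envelope."""


class McpAdapter(AgentProtocolAdapter):
    name = "mcp"

    def wrap_response(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        # Placeholder envelope; a real adapter would call an MCP SDK here.
        return {"protocol": self.name, "result": payload}


class A2aAdapter(AgentProtocolAdapter):
    name = "a2a"

    def wrap_response(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        # Placeholder envelope; a real adapter would call an A2A SDK here.
        return {"protocol": self.name, "result": payload}


class LeadInventoryService:
    """Protocol-neutral business logic with pluggable adapters."""

    def __init__(self) -> None:
        self._adapters: Dict[str, AgentProtocolAdapter] = {}

    def register(self, adapter: AgentProtocolAdapter) -> None:
        self._adapters[adapter.name] = adapter

    def availability(self, protocol: str, vertical: str) -> Dict[str, Any]:
        # The payload is built once, independent of which protocol asked.
        payload = {"vertical": vertical, "available": True}
        return self._adapters[protocol].wrap_response(payload)
```

With this shape, adding ACP later means writing one more adapter and registering it. The inventory logic itself does not change.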

9. How do I measure success across the three phases?

Phase 1 metrics: Data quality score (target 85%+), SST coverage (80%+), first-party data percentage (60%+), consent capture rate (95%+). Phase 2 metrics: AI adoption rate (70%+), prediction accuracy (3x+ conversion differential), response time (sub-5-minute), partner-influenced revenue (40%+). Phase 3 metrics: API query volume, agent conversion rate (10%+ by 2030), algorithmic trust score, GEO citation rate (50%+).
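The Phase 1 targets lend themselves to a simple readiness check before moving on. This is a minimal sketch: the metric keys and function names are hypothetical, and only the threshold values come from the targets above.

```python
from typing import Dict, List

# Phase 1 targets from the answer above, as minimum percentages.
PHASE1_TARGETS: Dict[str, float] = {
    "data_quality_score": 85.0,
    "sst_coverage": 80.0,
    "first_party_data_pct": 60.0,
    "consent_capture_rate": 95.0,
}


def phase1_gaps(measured: Dict[str, float]) -> List[str]:
    """Return the metrics that are missing or below their Phase 1 target."""
    return [
        name
        for name, target in PHASE1_TARGETS.items()
        if measured.get(name, 0.0) < target
    ]


def ready_for_phase2(measured: Dict[str, float]) -> bool:
    """Foundation is considered ready only when every target is met."""
    return not phase1_gaps(measured)


if __name__ == "__main__":
    # Hypothetical measurements for illustration.
    current = {
        "data_quality_score": 90.0,
        "sst_coverage": 75.0,
        "first_party_data_pct": 60.0,
        "consent_capture_rate": 96.0,
    }
    print("Gaps:", phase1_gaps(current))
    print("Ready for Phase 2:", ready_for_phase2(current))
```

A missing metric counts as a gap, so an unmeasured target cannot pass by omission.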

10. What are the biggest risks to transformation success?

AI project failure rates exceed 80%, with primary culprits being data quality issues (43%), lack of technical maturity (43%), integration difficulties (48%), and budget constraints (50%). Purchased AI solutions succeed 67% of the time versus roughly 22% for internal builds. The roadmap mitigates these risks by emphasizing foundation before capability, vendor partnerships over custom builds, and phased implementation with validation gates between phases.

Key Takeaways

- The three-phase roadmap spans 2025-2030: Phase 1 builds the data foundation (server-side tracking, data warehouse, compliance), Phase 2 adds the cognitive layer (AI scoring, real-time coaching, buying groups), and Phase 3 enables the agentic future (agent protocols, GEO, algorithmic trust).

- The data foundation is a non-negotiable prerequisite: 95% of generative AI pilots fail to achieve rapid revenue acceleration, and data quality issues account for 43% of failures. Purchased AI solutions succeed 67% of the time versus roughly 22% for internal builds.

- Server-side tracking recovers 20-40% of lost conversion signals. Meta Conversions API delivers 22-38% improvement. Google Enhanced Conversions provides +5-17% lifts. Current adoption sits at 20-25% of SMBs, projected to reach 70% by 2027.

- Phase 2 organizational changes require shifting from “leads” to “buying groups” reporting, tying marketing compensation to revenue, and investing 10% of budget in AI skills development alongside technology.

- Agentic commerce could reach $3-5 trillion globally by 2030. ChatGPT has 800 million weekly users. AI agents will bypass forms to query APIs directly, making machine-readable data and API infrastructure competitive requirements.

- The GEO-SEO crossover is projected by the end of 2027. AI search visitors are 4.4x more valuable than traditional organic traffic. Only 16% of brands systematically track AI search performance, creating first-mover advantage.

- Business model-specific priorities differ: publishers must prioritize compliance and quality over volume, buyers need speed-to-contact and first-party generation, and platforms require API-first architecture and real-time processing.

- Budget allocation evolves across phases: Phase 1 emphasizes infrastructure (30%) and compliance (25%), Phase 2 shifts to AI tools (30%) with a training allocation (10%), and Phase 3 balances agent infrastructure (25%), spatial computing (15%), and GEO (10%).

Sources

- Grand View Research - Lead Generation Software Market - Market size projections ($5.11B in 2024 to $12.37B by 2033, 10.32% CAGR)

- Precedence Research - Lead Generation Software Market - Broader market projections reaching $32.1B by 2035

- Anthropic - Model Context Protocol Announcement - MCP protocol documentation with 10,000+ published servers

- Forrester Research - Buying group research showing 20-50% conversion improvement; B2C clean room adoption data (90% of marketers)

- Snowflake - Data warehouse platform for first-party data foundation and clean room infrastructure

Statistics current as of December 2025.