AI-referred traffic jumped 527% in five months. 25% of ChatGPT’s most-cited URLs have zero Google visibility. The metric that mattered for two decades – search position – has been displaced by citation frequency in AI-generated responses.

A lead generation company ranks position one for “best mortgage lead vendors” on Google. Their SEO team celebrates. Traffic looks solid. Then someone asks ChatGPT the same question.

The AI responds with three recommendations. None of them is the company ranking first on Google. The businesses mentioned have modest search rankings but one thing in common: their content structure, authority signals, and entity clarity made them citation-worthy to language models.

The first company paid six figures for that ranking. The cited companies paid nothing for the mention. Yet the AI response reaches 600 million monthly ChatGPT users – and those users convert at 4.4x the rate of traditional search visitors.

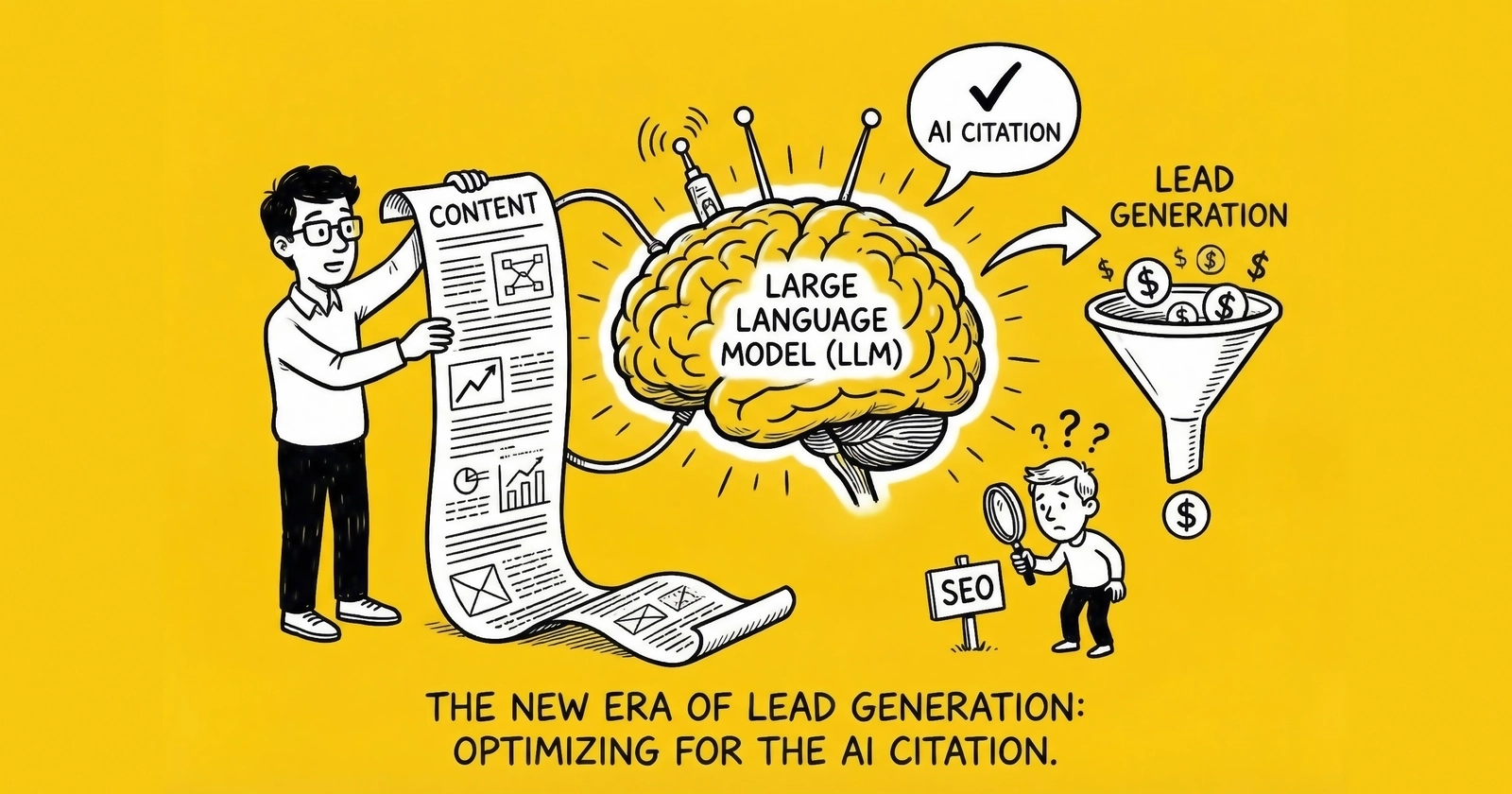

This is LLMO – Large Language Model Optimization, also called Generative Engine Optimization (GEO). It’s not about ranking. It’s about being cited. And for lead generators operating in a visibility-driven industry, ignoring this shift means watching competitors capture traffic from channels that didn’t exist three years ago.

The End of the Click-Driven Paradigm

For twenty-five years, digital marketing operated under one assumption: higher rankings meant more traffic. Google’s PageRank created a quantifiable hierarchy. Position one was worth exponentially more than page two. Entire industries – SEO agencies, keyword platforms, rank tracking software – built themselves around this metric.

That era is ending.

Why Traditional SEO Metrics Are Becoming Obsolete

The traditional SEO metric – ranking position – was always a proxy. Nobody wanted to rank number one for a keyword. They wanted traffic. They wanted customers. Ranking was simply the most measurable path to those goals.

Proxies break down when the underlying system changes. When users stop clicking through to websites, the proxy loses correlation to actual outcomes. A lead generation company can rank position one for a high-volume keyword and receive diminishing returns if AI systems answer the question without citing them.

The data is unambiguous:

| Metric | Finding |
|---|---|
| Citation overlap | Only 10% overlap between ChatGPT citations and Google rankings for short-tail queries |
| Zero Google visibility | 25% of top 1,000 ChatGPT-cited URLs show zero organic visibility in Google |
| Top 3 citations | 50% of ChatGPT’s top 3 cited URLs have no organic visibility |
| Content freshness | 89.7% of ChatGPT’s top cited pages were updated in 2025 |

A company can optimize extensively for traditional search and still be excluded from AI responses if the model doesn’t consider them authoritative. Conversely, a company establishing clear authority signals can be cited in AI responses even without top rankings.

The 527% AI Traffic Growth Nobody Prepared For

Between January and May 2025, AI-referred sessions jumped from 17,076 to 107,100 – a 527% increase in five months. Adobe Analytics recorded 1,200% growth in generative AI traffic between July 2024 and February 2025. Google AI Overviews reached 1.5 billion monthly users. ChatGPT hit 600 million monthly users by March 2025.
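The headline percentage can be checked directly from the two session counts quoted above. This is a minimal sketch of the arithmetic; the function name `pct_increase` is illustrative and not taken from any analytics tool.

```python
def pct_increase(start: float, end: float) -> float:
    """Percentage growth from start to end, rounded to one decimal place."""
    if start <= 0:
        raise ValueError("start must be positive")
    return round((end - start) / start * 100, 1)


# Session counts quoted in the article (January vs. May 2025).
growth = pct_increase(17_076, 107_100)
print(f"{growth}% increase")
```

A 527% increase means May sessions were roughly 6.3 times the January figure, not 5.3 times, because the percentage measures growth on top of the starting value.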

Gartner predicts traditional search engine volume will drop 25% by 2026 as users rely on AI assistants.

For lead generators, these numbers represent both threat and opportunity. Threat because traditional SEO investments may yield diminishing returns. Opportunity because AI search visitors convert 4.4x better than traditional organic visitors – they’ve already done their research, they know what they want, and they’re ready to act.

Platform Market Share: ChatGPT Dominates, But Others Matter

Understanding where your audience seeks answers determines where optimization efforts should focus:

| Platform | Market Share | Session Value | Citation Style |
|---|---|---|---|
| ChatGPT | 77.97% | – | Inconsistent, favors depth |
| Perplexity | 15.10% | $3.12/visit | Citation-first, numbered references |
| Gemini | 6.40% | – | Real-time retrieval |
| Claude | 0.17% | $4.56/visit | Training data focus, technical accuracy |
| DeepSeek | 0.37% | – | Emerging market |

Claude’s $4.56 session value – the highest among platforms – reflects its user base: technical decision-makers seeking detailed, accurate information. For B2B lead generation, this audience profile matters.

How AI Systems Evaluate Sources Differently

Google’s ranking algorithm answers one question: “Which page would most users find most helpful?” It analyzes hundreds of signals – content relevance, backlink quality, user behavior – and produces a ranking.

Language models work from a different question: “What can I synthesize to provide the best answer?” They’re not ranking pages. They’re evaluating which sources contain credible, relevant information worth including in responses.

From PageRank to Citation Authority

When multiple sources confirm the same information, language models gain confidence. When a source has strong credibility signals, they prioritize it. When information is recent and specific, they include it.

Citations serve multiple purposes in this system:

- Attribution – allowing users to verify information

- Credibility signals – indicating which sources the model trusts

- Information trail – showing how knowledge flows through the web

From a language model’s perspective, being cited means being trusted. Your information made it into the response because the model evaluated it as credible. This is a stronger signal than ranking position, which might reflect keyword optimization more than actual authority.

Why 25% of Top ChatGPT-Cited URLs Have Zero Google Visibility

The divergence between search rankings and AI citations reveals fundamental differences in evaluation:

Traditional SEO rewards:

- Keyword density and placement

- Backlink volume and authority

- Technical optimization (speed, mobile, structure)

- User behavior signals

LLMO rewards:

- Entity clarity and consistency

- Author credentials and expertise signals

- Content structure that enables extraction

- Factual accuracy and source attribution

- Cross-platform authority confirmation

A lead generation blog post can rank highly through backlink building and keyword optimization while providing surface-level content. That same post gets ignored by AI systems that detect thin authority signals.

Conversely, a deeply technical article with limited backlinks but strong author credentials, specific data, and clear entity definitions can earn AI citations without ranking well in traditional search.

The 89.7% Freshness Factor – Recency in AI Citation

ChatGPT’s most-cited pages share one characteristic: 89.7% were updated in 2025. Language models interpret freshness as a credibility signal, particularly for topics where information changes rapidly.

For lead generation content, this has direct implications:

- TCPA compliance guides need current regulatory information

- CPL benchmarks require recent market data

- Platform comparisons must reflect current feature sets

- Industry trend analysis loses citation value quickly

Content published in 2023 about lead generation practices may rank well in Google but get overlooked by AI systems seeking current information. Update cadence becomes a citation factor, not just an SEO factor.

The Four Pillars of LLMO for Lead Generation

Citation frequency isn’t random. Language models follow patterns that can be understood and optimized. Four pillars determine whether your content gets cited or ignored.

Entity Architecture – Defining Your Business Precisely

AI systems process entities – distinct, recognizable things. Your company is an entity. Your team members are entities. Your products are entities. The relationships between these entities matter profoundly.

Traditional SEO largely ignored entity clarity. A lead generation company might describe itself as “a marketing agency” on one page, “a lead provider” on another, and “a performance marketing partner” on a third. For Google’s algorithm, this flexibility worked fine. For language models, it creates ambiguity.

Weak entity definition:

“We provide marketing services and help businesses grow.”

Strong entity definition:

“A mortgage lead generation company specializing in exclusive live transfers, delivering 12,000+ verified leads monthly to regional lenders with 87% contact rates and 14% conversion to funded loans.”

The specific version gives language models clear reference points. They understand not just what you do, but exactly who you serve, what outcomes you deliver, and how you differ from alternatives.

For lead generators, entity clarity means documenting:

- Specific verticals served (auto insurance, Medicare, solar, home services)

- Lead delivery methods (ping/post, live transfer, aged leads)

- Compliance frameworks (TrustedForm, Jornaya, TCPA certification)

- Geographic coverage and licensing

- Integration capabilities (Salesforce, custom API, LeadsPedia)
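One way to keep an entity definition consistent across pages is to generate it once as schema.org `Organization` markup and reuse it. The sketch below is a hedged illustration: the company name, URLs, and field values are placeholders, and the choice of `knowsAbout` and `areaServed` to carry verticals and coverage is an assumption about how you might map the items above, not a requirement.

```python
import json


def organization_jsonld(name, url, description, verticals, area_served, same_as):
    """Build a schema.org Organization object as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "knowsAbout": verticals,      # verticals and delivery methods
        "areaServed": area_served,    # geographic coverage
        "sameAs": same_as,            # profiles that confirm the same entity
    }
    return json.dumps(data, indent=2)


# All values below are placeholders for a hypothetical company.
snippet = organization_jsonld(
    name="Example Mortgage Leads",
    url="https://www.example.com",
    description=(
        "Mortgage lead generation company specializing in exclusive "
        "live transfers for regional lenders."
    ),
    verticals=["mortgage leads", "live transfers"],
    area_served="US",
    same_as=["https://www.linkedin.com/company/example"],
)
print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```

Using the same `description` string on the homepage, about page, and service pages is the point of generating it in one place.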

E-E-A-T Amplification – Trust Signals That AI Systems Detect

Google introduced E-E-A-T – Experience, Expertise, Authoritativeness, Trustworthiness – for content quality evaluation. Language models take this further, scanning your entire digital presence for consistency. Our guide to E-E-A-T trust signals for lead generation covers the specific signals that matter most.

Experience means showing real-world involvement. Case studies, campaign results, operational specifics. A claim that you’re “experienced in lead generation” carries less weight than “delivered 2.3 million Medicare leads across 47 states during 2025 AEP, maintaining 91% TrustedForm certification rate.”

Expertise appears through technical depth and specificity. Language models detect whether content comes from someone who operates in the industry or someone who researched it for an article. Crucially, expertise signals must match your audience – consumer-facing content shows expertise through specific outcomes, local knowledge, and practical guidance, while B2B content targeting industry peers can use technical terminology appropriate to that audience.

Authoritativeness comes from external recognition. When your content is cited by industry publications, mentioned in compliance discussions, or referenced by platform vendors, language models recognize these signals. A white paper on your website carries less weight than one cited in an ActiveProspect blog post.

Trustworthiness ties everything together. For lead generation – a YMYL (Your Money or Your Life) category that can affect financial outcomes – trust signals matter more. Document your compliance processes. Show your TrustedForm integration. Reference specific FTC or state regulatory requirements. Link to your privacy policy and consent language.

Content Structure for AI Synthesis

Human readers scan content, jumping to sections of interest. Language models process differently. They analyze structure, extract relationships, and identify how ideas connect.

Research shows clear patterns in what gets cited:

| Content Pattern | Citation Impact |
|---|---|
| List sections | 80% of ChatGPT-cited articles include lists (vs. 28.6% of Google top results) |
| Average list count | Pages cited by ChatGPT average 14 list sections |
| Heading hierarchy | Sequential H1-H2-H3 structure cited nearly 3x more often |
| Answer length | Optimal section opener: 40-60 words |
| Semantic cues | “In summary,” “step 1,” “common mistake” trigger extraction |

For lead generation content, structure means:

Question-based headers:

- “How does ping/post lead distribution work?”

- “What contact rates should buyers expect?”

- “Why do exclusive leads cost more than shared leads?”

Direct answer openers:

Ping/post distribution routes leads through a two-phase auction. The ping phase transmits partial data for buyer evaluation and bidding. The post phase delivers complete lead information to the winning bidder. This process completes in under 200 milliseconds.

Scannable formats:

- Numbered steps for processes

- Bullet lists for features or requirements

- Tables for comparisons and benchmarks

- Code blocks for API specifications
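The sequential heading structure mentioned above can be checked mechanically. The following sketch uses only the Python standard library and flags places where a page jumps more than one heading level (for example, H2 straight to H4). The class and function names are illustrative, and treating any downward jump of more than one level as a problem is an assumption about what "sequential" means here.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # Match h1..h6 only; tags such as <hr> or <header> are ignored.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_skips(html: str) -> list[tuple[int, int]]:
    """Return (previous, current) pairs where a heading level is skipped."""
    parser = HeadingCollector()
    parser.feed(html)
    levels = parser.levels
    return [(a, b) for a, b in zip(levels, levels[1:]) if b > a + 1]


page = "<h1>Ping/Post Guide</h1><h2>How it works</h2><h4>Timing</h4>"
print(heading_skips(page))  # reports the h2 -> h4 jump
```

Moving back up the hierarchy (H3 followed by H2) is not flagged, since that is how a new section normally starts.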

Cross-Platform Optimization – Building Citation Sources

The fragmentation of AI platforms means no single optimization works everywhere. Content structured for ChatGPT’s conversational style might perform differently in Perplexity’s research format or Google’s AI Overviews.

Sophisticated LLMO involves:

Multi-channel distribution:

- Your website (foundation)

- Industry publications (LeadsCon, PerformanceIN)

- Compliance resources (ActiveProspect blog, Jornaya documentation)

- Research platforms (industry whitepapers, benchmark studies)

Citation source building: When authoritative platforms mention your content, those citations become signals to language models. A case study on your website carries modest weight. The same study referenced in a platform vendor’s documentation carries substantially more.

Platform-specific considerations:

- Perplexity: Real-time retrieval, most transparent citations, generates referral traffic

- ChatGPT: Favors depth over freshness, citations inconsistent but influential

- Claude: Relies heavily on training data, prioritizes technical accuracy

- Google AI Overviews: 82% of citations come from deep topic pages, not homepages

Content Patterns That Get Cited

Beyond structure, specific content patterns correlate with higher citation frequency. Understanding these patterns reveals why some lead generation content gets mentioned while similar content gets ignored.

The 80% List Factor – Why Bullet Points Win

80% of articles cited by ChatGPT include at least one list section. Pages cited by ChatGPT average 14 list sections. Compare this to Google’s top results, where only 28.6% include lists.

Why? Lists enable clean extraction. When a user asks “What are the benefits of exclusive leads?”, a language model can pull a bullet list directly into the response. A narrative paragraph requires synthesis, introducing potential error and reducing confidence.

Effective list content for lead generation:

- “7 questions to ask before signing a lead vendor contract”

- “The 5 compliance requirements every lead generator needs”

- “8 metrics that indicate lead quality problems”

- “12 red flags in lead purchase agreements”

Each list becomes a potential citation point. Each bullet becomes extractable content.
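To compare a page against the list figures above, you can count its list sections. This is a rough sketch using the standard library; counting only top-level `<ul>` and `<ol>` elements (so nested lists do not inflate the total) is an assumption, since the cited research does not say how list sections were counted.

```python
from html.parser import HTMLParser


class ListCounter(HTMLParser):
    """Count top-level <ul>/<ol> elements, ignoring nested lists."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("ul", "ol"):
            if self.depth == 0:
                self.count += 1
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in ("ul", "ol") and self.depth > 0:
            self.depth -= 1


def count_list_sections(html: str) -> int:
    """Return the number of top-level lists in an HTML fragment."""
    parser = ListCounter()
    parser.feed(html)
    return parser.count


sample = "<ul><li>a<ul><li>nested</li></ul></li></ul><ol><li>b</li></ol>"
print(count_list_sections(sample))
```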

Question-Based Headers and 2-Sentence Answers

Language models parse question-answer structures efficiently. When headers phrase the questions users ask, and opening sentences provide direct answers, the content becomes citation-ready.

Weak header:

Lead Quality Considerations

Strong header:

How Do You Identify Low-Quality Lead Vendors?

Effective answer opener:

Low-quality lead vendors show consistent patterns: refusal to provide sample leads, vague descriptions of traffic sources, no compliance documentation, and contracts that prohibit chargebacks. Request TrustedForm certificates, source transparency reports, and 7-day trial periods before committing volume.

The question header matches user queries. The answer opener provides an extractable response. The follow-up adds depth for users who click through.

The 40-60 Word Answer Capsule

Research indicates optimal citation-ready answers fall between 40-60 words. Long enough to be substantive, short enough to extract without modification.

Example – 49 words:

Cost per lead varies dramatically by vertical and lead type. Auto insurance shared leads typically run $8-15, while exclusive live transfers reach $40-80. Medicare leads during AEP can exceed $100 exclusive. The variance reflects intent quality, competition, and conversion probability. Benchmark your CPL against vertical-specific data, not industry averages.

This format works because it:

- Answers the implied question directly

- Provides specific numbers

- Acknowledges variance

- Offers actionable guidance

- Fits within extraction parameters
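A simple length check can be run over section openers before publishing. The sketch below counts whitespace-separated tokens, which is an assumption: the research cited here does not specify how words were counted, and the function names are illustrative.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated tokens."""
    return len(text.split())


def is_answer_capsule(text: str, low: int = 40, high: int = 60) -> bool:
    """True when the opener falls inside the target word range (inclusive)."""
    return low <= word_count(text) <= high


# The cost-per-lead example from this section.
opener = (
    "Cost per lead varies dramatically by vertical and lead type. "
    "Auto insurance shared leads typically run $8-15, while exclusive "
    "live transfers reach $40-80. Medicare leads during AEP can exceed "
    "$100 exclusive. The variance reflects intent quality, competition, "
    "and conversion probability. Benchmark your CPL against "
    "vertical-specific data, not industry averages."
)
print(word_count(opener), is_answer_capsule(opener))
```

Hyphenated terms and price ranges such as `$8-15` count as single tokens under this rule, so results may differ slightly from a word processor's count.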

Semantic Cues That Trigger Citation

Certain phrases signal importance to language models:

- “The most important factor is…”

- “In summary…”

- “Step 1 / Step 2 / Step 3…”

- “Common mistakes include…”

- “The key difference is…”

- “According to [source]…”

These phrases function as extraction triggers. They indicate which content represents core concepts versus supporting detail.

Lead Generation Industry Applications

LLMO principles apply universally, but implementation varies by vertical. Lead generation’s specific characteristics – regulatory complexity, real-time operations, buyer-seller dynamics – create unique optimization opportunities.

Insurance Lead Content That Gets Cited

Insurance leads operate under intense regulatory scrutiny. Content addressing compliance, carrier requirements, and state-specific rules has citation potential because:

- Questions are common and specific

- Information changes with regulatory updates

- Authoritative sources are limited

- Incorrect answers carry liability

High-citation-potential topics:

- Medicare marketing guidelines (CMS-4192-F updates)

- State mini-TCPA requirements (Florida, Oklahoma, Washington)

- Carrier appointment requirements by state

- Consent language requirements for multi-carrier leads

Content structured around specific regulations, with citation to primary sources (CMS, FTC, state insurance commissioners), earns authority signals that AI systems recognize.

Mortgage and Lending Visibility in AI Responses

Mortgage lead generation faces unique challenges: RESPA requirements, licensing complexity, rate-sensitive timing. Content addressing these specifics with current data has citation value.

High-potential structures:

- State licensing requirement comparisons

- RESPA compliance for lead generators

- CPL benchmarks by loan type and geography

- Lead-to-funding conversion rate analysis

The key is specificity. Generic content about “mortgage lead generation” competes with thousands of pages. Content about “NMLS licensing requirements for mortgage lead aggregators operating in Texas” answers specific questions that AI systems field.

Legal Lead Generation Authority Signals

Legal leads carry the highest compliance burden and CPLs. Content stands out when it demonstrates an understanding of:

- State bar advertising rules

- Attorney client solicitation boundaries

- Mass tort lead qualification standards

- Retainer agreement requirements

This content positions generators as authoritative because few competitors invest in this depth. The narrower the expertise demonstrated, the more likely AI systems cite it for relevant queries.

Measuring LLMO Success

Traditional SEO had measurement clarity. Rank tracking tools showed positions. Analytics showed traffic. Attribution showed conversions. LLMO measurement is less mature but not impossible.

Citation Tracking Tools and Methods

Several platforms now monitor AI citations:

| Tool | Starting Price | Capabilities |
|---|---|---|
| LLMO Metrics / GEO Metrics | EUR 80/month | ChatGPT, Gemini, Claude, Perplexity, DeepSeek, Copilot |
| Peec AI | EUR 89/month | Brand visibility, sentiment, competitor benchmarking |
| Semrush AI SEO Toolkit | Included | ChatGPT visibility, sentiment share, content gaps |
| SE Ranking AI Search Toolkit | $89/month add-on | AI Overviews, ChatGPT, Gemini monitoring |

These tools track brand mentions across platforms, identify which queries cite your content, and benchmark against competitors. The data isn’t as clean as rank tracking, but it provides directional insight.

The Session Value Difference – Claude at $4.56/Visit

Not all AI traffic carries equal value. Claude users generate $4.56 average session value – the highest across platforms. Perplexity users generate $3.12. This variance reflects audience composition.

For B2B lead generation companies selling to sophisticated buyers, Claude and Perplexity traffic may convert better than ChatGPT volume despite smaller numbers. Segment your analytics by AI referrer to understand actual value.
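Segmenting by AI referrer can start with a small lookup on the referrer hostname. This is a sketch under stated assumptions: the hostnames below are the ones these products are commonly served from, but which referrers actually reach your analytics (and whether any are stripped) should be verified against your own data. The function names are illustrative.

```python
from urllib.parse import urlparse

# Assumed referrer hostnames; verify against your own analytics data.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "claude.ai": "Claude",
    "gemini.google.com": "Gemini",
    "chat.deepseek.com": "DeepSeek",
}


def classify_referrer(referrer_url: str) -> str:
    """Map a referrer URL to an AI platform name, or 'other'."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRERS.get(host, "other")


def sessions_by_platform(referrers):
    """Tally sessions per platform from an iterable of referrer URLs."""
    counts = {}
    for ref in referrers:
        platform = classify_referrer(ref)
        counts[platform] = counts.get(platform, 0) + 1
    return counts


visits = [
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=mortgage+leads",
    "https://claude.ai/chat/abc",
    "https://www.google.com/",
]
print(sessions_by_platform(visits))
```

Joining these platform labels to revenue or lead value per session is what makes the per-platform session value comparison possible.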

Proxy Metrics When Direct Measurement Fails

Without comprehensive citation tracking, proxy metrics provide insight:

Brand search volume: When AI mentions increase, users search your brand name. Monitor branded search trends in Google Search Console.

Direct traffic trends: AI citations drive direct visits when users remember your brand rather than click citations. Unusual direct traffic growth can indicate AI visibility.

Referral patterns: Perplexity provides measurable referral traffic. Other platforms often appear as direct traffic, though some visits may be identifiable if you append UTM parameters to the URLs listed in your llms.txt file.

Manual testing: Regularly query ChatGPT, Claude, Perplexity, and Google AI Overviews with questions your target audience asks. Note whether you’re cited, who is cited instead, and what those cited sources have in common.

Implementation Roadmap for Lead Generators

Transitioning from SEO-only to LLMO-inclusive strategy requires structured implementation. The following roadmap balances quick wins with foundational investment.

Week 1-2: Foundation

Entity audit:

- Document how your company is described across all digital properties

- Identify inconsistencies in terminology, service descriptions, geographic claims

- Create entity definition document with precise, specific language

- Update homepage, about page, and service pages with consistent definitions

Author credentialing:

- Create or expand author bio pages

- Document industry experience with specific metrics (years, lead volume, verticals)

- Add schema markup for Person entities

- Link to LinkedIn and industry profiles

Week 3-4: Content Restructuring

High-value content audit:

- Identify 10-15 pages with highest traffic or strategic importance

- Restructure with question-based headers

- Add 40-60 word answer openers to each section

- Insert list sections (minimum 5 per page)

- Add semantic cue phrases

FAQ expansion:

- Add FAQ schema to key pages

- Structure questions as users actually ask them

- Provide direct, extractable answers

- Include specific numbers and data points
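FAQ schema is usually published as a JSON-LD `FAQPage` object. The sketch below builds one from question and answer pairs; the helper name `faq_jsonld` is illustrative, and the sample question reuses the contact-rate header suggested earlier with a placeholder answer.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder content; replace with the visible Q&A text from the page.
faq = faq_jsonld([
    (
        "What contact rates should buyers expect?",
        "Placeholder answer: state your measured contact rate and the period it covers.",
    ),
])
print(faq)
```

The question and answer text in the markup should match what is visible on the page, so generating both from the same source data avoids drift.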

Week 5-6: Technical Implementation

llms.txt deployment:

- Create llms.txt at root directory

- Define content hierarchy (crawl permissions stay in robots.txt)

- Create extended llms-full.txt for comprehensive access

- Monitor crawler access logs
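The llms.txt proposal describes a plain markdown file: an H1 with the site name, a blockquote summary, then H2 sections containing link lists. The sketch below renders that layout from structured data. The site name, URLs, and notes are placeholders, and whether any given AI crawler reads the file is not something this example can guarantee.

```python
def build_llms_txt(site_name, summary, sections):
    """Render llms.txt markdown.

    sections maps a section title to a list of (title, url, note) tuples.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder site data for a hypothetical lead generation company.
llms_txt = build_llms_txt(
    site_name="Example Leads",
    summary="Mortgage lead generation guides, benchmarks, and compliance references.",
    sections={
        "Guides": [
            ("Ping/post guide", "https://www.example.com/ping-post", "How ping/post distribution works"),
        ],
        "Compliance": [
            ("TCPA overview", "https://www.example.com/tcpa", "Consent and documentation requirements"),
        ],
    },
)
print(llms_txt)
```

Write the result to `/llms.txt` at the site root. Keeping the link list short and curated is the design intent: the file is an index of priority content, not a full sitemap.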

Schema markup expansion:

- Implement Organization schema with complete properties

- Add Article schema to blog content

- Deploy FAQPage schema where appropriate

- Validate with Google Rich Results Test

Week 7-8: Authority Building

Citation source development:

- Identify industry publications accepting contributed content

- Pitch content to platform vendor blogs

- Participate in industry forums and discussions

- Seek speaking opportunities at industry events

Content freshness protocol:

- Establish quarterly update schedule for key content

- Add “last updated” dates to articles

- Refresh statistics and benchmarks with current data

- Document update history
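A quarterly update schedule is easy to enforce with a small staleness report. The sketch below assumes you can export each page's last-updated date (from a CMS or sitemap) and treats "quarterly" as 90 days; both the threshold and the sample paths are illustrative.

```python
from datetime import date, timedelta


def stale_pages(last_updated, today, max_age_days=90):
    """Return URLs whose last update is older than max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [(updated, url) for url, updated in last_updated.items() if updated < cutoff]
    return [url for updated, url in sorted(stale)]


# Hypothetical pages and last-updated dates.
pages = {
    "/tcpa-guide": date(2025, 1, 10),
    "/cpl-benchmarks": date(2025, 5, 2),
    "/ping-post": date(2024, 11, 20),
}
print(stale_pages(pages, today=date(2025, 6, 1)))
```

Passing `today` explicitly rather than calling `date.today()` inside the function keeps the report reproducible and easy to test.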

Key Takeaways

- Citation frequency is replacing search ranking as the primary visibility metric. AI platforms drive 527% traffic growth while traditional search declines. The companies being cited – not ranked – capture this traffic.

- 25% of ChatGPT’s most-cited URLs have zero Google visibility. Traditional SEO and LLMO optimize for different signals. Success in one doesn’t guarantee success in the other.

- AI search visitors convert 4.4x better than traditional organic. The traffic quality from AI citations exceeds traditional search because users arrive with refined intent.

- Content structure determines citation probability. 80% of cited articles include lists. Sequential heading hierarchy gets cited 3x more. Question-based headers with 40-60 word answers optimize for extraction.

- Entity clarity and E-E-A-T signals drive AI trust. Consistent, specific descriptions of your business, credentials, and expertise enable language models to recognize authority.

- Measurement is emerging but actionable. Tools like LLMO Metrics, Peec AI, and Semrush provide citation tracking. Proxy metrics (brand search, direct traffic, manual testing) supplement formal measurement.

- Implementation follows a structured sequence. Entity definition, content restructuring, technical implementation, and authority building require coordinated effort over 6-8 weeks.

- Early movers have disproportionate advantage. IDC forecasts companies will spend 5x more on LLMO than SEO by 2029. The window for establishing citation authority before competition intensifies is narrowing.

Frequently Asked Questions

What is LLMO and how is it different from SEO?

LLMO – Large Language Model Optimization – focuses on getting your content cited by AI systems like ChatGPT, Claude, and Perplexity. Traditional SEO optimizes for search engine rankings. The key difference: SEO helps you get found in search results, LLMO helps you get mentioned in AI-generated answers. These require different strategies because AI systems evaluate authority, entity clarity, and content structure differently than search algorithms.

Does LLMO replace traditional SEO for lead generators?

No. LLMO and SEO are complementary strategies serving different discovery channels. SEO captures users actively searching with commercial intent. LLMO captures users asking AI assistants for recommendations and information. Most lead generation companies should pursue both, with resource allocation shifting toward LLMO as AI traffic share grows.

How long before LLMO efforts show results?

Citation improvements can appear within 2-4 weeks for established brands implementing structural changes. New domains typically see meaningful citation frequency after 3-6 months. Unlike SEO, where ranking improvements compound slowly, LLMO gains can be rapid once AI systems recognize your authority signals.

Which AI platform should lead generators prioritize?

Prioritize based on your audience’s behavior. ChatGPT holds 77.97% market share, making it important by volume. But Claude users generate $4.56 average session value – highest across platforms – reflecting a technical, decision-making audience valuable for B2B lead generation. Perplexity provides measurable referral traffic and transparent citations. Test queries on each platform to understand where your content currently appears.

What content structure gets cited most often?

Three patterns correlate with high citation frequency: (1) question-based headers matching how users ask questions, (2) 40-60 word answer openers providing direct, extractable responses, and (3) list sections enabling clean content extraction. 80% of ChatGPT-cited articles include lists, versus 28.6% of Google top results, and cited pages average 14 list sections.

How does E-E-A-T apply to LLMO for lead generation?

E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) matters more for LLMO than traditional SEO. Language models scan your entire digital presence for consistency. Experience means showing real campaign data and operational specifics. Expertise appears through technical depth and audience-appropriate specificity – match your terminology to your reader’s context. Authoritativeness comes from external citations and recognition. Trustworthiness requires documented compliance processes – critical for lead generation’s YMYL classification.

What is llms.txt and should lead generators implement it?

llms.txt is a proposed web standard: a markdown file at the site root that gives AI systems a curated index of your most important content. It does not set access permissions; robots.txt does that. Over 600 sites have adopted it, including Stripe, Cloudflare, and Anthropic. Vercel reports 10% of signups now come from ChatGPT after AI optimization. Lead generators should implement llms.txt to define content hierarchy and help AI crawlers reach their most important content efficiently.

How do I track AI citations for my lead generation content?

Several tools now provide citation tracking: LLMO Metrics (EUR 80/month), Peec AI (EUR 89/month), Semrush AI SEO Toolkit, and SE Ranking AI Search Toolkit ($89/month). Beyond tools, monitor proxy metrics: brand search volume trends, direct traffic changes, and manual testing of queries on ChatGPT, Claude, and Perplexity.

Why do some pages with low Google rankings get cited by AI?

AI systems evaluate different signals than search engines. A page might rank poorly due to limited backlinks but get cited because it has clear entity definitions, strong author credentials, specific data, and extractable content structure. The 25% of ChatGPT’s most-cited URLs with zero Google visibility demonstrate that authority for AI and authority for search are measured differently.

What technical requirements do AI crawlers have?

AI crawlers require fast page loads (TTFB under 200ms, LCP under 2.5s), server-side rendered content (most AI crawlers don’t execute JavaScript), and clear content structure. Key crawler user agents include GPTBot and OAI-SearchBot (OpenAI), ClaudeBot (Anthropic), and PerplexityBot. Ensure robots.txt allows these crawlers access to important content.
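Whether robots.txt actually admits these crawlers can be tested offline with Python's standard `urllib.robotparser`. The sketch below parses a robots.txt string and reports access for the user agents named above; the sample rules and URL are hypothetical, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# User agents named in the answer above.
AI_CRAWLERS = ["GPTBot", "OAI-SearchBot", "ClaudeBot", "PerplexityBot"]


def crawler_access(robots_txt: str, url: str) -> dict[str, bool]:
    """Report whether each AI crawler may fetch url under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_CRAWLERS}


# Hypothetical robots.txt: ClaudeBot is blocked entirely, GPTBot partially.
robots = """\
User-agent: GPTBot
Disallow: /private/

User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""
print(crawler_access(robots, "https://www.example.com/guides/tcpa"))
```

Running this against your live robots.txt for a handful of priority URLs is a quick way to catch a blanket `Disallow` that was added for a different bot.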

How does content freshness affect AI citations?

89.7% of ChatGPT’s most-cited pages were updated in 2025. Language models interpret freshness as a credibility signal, particularly for topics with rapidly changing information. Lead generation content about compliance requirements, CPL benchmarks, and platform features should be updated quarterly at minimum to maintain citation relevance.

What ROI can lead generators expect from LLMO investment?

Companies implementing LLMO strategies report 45% increases in citations and 60% more direct traffic from AI-generated responses. AI search visitors convert 4.4x better than traditional organic visitors. IDC projects companies will spend 5x more on LLMO than traditional SEO by 2029, reflecting expected ROI. The exact return depends on your vertical, content quality, and implementation thoroughness.

Should I optimize differently for ChatGPT versus Perplexity?

Yes. ChatGPT favors depth and comprehensive coverage, with inconsistent citation practices. Perplexity uses real-time retrieval with transparent, numbered citations and generates measurable referral traffic. Google AI Overviews pull 82% of citations from deep topic pages, not homepages. Optimize your cornerstone content for all platforms, but understand each platform’s citation patterns when evaluating results.

How does LLMO affect lead generation compliance content?

Compliance content has high LLMO potential because questions are specific, information changes with regulations, and authoritative sources are limited. Content about TCPA requirements, state mini-TCPA laws, CMS Medicare marketing rules, and consent documentation can earn citations by providing accurate, current, well-structured answers. Include citations to primary regulatory sources to strengthen authority signals.

What’s the relationship between schema markup and LLMO?

Schema markup helps both search engines and language models understand your content structure. Microsoft confirmed in March 2025 that schema helps LLMs understand content. Pages with proper schema are reported to be over 36% more likely to earn AI citations. Implement Organization schema for entity clarity, Article schema for content pages, FAQPage schema for question-answer content, and Person schema for author credentialing.