By 2030, AI agents could handle trillions of dollars in commerce without human intervention. The lead form you spent years perfecting may be bypassed entirely by algorithms querying APIs. Here is how to prepare for machine-to-machine lead buying before your competitors do.

The lead generation industry has operated on a consistent model for two decades: humans fill out forms, lead distribution systems route data, sales teams make contact, deals close. Every optimization effort targets this human-centered flow.

That model is about to encounter its most significant transformation since the industry’s creation.

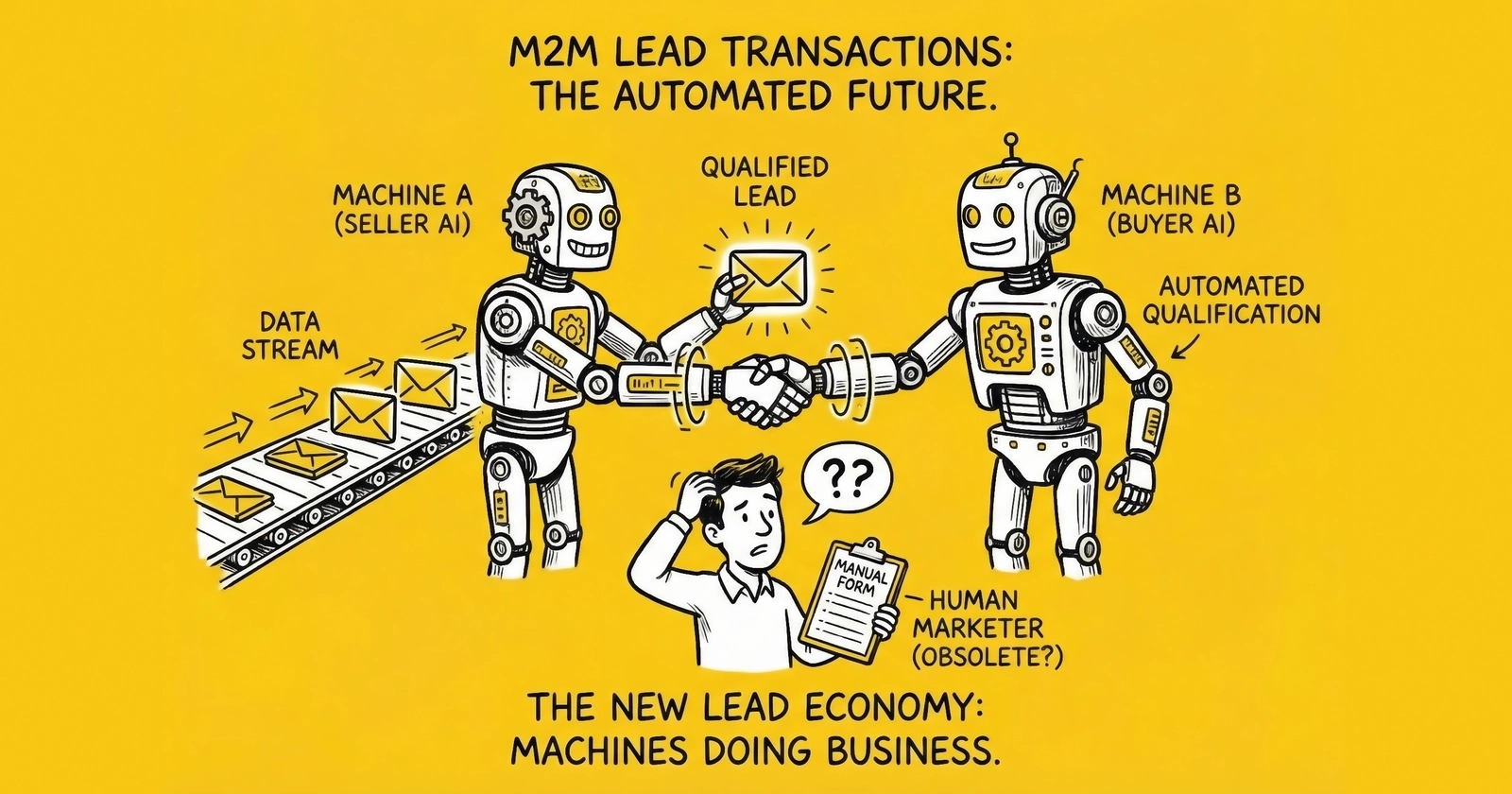

Machine-to-machine (M2M) lead transactions represent a fundamental restructuring of how buyers discover, evaluate, and acquire leads. Instead of humans navigating landing pages and submitting forms, AI agents will query APIs directly, negotiate pricing algorithmically, and complete transactions without human intervention at each step.

McKinsey projects agentic commerce could reach $3-5 trillion globally by 2030, with the U.S. B2C market alone representing up to $1 trillion in orchestrated revenue. Adobe data shows AI-driven traffic to U.S. retail sites surged 4,700% year-over-year in July 2025. ChatGPT now exceeds 800 million weekly users, and major platforms have launched shopping integrations that enable AI agents to research, compare, and purchase without leaving conversation interfaces.

For lead generation operators, the implications are existential. The forms you have optimized, the landing pages you have tested, the qualification flows you have refined – these mechanisms assume human visitors navigating browser interfaces. When the “customer” becomes an AI agent acting on a human’s behalf, every assumption changes.

This guide covers the M2M transformation underway, the protocols enabling automated lead buying, how to build API-first infrastructure, the algorithmic bidding systems reshaping pricing, and the specific steps required to prepare your operation for the machine-to-machine future.

What Are Machine-to-Machine Lead Transactions?

Machine-to-machine lead transactions occur when AI systems negotiate, price, and execute lead purchases without direct human involvement in individual decisions. The human sets parameters – budget limits, quality thresholds, targeting criteria – and the algorithm handles everything else.

This is not a theoretical capability: much of the infrastructure is already operational.

The Current State of Automated Lead Buying

Ping-post lead distribution already operates in real-time auctions where leads sell in milliseconds. What changes with true M2M transactions is the removal of human touchpoints throughout the buying process.

Today’s lead buying follows a predictable sequence: a lead generator captures consumer information via form, and the lead distribution platform receives that data and “pings” potential buyers. Buyers respond with bids based on programmatic rules, the platform accepts the highest qualifying bid and “posts” full lead data, and that lead enters the buyer’s CRM for human follow-up. Finally, a human sales team contacts the lead and works toward conversion.

The M2M future compresses and automates additional steps in this chain. A consumer expresses intent to an AI assistant – “I need auto insurance quotes” – and the AI agent gathers parameters through conversation, collecting coverage needs, budget constraints, and vehicle information. The agent then queries lead marketplace APIs with structured parameters, while lead sellers’ AI systems evaluate the request and respond with offers. Buyer and seller agents negotiate price and terms algorithmically, and the transaction completes via API with no forms and no landing pages. Lead data flows directly into buyer systems, and in some cases, AI handles initial qualification before any human contact occurs.

The “lead” as traditionally understood – a form submission with consumer-entered data – may become optional. The lead becomes an API transaction containing structured parameters that an AI compiled from conversation.

Why M2M Transactions Are Inevitable

Three converging forces make machine-to-machine lead buying inevitable rather than speculative.

AI Capability Maturation

Large language models crossed the threshold from answering questions to executing tasks. OpenAI’s Operator, launched in January 2025, demonstrated practical autonomous task completion – browsing websites, filling forms, and executing transactions. ChatGPT’s shopping integrations with Shopify and Etsy enable purchase completion without leaving the conversation interface.

The capability to navigate commerce autonomously exists. Application to lead generation follows naturally.

Protocol Infrastructure

The major technology companies have released competing standards for agent commerce. OpenAI’s Agentic Commerce Protocol (ACP), developed with Stripe, enables secure transactions within chat interfaces. Google’s Agent2Agent (A2A) Protocol enables autonomous agents to communicate and negotiate as peers. Anthropic’s Model Context Protocol (MCP) standardizes how AI systems access external resources and execute tasks.

These protocols solve the technical problems of agent authentication, transaction security, and cross-platform communication. The infrastructure to support M2M commerce is being built now.

Payment Integration

Visa, Mastercard, PayPal, and Stripe have invested in agent payment capability. The technical challenges – authentication, authorization, fraud prevention, chargebacks – have solutions in production or advanced development.

When an AI agent can authenticate its authority to transact, verify payment authorization, complete purchases, and handle disputes, the final barrier to automated commerce falls.

The API Economy for Lead Generation

Machine-to-machine transactions require APIs – application programming interfaces that enable software systems to communicate without human intermediation. For lead generation, this means restructuring how leads are created, distributed, and purchased.

From Form-Based Capture to API-First Architecture

Traditional lead generation architecture assumes human interaction:

Human visits landing page

→ Human reads content and evaluates offer

→ Human fills out form

→ Form submission creates lead record

→ Lead enters distribution system

→ Buyers receive and respond to leads

API-first architecture assumes machine interaction:

AI agent receives human intent

→ Agent queries lead marketplace API

→ API returns available options with pricing

→ Agent evaluates options against criteria

→ Agent selects optimal match

→ Transaction completes via API

→ Lead data flows to buyer system

The capture moment migrates upstream. Instead of optimizing landing page conversion, you optimize API response quality and speed. Instead of persuading humans with emotional copy, you satisfy algorithmic selection criteria with structured data.
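The agent-side half of this flow (evaluate options against criteria, select the optimal match) can be sketched as a filter-then-rank step. This is a minimal illustration rather than a protocol implementation: the `Offer` and `Criteria` fields and the quality-per-dollar ranking rule are hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Offer:
    # Hypothetical fields a lead marketplace API might return per option.
    seller_id: str
    price: float
    quality_score: float  # assumed 0.0-1.0 scale
    exclusive: bool


@dataclass
class Criteria:
    # Parameters the human delegated to the agent.
    max_price: float
    min_quality: float
    require_exclusive: bool = False


def select_offer(offers: list[Offer], criteria: Criteria) -> Optional[Offer]:
    """Drop offers that violate hard criteria, then rank the survivors."""
    eligible = [
        o for o in offers
        if o.price <= criteria.max_price
        and o.quality_score >= criteria.min_quality
        and (o.exclusive or not criteria.require_exclusive)
    ]
    if not eligible:
        return None  # nothing satisfies the human's constraints
    # Rank by quality per dollar; ties go to the cheaper offer.
    return max(eligible, key=lambda o: (o.quality_score / o.price, -o.price))
```

Hard constraints are applied before ranking, so an offer can never win on price while failing the quality threshold the human set; the ranking rule is the part different agents would most likely vary.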

Building API Infrastructure for Lead Operations

Lead generation businesses building for the M2M future need API capability across three functions.

Lead Intake APIs

For lead generators, intake APIs enable agents to submit leads programmatically. The agent collects consumer information through conversation and transmits it via API rather than form submission.

Effective intake APIs accept structured JSON payloads with consumer data and validate required fields in real-time, returning confirmation with lead ID and status. They must support consent documentation and certificate attachment while providing error handling with actionable messages that enable automated troubleshooting.
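As a minimal sketch of those intake requirements, the function below validates a JSON-style payload and returns either a lead ID or structured, machine-readable errors. The field names (including `consent_certificate_url`), the US-only phone rule, and the response shape are hypothetical assumptions, not any platform’s actual schema.

```python
import re
import uuid

# Hypothetical required fields; a real vertical would define its own list.
REQUIRED_FIELDS = ("first_name", "last_name", "email", "phone", "zip", "consent_certificate_url")

# Deliberately loose format check: it catches obvious typos, not deliverability.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_intake(payload: dict) -> dict:
    """Validate a submitted lead and return a response an agent can act on without a human."""
    errors = []
    for name in REQUIRED_FIELDS:
        if not payload.get(name):
            errors.append({"field": name, "code": "missing", "message": f"{name} is required"})

    email = payload.get("email")
    if email and not EMAIL_RE.match(email):
        errors.append({"field": "email", "code": "invalid_format",
                       "message": "email must look like name@domain.tld"})

    phone = payload.get("phone")
    if phone:
        digits = re.sub(r"\D", "", phone)
        if len(digits) == 11 and digits.startswith("1"):
            digits = digits[1:]  # assume a US number with a leading country code
        if len(digits) != 10:
            errors.append({"field": "phone", "code": "invalid_format",
                           "message": "phone must contain 10 digits (US numbers assumed)"})

    if errors:
        return {"status": "rejected", "errors": errors}
    return {"status": "accepted", "lead_id": str(uuid.uuid4())}
```

Returning a stable `code` per field, rather than free text alone, is what lets a submitting agent fix the payload and retry automatically.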

Lead Query APIs

For buyers and aggregators, query APIs enable agents to discover available inventory. Instead of reviewing dashboards, an AI agent queries available leads matching specific criteria.

Query APIs must accept filter parameters including geography, vertical, price range, and quality tier, returning matching leads with relevant attributes. They require real-time availability checking and must enable programmatic bidding on specific leads while providing pricing transparency that agents can evaluate.
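A query endpoint’s core can be sketched as a filter over live inventory. This is an in-memory illustration under stated assumptions: the `Lead` record, the bronze/silver/gold tier names, and cheapest-first ordering are hypothetical choices, and a production system would push these filters into a database query.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Lead:
    lead_id: str
    state: str
    vertical: str
    price: float
    quality_tier: str
    available: bool


# Hypothetical tier ordering used for "minimum tier" filtering.
TIER_RANK = {"bronze": 1, "silver": 2, "gold": 3}


def query_inventory(inventory, *, states: Optional[set] = None, vertical: Optional[str] = None,
                    min_price: float = 0.0, max_price: float = float("inf"),
                    min_tier: str = "bronze") -> list:
    """Return currently available leads matching every supplied filter, cheapest first."""
    floor = TIER_RANK[min_tier]
    matches = [
        lead for lead in inventory
        if lead.available  # real-time availability check
        and (states is None or lead.state in states)
        and (vertical is None or lead.vertical == vertical)
        and min_price <= lead.price <= max_price
        and TIER_RANK[lead.quality_tier] >= floor
    ]
    return sorted(matches, key=lambda lead: lead.price)
```

Because price is returned with each match, an agent can evaluate and bid on specific leads without a separate pricing call.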

Transaction APIs

Transaction APIs execute the purchase, handling payment, data transfer, and confirmation.

These APIs accept purchase requests with payment authorization and execute atomic transactions where the purchase either succeeds or fails completely – no partial states. Upon successful purchase, they return full lead data and generate and store consent and transaction documentation. Robust transaction APIs also support dispute and return workflows.
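The all-or-nothing property can be illustrated with a small in-memory store: every check runs before any state changes, and the mutations happen together under one lock. This is a single-process sketch with hypothetical class and field names; a real system would get the same guarantee from a database transaction.

```python
import threading
import uuid


class LeadUnavailable(Exception):
    pass


class InsufficientFunds(Exception):
    pass


class LeadStore:
    """In-memory stand-in for lead inventory plus prepaid buyer balances."""

    def __init__(self, leads: dict, balances: dict):
        self._leads = leads        # lead_id -> {"price": float, "data": dict, "sold_to": buyer_id or None}
        self._balances = balances  # buyer_id -> prepaid balance in USD
        self._lock = threading.Lock()
        self.ledger = []           # transaction records kept for audit and disputes

    def purchase(self, buyer_id: str, lead_id: str) -> dict:
        """Sell one lead atomically: either every change applies or none does."""
        with self._lock:
            lead = self._leads.get(lead_id)
            if lead is None or lead["sold_to"] is not None:
                raise LeadUnavailable(lead_id)
            balance = self._balances.get(buyer_id, 0.0)
            if balance < lead["price"]:
                raise InsufficientFunds(buyer_id)
            # All checks passed; apply the debit, the sale, and the ledger entry together.
            self._balances[buyer_id] = balance - lead["price"]
            lead["sold_to"] = buyer_id
            txn = {"txn_id": str(uuid.uuid4()), "buyer_id": buyer_id,
                   "lead_id": lead_id, "price": lead["price"]}
            self.ledger.append(txn)
            return {"transaction": txn, "lead_data": dict(lead["data"])}
```

A failed purchase raises before anything is written, so there is no state in which the buyer is charged but the lead is unsold, or vice versa.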

Current API Capabilities in Lead Distribution

Major lead distribution platforms already offer API capabilities that form the foundation for M2M transactions.

boberdoo provides APIs for lead submission, distribution, and reporting, with their system supporting programmatic lead routing and automated buyer bidding based on predefined rules. LeadsPedia offers APIs for lead posting, real-time validation, and distribution automation, enabling programmatic campaign management and automated quality scoring. Phonexa provides APIs for call tracking, lead management, and attribution, supporting server-side tracking and programmatic lead routing.

These platforms were built for human-configured automation – rules set by operators, executed by systems. The evolution toward full M2M adds AI agents as both buyers and sellers, negotiating and transacting without human involvement in individual decisions.

Algorithmic Bidding: How Machines Price Leads

Real-time bidding for leads is not new. Ping-post systems have executed auctions in milliseconds for over a decade. What changes with M2M is the sophistication of bidding logic and the removal of human oversight from individual pricing decisions.

How Algorithmic Lead Bidding Works

Current lead pricing typically follows rules set by humans – “bid $45 for California auto insurance leads with drivers aged 25-45,” or “bid $80 for exclusive mortgage leads with 700+ credit score,” with caps like “do not bid more than $60 regardless of other factors.”
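For contrast with the algorithmic approach, the human-authored rules quoted above can be expressed as a small rule table. This sketch is illustrative only: the lead dictionary keys, first-match semantics, and applying the $60 cap to the auto vertical alone are assumptions.

```python
from typing import Optional

# Hypothetical rule table mirroring the examples in the text: (label, predicate, bid in USD).
RULES = [
    ("exclusive mortgage, 700+ credit",
     lambda lead: lead.get("vertical") == "mortgage"
     and lead.get("exclusive", False)
     and lead.get("credit_score", 0) >= 700,
     80.0),
    ("California auto, drivers 25-45",
     lambda lead: lead.get("vertical") == "auto"
     and lead.get("state") == "CA"
     and 25 <= lead.get("age", 0) <= 45,
     45.0),
]

# Assumed per-vertical ceilings; here the $60 cap applies to auto only.
BID_CAPS = {"auto": 60.0}


def rule_based_bid(lead: dict) -> Optional[float]:
    """Return the first matching rule's bid, clipped to the vertical's cap; None means pass."""
    for _label, matches, bid in RULES:
        if matches(lead):
            cap = BID_CAPS.get(lead.get("vertical"), float("inf"))
            return min(bid, cap)
    return None
```

Every lead that matches a rule gets the same price, regardless of how likely it is to convert; that limitation is what value-based bidding addresses.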

Algorithmic bidding learns optimal pricing from outcomes. The system observes which leads convert at what rates and calculates true value by lead attributes. It adjusts bids in real-time based on predicted value while incorporating market conditions such as competitor behavior and inventory levels. Rather than adhering to arbitrary bid limits, the system optimizes toward revenue or margin targets.

The algorithm processes more variables simultaneously than human operators can manage. A sophisticated bidding system might evaluate hundreds of signals – consumer demographics, time of day, device type, traffic source, historical conversion rates of similar leads, current inventory levels, competitor bidding patterns – and produce an optimal bid in milliseconds.

Value-Based Bidding Models

The shift from rule-based to algorithmic bidding enables value-based pricing models where bid amounts reflect predicted lead value rather than category averages.

Traditional category-based pricing treats all leads within a segment identically: all auto insurance leads in California bid at $45, all mortgage leads with 700+ credit bid at $75. Pricing varies by broad category, not individual lead quality.

Value-based algorithmic pricing evaluates each lead individually. Lead A – a California auto lead for a 28-year-old homeowner with 750 credit – might receive a bid of $67, while Lead B – also California auto but for a 42-year-old renter with 680 credit – might receive a bid of $38. Pricing reflects predicted conversion probability for the specific lead rather than category averages.

Value-based bidding requires feedback loops connecting lead acquisition to downstream conversion. When your system knows which leads actually convert – and at what revenue – it can calculate true lead value and bid accordingly.

Implementing Algorithmic Bidding

Lead buyers implementing algorithmic bidding need several components working together.

Data Infrastructure

Algorithmic bidding requires clean, connected data encompassing lead attributes captured at acquisition, contact and conversion outcomes tracked by lead, and revenue and margin calculated by lead source. Feedback loops must continuously update the bidding system with fresh outcome data. Without closed-loop data connecting acquisition to outcome, algorithmic bidding cannot learn optimal pricing.
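A minimal sketch of the closed loop: join acquisition records to downstream outcomes and summarize each source. The record shapes are hypothetical, and leads without feedback yet are counted as cost but not as conversions, which is one reasonable choice among several.

```python
from collections import defaultdict


def source_economics(acquisitions, outcomes: dict) -> dict:
    """Join acquisitions to outcomes and report per-source conversion, margin, and returns.

    acquisitions: iterable of {"lead_id": str, "source": str, "cost": float}
    outcomes: lead_id -> {"converted": bool, "revenue": float, "returned": bool}
    """
    stats = defaultdict(lambda: {"leads": 0, "cost": 0.0, "conversions": 0,
                                 "revenue": 0.0, "returns": 0})
    for acq in acquisitions:
        s = stats[acq["source"]]
        s["leads"] += 1
        s["cost"] += acq["cost"]
        outcome = outcomes.get(acq["lead_id"])
        if outcome is None:
            continue  # no downstream feedback yet: cost counted, conversion not
        s["conversions"] += int(outcome["converted"])
        s["revenue"] += outcome["revenue"]
        s["returns"] += int(outcome["returned"])

    return {
        source: {
            "conversion_rate": s["conversions"] / s["leads"],
            "revenue_per_lead": s["revenue"] / s["leads"],
            "margin": s["revenue"] - s["cost"],
            "return_rate": s["returns"] / s["leads"],
        }
        for source, s in stats.items()
    }
```

Rerunning this as outcomes arrive is the simplest form of the feedback loop: the bidding system reads fresh per-source economics instead of last quarter’s assumptions.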

Prediction Models

The bidding system needs models predicting lead value across multiple dimensions. Conversion probability models determine which leads close. Revenue prediction models forecast at what value those conversions occur. Margin models account for acquisition cost, processing cost, and return risk. These models train on historical data and update as new conversions occur.
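As one concrete example of a conversion probability model, the sketch below fits a plain logistic regression with batch gradient descent in pure Python. The choice of logistic regression, the two features (a homeowner flag and a credit score scaled as (score - 650) / 100), and the hyperparameters are assumptions for illustration; production systems typically use a library and many more signals.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr: float = 0.1, epochs: int = 2000):
    """Fit logistic-regression weights with batch gradient descent (no regularization)."""
    n, n_features = len(X), len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Gradient of log loss: (predicted probability - label) * feature.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x) -> float:
    """Predicted probability that a lead with features x converts."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical history: [homeowner, (credit - 650) / 100] -> converted (1) or not (0).
X = [[1, 1.0], [1, 0.8], [1, 0.5], [0, 0.9], [0, 0.3], [0, -0.2], [0, 0.1], [1, -0.1]]
y = [1, 1, 1, 1, 0, 0, 0, 0]
weights, bias = train_logistic(X, y)
```

Retraining on the growing outcome history is how such a model “updates as new conversions occur”; revenue and margin models would be trained alongside it on the same joined data.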

Bidding Logic

The system translates predicted value into a bid in four steps. It calculates expected value by multiplying conversion probability by expected revenue, determines the maximum bid that still meets the margin target, adjusts for market conditions and inventory needs, and then submits the bid within the latency budget, typically under 100 milliseconds.
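Those steps reduce to a few lines of arithmetic. In this sketch, the parameter names, treating the margin target as a fraction of expected value, the capacity factor that can only lower a bid, and the marketplace minimum are all hypothetical assumptions.

```python
from typing import Optional


def compute_bid(p_convert: float, expected_revenue: float, target_margin: float,
                capacity_factor: float = 1.0, min_bid: float = 0.0) -> Optional[float]:
    """Translate predicted lead value into a bid, or None to skip the lead.

    target_margin: share of expected value the buyer wants to keep (0.25 = 25%).
    capacity_factor: 0.0-1.0 scaling for sales-team capacity or inventory needs.
    min_bid: assumed marketplace minimum; below it, passing beats overpaying.
    """
    if not 0.0 <= target_margin < 1.0:
        raise ValueError("target_margin must be in [0, 1)")
    expected_value = p_convert * expected_revenue            # step 1: expected value
    max_bid = expected_value * (1.0 - target_margin)         # step 2: margin-preserving ceiling
    bid = max_bid * max(0.0, min(capacity_factor, 1.0))      # step 3: market adjustment, never above ceiling
    if bid < min_bid:
        return None
    return round(bid, 2)                                     # step 4: submit within latency budget
```

For example, a lead with a 15% predicted conversion rate and $600 expected revenue has an expected value of $90; with a 25% margin target the bid is $67.50, and halving capacity drops it to $33.75.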

Monitoring and Override

Even algorithmic systems require human oversight. Dashboard visibility into bidding behavior allows operators to understand system decisions. Alert systems flag anomalous patterns for investigation. Manual override capability handles strategic situations where automated decisions would be inappropriate. Regular model performance review ensures the system continues to optimize effectively.
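Alerting and override can be sketched as a thin layer between the bidding model and the exchange. The z-score rule over a rolling window, the thresholds, and the override dictionary are hypothetical choices; the point is that anomalies are flagged for a human while operator overrides always take precedence.

```python
import statistics
from collections import deque


class BidMonitor:
    """Flags bids far from recent history and applies operator overrides first."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0, min_history: int = 10):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history
        self.overrides = {}  # segment -> operator-set bid; None pauses bidding for that segment
        self.alerts = []     # (segment, proposed_bid, recent_mean) tuples for human review

    def review(self, segment: str, proposed_bid: float):
        """Return the bid to submit after override and anomaly checks."""
        if segment in self.overrides:
            return self.overrides[segment]  # manual override wins over the model
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0 and abs(proposed_bid - mean) / stdev > self.z_threshold:
                self.alerts.append((segment, proposed_bid, mean))  # flag, do not block
        self.history.append(proposed_bid)
        return proposed_bid
```

This version flags rather than blocks, matching the investigate-then-decide posture described above; a stricter operator might hold flagged bids until reviewed.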

The Competitive Dynamics of M2M Bidding

When multiple buyers deploy algorithmic bidding systems, competition intensifies. The buyer with better prediction models – more accurately identifying which leads will convert – can bid higher on valuable leads and lower on poor ones, achieving better unit economics than competitors using simpler rules.

This creates a competitive advantage cycle. Better data enables better predictions, which enable more efficient bidding, which captures more valuable leads, which generates more data – and the cycle repeats. The winners in M2M lead buying will be operators who invest in data infrastructure and prediction capability. The losers will be operators using static rules against algorithmic competitors.

Preparing Your Operation for M2M Transactions

The transformation timeline provides a window for preparation. Those who build M2M-ready infrastructure now will capture compounding advantages as adoption accelerates.

Immediate Actions (Now Through 2025)

Audit Your Data Architecture

Can AI agents access structured information about your offerings? Assess whether you have APIs that external systems can query and whether your lead data is stored in machine-readable formats. Determine if you can expose pricing, availability, and quality metrics programmatically and whether your consent documentation is accessible via API.

If your data only exists as rendered web pages or locked in proprietary systems, you are unprepared for agent queries.

Implement Schema.org Markup

The minimum viable preparation is comprehensive structured data on all key pages. Schema.org provides standardized formats that AI systems can parse.

Organization schema conveys company information, service areas, and contact details. Service schema describes offerings, pricing models, and capabilities. Product schema defines lead products, quality tiers, and pricing structures. FAQ schema presents common questions and answers that agents may query. Review schema provides customer feedback and ratings in machine-readable format.
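A minimal sketch of generating Organization and Service markup as JSON-LD, the format typically embedded in a page inside a `<script type="application/ld+json">` tag. The types and properties used (`Organization`, `Service`, `State`, `Offer`, `areaServed`, `serviceType`, `provider`, `offers`, `price`, `priceCurrency`) are standard Schema.org vocabulary; the company details and the single flat price are placeholder assumptions.

```python
import json


def build_jsonld(company: str, url: str, states: list[str], service_type: str, price: float) -> str:
    """Build a Schema.org JSON-LD graph linking an Organization to the Service it provides."""
    org_id = f"{url}#organization"  # stable identifier so the Service can reference the provider
    area = [{"@type": "State", "name": s} for s in states]
    graph = {
        "@context": "https://schema.org",
        "@graph": [
            {
                "@type": "Organization",
                "@id": org_id,
                "name": company,
                "url": url,
                "areaServed": area,
            },
            {
                "@type": "Service",
                "serviceType": service_type,
                "provider": {"@id": org_id},
                "areaServed": area,
                "offers": {
                    "@type": "Offer",
                    "price": f"{price:.2f}",
                    "priceCurrency": "USD",
                },
            },
        ],
    }
    return json.dumps(graph, indent=2)


markup = build_jsonld("Example Leads Inc.", "https://example.com", ["California", "Texas"],
                      "Auto insurance lead generation", 45)
```

Generating markup from the same data that feeds your pricing systems keeps what AI systems parse consistent with what your API would return.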

Even before agent protocols mature, structured data improves visibility in AI-generated answers and positions you for future integration.

Build First-Party Data Capabilities

M2M transactions depend on data you control. Capture and store click identifiers (gclid, fbclid, ttclid) server-side rather than relying on client-side cookies. Build first-party cookie infrastructure for identity persistence across sessions. Develop email-based identity resolution for cross-session tracking, and implement consent management that documents permissions programmatically.
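Server-side capture of click identifiers can be as simple as parsing the landing URL before any client script runs and attaching the IDs to a first-party session record. In this sketch the in-memory `store` and the `start_session` helper are hypothetical; the returned ID is what the server would set as its own first-party cookie.

```python
import secrets
from typing import Optional
from urllib.parse import parse_qs, urlparse

CLICK_ID_PARAMS = ("gclid", "fbclid", "ttclid")


def extract_click_ids(landing_url: str) -> dict:
    """Pull ad-platform click identifiers from the landing URL's query string."""
    query = parse_qs(urlparse(landing_url).query)
    return {p: query[p][0] for p in CLICK_ID_PARAMS if p in query}


def start_session(landing_url: str, store: dict, first_party_id: Optional[str] = None) -> str:
    """Attach click IDs to a first-party visitor record, creating the ID on first visit."""
    fpid = first_party_id or secrets.token_urlsafe(16)
    record = store.setdefault(fpid, {"click_ids": {}})
    record["click_ids"].update(extract_click_ids(landing_url))
    return fpid  # the server sets this as a first-party cookie value
```

Because the identifiers live in your own store rather than a third-party cookie, they survive browser tracking restrictions and can later travel with the lead as provenance data.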

First-party data survives browser restrictions that block third-party tracking. It also provides the foundation for API-based transactions where you must authenticate data provenance.

Monitor AI Visibility

Start tracking how your brand appears in AI-generated responses. Query major AI platforms – ChatGPT, Claude, Perplexity – about your vertical and document which competitors appear in AI recommendations. Note what structured data AI systems extract about your offerings and establish baseline metrics before optimization.

Understanding your current AI visibility tells you where to focus improvement efforts.

Medium-Term Preparation (2026-2027)

Develop API Capability

Build endpoints that can serve structured data about your products, services, pricing, and availability. Start with read-only endpoints that allow agents to query your information, then add authentication and rate limiting for security. Document your API for potential integrators and progress to transactional endpoints where agents can purchase leads.
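The authentication and rate-limiting layer for a read-only endpoint can be sketched framework-free. The token bucket is a standard rate-limiting technique; the API key table, per-partner limits, and response payload are hypothetical placeholders, and a real deployment would keep keys and bucket state in shared storage rather than module globals.

```python
import time


class TokenBucket:
    """Token bucket: refills `rate` tokens per second, allows bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


API_KEYS = {"demo-key-123": "partner-a"}  # hypothetical key -> integrator mapping
buckets: dict = {}


def handle_catalog_request(api_key: str) -> tuple[int, dict]:
    """Read-only handler: authenticate, rate-limit per partner, then serve structured data."""
    partner = API_KEYS.get(api_key)
    if partner is None:
        return 401, {"error": "invalid_api_key"}
    bucket = buckets.setdefault(partner, TokenBucket(rate=5.0, capacity=10))
    if not bucket.allow():
        return 429, {"error": "rate_limited", "retry_after_seconds": 1}
    return 200, {"products": [{"vertical": "auto", "states": ["CA"], "price": 45.0}]}
```

Machine-readable error codes (401, 429 with a retry hint) matter more here than for human users, because the caller is software deciding whether to retry.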

API development does not require building from scratch. Your existing lead distribution platform may offer API access that you can extend or expose.

Track Protocol Development

Monitor the evolution of agent commerce protocols. Watch OpenAI’s ACP for integration documentation and partner announcements. Track Google’s A2A for enterprise adoption and industry implementations. Follow Anthropic’s MCP resource connection specifications.

Understanding which protocols gain adoption in your vertical guides your integration strategy.

Experiment with Agent Interactions

As agent shopping tools become available, test how they evaluate your offerings. Use AI assistants to search for services in your vertical and note what information agents extract and present. Identify gaps in your data that disadvantage you and test your API responses with programmatic queries.

This testing reveals optimization opportunities before competitive pressure forces adaptation.

Develop Algorithmic Trust Strategy

Understand what signals AI agents use to evaluate trustworthiness: domain authority and backlink profiles, review sentiment and rating patterns, data completeness and accuracy, response time and availability, and historical transaction performance.

Build these signals systematically. The trust markers that persuade human visitors – testimonials, design quality – may differ from signals that satisfy algorithmic evaluation, such as structured data and verified credentials.

Strategic Positioning (2028 and Beyond)

Deploy Agent-Ready Infrastructure

Full integration with dominant agentic commerce protocols means building endpoints that agents can query, negotiate with, and transact through. Real-time pricing APIs must respond to market conditions dynamically. Automated negotiation logic handles agent requests without human intervention, and transaction completion occurs without manual touchpoints.
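Automated negotiation can take many forms; one of the simplest is an alternating-offers scheme where each side concedes a fixed fraction of the remaining gap toward its private limit each round. This sketch is a hypothetical illustration, not how any named protocol negotiates; the concession rate, round limit, and split-the-difference settlement are assumptions.

```python
from typing import Optional


def negotiate(seller_ask: float, seller_floor: float, buyer_open: float, buyer_ceiling: float,
              concession: float = 0.2, max_rounds: int = 10) -> Optional[float]:
    """Alternating-offers sketch: both sides move toward their limits until offers cross.

    Returns the agreed price, or None if the ranges never overlap or rounds run out.
    """
    if seller_floor > buyer_ceiling:
        return None  # no zone of possible agreement
    ask, bid = seller_ask, buyer_open
    for _ in range(max_rounds):
        if bid >= ask:
            return round((bid + ask) / 2, 2)  # settle at the midpoint of the crossed offers
        ask -= (ask - seller_floor) * concession   # seller concedes toward its floor
        bid += (buyer_ceiling - bid) * concession  # buyer concedes toward its ceiling
    return None
```

For example, a seller asking $60 with a $40 floor and a buyer opening at $30 with a $50 ceiling cross after a few rounds and settle at $45; neither agent ever reveals its limit directly.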

Develop Agent-Specific Optimization

Just as you currently A/B test for human conversion, you will need to optimize for agent selection. Test different data structures for agent preference and optimize response time and data completeness. Experiment with pricing and availability presentation and measure inclusion rate in agent consideration sets.

Maintain Human Capability

Not all commerce will shift to agents simultaneously. Different demographics adopt at different rates, and high-value complex transactions may retain human preference longer. Regulatory requirements may mandate human oversight in some verticals. Maintain capability across both agent and human channels throughout the transition.

The Transformation of Lead Quality Signals

Human buyers evaluate lead quality through brand familiarity, website professionalism, review sentiment, and social proof. These signals evolved to influence human psychology.

AI agents optimize for different criteria.

Quality Signals That Matter to Machines

Data Completeness

For human buyers, incomplete information prompts questions. For AI agents, missing data may mean exclusion from consideration.

Agents evaluating lead sources will check whether all standard fields are populated, whether contact information is validated and formatted consistently, whether geographic and demographic attributes are complete, and whether consent documentation is attached and verifiable. Leads with incomplete data get filtered before consideration.

Verifiable Credentials

Human buyers often skip verification – they trust the website or the referral. AI agents may enforce verification as selection criteria.

They will check whether the company is licensed in relevant states and whether certifications are current and verifiable. They will look for third-party sources confirming claimed capabilities and track records of successful transactions. Building verifiable credentials that AI systems can confirm programmatically becomes a competitive advantage.

Response Time and Availability

In human contexts, “fast response” means hours or a day. In agent contexts, response must be instantaneous.

API queries expect sub-second responses. Availability must be current, not last updated yesterday. Pricing must be real-time, not “call for quote.” Transaction completion must be immediate. Agents will not wait. Sources that cannot respond at machine speed become invisible.
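The “agents will not wait” dynamic is easy to see in code: an agent fans out to several sources concurrently and simply ignores whatever has not answered when its time budget expires. In this sketch, `fake_source` simulates seller APIs with `asyncio.sleep`, and the 0.3-second budget is an arbitrary assumption.

```python
import asyncio


async def fake_source(name: str, latency_s: float, price: float) -> dict:
    """Stand-in for a seller API call; network latency is simulated with asyncio.sleep."""
    await asyncio.sleep(latency_s)
    return {"source": name, "price": price}


async def gather_quotes(calls, budget_s: float = 0.5) -> list:
    """Run all quote requests concurrently and keep only those finished within budget_s."""
    tasks = [asyncio.create_task(call) for call in calls]
    done, pending = await asyncio.wait(tasks, timeout=budget_s)
    for task in pending:
        task.cancel()  # slow sources drop out of the consideration set
    await asyncio.gather(*pending, return_exceptions=True)  # let cancellations finish cleanly
    quotes = [t.result() for t in done if t.exception() is None]
    return sorted(quotes, key=lambda q: q["price"])


quotes = asyncio.run(gather_quotes(
    [fake_source("fast", 0.01, 50.0), fake_source("slow", 1.0, 30.0), fake_source("ok", 0.05, 45.0)],
    budget_s=0.3,
))
```

The slow source offers the lowest price yet never appears in the results: being cheapest is irrelevant if the response arrives after the decision is made.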

Historical Performance Data

AI systems can process historical performance to inform selection. They evaluate the source’s conversion rate by lead type, return rate and dispute history, consistency of quality over time, and what other buyers report about the source. Performance data that would overwhelm human analysis becomes selection criteria for algorithms.

Quality Signals That Diminish in Importance

Brand Recognition

Brand familiarity matters to humans because it creates trust shortcuts. AI agents can evaluate trust through data rather than brand sentiment. A well-structured offering from an unknown source may outperform a poorly-documented offering from a recognized brand.

Visual Design

Website design influences human perception of credibility. AI agents do not see your website – they query your API. Design investment shifts from visual presentation to data architecture.

Testimonials and Social Proof

Humans are persuaded by social proof. AI agents value testimonials only if structured as verifiable review data with provenance. Anonymous testimonials carry no weight algorithmically.

Persuasive Copy

The emotional appeals that persuade humans – urgency, fear of missing out, aspirational messaging – do not affect algorithms. Selection criteria become explicit and logical.

Compliance and Legal Considerations for M2M

Machine-to-machine transactions raise new compliance questions that operators must address.

Consent in Agent-Mediated Transactions

TCPA consent requirements do not change because an AI agent initiates the transaction. Before receiving autodialed or prerecorded telemarketing calls or texts, the human whose information is being shared must have provided prior express written consent.

Agent Authority

When an AI agent acts on a human’s behalf, what authority has the human granted? Can an agent provide consent for future contact?

The emerging answer: agents operate within authorization boundaries set by humans. The human grants permission for the agent to share certain information with certain parties. The agent cannot expand those permissions.
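Authorization boundaries can be modeled as an immutable grant that the agent checks before every share. This is a hypothetical sketch: the `Grant` structure, field names, and recipient IDs are assumptions, and the frozen dataclass stands in for the principle that the agent cannot widen its own permissions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """Permissions a human delegated to an agent; frozen so the agent cannot modify them."""
    allowed_fields: frozenset
    allowed_recipients: frozenset


def share_with(grant: Grant, recipient: str, consumer_data: dict) -> dict:
    """Return only the fields the grant permits, or refuse if the recipient is not authorized."""
    if recipient not in grant.allowed_recipients:
        raise PermissionError(f"agent is not authorized to share with {recipient!r}")
    return {k: v for k, v in consumer_data.items() if k in grant.allowed_fields}


grant = Grant(
    allowed_fields=frozenset({"zip", "vehicle_year"}),
    allowed_recipients=frozenset({"insurer-a"}),
)
```

Fields outside the grant are silently dropped and unlisted recipients are refused outright, so expanding either requires a new grant from the human.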

Consent Documentation

M2M transactions need machine-readable consent records. The certificate documenting consumer consent, such as TrustedForm or Jornaya certificates, must be accessible via API, verifiable by receiving parties, and auditable for compliance review.

One-to-One Consent

Though the FCC’s one-to-one consent rule was vacated by the Eleventh Circuit in January 2025, many sophisticated buyers require consent specific to each seller. In M2M transactions, the agent should obtain or verify consent for each party receiving consumer data to satisfy buyer requirements and reduce litigation risk.

Data Privacy in API Transactions

CCPA, GDPR, and state privacy laws govern data sharing regardless of transaction mechanism.

API transactions must respect opt-out signals. Data minimization principles apply – share only necessary information. Consumer access and deletion requests must be honored, and cross-border transfers must comply with applicable frameworks.

The API infrastructure must include privacy controls: consent verification before data sharing, opt-out registry checking, data retention and deletion capabilities, and audit logging for compliance review.
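Those controls can be combined into one gate that every outbound share passes through. In this minimal sketch, the per-recipient consent list (which also reflects the one-to-one consent many buyers require), the opt-out registry keyed by a SHA-256 hash of the normalized email, and the audit record fields are all hypothetical design choices.

```python
import hashlib
import time


def pre_share_check(lead: dict, recipient: str, needed_fields: set,
                    opt_outs: set, audit_log: list):
    """Gate an API data share: opt-out check, per-recipient consent, minimization, audit."""
    consumer_key = hashlib.sha256(lead["email"].strip().lower().encode()).hexdigest()
    if consumer_key in opt_outs:
        decision, payload = "blocked_opt_out", None
    elif recipient not in lead.get("consented_recipients", ()):
        decision, payload = "blocked_no_consent", None
    else:
        decision = "shared"
        # Data minimization: send only the fields this recipient actually needs.
        payload = {k: lead[k] for k in needed_fields if k in lead}
    audit_log.append({
        "ts": time.time(),
        "consumer": consumer_key,  # hashed, so the log itself holds no raw email
        "recipient": recipient,
        "decision": decision,
        "fields": sorted(payload) if payload else [],
    })
    return payload
```

Logging blocked attempts as well as successful shares is what makes the trail useful in a compliance review.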

Liability in Automated Transactions

When an AI agent makes a poor decision, who bears responsibility?

The legal frameworks are still developing, but current guidance suggests the human who deployed the agent retains ultimate responsibility. Operators must maintain oversight and audit capabilities. Automated decisions must be explainable and reversible, and systems must have manual override capability.

Building M2M capability does not mean abandoning human oversight. It means structuring oversight at the system level rather than the transaction level.

Case Study: The Evolution of Ping-Post to M2M

Ping-post lead distribution provides a template for understanding how M2M transactions will develop.

How Ping-Post Evolved

Ping-post began as a simple optimization: instead of selling leads to a single buyer at a fixed price, publishers could auction each lead to the highest bidder.

Stage 1: Manual Configuration

Early ping-post required humans to configure buyer accounts and credentials, set bid amounts and acceptance criteria, monitor distribution and adjust rules, and reconcile transactions and payments. Automation handled routing; humans handled everything else.

Stage 2: Rule-Based Automation

Platforms added rule engines enabling automated bid selection based on configured priorities, automatic routing based on lead attributes, scheduled reports and alerts, and integration with CRM and sales systems. Human configuration was still required, but execution became automated.

Stage 3: Algorithmic Optimization

Advanced platforms introduced machine learning for bid optimization, predictive quality scoring, dynamic pricing based on market conditions, and automated A/B testing of routing rules. Humans set objectives; algorithms determined how to achieve them.

Stage 4: M2M Integration (Emerging)

The next evolution adds API endpoints for agent queries, automated negotiation with buyer agents, real-time pricing based on inventory and demand, and transaction completion without human touchpoints.

The progression from manual to automated to algorithmic to M2M follows a consistent pattern. Each stage removes human involvement from routine decisions while maintaining human oversight of strategy and exceptions.

Lessons for M2M Preparation

Operators who led in each ping-post evolution stage captured advantages. Early movers in real-time auctions accessed better buyers. Operators investing in automation achieved lower operational costs. Those deploying algorithmic optimization achieved better unit economics.

The pattern suggests that M2M preparation delivers similar advantages. Early movers in agent-ready infrastructure will capture opportunities that latecomers miss.

The Timeline for M2M Adoption

Based on current technology trajectories and industry projections, here is a realistic timeline for M2M lead transaction adoption.

2025-2026: Foundation Phase

During this phase, agentic commerce protocols (ACP, A2A, MCP) are gaining adoption in retail, and major platforms are testing agent shopping integrations. Lead distribution platforms are expanding API capabilities, and early experiments with agent-based lead queries are underway.

Operators should use this window to audit data architecture for machine readability, implement Schema.org markup, build first-party data infrastructure, and monitor protocol development and early implementations.

2026-2027: Early Adoption Phase

This phase sees agent commerce protocols mature with lead-specific extensions. The first lead exchanges deploy agent-ready endpoints, while sophisticated buyers test algorithmic bidding against agents. Industry standards for M2M lead transactions begin to emerge.

Operators should develop API capability for lead queries and transactions, test agent-compatible interfaces in sandbox environments, build algorithmic trust signals, and pilot M2M transactions with forward-looking partners.

2027-2028: Mainstream Integration Phase

Major lead buyers deploy agent purchasing at scale, and lead distribution platforms offer standard M2M connectivity. Industry benchmarks emerge for agent transaction performance, with early M2M adopters showing measurable competitive advantages.

Operators should deploy production M2M infrastructure, develop agent-specific optimization strategies, integrate algorithmic bidding with feedback loops, and scale M2M volume while maintaining human channels.

2029-2030: Market Penetration Phase

If McKinsey’s projections hold, agentic commerce reaches $3-5 trillion globally. M2M becomes a standard channel alongside human-facing interfaces, and operators without M2M capability face a significant disadvantage. New entrants build M2M-native operations from the start.

Operators should optimize M2M performance as the primary channel for some segments while maintaining human capability for segments with slower adoption. They should leverage M2M data for competitive intelligence and adapt strategy based on actual adoption patterns.

Frequently Asked Questions

What exactly is machine-to-machine (M2M) lead buying and how does it differ from current automated systems?

M2M lead buying occurs when AI systems negotiate, price, and execute lead purchases without direct human involvement in individual decisions. Current automated systems like ping-post still rely on humans to configure rules, set bid limits, and make strategic decisions. True M2M removes humans from the transaction loop entirely – the algorithm receives parameters (budget, quality criteria, targeting), evaluates available inventory, negotiates pricing, and completes purchases autonomously. The human sets strategy; the machine executes transactions.

When will M2M lead transactions become mainstream? Is this really happening soon?

The infrastructure is operational now. OpenAI launched shopping integrations in 2025, and Adobe reports 4,700% year-over-year growth in AI-driven retail traffic as of July 2025. For lead generation specifically, expect early adoption in 2026-2027, mainstream integration in 2027-2028, and market penetration by 2029-2030. The timeline is compressed because the underlying technology (AI agents, payment protocols, API infrastructure) already exists. The question is integration and adoption, not invention.

What happens to lead forms when AI agents buy leads directly?

Lead forms assume humans navigating browser interfaces. AI agents bypass forms, querying APIs directly with structured parameters. The “lead” transforms from a form submission to an API transaction. This does not mean forms disappear immediately – human channels will persist. But operators must build API infrastructure to capture the growing volume of agent-mediated traffic. Without API capability, you become invisible to agent queries.

How do I build API capability for M2M transactions if I am not technical?

Start with your existing lead distribution platform. Major platforms (boberdoo, LeadsPedia, Phonexa) offer API access that you can leverage. Contact your platform provider about exposing endpoints for external queries. For custom development, the investment is typically 2-4 weeks of developer time for basic API capability. The cost of implementation is modest compared to the risk of missing the M2M transition.

What is algorithmic bidding and why does it matter for lead buying?

Algorithmic bidding uses machine learning to set bid amounts based on predicted lead value rather than static rules. Instead of “bid $45 for all California auto leads,” the algorithm evaluates each lead individually – analyzing attributes, historical conversion patterns, and market conditions – to calculate optimal bid amounts. Buyers with better prediction models win more valuable leads at efficient prices. As M2M adoption increases, algorithmic bidding becomes table stakes for competitive lead buying.
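One common formulation is expected-value bidding, used here as an illustrative technique rather than any specific vendor's method. A model estimates the probability that a lead converts. The bid is that probability times the revenue per sale, less a target margin, and capped at a maximum. The Python sketch below uses a logistic model with hand-set, hypothetical weights and attributes. A real system would learn them from your own conversion history.

```python
import math
from dataclasses import dataclass

# Hypothetical, hand-set weights for illustration only. A production model
# would learn these from historical conversion outcomes.
WEIGHTS = {"intercept": -2.0, "homeowner": 0.8, "multi_vehicle": 0.5, "age_over_25": 0.4}


@dataclass
class AutoLead:
    homeowner: bool
    multi_vehicle: bool
    age_over_25: bool


def predict_conversion(lead: AutoLead) -> float:
    """Logistic-regression style estimate of the probability this lead converts."""
    z = (WEIGHTS["intercept"]
         + WEIGHTS["homeowner"] * lead.homeowner
         + WEIGHTS["multi_vehicle"] * lead.multi_vehicle
         + WEIGHTS["age_over_25"] * lead.age_over_25)
    return 1.0 / (1.0 + math.exp(-z))


def algorithmic_bid(lead: AutoLead, value_per_sale: float,
                    target_margin: float, max_bid: float) -> float:
    """Bid expected value (P(convert) x value per sale), less margin, capped at max_bid."""
    expected_value = predict_conversion(lead) * value_per_sale
    return round(min(expected_value * (1.0 - target_margin), max_bid), 2)
```

Assume $400 revenue per sale, a 30% margin, and a $90 cap. A lead with all three attributes bids the $90 cap, while a lead with none bids about $33. A flat "$45 for all California auto leads" rule would underbid the first and overpay for the second.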

How do consent and TCPA compliance work in M2M transactions?

TCPA requirements do not change because an AI agent initiates the transaction. The consumer whose information is shared must still have given prior express written consent before receiving autodialed or prerecorded marketing calls and texts. Although the Eleventh Circuit vacated the FCC's one-to-one consent rule in January 2025, many sophisticated buyers still require consent that names them specifically. In M2M contexts, consent documentation must be machine-readable, verifiable via API, and auditable. Your API infrastructure should verify consent before sharing any data.
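A pre-share consent check can return a machine-readable decision plus an audit entry. The minimal Python sketch below illustrates the mechanics only; it is not a statement of what the TCPA requires. The record fields and the 30-day `MAX_CONSENT_AGE` are hypothetical policy choices of the kind individual buyers impose. `named_companies` models buyer-required, company-specific consent.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical buyer policy; buyers set their own consent-age limits.
MAX_CONSENT_AGE = timedelta(days=30)


@dataclass(frozen=True)
class ConsentRecord:
    certificate_id: str             # e.g. an ID issued by a consent-capture vendor
    consented_at: datetime          # timezone-aware capture time
    named_companies: frozenset[str] # companies the consumer agreed to hear from
    disclosure_text: str            # the disclosure language the consumer saw


def verify_consent(record: ConsentRecord, buyer: str, now: datetime) -> dict:
    """Check consent before sharing data; return a decision and an audit entry."""
    reasons = []
    if buyer not in record.named_companies:
        reasons.append("buyer not named in consent")
    if now - record.consented_at > MAX_CONSENT_AGE:
        reasons.append("consent older than policy limit")
    if not record.disclosure_text.strip():
        reasons.append("no disclosure text on file")
    return {
        "approved": not reasons,
        "reasons": reasons,
        # Persist this entry so every share decision can be audited later.
        "audit": {
            "certificate_id": record.certificate_id,
            "buyer": buyer,
            "checked_at": now.isoformat(),
        },
    }
```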

What trust signals matter to AI agents evaluating lead sources?

AI agents weigh different criteria than human buyers do. Key signals include: data completeness (all fields populated and validated), verifiable credentials (licenses and certifications an agent can confirm), response time (sub-second API responses), historical performance (conversion rates and return history), and structured data quality (correct Schema.org markup). Traditional human trust signals like brand recognition and visual design carry less weight in algorithmic evaluation.
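Several of these signals can be measured directly. The minimal Python sketch below checks three of them the way an agent plausibly might: the share of records with every required field populated, the 95th-percentile API latency (nearest-rank method), and the return rate. The field list and all three thresholds are hypothetical. Real agents will apply their own.

```python
import math

# Hypothetical thresholds an evaluating agent might apply.
REQUIRED_FIELDS = ("name", "phone", "email", "zip_code")
MIN_COMPLETENESS = 0.95
MAX_P95_LATENCY_MS = 1000
MAX_RETURN_RATE = 0.10


def evaluate_source(records: list[dict], latencies_ms: list[float],
                    returned: int, delivered: int) -> dict:
    """Score a lead source on completeness, p95 response time, and return rate."""
    # A record counts as complete only if every required field is a non-blank string.
    complete = sum(
        all(isinstance(r.get(f), str) and r[f].strip() for f in REQUIRED_FIELDS)
        for r in records
    )
    completeness = complete / len(records) if records else 0.0
    if latencies_ms:
        ordered = sorted(latencies_ms)
        rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), nearest-rank
        p95 = ordered[rank - 1]
    else:
        p95 = math.inf
    return_rate = returned / delivered if delivered else 1.0
    return {
        "completeness": completeness,
        "p95_latency_ms": p95,
        "return_rate": return_rate,
        "passes": (completeness >= MIN_COMPLETENESS
                   and p95 <= MAX_P95_LATENCY_MS
                   and return_rate <= MAX_RETURN_RATE),
    }
```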

Will M2M transactions replace human-to-human lead buying entirely?

No. The transformation will be gradual and uneven. Different demographics adopt at different rates, high-value complex transactions may retain human preference, and regulatory requirements may mandate human oversight in some verticals. Operators should maintain capability across both agent and human channels. The winning strategy is “and” not “or” – building M2M capability while preserving human channels for segments that adopt more slowly.

What is the ROI on investing in M2M infrastructure now?

The ROI is difficult to quantify precisely because the market is emerging. However, the pattern from previous technology shifts suggests early movers capture compounding advantages. Practitioners who invested early in ping-post, mobile optimization, and server-side tracking gained positions that latecomers struggled to match. The cost of M2M preparation (API development, structured data implementation, algorithmic bidding) is modest compared to the risk of falling behind as adoption accelerates.

How should I prioritize M2M preparation against other business priorities?

Start with actions that deliver value regardless of M2M timing: Schema.org markup improves SEO and AI visibility today; first-party data infrastructure offsets the loss of third-party cookies in browsers that already block them; API capability enables better platform integrations. These investments pay off now while positioning you for M2M adoption. Avoid betting everything on M2M timeline predictions, but make sure you are not caught unprepared when adoption accelerates.
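Schema.org markup is usually embedded as JSON-LD in a `<script type="application/ld+json">` tag. The minimal Python sketch below generates `Organization` markup that lists licenses through `hasCredential` with `EducationalOccupationalCredential` entries, giving agents a structured way to read the credentials mentioned above. The company name, URL, and license number in the usage note are hypothetical placeholders. Validate the output with a structured-data testing tool before publishing.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def organization_jsonld(name: str, url: str, area_served: list[str],
                        licenses: list[tuple[str, str]]) -> dict:
    """Build Schema.org Organization markup with license credentials."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "areaServed": area_served,
        "hasCredential": [
            {
                "@type": "EducationalOccupationalCredential",
                "credentialCategory": "license",
                "name": license_name,
                "identifier": license_id,
            }
            for license_name, license_id in licenses
        ],
    }


def to_script_tag(data: dict) -> str:
    """Serialize markup into the script tag a page would embed."""
    return SCRIPT_OPEN + json.dumps(data, indent=2) + SCRIPT_CLOSE
```

For example, `organization_jsonld("Example Leads LLC", "https://example.com", ["CA", "TX"], [("California Insurance License", "0A00000")])` yields markup naming one state license. All of these values are illustrative placeholders.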

Key Takeaways

- Machine-to-machine lead transactions will reshape how leads are discovered, priced, and purchased. McKinsey projects agentic commerce reaching $3-5 trillion globally by 2030, with AI agents bypassing forms to query APIs directly.

- The infrastructure for M2M is operational now. OpenAI and Google have launched agent commerce protocols, and Anthropic's Model Context Protocol gives agents a standard way to connect to external tools and APIs. Major payment processors have built agent transaction capability. The question is adoption timing, not technical feasibility.

- API-first architecture becomes a competitive requirement. Without endpoints that agents can query, you become invisible to machine-mediated commerce. Start with read-only endpoints and progress to transactional capability.

- Algorithmic bidding replaces rule-based pricing. Buyers with better prediction models, the ones that accurately identify which leads convert, win more valuable leads at efficient prices. Invest in the data infrastructure and feedback loops that enable algorithmic optimization.

- Trust signals transform. Human buyers value brand recognition and visual design. AI agents evaluate data completeness, verifiable credentials, response time, and historical performance. Build the signals that matter to machines.

- Compliance requirements persist in M2M transactions. TCPA consent requirements, buyer-imposed one-to-one consent standards, and data privacy laws apply regardless of transaction mechanism. Your API infrastructure must include consent verification and audit capability.

- The timeline is compressed but not immediate. Expect a foundation phase through 2026, early adoption in 2026-2027, mainstream integration in 2027-2028, and broad market penetration by 2029-2030. Preparing now delivers a compounding advantage.

- Maintain both channels. M2M will not replace human channels immediately. Build agent capability while preserving human-facing interfaces for segments that adopt more slowly.

- The winners will be operators who prepare now. Early movers in previous technology shifts (ping-post, mobile, server-side tracking) captured advantages that latecomers struggled to match. The M2M transition follows the same pattern.

Statistics and projections current as of December 2025. Market conditions, technology capabilities, and adoption timelines continue to evolve.