How to measure what actually drives lead generation results, allocate budget based on reality rather than platform self-reporting, and build attribution infrastructure that survives the privacy-first era.

Attribution determines where your money goes.

Every dollar you spend on lead generation flows toward activities you believe produce results. But belief and reality often diverge. That Facebook prospecting campaign showing 400% ROAS in platform reporting might deliver 30% incremental lift when properly tested. The Google brand campaign claiming credit for 2,000 conversions might be capturing demand you would have won anyway. Meanwhile, the awareness campaign that looks expensive and ineffective might be the only thing creating the demand your closing channels harvest.

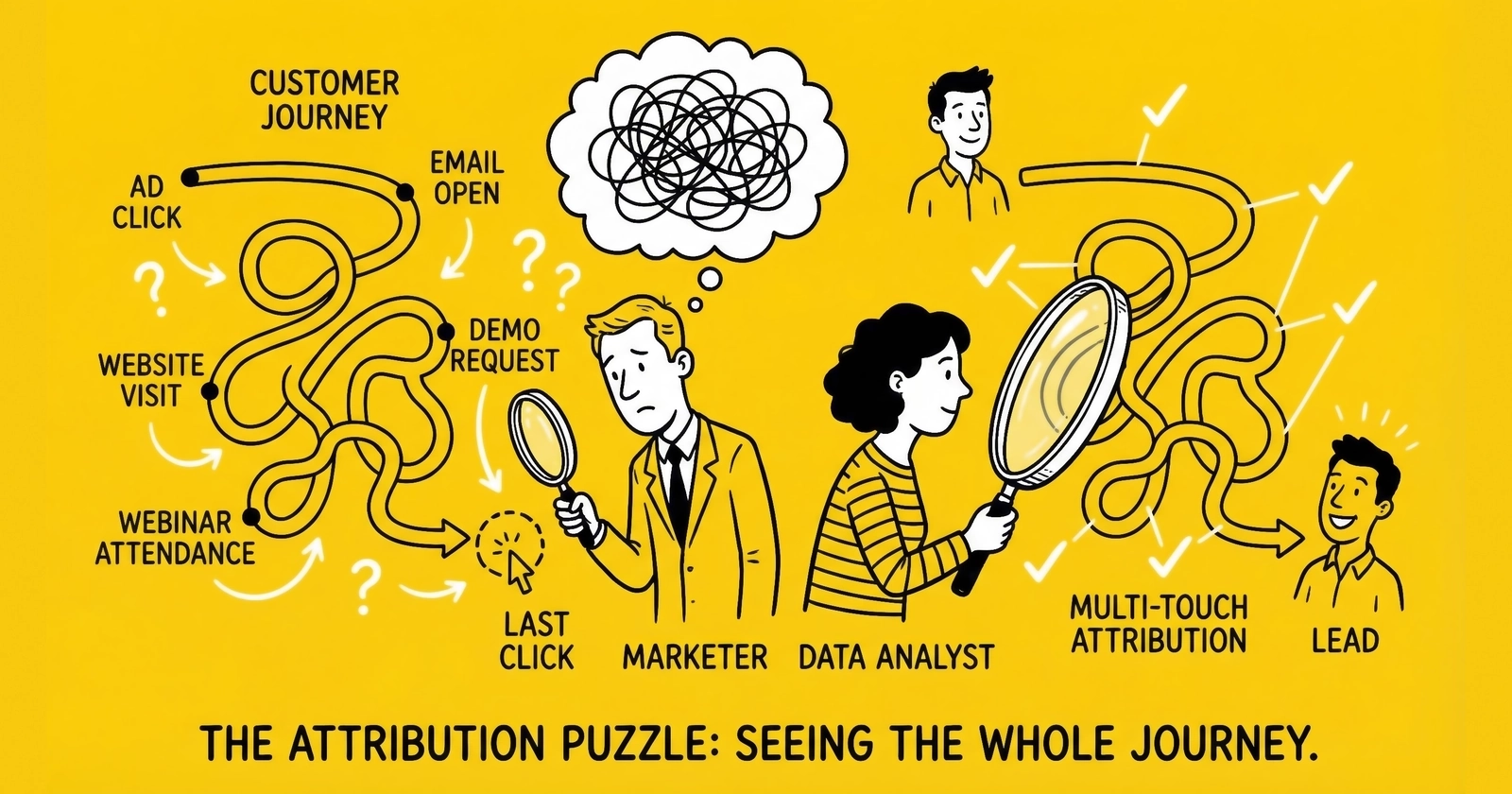

Multi-touch attribution models answer the fundamental question: which marketing touchpoints actually deserve credit for conversions? Get this wrong, and you systematically misallocate budget. You starve the activities that create demand while overfunding the ones that merely capture it. You celebrate vanity metrics while margin evaporates.

For lead generation businesses operating on 10-20% net margins, a 30-40% attribution gap does not just reduce visibility. It destroys the fundamental feedback loop that makes traffic arbitrage profitable. Those who master attribution build sustainable businesses. Those who trust surface metrics optimize their way into bankruptcy.

This guide covers the major attribution models, explains when each works best, reveals the implementation challenges that make accurate measurement genuinely difficult, and provides practical frameworks for choosing and implementing the right approach for your operation.

The math determines your margin. Let us get it right.

Why Attribution Matters for Lead Generation

Lead generation economics depend on traffic arbitrage. You buy clicks at one price and sell leads at another. Margin depends entirely on knowing true cost per lead by source. When browser restrictions cause 30-40% signal loss, you are optimizing traffic mix based on incomplete data, potentially funding unprofitable sources while starving profitable ones.

Consider an operation spending $100,000 monthly across five channels. Standard attribution reporting shows:

| Channel | Spend | Reported Conversions | Reported CPA | Reported ROAS |
|---|---|---|---|---|
| Facebook Prospecting | $25,000 | 400 | $62.50 | 180% |
| Google Non-Brand | $30,000 | 600 | $50.00 | 240% |
| Google Brand | $15,000 | 500 | $30.00 | 400% |
| Native Ads | $20,000 | 300 | $66.67 | 150% |
| Retargeting | $10,000 | 350 | $28.57 | 420% |
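The Reported CPA column is simply spend divided by the conversions each platform claims. The minimal Python sketch below recomputes it from the table as a sanity check; the dictionary layout is only an illustration.

```python
# Reported figures from the table above (platform self-reporting, not ground truth).
channels = {
    "Facebook Prospecting": (25_000, 400),
    "Google Non-Brand": (30_000, 600),
    "Google Brand": (15_000, 500),
    "Native Ads": (20_000, 300),
    "Retargeting": (10_000, 350),
}

def reported_cpa(spend: float, conversions: int) -> float:
    """Cost per acquisition as platforms report it: spend / claimed conversions."""
    return spend / conversions

for name, (spend, conv) in channels.items():
    print(f"{name:22s} CPA ${reported_cpa(spend, conv):,.2f}")

# Blended view across all channels.
total_spend = sum(spend for spend, _ in channels.values())
total_claimed = sum(conv for _, conv in channels.values())
print(f"Blended: ${total_spend:,} / {total_claimed} claimed conversions")
```

If several platforms claim the same lead, the summed conversions overstate reality and the blended CPA looks better than it is.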

Looking at these numbers, the obvious move is shifting budget from Facebook and Native to Retargeting and Brand, where efficiency appears highest.

This analysis contains a fundamental error. Retargeting and Brand capture demand that other channels created. Without Facebook prospecting introducing new consumers to your brand, there would be no one to retarget. Without non-brand search driving research-stage traffic, fewer consumers would search your brand name. The “efficient” channels are harvesting crops planted by the “inefficient” ones.

According to Forrester Research, companies using advanced attribution models achieve 15-30% improvement in marketing ROI through more effective budget allocation. That improvement represents real money. On a $1 million annual marketing spend, a 15-30% efficiency gain is worth roughly $150,000-$300,000 that would otherwise be misallocated.

The stakes extend beyond marketing efficiency – understanding your true cost per lead requires accurate attribution. Lead generation businesses that misattribute performance make systematic errors that compound over time. They cut awareness spending and wonder why retargeting audiences shrink. They double down on brand search and watch branded query volume decline. They celebrate short-term efficiency gains that erode long-term competitive position. Attribution accuracy determines not just campaign performance but business survival.

What Is Multi-Touch Attribution?

Attribution is the practice of assigning credit for conversions to the marketing touchpoints that influenced them. When a consumer fills out your lead form, attribution answers: which ads, content, emails, and interactions deserve credit for that conversion?

Multi-touch attribution distributes credit across all touchpoints in the customer journey, acknowledging that modern paths to conversion involve multiple interactions, each contributing something to the outcome. This contrasts with single-touch models like first-touch or last-touch that assign 100% credit to one interaction.

The challenge arises because modern customer journeys rarely follow simple linear paths. A typical lead generation conversion might unfold across six distinct touchpoints: the consumer sees a Facebook ad while scrolling but does not click; later they search “[problem] solutions” on Google and click your organic result; they read your blog post but leave without converting; the next day they see a retargeting ad on a news site; they search your brand name and click a paid ad; finally they fill out your lead form. Six interactions contributed to one conversion, and multi-touch attribution models determine how credit distributes across them.

Why Multi-Touch Matters for Lead Generation Specifically

Lead generation businesses face attribution challenges that differ meaningfully from ecommerce. The conversion event and the revenue event are disconnected. A lead form submission happens at moment A. The lead sale to a buyer happens at moment B. The buyer’s acceptance, meaning the lead is not returned, is confirmed at moment C. Value realization can span weeks, making it harder to connect marketing activity to actual revenue.

Lead quality varies independently of conversion. Two leads captured from identical ad sets might generate completely different outcomes. One sells for $50 exclusive while the other gets returned. Attribution that stops at form submission misses the quality dimension entirely. Multi-buyer distribution complicates revenue attribution further. A single lead might sell to three buyers for a total of $75. Which touchpoint gets credit for which portion of that revenue?
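One possible answer, assuming you already have per-touchpoint credit weights from whichever model you use, is to allocate each lead's realized revenue (what survives returns) in proportion to that credit. The sketch below is illustrative: `BuyerSale`, the buyer names, and the prices are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class BuyerSale:
    buyer: str
    price: float
    returned: bool = False  # a returned lead is refunded, so it earns nothing

def realized_revenue(sales: list[BuyerSale]) -> float:
    """Revenue that survives returns: the value attribution should ultimately explain."""
    return sum(s.price for s in sales if not s.returned)

def allocate_revenue(credit: dict[str, float], sales: list[BuyerSale]) -> dict[str, float]:
    """Split one lead's realized revenue across touchpoints in proportion to their credit."""
    total_credit = sum(credit.values())
    revenue = realized_revenue(sales)
    return {tp: revenue * w / total_credit for tp, w in credit.items()}

# Hypothetical lead sold to three buyers for $75 total; one buyer later returns it.
sales = [
    BuyerSale("buyer_a", 30.0),
    BuyerSale("buyer_b", 25.0),
    BuyerSale("buyer_c", 20.0, returned=True),
]
credit = {"facebook_ad": 0.4, "organic_search": 0.2, "brand_search": 0.4}
print(allocate_revenue(credit, sales))
```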

Consideration cycles compound these challenges. A solar lead typically involves 7-12 touchpoints over 2-3 weeks before form submission. A mortgage lead might research for 30-60 days across dozens of sessions. Insurance shoppers compare quotes across multiple sites and sessions before committing.

These complications make multi-touch attribution both more difficult and more important for lead generation. Single-touch models create dangerous distortions. Getting it wrong does not just misallocate marketing spend. It distorts your understanding of which leads actually make money.

Single-Touch Attribution Models: The Foundation

Before exploring multi-touch approaches, understanding single-touch models provides necessary context. Many operations still rely on these simpler models, and knowing their limitations explains why multi-touch matters.

First-Touch Attribution

First-touch attribution assigns 100% of conversion credit to the initial marketing interaction that introduced the consumer to your brand. If a consumer first encountered you through a Facebook ad, then visited via organic search, then converted through retargeting, first-touch gives all credit to Facebook. The logic holds that without that initial introduction, nothing else would have happened. First touch created the opportunity.

This model works well when measuring demand generation effectiveness. If your business depends on continuously reaching new consumers, first-touch reveals which channels successfully expand your addressable audience. It also suits short sales cycles where consumers convert within hours or days of first exposure, since first and last touch may be the same interaction anyway. Growth-stage businesses benefit from understanding what creates awareness and initial interest. Marketing teams struggling to justify awareness campaigns use first-touch to demonstrate that prospecting creates the opportunity for downstream conversion.

The limitations become apparent in longer consideration cycles. First-touch ignores nurture effectiveness entirely. A consumer who first saw your Facebook ad but needed six retargeting impressions and three emails before converting received no measured value from those touchpoints. The model overvalues broad reach channels that create many first touches but few conversions. In verticals like mortgage or solar where consumers research for weeks, first-touch attributes value to interactions so temporally distant from conversion that the causal link becomes questionable. Sophisticated ad platforms can game first-touch by engineering impressions. A consumer planning to search for insurance might see a display ad first because the platform predicted their intent.

Last-Touch Attribution

Last-touch attribution assigns 100% of conversion credit to the final marketing interaction before conversion. Using a similar journey, if a consumer first saw a Facebook ad, then engaged with organic content, then clicked a retargeting ad, then converted through brand search, last-touch gives all credit to the brand search campaign. Whatever happened last was decisive.

Last-touch is the default attribution model in most analytics and ad platforms because it is straightforward to measure. You simply look at the referrer or UTM source for the converting session. The model works for optimizing conversion efficiency when you have sufficient demand and need to maximize conversion rates on that demand. It identifies which channels close effectively. Short, impulse-driven cycles where decisions happen quickly with minimal research benefit from last-touch since the final touch genuinely represents most of the influence. Budget-constrained operations prioritizing proven converters over uncertain demand generators reduce risk with this approach. Last-touch also resembles how sales teams think about closing.

The model’s limitations can prove fatal. Last-touch credits harvesters while starving planters. Brand search and retargeting campaigns excel at last-touch because they reach consumers who already intend to convert. But that intent was created elsewhere. Overinvesting in harvesters while cutting planters eventually depletes the demand pool. The model cannibalizes organic demand by crediting ads for conversions that would have happened anyway. A consumer who would have typed your URL directly might click a branded paid ad first. It ignores assist value entirely – the retargeting impression that reminded someone to return, the email that educated them, the comparison content that positioned you favorably.

Consider this scenario: An operation cuts Facebook prospecting because it shows poor last-touch performance. Retargeting and brand search maintain strong numbers for two months as they harvest existing cookies and brand awareness. By month three, the audience pool is depleted, retargeting reach drops 40%, and brand search volume declines 25%. The “efficient” channels were parasites feeding on a host the operator killed.
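Both single-touch rules reduce to picking one element of a chronologically ordered journey. A minimal sketch, assuming the journey is already stitched into a list of channel labels (the labels are hypothetical):

```python
def first_touch(journey: list[str]) -> dict[str, float]:
    """All credit to the earliest touchpoint (journey is in chronological order)."""
    return {journey[0]: 1.0} if journey else {}

def last_touch(journey: list[str]) -> dict[str, float]:
    """All credit to the touchpoint immediately before conversion."""
    return {journey[-1]: 1.0} if journey else {}

journey = ["facebook_ad", "organic_search", "retargeting_ad", "brand_search"]
print(first_touch(journey))  # {'facebook_ad': 1.0}
print(last_touch(journey))   # {'brand_search': 1.0}
```

The same four-touch journey produces opposite conclusions: one model funds prospecting, the other funds brand search, and neither sees the two middle touches at all.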

Multi-Touch Attribution Models Explained

Multi-touch attribution distributes credit across all touchpoints in the customer journey. Several models exist, each with different distribution logic that suits different business contexts.

Linear Attribution

Linear attribution splits credit equally across all touchpoints. Five touches mean each receives 20% credit. Ten touches mean each receives 10% credit. Every touchpoint in the journey receives identical credit regardless of position, timing, or engagement depth. If a consumer saw a display ad, clicked a search ad, read a blog post, received an email, and converted through retargeting, each of these five touchpoints receives 20% of the conversion value.

The simplicity of linear attribution makes it easy to explain and implement. It ensures no touchpoint is completely ignored and provides a balanced view of full-funnel contribution. Calculations and reporting remain straightforward. The weakness lies in treating a fleeting impression the same as an engaged session. The model does not reflect reality where some interactions matter more than others. It may overvalue low-quality touchpoints that add little genuine influence and cannot distinguish between meaningful engagement and incidental exposure. Linear attribution suits operations wanting a simple step beyond single-touch without complex modeling, or where touchpoint quality remains relatively consistent throughout the journey.
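A minimal linear-attribution sketch, assuming a chronologically ordered list of channel labels. If a channel appears more than once, its equal shares accumulate.

```python
from collections import defaultdict

def linear(journey: list[str]) -> dict[str, float]:
    """Split credit equally across every touchpoint in the journey."""
    if not journey:
        return {}
    share = 1.0 / len(journey)
    credit: dict[str, float] = defaultdict(float)
    for channel in journey:
        credit[channel] += share  # repeated channels collect multiple shares
    return dict(credit)

# The five-touch journey described above: each touchpoint receives 20%.
journey = ["display", "search", "blog", "email", "retargeting"]
print(linear(journey))
```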

Time-Decay Attribution

Time-decay attribution weights recent touchpoints more heavily than earlier ones. Credit diminishes backward through the journey. The conversion-proximate touch might receive 40% credit, the one before it 25%, and so on until early touches receive minimal allocation.

A half-life parameter determines how quickly credit decays. With a 7-day half-life, a touchpoint that occurred 7 days before conversion receives half the credit of the final touchpoint. A touchpoint 14 days prior receives one-quarter, and the decay continues exponentially. This approach reflects recency bias in actual decision-making, acknowledging that later interactions often have more direct influence. It balances between first-touch and last-touch extremes while accounting for the temporal proximity of influence.

The model undervalues introduction and education touchpoints that may have been essential despite occurring early. Arbitrary decay rate selection affects results significantly – different half-life choices produce dramatically different credit distributions. Time-decay may discourage investment in upper-funnel content that creates the opportunity for later conversion. This model works best for businesses with short-to-medium consideration windows of 1-4 weeks where recent interactions genuinely drive final decisions.
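The half-life rule can be written as weight = 0.5 ** (days_before_conversion / half_life), normalized so credit sums to 1. A minimal sketch, assuming each touchpoint is recorded with its age in days at the moment of conversion:

```python
from collections import defaultdict

def time_decay(journey: list[tuple[str, float]], half_life_days: float = 7.0) -> dict[str, float]:
    """journey: (channel, days_before_conversion) pairs.

    Raw weight halves every `half_life_days`; weights are then normalized to sum to 1.
    """
    if not journey:
        return {}
    weights = [(ch, 0.5 ** (days / half_life_days)) for ch, days in journey]
    total = sum(w for _, w in weights)
    credit: dict[str, float] = defaultdict(float)
    for ch, w in weights:
        credit[ch] += w / total
    return dict(credit)

# Raw weights 1/4, 1/2, 1 before normalization.
journey = [("facebook", 14), ("search", 7), ("retargeting", 0)]
print(time_decay(journey))
```

With a 7-day half-life the search touch (7 days out) gets half the credit of the conversion-day touch and the 14-day touch gets a quarter, matching the description above. Rerunning with a different `half_life_days` is a quick way to see how sensitive the split is to that arbitrary choice.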

Position-Based (U-Shaped) Attribution

Position-based attribution emphasizes first and last touches, typically allocating 40% to each while spreading the remaining 20% across middle interactions. The first touchpoint representing introduction receives 40% credit. The final touchpoint representing conversion receives 40% credit. All middle touchpoints split the remaining 20% equally. In a five-touch journey, the first and last each get 40%, and the three middle touches each get approximately 6.7%.

This approach honors both introduction and conversion, acknowledging that beginning and ending the journey matter most. It remains simple to understand and explain while balancing demand generation and conversion efficiency perspectives. The weakness lies in the arbitrary 40/40/20 split that may not reflect actual influence in your specific business. Position-based attribution undervalues important middle-journey moments like product comparison and objection resolution. It treats all middle touches equally regardless of engagement depth and may not suit businesses where middle-funnel content proves critical to conversion. This model fits businesses where initial awareness and final conversion are clearly the most important moments, with relatively undifferentiated middle-journey content.
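A minimal position-based sketch with the 40/40/20 split. The handling of one- and two-touch journeys (100%, and 50/50) is an assumption of this sketch, since the split above is only defined when middle touches exist.

```python
from collections import defaultdict

def position_based(journey: list[str], first: float = 0.4, last: float = 0.4) -> dict[str, float]:
    """U-shaped credit: `first` to the opening touch, `last` to the closing touch,
    and the remainder split equally across middle touches."""
    n = len(journey)
    if n == 0:
        return {}
    if n == 1:
        weights = [1.0]
    elif n == 2:
        weights = [0.5, 0.5]  # assumption: no middle, so split evenly
    else:
        middle = (1.0 - first - last) / (n - 2)
        weights = [first] + [middle] * (n - 2) + [last]
    credit: dict[str, float] = defaultdict(float)
    for ch, w in zip(journey, weights):
        credit[ch] += w
    return dict(credit)

# Five touches: 40% / ~6.7% x 3 / 40%.
print(position_based(["display", "search", "blog", "email", "retargeting"]))
```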

W-Shaped Attribution

W-shaped attribution adds weight to the lead creation moment, distributing perhaps 30% to first touch, 30% to lead creation, 30% to conversion, and 10% across other touchpoints. This works well for B2B and longer cycles where the middle transition from anonymous visitor to identified lead represents a meaningful milestone.

Three key moments receive primary credit: initial awareness, lead capture when the prospect provides contact information, and final conversion. The remaining credit spreads across other touchpoints. This model explicitly recognizes that the moment someone becomes a known lead is strategically important. It captures the value of both awareness and activation touchpoints.

Implementation requires clear identification of the “lead creation” moment, making the model more complex to configure and explain. Like other rule-based models, it still applies predetermined weights that may not reflect actual influence. W-shaped attribution may not suit B2C with shorter cycles where the lead capture moment does not represent a distinct strategic milestone. This model fits B2B lead generation, longer sales cycles, and operations where the lead capture event is distinct from both first awareness and final conversion.
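A minimal W-shaped sketch with a 30/30/30/10 split, assuming you can supply the index of the lead-creation touchpoint. When key positions coincide (for example, the lead is created on the first touch) or no other touches exist, this sketch simply splits the key weight equally across the distinct key positions; that tie-breaking rule is an assumption, not a standard.

```python
from collections import defaultdict

def w_shaped(journey: list[str], lead_index: int, key_total: float = 0.9) -> dict[str, float]:
    """Credit first touch, lead creation, and conversion equally out of `key_total`;
    spread the rest across all other touchpoints."""
    n = len(journey)
    if not 0 <= lead_index < n:
        raise ValueError("lead_index must point at a touchpoint in the journey")
    key = {0, lead_index, n - 1}  # first touch, lead creation, conversion
    others = [i for i in range(n) if i not in key]
    if not others:
        key_total = 1.0  # nothing left to share the remainder with
    weights = {i: key_total / len(key) for i in key}
    for i in others:
        weights[i] = (1.0 - key_total) / len(others)
    credit: dict[str, float] = defaultdict(float)
    for i, w in weights.items():
        credit[journey[i]] += w
    return dict(credit)

# Hypothetical B2B path: the lead is created at the webinar signup (index 1).
print(w_shaped(["display", "webinar_signup", "email", "email", "demo_request"], lead_index=1))
```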

Custom Weighted Attribution

Custom weighted models allow you to define your own credit distribution based on business knowledge and historical analysis. You analyze historical conversion paths, conduct incrementality tests, and apply business judgment to assign weights to different touchpoint types, positions, or channels. For example, you might assign 35% to first touch, 20% to email engagement, 25% to product page views, and 20% to the converting session.

This approach reflects your specific business reality and can incorporate institutional knowledge about what actually matters in your funnel. It adapts to unique funnel structures and bridges the gap between rule-based and algorithmic models. The model requires significant analysis to develop and carries risk of bias in weight selection. Periodic recalibration keeps the weights aligned with evolving business dynamics. Custom weighted attribution suits operations with deep conversion path data and strong business insight that want more control than rule-based models offer but cannot yet implement algorithmic attribution.
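One way to encode the example weights (35% first touch, 20% email engagement, 25% product page views, 20% converting session) is to assign each touchpoint a role, split each role's weight across the touchpoints holding it, and renormalize over the roles actually present. The role names, the position-before-type precedence, and the renormalization rule are all assumptions of this sketch.

```python
from collections import defaultdict

# Hypothetical weights from the example above.
ROLE_WEIGHTS = {"first_touch": 0.35, "email": 0.20, "product_page": 0.25, "converting_session": 0.20}
TYPE_ROLES = {"email", "product_page"}  # roles assigned by touch type rather than position

def role_of(i: int, n: int, touch_type: str):
    """Position roles take precedence over type roles; unmatched middle touches get None."""
    if i == 0:
        return "first_touch"
    if i == n - 1:
        return "converting_session"
    return touch_type if touch_type in TYPE_ROLES else None

def custom_weighted(journey: list[tuple[str, str]], role_weights=ROLE_WEIGHTS) -> dict[str, float]:
    """journey: (channel, touch_type) pairs in chronological order."""
    n = len(journey)
    by_role: dict[str, list[str]] = defaultdict(list)
    for i, (channel, touch_type) in enumerate(journey):
        role = role_of(i, n, touch_type)
        if role is not None:
            by_role[role].append(channel)
    present = sum(role_weights[r] for r in by_role)  # renormalize over roles that occurred
    credit: dict[str, float] = defaultdict(float)
    for role, chans in by_role.items():
        for ch in chans:
            credit[ch] += role_weights[role] / present / len(chans)
    return dict(credit)

journey = [
    ("facebook", "ad_view"),
    ("newsletter", "email"),
    ("site", "product_page"),
    ("site", "product_page"),
    ("brand_search", "ad_click"),
]
print(custom_weighted(journey))
```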

Data-Driven (Algorithmic) Attribution

Algorithmic attribution uses machine learning to assign credit based on actual conversion patterns in your data. Rather than applying predetermined rules, these models analyze which touchpoint sequences correlate with conversions versus non-conversions.

Google’s data-driven attribution, available in Google Ads, examines your conversion paths to identify which interactions most influence outcomes. The algorithm compares paths that led to conversions with paths that did not, identifying which touchpoints and sequences appear disproportionately in successful journeys. Facebook offers similar algorithmic tools within its own ecosystem. These models need sufficient conversion volume (a common rule of thumb is 600 or more conversions per month), along with consistent tracking infrastructure across touchpoints, historical data spanning multiple months, and technical resources for implementation and interpretation.

Algorithmic attribution offers several advantages over rule-based approaches. Results derive from actual data rather than assumptions. The models can reveal counterintuitive patterns – that “weak” creative might actually be an essential assist. They adapt as your marketing mix changes and continuously improve with more data. The weaknesses include the significant data volume requirement, the “black box” nature that makes results hard to explain to stakeholders, and unpredictable shifts between measurement periods. These models still operate within each platform’s walled garden, limiting cross-channel visibility. Algorithmic attribution suits high-volume operations with mature tracking infrastructure seeking optimization beyond rule-based models.
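The platforms do not publish their data-driven algorithms, so the sketch below swaps in a different, openly documented technique: first-order Markov-chain attribution using removal effects. Like the approach described above, it learns from both converting and non-converting paths, but it is not what Google or Meta run. Each channel's removal effect is the relative drop in overall conversion probability when journeys that reach it are treated as failing; total conversions are then split in proportion to those effects. The path data is hypothetical.

```python
from collections import defaultdict

def transition_probs(paths):
    """Estimate first-order transition probabilities from observed journeys.

    paths: list of (channels_in_order, converted) tuples, converting AND non-converting.
    """
    counts = defaultdict(lambda: defaultdict(int))
    for channels, converted in paths:
        states = ["start", *channels, "conv" if converted else "null"]
        for a, b in zip(states, states[1:]):
            counts[a][b] += 1
    return {
        a: {b: n / sum(nexts.values()) for b, n in nexts.items()}
        for a, nexts in counts.items()
    }

def conversion_prob(probs, removed=None, iters=1000):
    """P(reaching 'conv' from 'start'); a removed channel behaves like 'null'."""
    value = defaultdict(float)
    value["conv"] = 1.0
    for _ in range(iters):
        for state, nexts in probs.items():
            if state == removed:
                continue  # stays at 0.0: journeys entering it never convert
            value[state] = sum(p * value[nxt] for nxt, p in nexts.items())
    return value["start"]

def markov_attribution(paths):
    """Distribute total conversions by each channel's normalized removal effect."""
    probs = transition_probs(paths)
    base = conversion_prob(probs)
    if base == 0:
        return {}
    channels = {c for chans, _ in paths for c in chans}
    effects = {c: 1.0 - conversion_prob(probs, removed=c) / base for c in channels}
    total_effect = sum(effects.values())
    if total_effect == 0:
        return {}
    total_conversions = sum(1 for _, converted in paths if converted)
    return {c: total_conversions * e / total_effect for c, e in effects.items()}

paths = [
    (["facebook", "search", "retargeting"], True),
    (["facebook", "retargeting"], False),
    (["search"], True),
    (["facebook"], False),
]
print(markov_attribution(paths))
```

Even on this toy data, search earns the most credit because it appears on both converting paths, while retargeting earns the least despite being the last touch on one of them.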

Comparison: Which Model Fits Your Business?

Choosing the right attribution model depends on your business reality, data infrastructure, and decision needs. No model is universally correct, and the best approach often involves multiple models serving different purposes.

Your typical consideration window provides the first selection criterion. If consumers typically convert within 7 days of first exposure, first-touch and last-touch likely produce similar results, and simpler models suffice. When the window extends to 7-30 days, multi-touch models add value by capturing middle-journey influence. Beyond 30 days, multi-touch becomes essential – consider W-shaped for B2B or complex B2C journeys.

Monthly conversion volume determines whether algorithmic models are viable. Under 300 monthly conversions means data is insufficient for algorithmic approaches. Use rule-based multi-touch instead. Between 300-600 conversions, algorithmic models are possible but may be unstable, so test with caution. Over 600 monthly conversions makes algorithmic models viable if your tracking infrastructure supports them.

| Factor | Recommended Model |
|---|---|
| Short cycles (under 7 days) | Last-touch or linear |
| Medium cycles (7-30 days) | Position-based or time-decay |
| Long cycles (30+ days) | W-shaped or algorithmic |
| Under 300 monthly conversions | Rule-based (linear, position-based) |
| 300-600 monthly conversions | Position-based, test algorithmic |
| Over 600 monthly conversions | Algorithmic with rule-based validation |
| Simple tracking (platform pixels only) | Platform-native, focus on incrementality |
| Intermediate (CDP or unified tracking) | Rule-based multi-touch across channels |
| Advanced (data warehouse, identity resolution) | Algorithmic and cross-channel models |

Your tracking infrastructure sophistication also guides the decision. Basic tracking limited to platform pixels constrains you to platform-native attribution, so focus on incrementality testing for ground truth. Intermediate tracking with a CDP or unified tracking enables rule-based multi-touch across channels. Advanced tracking with a data warehouse and identity resolution makes algorithmic attribution and cross-channel models viable.

Sophisticated operations do not choose one model. They use multiple models for different purposes: last-touch for daily campaign optimization, position-based for monthly budget allocation, first-touch for demand generation assessment, and incrementality testing for ground-truth validation. When models tell consistent stories, confidence is high. When they conflict, investigation reveals underlying dynamics that single-model views would miss.

The Incrementality Testing Imperative

The limitations of all attribution models drive growing interest in incrementality testing – the practice of measuring what actually changes when you turn marketing on or off. A 2024-2025 industry analysis showed 52% of brands and agencies now use incrementality testing, up significantly from prior years. The methodology provides ground truth that attribution models, by design, cannot.

Attribution vs. Incrementality: The Key Distinction

Attribution tells you which touchpoints were present in conversion paths. Incrementality tells you which touchpoints caused conversions that would not have happened otherwise. The distinction matters enormously for budget allocation.

Consider the difference: A retargeting campaign shows 800% ROAS in platform reporting because it reaches users who already visited your site and were likely to convert. Attribution credits retargeting. But an incrementality test might reveal only 20% of those conversions were truly incremental – the other 80% would have happened without the retargeting exposure. The true ROAS is 160%, not 800%. Channels that look efficient may be harvesting demand rather than creating it, and only incrementality testing reveals which is which.
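The adjustment is a scaling step: multiply attributed results by the share a lift test found incremental. A minimal sketch, assuming incremental conversions carry the same average value as attributed ones; the spend, conversion, and revenue inputs are hypothetical values chosen to reproduce the 800% example.

```python
def incremental_metrics(spend: float, attributed_conversions: float,
                        attributed_revenue: float, incremental_share: float) -> dict[str, float]:
    """Compare platform-reported efficiency with incrementality-adjusted efficiency."""
    inc_conversions = attributed_conversions * incremental_share
    inc_revenue = attributed_revenue * incremental_share
    return {
        "platform_roas": attributed_revenue / spend,
        "incremental_roas": inc_revenue / spend,
        "platform_cpa": spend / attributed_conversions,
        "incremental_cpa": spend / inc_conversions,
    }

# Hypothetical retargeting campaign: 800% platform ROAS, 20% found incremental.
m = incremental_metrics(spend=10_000, attributed_conversions=400,
                        attributed_revenue=80_000, incremental_share=0.20)
print(m)
```

In this example the incremental CPA is five times the reported CPA, which is the number that should drive budget decisions.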

Incrementality Testing Methods

Geo experiments compare geographic regions where you advertise with similar regions where you do not. If sales increase 15% in test markets and 2% in control markets, the incremental lift is 13 percentage points. This approach requires geographically segmentable markets, sufficient volume in each test region, 4-8 week test duration for reliable results, and statistical analysis of confidence intervals.
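The core geo comparison is a difference in growth rates between test and control regions. A minimal sketch with hypothetical sales counts that reproduce the 15% vs. 2% example; production geo tests typically add matched-market selection and confidence intervals, which this omits.

```python
def growth(before: float, after: float) -> float:
    """Fractional change over the test period."""
    return (after - before) / before

def geo_lift(test_before: float, test_after: float,
             control_before: float, control_after: float) -> float:
    """Incremental lift = test-region growth minus control-region growth."""
    return growth(test_before, test_after) - growth(control_before, control_after)

# Hypothetical: test markets grow 1,000 -> 1,150; control markets grow 1,000 -> 1,020.
lift = geo_lift(1_000, 1_150, 1_000, 1_020)
print(f"Incremental lift: {lift:.1%}")
```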

Holdout tests randomly exclude a portion of your audience from campaigns and compare their conversion rates to exposed audiences. If the exposed group converts at 4% and the holdout at 3.2%, your incremental lift is 0.8 percentage points, representing 25% relative lift. Platform lift studies from Meta Conversion Lift and Google Conversion Lift handle randomization and measurement within their ecosystems, reporting incremental conversions attributable to platform campaigns.
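The holdout arithmetic, plus the significance check recommended in the next paragraph, fits in a few lines. A minimal sketch using a standard two-proportion z-test; the group sizes of 10,000 are a hypothetical assumption chosen to reproduce the 4% vs. 3.2% example.

```python
import math

def holdout_lift(exposed_conv: int, exposed_n: int, holdout_conv: int, holdout_n: int) -> dict[str, float]:
    """Absolute and relative lift of exposed vs. holdout, with a two-sided z-test."""
    p_exposed = exposed_conv / exposed_n
    p_holdout = holdout_conv / holdout_n
    absolute = p_exposed - p_holdout
    relative = absolute / p_holdout
    # Pooled standard error under the null hypothesis of no difference.
    pooled = (exposed_conv + holdout_conv) / (exposed_n + holdout_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / exposed_n + 1 / holdout_n))
    z = absolute / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return {"absolute": absolute, "relative": relative, "z": z, "p_value": p_value}

# Hypothetical: 10,000 users per group, 4.0% vs. 3.2% conversion.
result = holdout_lift(400, 10_000, 320, 10_000)
print(result)
```

With smaller groups the same 0.8-point gap can easily fail to reach significance, which is why sample size belongs in the test plan before launch.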

Time-based toggles turn campaigns off for defined periods and measure impact. This approach is simpler to execute but confounds pause effects with organic trends, making isolation of true incrementality difficult.

Test one channel at a time to isolate effects. Run tests for 4+ weeks to account for variance. Ensure test and control groups are genuinely comparable. Calculate statistical significance before drawing conclusions. Use incrementality alongside – not instead of – attribution models, recognizing that incrementality answers “how much” while attribution answers “through what path.”

Companies implementing proper incrementality measurement have achieved 10-20% improvements in marketing efficiency by reallocating spend from campaigns that look good to campaigns that actually work.

Implementation Challenges in the Privacy-First Era

Understanding attribution models is necessary. Implementing them successfully in 2025 is genuinely difficult due to compounding privacy restrictions that erode the measurement foundation.

The Privacy Landscape

Browser restrictions have fundamentally changed tracking capabilities. Safari’s Intelligent Tracking Prevention limits client-set cookies to seven days, and just 24 hours for traffic arriving from domains classified as trackers. Firefox’s Enhanced Tracking Protection operates similarly. Together, these browsers represent approximately 19% of global traffic.

Ad blockers compound the challenge. Over 31% of internet users worldwide employ ad blockers – 912 million people globally. That figure rises to 42% among users ages 18-34 who represent your highest-intent prospects. iOS App Tracking Transparency has shifted mobile tracking dramatically, with approximately 75% of iOS users opting out of tracking when given the choice. iOS users represent approximately 60% of US smartphone market share and skew toward higher income brackets – precisely the demographics most likely to convert on high-value leads.

While Google reversed course on third-party cookie deprecation in Chrome in 2024, Firefox and Safari already block third-party tracking by default. The regulatory and competitive environment continues moving toward privacy-first solutions regardless of Chrome’s policies.

The cumulative effect means client-side tracking captures only 60-70% of actual conversions in many scenarios. The conversions you can measure do not represent a random sample – they are systematically biased toward older users, less privacy-conscious users, single-device journeys, and platforms with fewer restrictions.

Cross-Device Attribution Breakdown

Consumer behavior has become inherently multi-device. A user might see your solar ad on their iPhone during a morning commute, research options on their desktop during lunch, and finally submit a lead form on their iPad that evening. Traditional cookie-based tracking cannot connect these touchpoints.

Industry analysis suggests that 30-40% of conversions involve multiple devices in the path to purchase. Without deterministic identifiers like hashed email addresses matched across devices, these multi-device journeys appear as separate, unrelated sessions. Your attribution model credits only the final device touchpoint, systematically undervaluing upper-funnel campaigns that initiate consideration on different devices.

Walled Garden Limitations

Each major platform operates as a walled garden with limited data sharing. Attribution within Facebook is straightforward. Attribution across Facebook, Google, TikTok, and your own properties requires stitching together fragmented data. When Facebook and Google both claim credit for the same conversion, your total “attributed” conversions exceed actual conversions. De-duplication requires independent measurement systems that platforms do not naturally provide.
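De-duplication becomes straightforward once each platform's claimed conversions can be joined to your own lead IDs (for example, via click IDs stored at lead capture). That join is an assumption of this sketch, and the platform names and lead IDs are hypothetical.

```python
from collections import Counter

def dedupe_claims(claims: dict[str, set[str]]) -> dict[str, int]:
    """Compare the sum of platform-claimed conversions with unique actual leads."""
    claimed_total = sum(len(ids) for ids in claims.values())
    unique_leads = set().union(*claims.values())
    claim_counts = Counter(lead for ids in claims.values() for lead in ids)
    return {
        "platform_claimed": claimed_total,
        "actual_conversions": len(unique_leads),
        "double_counted": claimed_total - len(unique_leads),
        "leads_claimed_by_multiple_platforms": sum(1 for n in claim_counts.values() if n > 1),
    }

claims = {
    "facebook": {"L1", "L2", "L3"},
    "google": {"L2", "L3", "L4"},
    "tiktok": {"L3"},
}
print(dedupe_claims(claims))
```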

View-through attribution adds further complexity. Did that impression someone saw but did not click actually contribute to conversion? Platform self-attribution says yes. A consumer who saw a Facebook ad, clicked nothing, and converted within the platform’s view-through window still gets attributed to Facebook. View-through attribution inflates platform-reported performance. Independent measurement studies consistently show lower incremental impact from view-through impressions than platforms claim. The impression may have contributed something, but rarely as much as platform reporting suggests.

Server-Side Tracking: The Technical Foundation

Server-side tracking has become essential infrastructure for maintaining attribution accuracy despite browser restrictions. The fundamental difference lies in where tracking execution occurs.

Client-side tracking relies on the user’s browser to execute JavaScript that fires tracking pixels. This creates multiple failure points: ad blockers prevent loading, browser privacy settings block requests, ITP restrictions limit cookie access, and page issues can interrupt tracking.

Server-side tracking restructures this flow. When a consumer visits your landing page, your server captures click identifiers like gclid, fbclid, and ttclid, storing them in first-party cookies. When conversion happens, your server receives the form data, retrieves stored click identifiers from cookies, and fires API calls directly to ad platforms. Conversion data reaches platforms via server-to-server connection. To the browser, the tracking request looks like a standard form submission to your domain. There is nothing for ad blockers to intercept because the tracking communication never goes to a third-party domain from the client side.
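The server-side flow can be sketched with Python's standard library: parse click IDs from the landing URL, return them in a first-party cookie, read them back when the form is submitted, and assemble a conversion event. The cookie name, the 90-day lifetime, and the event payload shape are hypothetical assumptions; each ad platform's conversion API defines its own schema and endpoint, which this sketch does not call.

```python
from http.cookies import SimpleCookie
from urllib.parse import parse_qs, urlencode, urlparse

CLICK_ID_PARAMS = ("gclid", "fbclid", "ttclid")
COOKIE_NAME = "click_ids"         # hypothetical cookie name
COOKIE_MAX_AGE = 90 * 24 * 3600   # assumed 90-day lifetime; align with your attribution window

def extract_click_ids(landing_url: str) -> dict[str, str]:
    """Pull known click identifiers out of the landing-page query string."""
    query = parse_qs(urlparse(landing_url).query)
    return {p: query[p][0] for p in CLICK_ID_PARAMS if p in query}

def click_id_set_cookie(click_ids: dict[str, str]) -> str:
    """Build the Set-Cookie value the server returns with the landing page."""
    cookie = SimpleCookie()
    cookie[COOKIE_NAME] = urlencode(click_ids)
    morsel = cookie[COOKIE_NAME]
    morsel["path"] = "/"
    morsel["max-age"] = COOKIE_MAX_AGE
    morsel["secure"] = True
    morsel["httponly"] = True  # not readable by page JavaScript
    morsel["samesite"] = "Lax"
    return morsel.OutputString()

def read_click_ids(cookie_header: str) -> dict[str, str]:
    """Recover stored click IDs from the Cookie header sent with the form submission."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    if COOKIE_NAME not in cookie:
        return {}
    return {k: v[0] for k, v in parse_qs(cookie[COOKIE_NAME].value).items()}

def build_conversion_event(lead_id: str, click_ids: dict[str, str]) -> dict:
    """Hypothetical platform-neutral payload, later mapped to each platform's API."""
    return {"lead_id": lead_id, "click_ids": click_ids, "sent_from": "server"}

ids = extract_click_ids("https://example.com/lp?utm_source=google&gclid=abc123")
set_cookie = click_id_set_cookie(ids)
# The browser later sends back only "name=value" in its Cookie header.
event = build_conversion_event("lead-001", read_click_ids(set_cookie.split(";")[0]))
print(event)
```

Mirroring the same IDs into hidden form fields gives a fallback if the cookie is missing.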

Signal Recovery Rates

Businesses implementing server-side tracking report 20-40% more tracked conversions. The specific recovery depends on audience composition. High mobile and young demographics see 25-35% signal recovery. Mixed desktop and mobile audiences typically recover 15-25%. B2B desktop-heavy audiences see 10-15% signal recovery. One study found a 23% average ROAS lift after implementing server-side tracking with enhanced conversions. The improvement came not from changing campaign strategy, but simply from feeding better data into existing campaigns.

Enhanced Conversions for Attribution

Enhanced conversions supplement standard tracking by sending hashed first-party customer data alongside conversion events. Platforms match this data against their user databases to improve attribution, particularly for cross-device conversions.

When you send hashed email addresses or phone numbers with conversion events, platforms can match conversions across devices where users are logged in. A user who clicks an ad on mobile but converts on desktop gets properly attributed because the hashed email matches across both sessions.
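Platforms commonly expect identifiers to be normalized and then SHA-256 hashed before upload. A minimal sketch that applies only baseline normalization (trim and lowercase); individual platforms document additional rules, so treat `normalize_email` as a starting point, and note that the `hashed_email` field name is hypothetical.

```python
import hashlib

def normalize_email(email: str) -> str:
    """Baseline normalization only; check each platform's spec for extra rules."""
    return email.strip().lower()

def hash_identifier(value: str) -> str:
    """SHA-256 hex digest of an already-normalized identifier."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()

def enhanced_conversion_fields(email: str) -> dict[str, str]:
    # Hypothetical field name; use whatever the destination platform documents.
    return {"hashed_email": hash_identifier(normalize_email(email))}

print(enhanced_conversion_fields("  Jane.Doe@Example.com "))
```

Normalizing before hashing is what makes matching work: without it, different capitalization or stray whitespace produces a completely different hash.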

For lead generation, Enhanced Conversions for Leads is particularly valuable. When a lead eventually converts to a sale, often days or weeks after initial capture, you can upload the conversion with the original lead’s hashed email. Platforms attribute the sale back to the initial ad click, improving your understanding of which campaigns drive actual revenue, not just form fills.

Building Your Attribution Stack

Practical implementation requires the right combination of tools, processes, and organizational alignment across three layers of infrastructure.

The data collection layer needs server-side tracking implementation through GTM Server-Side, direct API, or platform integration. Click ID persistence requires first-party cookies plus hidden form fields. Consistent UTM parameters across all campaigns enable cross-channel tracking. Enhanced conversion implementation for major platforms completes the collection layer.
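UTM consistency is easier to enforce with an automated check than with a style guide alone. A minimal sketch, assuming a house rule that `utm_source`, `utm_medium`, and `utm_campaign` are required and that all UTM values are lowercase with no surrounding whitespace; adjust the rule set to your own naming convention.

```python
from urllib.parse import parse_qs, urlparse

REQUIRED_UTMS = ("utm_source", "utm_medium", "utm_campaign")

def utm_problems(url: str) -> list[str]:
    """Return a list of naming-convention violations for one campaign URL."""
    params = parse_qs(urlparse(url).query)  # blank values are dropped, so they count as missing
    problems = [f"missing {key}" for key in REQUIRED_UTMS if key not in params]
    for key, values in params.items():
        if key.startswith("utm_") and any(v != v.strip().lower() for v in values):
            problems.append(f"{key} is not lowercase/trimmed: {values}")
    return problems

print(utm_problems("https://example.com/?utm_source=facebook&utm_medium=paid_social&utm_campaign=solar_q3"))
print(utm_problems("https://example.com/?utm_source=Facebook"))
```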

The data storage layer requires a customer data platform or equivalent for unified profiles, a data warehouse for historical path analysis, and a lead-level attribution database connecting sources to revenue.
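A lead-level attribution database can start very small. The sketch below uses SQLite with a hypothetical two-table schema (leads with their captured source, and revenue postings per lead, with returns assumed to be posted as negative amounts) to report net collected revenue by source. A production version would add cost, touchpoint paths, and buyer data.

```python
import sqlite3

# Hypothetical minimal schema; not a standard or vendor format.
SCHEMA = """
CREATE TABLE leads (
    lead_id     TEXT PRIMARY KEY,
    source      TEXT NOT NULL,  -- marketing source captured with the lead
    captured_at TEXT NOT NULL
);
CREATE TABLE revenue (
    lead_id   TEXT NOT NULL REFERENCES leads (lead_id),
    amount    REAL NOT NULL,    -- collected revenue; returns posted as negatives
    posted_at TEXT NOT NULL
);
"""


def revenue_by_source(conn: sqlite3.Connection):
    """Leads and net collected revenue per source, highest revenue first."""
    return conn.execute(
        """
        SELECT l.source,
               COUNT(DISTINCT l.lead_id)  AS leads,
               COALESCE(SUM(r.amount), 0) AS net_revenue
        FROM leads AS l
        LEFT JOIN revenue AS r ON r.lead_id = l.lead_id
        GROUP BY l.source
        ORDER BY net_revenue DESC
        """
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO leads VALUES (?, ?, ?)", [
    ("L1", "google", "2025-03-01"),
    ("L2", "facebook", "2025-03-02"),
    ("L3", "facebook", "2025-03-03"),
])
conn.executemany("INSERT INTO revenue VALUES (?, ?, ?)", [
    ("L1", 40.0, "2025-03-05"),
    ("L2", 35.0, "2025-03-06"),
    ("L2", -35.0, "2025-03-20"),  # buyer returned the lead
])
print(revenue_by_source(conn))  # [('google', 1, 40.0), ('facebook', 2, 0.0)]
```

Facebook produced twice the leads but zero net revenue once the return posts, which is the quality dimension that form-fill counts hide.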

The analysis layer includes attribution modeling tools (platform-native or independent), incrementality testing infrastructure, and visualization and reporting dashboards.

Implementation Roadmap

Phase one (weeks 1-4) establishes the foundation. Audit current tracking coverage and identify gaps. Implement a consistent UTM parameter strategy. Set up server-side tracking for primary platforms. Configure enhanced conversions for Google and Meta.
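A consistent UTM strategy is easiest to enforce at link-creation time. A minimal sketch, assuming a house convention of trimmed, lowercase values with underscores in place of spaces and three required keys (`utm_source`, `utm_medium`, `utm_campaign`); `build_utm_url` is a hypothetical helper, not part of any analytics product.

```python
from urllib.parse import urlencode

# Assumed house rule: every paid link carries these three parameters.
REQUIRED_UTMS = ("utm_source", "utm_medium", "utm_campaign")


def build_utm_url(base_url: str, **utms: str) -> str:
    """Append normalized UTM parameters, refusing links that omit required ones."""
    unknown = [k for k in utms if not k.startswith("utm_")]
    if unknown:
        raise ValueError(f"not UTM parameters: {unknown}")
    missing = [k for k in REQUIRED_UTMS if not utms.get(k, "").strip()]
    if missing:
        raise ValueError(f"missing required UTM parameters: {missing}")
    # One naming convention everywhere: trimmed, lowercase, underscores for spaces,
    # so "Facebook", "facebook " and "FACEBOOK" report as a single source.
    cleaned = {k: v.strip().lower().replace(" ", "_") for k, v in utms.items()}
    separator = "&" if "?" in base_url else "?"
    return base_url + separator + urlencode(cleaned)


print(build_utm_url(
    "https://example.com/quote",
    utm_source="Facebook ",
    utm_medium="Paid Social",
    utm_campaign="Spring Auto",
))
# https://example.com/quote?utm_source=facebook&utm_medium=paid_social&utm_campaign=spring_auto
```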

Phase two (weeks 5-8) builds multi-touch infrastructure. Deploy position-based attribution as a baseline. Build a lead-level P&L connecting source to revenue. Create source-level profitability reporting. Establish cross-channel deduplication.
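Cross-channel deduplication means counting each lead once no matter how many platforms claim it. A minimal sketch, assuming each platform-reported conversion can be tied back to one of your own lead IDs (for instance through persisted click IDs); the claim data below is hypothetical.

```python
from collections import Counter


def deduplicate_claims(claims):
    """claims: (platform, lead_id) pairs, one per conversion a platform reports."""
    claims = list(claims)
    by_platform = Counter(platform for platform, _ in claims)
    unique_leads = {lead_id for _, lead_id in claims}
    claimed = sum(by_platform.values())
    return {
        "platform_claimed": claimed,          # what summing platform reports gives
        "unique_conversions": len(unique_leads),  # what actually happened
        "over_count_ratio": claimed / len(unique_leads) if unique_leads else 0.0,
        "by_platform": dict(by_platform),
    }


claims = [("google", "L1"), ("meta", "L1"), ("google", "L2"),
          ("meta", "L2"), ("meta", "L3")]
summary = deduplicate_claims(claims)
print(summary["platform_claimed"], summary["unique_conversions"])  # 5 3
```

Adding up the platforms' own dashboards would report five conversions for three real leads, a 67% over-count that flows straight into understated cost per lead.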

Phase three (weeks 9-12) focuses on optimization. Launch the first incrementality test on your highest-spend channel. Compare multi-touch insights to platform reporting. Develop a multi-model dashboard for different decision needs. Document the methodology and train the team.

Common Implementation Mistakes

Trusting platform defaults leads many operations astray. Platform-provided attribution data is useful but biased. Platforms benefit from showing strong performance and use favorable attribution windows.

Ignoring incrementality creates false confidence. Attribution shows correlation; incrementality proves causation. Without incrementality testing, you cannot distinguish demand creation from demand capture.

Over-engineering too early wastes resources. Start with simpler multi-touch models before investing in algorithmic approaches. Sophisticated models on poor data produce sophisticated garbage.

Failing to connect to revenue undermines the entire purpose. Attribution that stops at form submission misses the quality dimension. Lead generators must connect marketing source to actual collected revenue.

Frequently Asked Questions

Which multi-touch attribution model is best for lead generation?

No single model fits all lead generation businesses. For short consideration windows under 7 days, last-touch often provides sufficient accuracy. For cycles of 7-30 days, position-based (U-shaped) attribution offers good balance between simplicity and accuracy, giving 40% credit each to first and last touch with 20% distributed across middle interactions. For longer cycles or B2B lead generation, W-shaped attribution adds weight to the lead capture moment. For high-volume operations with 600+ monthly conversions and sophisticated tracking, data-driven algorithmic models optimize based on actual patterns in your data.
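Position-based credit is simple enough to compute by hand, but a sketch makes the edge cases explicit. It uses the 40/40/20 split described above; the 50/50 split for two-touch journeys is an assumed convention, since there are no middle touches to receive the remaining 20%.

```python
from collections import defaultdict


def position_based_credit(journey, first_share=0.4, last_share=0.4):
    """U-shaped credit for one converting journey (ordered list of channels).

    first_share and last_share go to the first and last touch; the remainder
    (20% with the defaults) is split evenly across the middle touches.
    """
    n = len(journey)
    if n == 0:
        return {}
    if n == 1:
        weights = [1.0]
    elif n == 2:
        weights = [0.5, 0.5]  # assumption: no middle touches, so split evenly
    else:
        middle = (1.0 - first_share - last_share) / (n - 2)
        weights = [first_share] + [middle] * (n - 2) + [last_share]
    credit = defaultdict(float)
    for channel, weight in zip(journey, weights):
        credit[channel] += weight  # a channel appearing twice accumulates credit
    return dict(credit)


journey = ["facebook", "email", "organic", "google_brand"]
print(position_based_credit(journey))
# facebook and google_brand get 0.4 each; email and organic about 0.1 each
```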

How does multi-touch attribution differ from first-touch and last-touch models?

First-touch assigns 100% credit to the initial brand interaction. Last-touch assigns 100% credit to the final interaction before conversion. Multi-touch distributes credit across all touchpoints in the journey, acknowledging that multiple interactions typically influence a conversion. The key advantage of multi-touch is recognizing that different channels play different roles (some create awareness, others nurture consideration, others close conversions) and that each deserves credit in proportion to its contribution.

What is incrementality testing and why does it matter for attribution?

Incrementality testing measures the true causal impact of marketing by comparing outcomes between groups exposed to marketing versus control groups that were not exposed. While attribution tells you which touchpoints were present in conversion paths, incrementality tells you which actually caused conversions that would not have happened otherwise. A 2024-2025 industry analysis found 52% of sophisticated marketers now use incrementality testing because it reveals ground truth that no attribution model can provide – particularly whether high-performing channels are creating demand or merely capturing demand that would have converted anyway.
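The core arithmetic of a holdout test fits in a few lines. A minimal sketch, assuming a user-level randomized holdout in which the test group could see ads and the control group could not; geo-based or matched-market tests need a different analysis. The significance check is a standard two-proportion z-test with a pooled rate, and the input numbers are hypothetical.

```python
import math


def incrementality(test_conversions, test_size, control_conversions, control_size):
    """Lift from a randomized holdout: the test group saw ads, the control did not."""
    p_test = test_conversions / test_size
    p_control = control_conversions / control_size
    if p_control == 0:
        raise ValueError("control group has no conversions; lift is undefined")
    # Conversions in the test group beyond what the control rate predicts.
    incremental = (p_test - p_control) * test_size
    # Two-proportion z-test using the pooled conversion rate.
    pooled = (test_conversions + control_conversions) / (test_size + control_size)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / test_size + 1 / control_size))
    return {
        "lift": (p_test - p_control) / p_control,
        "incremental_conversions": incremental,
        "incremental_share": incremental / test_conversions if test_conversions else 0.0,
        "z_score": (p_test - p_control) / std_err,
    }


result = incrementality(1200, 100_000, 1000, 100_000)
print(f"lift {result['lift']:.0%}, incremental share {result['incremental_share']:.0%}")
# lift 20%, incremental share 17%
```

In this example the exposed group produced 1,200 conversions, but only about 200 of them would not have happened anyway. Any attribution model that credits the channel with much more than that is counting captured demand as created demand.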

How do privacy restrictions affect multi-touch attribution accuracy?

Privacy restrictions significantly degrade attribution accuracy. Safari's Intelligent Tracking Prevention caps cookies set by client-side scripts at 7 days, or 24 hours when the visitor arrives from a domain classified as a tracker via a link carrying query parameters or a fragment. Over 31% of internet users employ ad blockers globally, rising to 42% among users ages 18-34. Approximately 75% of iOS users opt out of tracking via App Tracking Transparency. Combined, these restrictions mean client-side tracking captures only 60-70% of actual conversions in many scenarios. Server-side tracking, enhanced conversions with hashed customer data, and first-party data strategies help recover 20-40% of lost signals.
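Signal loss distorts more than totals: when capture rates differ by channel, it can flip which channel looks cheaper. A worked sketch with hypothetical numbers, assuming you can estimate each channel's capture rate, for example by comparing platform-reported conversions against the leads your own system recorded from that channel.

```python
def cpl_report(channels):
    """channels: {name: (spend, tracked_conversions, capture_rate)}.

    capture_rate is the assumed share of a channel's real conversions that
    client-side tracking records.
    """
    report = {}
    for name, (spend, tracked, capture_rate) in channels.items():
        estimated_actual = tracked / capture_rate
        report[name] = {
            "reported_cpl": spend / tracked,
            "corrected_cpl": spend / estimated_actual,
        }
    return report


# Hypothetical inputs: a mobile-heavy social audience loses more signal
# than a desktop-heavy search audience.
report = cpl_report({
    "social_mobile": (20_000, 495, 0.55),
    "search_desktop": (20_000, 680, 0.85),
})
for name, r in report.items():
    print(f"{name}: reported ${r['reported_cpl']:.2f}, corrected ${r['corrected_cpl']:.2f}")
# social_mobile: reported $40.40, corrected $22.22
# search_desktop: reported $29.41, corrected $25.00
```

On reported numbers, search looks about 27% cheaper; after correcting for uneven signal loss, social is the cheaper source.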

What data do I need for effective multi-touch attribution?

Effective multi-touch attribution requires user-level tracking across touchpoints, consistent UTM parameters on all campaigns, conversion events connected to user identifiers, and 60-90 days of historical data. For algorithmic models, you need 600+ monthly conversions minimum. Additionally, you need click ID persistence (storing gclid, fbclid, etc. in first-party cookies and hidden form fields) to connect paid clicks to eventual conversions. For lead generation specifically, connecting marketing source to actual collected revenue (not just form submissions) provides the most actionable insights.

Should I use platform-reported attribution data or independent measurement?

Use both, but for different purposes. Platform-provided attribution data is useful for within-platform optimization – identifying which ad sets, creatives, or audiences perform best relative to each other. However, platforms benefit from showing strong performance and use favorable attribution windows and view-through crediting. For cross-channel budget decisions, use independent measurement: lead-level P&L analysis connecting sources to revenue, incrementality testing to validate true lift, and multi-model comparison to identify discrepancies between what platforms report and what actually happened.

How often should I review attribution data and adjust models?

Daily for anomaly detection and campaign pacing. Weekly for source-level performance and tactical optimizations. Monthly for channel allocation decisions and strategic adjustments. Quarterly for incrementality validation and model evaluation – compare rule-based results to algorithmic insights and incrementality test results. Annually for comprehensive model audit – evaluate whether your current approach still fits your business reality given changes in traffic mix, consideration cycles, and privacy landscape.

What is the difference between linear and time-decay attribution?

Linear attribution splits credit equally across all touchpoints regardless of timing – five touches means each receives 20% credit. Time-decay attribution weights recent touchpoints more heavily, with diminishing credit for earlier interactions – the conversion-proximate touch might receive 40% credit, with exponentially decreasing weights backward. Time-decay better reflects consumer behavior where recent exposures typically have more direct influence on conversion decisions. Choose linear when touchpoint quality is relatively consistent throughout the journey; choose time-decay when later-stage interactions genuinely drive final decisions.
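The two schemes differ only in how the weights are produced. A minimal sketch: linear gives every touch 1/n, and time-decay gives each touch an exponentially decaying weight that halves every `half_life_days` before the conversion, then normalizes so the weights sum to 1. The 7-day half-life and the touch ages are assumed values for illustration.

```python
def linear_weights(n_touches):
    """Equal credit for every touch."""
    return [1.0 / n_touches] * n_touches


def time_decay_weights(days_before_conversion, half_life_days=7.0):
    """Credit halves for every half_life_days a touch precedes the conversion."""
    raw = [2 ** (-age / half_life_days) for age in days_before_conversion]
    total = sum(raw)
    return [w / total for w in raw]  # normalize so credit sums to 1


ages = [21, 14, 7, 3, 0]  # days before conversion, oldest touch first
print([round(w, 2) for w in linear_weights(len(ages))])  # [0.2, 0.2, 0.2, 0.2, 0.2]
print([round(w, 2) for w in time_decay_weights(ages)])   # [0.05, 0.1, 0.19, 0.28, 0.38]
```

With these inputs the conversion-day touch receives about 38% of the credit, close to the 40% figure above, while the touch three weeks out keeps only about 5%.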

How can I implement multi-touch attribution with limited resources?

Start with position-based (U-shaped) attribution as a baseline – it is simple to implement and captures the most important dynamics (introduction and conversion) while acknowledging middle-journey contribution. Use platform-native reporting for within-platform optimization. Implement consistent UTM parameters across all campaigns and landing pages. Build a simple lead-level P&L in a spreadsheet connecting marketing source to revenue outcome. Conduct one incrementality test per quarter on your highest-spend channel. This approach provides 80% of the value with 20% of the complexity of a full attribution stack.

What if my attribution models conflict with each other?

Conflicting models often reveal important dynamics rather than representing a problem to solve. When last-touch shows Google brand search as your best channel but first-touch credits Facebook prospecting, the conflict reveals a critical insight: Facebook creates demand that Google captures. Neither model is “wrong” – they answer different questions. Use the conflict as diagnostic information. Investigate which channels create demand versus which capture it. Let incrementality testing arbitrate by measuring what actually happens when you change spend in each channel. Multiple models provide triangulation that improves decision confidence.
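The Facebook-versus-Google-brand conflict is easy to reproduce. A small sketch over hypothetical journeys: counting conversions under first-touch and last-touch side by side shows one channel dominating introductions while the other dominates closes.

```python
from collections import Counter


def first_and_last_touch(journeys):
    """Count conversions credited to each channel under first- and last-touch."""
    first = Counter(journey[0] for journey in journeys if journey)
    last = Counter(journey[-1] for journey in journeys if journey)
    return first, last


# Hypothetical converting journeys, each an ordered list of channels.
journeys = [
    ["facebook", "google_brand"],
    ["facebook", "email", "google_brand"],
    ["google_brand"],
    ["facebook", "google_brand"],
]
first, last = first_and_last_touch(journeys)
print(dict(first))  # {'facebook': 3, 'google_brand': 1}
print(dict(last))   # {'google_brand': 4}
```

Last-touch alone would give Facebook zero conversions, even though it started three of the four journeys.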

How do I handle view-through conversions in multi-touch attribution?

View-through conversions – where someone saw but did not click an ad before converting – present a measurement challenge. Platform self-attribution typically credits these liberally, often using 7-28 day windows. Independent measurement studies consistently show lower incremental impact from view-through impressions than platforms claim. The practical approach: include view-through at reduced weight (perhaps 20-50% of a click’s credit) in your multi-touch model, but validate with incrementality testing. If a channel performs well only with view-through included, run an incrementality test to determine whether those impressions actually contributed or merely coincided with conversions that would have happened anyway.
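Reduced view-through weighting can be sketched as a linear model in which a view counts as a fraction of a click. The 0.3 default sits inside the 20-50% range suggested above but is an assumption; sweeping the weight is a quick way to see whether a channel's credit depends on view-throughs before committing to an incrementality test. The journey is hypothetical.

```python
def linear_credit_with_views(touches, view_weight=0.3):
    """Linear credit where a view-through counts as view_weight of a click.

    touches: ordered (channel, kind) pairs, kind being "click" or "view".
    """
    weights = []
    for channel, kind in touches:
        if kind not in ("click", "view"):
            raise ValueError(f"unknown touch kind: {kind!r}")
        weights.append((channel, 1.0 if kind == "click" else view_weight))
    total = sum(w for _, w in weights)
    if total == 0:
        return {}  # e.g. only views and view_weight=0: nothing to credit
    credit = {}
    for channel, w in weights:
        credit[channel] = credit.get(channel, 0.0) + w / total
    return credit


path = [("display", "view"), ("facebook", "click"), ("google", "click")]
for vw in (0.0, 0.3, 1.0):
    share = linear_credit_with_views(path, view_weight=vw).get("display", 0.0)
    print(f"view weight {vw}: display share {share:.0%}")
# view weight 0.0: display share 0%
# view weight 0.3: display share 13%
# view weight 1.0: display share 33%
```

A channel whose share swings from 0% to a third of the credit on this one assumption is exactly the case the incrementality test should settle.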

Key Takeaways

Attribution determines budget allocation and margin. Companies using advanced attribution models report 15-30% improvements in marketing ROI through more effective spending. On $1 million of annual spend, that is roughly $150,000-$300,000 recovered from misallocation.

Single-touch models create dangerous distortions. First-touch overvalues awareness channels; last-touch overvalues conversion channels. Last-touch is particularly dangerous because it credits channels that capture demand rather than create it, leading operators to cut awareness spending and watch downstream performance collapse.

Multi-touch models distribute credit across the journey. Linear splits equally, time-decay weights recent touches, position-based emphasizes first and last (40/40/20), W-shaped adds weight to lead capture, and algorithmic learns from actual patterns in your data.

Choose your model based on business reality. Short cycles under 7 days can use simpler models. Medium cycles of 7-30 days benefit from position-based. Long cycles and B2B need W-shaped or algorithmic. Data volume matters – algorithmic requires 600+ monthly conversions.

Incrementality testing provides ground truth. Attribution shows correlation; incrementality proves causation. An industry analysis found that 52% of sophisticated marketers now use incrementality testing because it reveals whether “high-performing” channels create demand or merely harvest it.

Privacy restrictions have degraded attribution accuracy. Safari cookie limits, ad blockers (31% globally, 42% among ages 18-34), and iOS ATT opt-outs (75%) mean client-side tracking captures only 60-70% of actual conversions. The missing conversions are not random – they systematically underrepresent your highest-value demographics.

Server-side tracking recovers 20-40% of lost signals. By routing conversion data through your servers before sending to ad platforms, you bypass browser restrictions. Enhanced conversions using hashed customer data enable cross-device attribution and extend measurement windows.

Use multiple models for different purposes. Daily optimization with last-touch, monthly allocation with position-based, demand generation assessment with first-touch, ground-truth validation with incrementality testing. When models agree, confidence is high. When they conflict, investigate.
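The "when they conflict, investigate" rule can be automated as a simple flag on a multi-model dashboard. A minimal sketch, assuming each model's output has already been reduced to a share of total credit per channel; the 10-percentage-point threshold and the model outputs are hypothetical.

```python
def flag_disagreements(model_shares, threshold=0.10):
    """Return channels whose credit share differs across models by more than threshold.

    model_shares: {model_name: {channel: share_of_credit}}, shares between 0 and 1.
    """
    channels = set().union(*(shares.keys() for shares in model_shares.values()))
    flagged = {}
    for channel in sorted(channels):
        # A channel missing from a model's output is treated as 0% credit.
        values = [shares.get(channel, 0.0) for shares in model_shares.values()]
        spread = max(values) - min(values)
        if spread > threshold:
            flagged[channel] = round(spread, 3)
    return flagged


models = {
    "last_touch": {"google_brand": 0.6, "facebook": 0.2, "email": 0.2},
    "first_touch": {"google_brand": 0.2, "facebook": 0.6, "email": 0.2},
    "position_based": {"google_brand": 0.4, "facebook": 0.4, "email": 0.2},
}
print(flag_disagreements(models))  # {'facebook': 0.4, 'google_brand': 0.4}
```

Email is stable across models, so its share can be trusted; Facebook and Google brand are the channels to take to an incrementality test.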

Connect attribution to revenue, not just conversions. Lead generators must track which marketing sources produce leads that actually sell without returns, incorporating lead quality scores into the analysis. Attribution stopping at form submission misses the quality dimension that determines profitability.

Implementation requires discipline beyond tools: consistent UTM parameters, click ID persistence, cross-channel deduplication, a lead-level P&L, and regular incrementality validation. The organizations that win do not just deploy better technology; they build measurement into their operational discipline.

Statistics and methodologies current as of late 2025. Attribution models and privacy regulations evolve continuously; validate current platform capabilities before implementation. This article is part of The Lead Economy series on lead generation measurement and optimization.