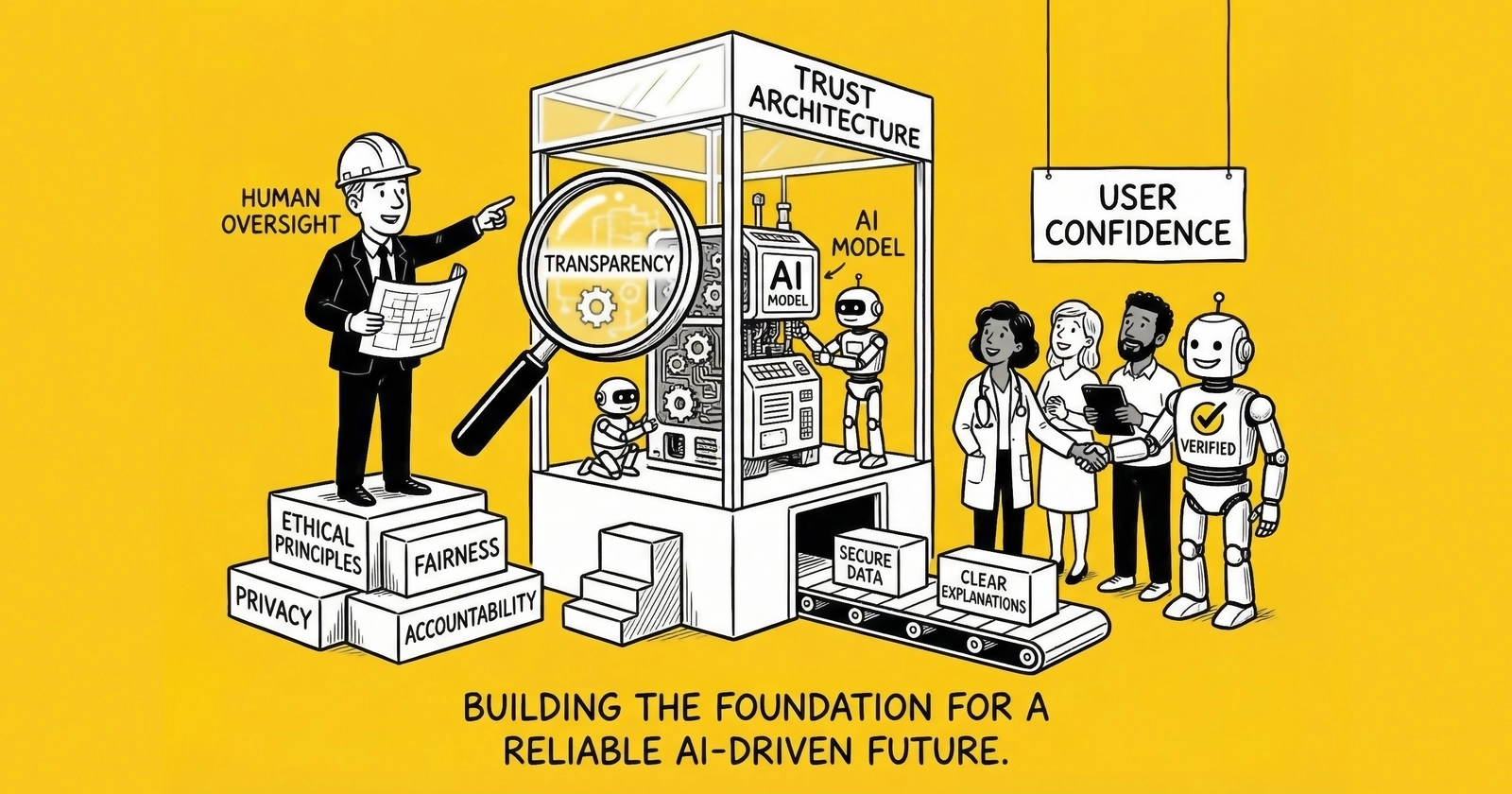

When AI agents become the buyers, your brand story matters less than your data integrity. Trust architecture is the infrastructure that makes you visible, verifiable, and selectable in a world where algorithms choose vendors. Here’s how to build it.

The lead generation industry is undergoing a fundamental restructuring. Not a gradual evolution. A wholesale transformation in how trust is established, verified, and monetized.

McKinsey projects agentic commerce will generate $3-5 trillion globally by 2030, with the U.S. B2C market alone representing up to $1 trillion in AI-orchestrated revenue. ChatGPT now has more than 800 million weekly users. Half of all consumers use AI when searching the internet. Adobe data shows AI-driven traffic to U.S. retail sites surged 4,700% year-over-year in July 2025.

These numbers represent more than market growth. They signal a migration of decision-making from humans to algorithms. And algorithms evaluate trust differently than humans do.

When a human researches insurance providers, they respond to brand familiarity, emotional appeals, social proof, and persuasive design. When an AI agent evaluates the same providers on a consumer’s behalf, it processes structured data, verifies credentials, compares parameters, and selects based on explicit criteria. The psychology-based trust signals you spent years perfecting become invisible to machine evaluation.

This is the trust architecture challenge: building the infrastructure that makes your business trustworthy to both human prospects and the AI agents increasingly acting on their behalf. Those who solve this challenge will capture disproportionate value as the transformation unfolds. Those who don’t will find their leads drying up without understanding why.

This guide covers the components of trust architecture, the transparency requirements that underpin it, the governance frameworks that protect it, and the strategic investments that build sustainable competitive advantage in the AI-driven future.

The Trust Crisis in Lead Generation

Before mapping the future, acknowledge the present. The lead generation industry faces a trust deficit that AI is simultaneously exposing and amplifying.

The Human Trust Problem

Consumer trust in lead generation has eroded steadily. A 2024 Edelman Trust Barometer study found that only 36% of consumers trust businesses to do what is right, representing a five-percentage-point decline from 2023. For industries associated with aggressive marketing tactics, the numbers drop further. Financial services and insurance, representing the highest-volume lead generation verticals, consistently rank among the least trusted sectors.

The trust erosion has tangible consequences. Response rates to sales outreach have declined approximately 20% over the past five years across most verticals. Email open rates for marketing messages dropped from 25% in 2020 to under 18% by 2024. Consumers who do engage increasingly use disposable contact information, knowing their data will be sold.

The average TCPA settlement now exceeds $6.6 million. Litigation rates continue climbing despite the regulatory uncertainty that followed the Eleventh Circuit’s January 2025 decision vacating the FCC’s 2024 one-to-one consent rule. Serial litigators, who account for an estimated 70%+ of TCPA lawsuits, actively target lead generators. The legal environment reflects the underlying trust problem: consumers feel sufficiently violated by current practices to support an entire litigation industry.

The AI Trust Transformation

AI agents don’t experience trust the way humans do. They don’t feel violated by spam. They don’t resent aggressive tactics. They evaluate trust through entirely different mechanisms.

When an AI agent evaluates a vendor on behalf of a consumer, it assesses data verifiability, historical performance, credential documentation, and structural transparency. It queries APIs, parses structured data, and compares parameters. The emotional shortcuts humans use to establish trust are irrelevant to algorithmic evaluation.

This creates both a challenge and an opportunity.

The challenge: the trust signals you’ve built for humans don’t transfer to AI evaluation. Brand awareness, marketing spend, and persuasive copy become invisible when the evaluator is an algorithm.

The opportunity: algorithmic trust can be engineered systematically. Unlike human trust, which requires expensive long-term brand building, algorithmic trust can be constructed through deliberate infrastructure investment. The playing field resets, and first movers gain lasting advantage.

Why Trust Architecture Matters Now

The window for building trust architecture is narrow. Industry analysts project that LLM traffic will overtake traditional Google search by 2027 and that AI agents will mediate trillions of dollars in commerce by 2030. The infrastructure you build now determines your visibility and selectability in the agentic future.

Operators who wait face compounding disadvantage. Early movers establish algorithmic authority that becomes self-reinforcing. Their data gets used to train AI systems. Their structured information populates knowledge graphs. Their verified credentials become benchmarks against which competitors are measured. The competitive moat widens with every passing quarter.

This isn’t speculation. The transformation is already underway. Those who recognize it are building trust architecture while competitors focus on optimizing systems designed for human evaluation.

Components of Trust Architecture

Trust architecture comprises five interconnected layers. Each layer addresses a specific dimension of trust that matters in AI-mediated commerce. Together, they create the infrastructure that makes your business discoverable, verifiable, and selectable.

Layer 1: Data Integrity Infrastructure

Data integrity forms the foundation. Without clean, consistent, verifiable data, nothing else matters. AI systems trained on inconsistent data produce inconsistent outputs. Agents evaluating unreliable information downgrade confidence scores. The garbage-in-garbage-out principle applies with devastating force to algorithmic trust.

Single Source of Truth. Your organization’s data is currently scattered across platforms: CRM, marketing automation, ad platforms, analytics tools, lead distribution systems. Each platform has its own data model, its own definitions, its own retention policies. “Lead” means something different in your marketing automation platform than in your CRM. Conversion metrics don’t reconcile between Google and your internal systems.

This fragmentation is fatal in the AI context. Establish a data warehouse (Snowflake, BigQuery, Databricks, or similar) as the Single Source of Truth. All systems feed data into the warehouse. All analysis draws from the warehouse. When AI systems query your data, they receive consistent, reconciled information.

Data Quality Monitoring. Establish alerts for data quality issues: missing fields, impossible values, failed pipeline runs. Data quality degrades without active maintenance. You need systems that surface problems before they corrupt downstream analytics and before AI agents encounter inconsistencies that damage your trust scores.
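
As a rough illustration of the checks described above, here is a minimal Python sketch. The `LeadRecord` shape, the field names, and the 18-120 age range are assumptions made for the example, not a prescribed schema; a real pipeline would run equivalent rules inside the warehouse or an orchestration tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LeadRecord:
    # Hypothetical minimal lead shape; real schemas will differ.
    lead_id: str
    email: Optional[str]
    state: Optional[str]
    age: Optional[int]


def find_quality_issues(lead: LeadRecord) -> list[str]:
    """Return human-readable issues for one lead (empty list means clean)."""
    issues = []
    if not lead.email:
        issues.append("missing email")
    if not lead.state:
        issues.append("missing state")
    # "Impossible values": the 18-120 range is an assumed business rule.
    if lead.age is not None and not (18 <= lead.age <= 120):
        issues.append(f"impossible age: {lead.age}")
    return issues


def issue_rate(leads: list[LeadRecord]) -> float:
    """Share of leads with at least one issue; an alert could fire above a threshold."""
    if not leads:
        return 0.0
    flagged = sum(1 for lead in leads if find_quality_issues(lead))
    return flagged / len(leads)
```

Tracking `issue_rate` per pipeline run gives a single number to alert on, while `find_quality_issues` keeps the per-lead detail needed to fix the source.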

Identity Resolution. Prospects interact through multiple channels before converting. The person who clicked your ad, visited your site anonymously, then filled out a form might appear as three different records. Identity resolution stitches these together, creating unified views that enable accurate attribution and consistent data presentation.
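
One simple way to stitch records together is to link any two records that share an identifier and then collect the connected groups. The sketch below uses a union-find structure for that; the record shape (`id` plus a set of `keys` such as cookie, device, or email values) is an assumption for illustration, and production systems typically add fuzzy matching and confidence scoring on top.

```python
from collections import defaultdict


def resolve_identities(records: list[dict]) -> list[set[str]]:
    """Group record ids that share any identifier value.

    Each record looks like {"id": "r1", "keys": {"cookie:abc", "email:a@x.com"}}.
    This shape is hypothetical and chosen only for the example.
    """
    parent = {r["id"]: r["id"] for r in records}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps lookups short
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_seen: dict[str, str] = {}
    for r in records:
        for key in r["keys"]:
            if key in first_seen:
                union(first_seen[key], r["id"])  # shared identifier: same person
            else:
                first_seen[key] = r["id"]

    groups = defaultdict(set)
    for r in records:
        groups[find(r["id"])].add(r["id"])
    return list(groups.values())
```

In the ad click, anonymous visit, and form fill scenario, the click and visit share a cookie and the visit and form share a device id, so all three collapse into one group even though the click and the form have no identifier in common.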

Documentation Standards. Every data element should have clear provenance documentation: where it came from, when it was collected, under what consent, how it’s maintained. This documentation becomes essential when AI systems verify claims and when regulators audit practices.

Layer 2: Consent and Compliance Framework

Consent infrastructure protects both legal compliance and algorithmic trust. AI agents increasingly verify consent status before processing leads. Though the FCC’s one-to-one consent rule was vacated by the Eleventh Circuit in January 2025, industry practice and buyer requirements have established stricter standards that AI systems evaluate programmatically.

Consent Capture Mechanism. Many sophisticated buyers require consumers to give prior express written consent to each seller separately. Pre-checked boxes, bundled consent for multiple sellers, and comparison-shopping sites listing dozens of buyers without clear individual selection face increasing buyer rejection even without regulatory mandate. Your consent capture should reflect this granularity.

Consent Verification Infrastructure. TrustedForm certificates, Jornaya LeadID, and similar verification services document the consent moment. These certificates provide third-party verification that AI systems can evaluate. They demonstrate not just that consent was claimed, but that it was documented with cryptographic verification.

Consent Retrieval Systems. When challenged on consent validity, you need retrieval systems that can produce the complete audit trail within hours, not days. This includes the form as it appeared, the disclosures presented, the consumer’s affirmative action, the certificate documentation, and the chain of custody.

State-Specific Compliance. Mini-TCPA laws in Washington, Florida, and other states create additional requirements beyond federal baseline. California’s CCPA and its 2023 amendments add consent obligations for personal information processing. Your compliance framework must track these variations and ensure appropriate consent for each jurisdiction.

Layer 3: Transparency Mechanisms

Transparency builds both human and algorithmic trust. Humans respond to perceived honesty. Algorithms evaluate data completeness and consistency. Transparency mechanisms serve both audiences.

Pricing Transparency. Lead pricing varies by quality, geography, vertical, and buyer. Transparent pricing doesn’t mean publishing your rate card. It means consistent, logical pricing structures that can be explained and verified. Hidden fees, retroactive adjustments, and opaque billing destroy trust with both human partners and AI systems that detect inconsistency.

Quality Transparency. Define lead quality clearly. Document the validation steps each lead undergoes. Publish contact rates, return rates, and conversion metrics at granular levels. When AI agents evaluate lead quality, they compare stated quality against verifiable outcomes.

Process Transparency. Document how leads are generated, validated, routed, and delivered. Make these processes discoverable by AI systems through structured data and API documentation. Hidden processes create evaluation gaps that algorithms interpret negatively.

Outcome Transparency. Track and share downstream outcomes: contact rates, conversion rates, return rates, customer lifetime value. Our cohort analysis guide explains how to measure these effectively. This data feeds the feedback loops that help AI systems optimize toward actual value rather than stated quality.

Layer 4: Machine-Readable Trust Signals

AI agents evaluate trust through structured data. The trust signals that influence human decisions are invisible to algorithms unless they’re encoded in machine-readable formats.

Schema.org Markup. Implement comprehensive Schema.org markup across all digital properties: Organization schema (establishing corporate identity), Product schema (describing lead products), Review schema (documenting social proof), and LocalBusiness schema (verifying physical presence). This structured data makes your business discoverable by AI systems and lets them understand it in their native format.
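
To make the markup concrete, here is a minimal sketch that generates an Organization entry as JSON-LD and wraps it for embedding in a page. The company name and URLs in any call are placeholders; `name`, `url`, and `sameAs` are standard Schema.org properties, but which properties matter most for a given business is a judgment call this example does not settle.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str]) -> str:
    """Serialize a minimal Schema.org Organization as JSON-LD."""
    doc = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,  # links to official profiles that corroborate identity
    }
    return json.dumps(doc, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script element used to embed it in an HTML page."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'
```

Calling `script_tag(organization_jsonld("Example Leads LLC", "https://www.example.com", []))` yields a block that can be placed in a page's head. Product, Review, and LocalBusiness entries follow the same pattern with a different `@type` and property set.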

API Architecture. Build APIs that expose your capabilities to AI agents. When an agent queries “what insurance lead products do you offer in Texas?”, your API should provide structured responses that answer completely and consistently. The form-based capture model that served humans becomes insufficient when agents expect API interfaces.
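
A sketch of what a structured answer to that Texas question might look like, independent of any web framework. The catalog entries, field names, and response shape are all hypothetical; the point is only that the same query always returns the same complete, machine-parseable answer.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class LeadProduct:
    # Hypothetical product fields, for illustration only.
    product_id: str
    vertical: str
    states: tuple
    exclusive: bool


# Placeholder catalog; a real one would come from the data warehouse.
CATALOG = [
    LeadProduct("auto-shared", "auto_insurance", ("TX", "FL"), False),
    LeadProduct("auto-exclusive", "auto_insurance", ("TX",), True),
    LeadProduct("home-shared", "home_insurance", ("FL",), False),
]


def query_products(vertical: str, state: str) -> str:
    """Return a JSON document listing products offered for a vertical and state."""
    matches = [p for p in CATALOG if p.vertical == vertical and state in p.states]
    response = {
        "vertical": vertical,
        "state": state,
        "count": len(matches),
        "products": [asdict(p) for p in matches],
    }
    return json.dumps(response, sort_keys=True)
```

Returning an explicit `count` of zero with an empty `products` list, rather than an error, lets an agent distinguish "not offered" from "request failed".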

Credential Documentation. Document licenses, certifications, partnerships, and verifications in machine-readable formats. NAICS codes, state license numbers, industry certifications, and platform partnerships should be discoverable through structured data and API responses.

Performance Metrics. Expose performance metrics through structured formats. Average contact rate by product type. Return rates by time period. Customer satisfaction scores. AI agents evaluate these metrics when making selection decisions. Hidden metrics are assumed to be bad metrics.

Layer 5: Algorithmic Trust Signals

Beyond machine readability, certain signals specifically influence AI agent decision-making. These signals address how algorithms evaluate trustworthiness rather than how they access information.

Historical Performance Data. AI systems weight historical performance heavily. Consistent performance over time builds algorithmic trust. Volatile performance damages it. Even if your average metrics are strong, high variance suggests unreliability that agents factor into selection decisions.
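
The point about variance can be made concrete with the coefficient of variation (standard deviation divided by mean). This is one possible way to quantify consistency, chosen here for illustration; it is not a claim about how any particular AI agent scores vendors, and the sample contact rates used with it are invented.

```python
from statistics import mean, pstdev


def coefficient_of_variation(rates: list[float]) -> float:
    """Relative volatility of a metric series: population std dev / mean."""
    avg = mean(rates)
    if avg == 0:
        raise ValueError("mean is zero; coefficient of variation is undefined")
    return pstdev(rates) / avg
```

Two vendors with monthly contact rates of `[0.50, 0.51, 0.49, 0.50]` and `[0.80, 0.20, 0.75, 0.25]` share the same 50% average, yet the second series is far more volatile under this measure, which is the kind of gap the paragraph above warns about.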

External Verification. Third-party verification from recognized sources carries weight in algorithmic evaluation. Better Business Bureau ratings, industry association memberships, published case studies, and verified reviews all contribute to external authority that AI systems can assess.

Regulatory Compliance Record. Clean compliance history signals trustworthiness. AI systems can evaluate public enforcement actions, litigation history, and regulatory filings. A history of violations, even resolved ones, creates negative signals that persist in training data.

Partner Network Quality. The quality of your partnerships reflects on your trustworthiness. AI systems evaluate the companies you work with. Associations with low-quality or non-compliant operators create negative signals by association.

Transparency Requirements in the AI Era

The Eleventh Circuit’s January 2025 decision vacating the FCC’s one-to-one consent rule has not slowed the trend: transparency requirements for lead generation continue evolving. Regulatory bodies worldwide are implementing similar frameworks, and AI systems are developing their own evaluation criteria that exceed regulatory minimums.

Transparency Standards in Practice

Consent Best Practices. Many sophisticated buyers require consumers to give prior express written consent to each seller separately. This means separate consent flows, clear identification of each recipient, and verifiable documentation of each consent action. Comparison shopping sites listing multiple buyers increasingly require individual selection for each to satisfy buyer requirements.

Best practices avoid consent mechanisms that obscure the identity of message senders or the nature of communications. Hyperlinked disclosures buried in dense terms and conditions face buyer rejection. Consent should be “clearly and conspicuously” disclosed regardless of regulatory minimums.

State Privacy Laws. California’s CCPA, as amended by CPRA in 2023, requires businesses to disclose personal information selling and sharing practices. Consumers have the right to opt out of such sales. Similar laws in Virginia, Colorado, Connecticut, and other states create overlapping requirements.

These laws require transparency about data practices that many lead generators have historically obscured. The “we may share your information with partners” language that satisfied previous requirements now fails to meet explicit disclosure mandates.

Financial Services Regulations. For financial verticals like mortgage and insurance, sector-specific regulations add transparency requirements. RESPA prohibits certain referral fee arrangements in mortgage transactions. Insurance licensing requirements mandate disclosure of agent relationships. These vertical-specific requirements layer on top of general transparency mandates.

AI-Driven Transparency Expectations

AI systems evaluate transparency beyond regulatory requirements. They assess data completeness, consistency, and logical coherence. Gaps in transparency create evaluation penalties even when they don’t violate regulations.

Pricing Logic Transparency. AI agents evaluating lead sources compare pricing structures across vendors. Opaque pricing, unexplained variations, or illogical pricing relationships raise algorithmic red flags. If your Texas auto insurance leads cost $45 but your Florida leads cost $95 without clear explanation, an AI agent might flag the inconsistency.
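
A vendor can run the same kind of check on its own rate card before an agent does. The sketch below flags any state priced well above the cheapest state when no explanation is on file. The 1.5x threshold and the idea of an `explanations` lookup are assumptions for the example, not a known agent rule.

```python
def unexplained_price_gaps(
    prices: dict[str, float],
    explanations: dict[str, str],
    max_ratio: float = 1.5,
) -> list[str]:
    """Return states priced above max_ratio times the cheapest state
    that have no documented explanation."""
    if not prices:
        return []
    baseline = min(prices.values())
    return sorted(
        state
        for state, price in prices.items()
        if price / baseline > max_ratio and state not in explanations
    )
```

With Texas at $45 and Florida at $95, Florida is flagged (the ratio is roughly 2.1) until a documented reason for the difference is recorded alongside the price.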

Process Transparency. How do you generate leads? What validation steps do you apply? How do you route and deliver? AI systems increasingly expect this information to be discoverable. Companies that provide clear process documentation rank higher in algorithmic evaluation than those with black-box operations.

Outcome Transparency. What happens after lead delivery? What contact rates, conversion rates, and return rates characterize your leads? AI systems trained on industry benchmarks compare your stated outcomes against expected ranges. Outlier claims without supporting evidence damage credibility.

Building Transparency Infrastructure

Meeting transparency requirements demands infrastructure investment, not just policy changes.

Consent Management Platforms. Implement consent management that captures, documents, and retrieves consent at granular levels. These platforms should track consent by recipient, by channel, by purpose, with timestamps and verification certificates. Integration with lead distribution ensures consent flows through the entire lifecycle.
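
A minimal sketch of consent tracked at that granularity, assuming one record per consumer, recipient, channel, and purpose. The field names and the `certificate_id` reference are hypothetical; a commercial consent management platform will have its own data model.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ConsentRecord:
    consumer_id: str
    recipient: str       # the specific seller the consumer selected
    channel: str         # e.g. "call", "sms", "email"
    purpose: str         # e.g. "auto_insurance_quote"
    granted_at: datetime
    certificate_id: str  # reference to a third-party certificate (placeholder)
    revoked_at: Optional[datetime] = None


def has_valid_consent(
    records: list[ConsentRecord],
    consumer_id: str,
    recipient: str,
    channel: str,
    purpose: str,
    at: datetime,
) -> bool:
    """True if a matching, unrevoked consent existed at time `at`."""
    wanted = (consumer_id, recipient, channel, purpose)
    for r in records:
        if (r.consumer_id, r.recipient, r.channel, r.purpose) != wanted:
            continue
        if r.granted_at <= at and (r.revoked_at is None or at < r.revoked_at):
            return True
    return False
```

Because the lookup matches on recipient and channel as well as consumer, consent given to one seller for calls does not silently extend to another seller or to SMS.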

Disclosure Frameworks. Build disclosure frameworks that adapt to regulatory requirements across jurisdictions. Rather than maintaining separate disclosures for each state, implement dynamic disclosure systems that apply appropriate requirements based on consumer location and vertical.

Data Subject Access Request Processing. Privacy laws grant consumers rights to access, correct, and delete their data. Build systems that process these requests efficiently. The 45-day response requirement under CCPA means manual processing becomes impractical at scale.
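
Deadline tracking is the simplest piece to automate. The sketch below computes the due date from the 45-day window mentioned above; it deliberately ignores extensions, verification steps, and other statutes' timelines, which a real request tracker would need to model.

```python
from datetime import date, timedelta

# The 45-day window comes from the text above; extensions are not modeled.
RESPONSE_WINDOW_DAYS = 45


def response_due(received: date) -> date:
    """Date by which a request received on `received` should be answered."""
    return received + timedelta(days=RESPONSE_WINDOW_DAYS)


def days_remaining(received: date, today: date) -> int:
    """Days left before the deadline; negative means overdue."""
    return (response_due(received) - today).days
```

Sorting open requests by `days_remaining` gives a work queue in which the requests closest to breach surface first.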

Audit Trail Infrastructure. Maintain complete audit trails of data collection, processing, sharing, and deletion. These trails serve multiple purposes: regulatory compliance, litigation defense, and AI verification. When an AI system queries your data practices, the audit trail provides verifiable answers.
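
One common way to make an audit trail verifiable is to chain entries by hash, so that altering any earlier entry invalidates everything after it. The article does not prescribe this technique; the sketch below is an illustrative option, and the event fields used with it are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(trail: list[dict], event: dict) -> dict:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS_HASH
    entry = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    trail.append(entry)
    return entry


def verify(trail: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in trail:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True
```

Events must be JSON-serializable for this to work. Publishing or escrowing the latest hash periodically would let an outside party confirm that the history has not been rewritten, though that step is outside this sketch.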

AI Governance for Lead Generation

AI is simultaneously the evaluator of trust and a tool requiring governance. The AI systems you deploy for lead scoring, validation, routing, and optimization create their own trust implications. Governance frameworks ensure these systems enhance rather than undermine your trust architecture.

The Governance Challenge

AI systems make decisions that affect consumers, partners, and regulatory standing. A lead scoring model might discriminate against protected classes without human awareness. A fraud detection system might create false positives that deny legitimate consumers. An optimization algorithm might push toward practices that maximize short-term metrics while damaging long-term trust.

These risks aren’t hypothetical. Research from MIT indicates that 95% of generative AI pilots fail to achieve rapid revenue acceleration. S&P Global documented that 42% of companies abandoned most AI initiatives in 2025, up from 17% in 2024. RAND Corporation research shows AI project failure rates exceeding 80%, double that of non-AI IT projects.

The failure modes often involve governance gaps: insufficient oversight, inadequate testing, missing feedback loops, and unclear accountability. Building governance infrastructure prevents these failures while ensuring AI deployment strengthens rather than undermines trust.

Governance Framework Components

Model Documentation. Every AI model in production should have complete documentation: training data sources, feature engineering, performance metrics, known limitations, and potential bias vectors. This documentation serves internal oversight and increasingly serves external audit requirements.

Testing Protocols. Before deployment, AI models require rigorous testing: performance validation against holdout data, bias testing across protected classes, stress testing under edge cases, and A/B testing against existing systems. Testing should be documented and repeatable.

Monitoring Infrastructure. Production AI systems require ongoing monitoring: performance degradation detection, drift identification, anomaly alerting, and outcome tracking. A model that performed well at deployment may deteriorate over time as data patterns shift.
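
Drift identification is often approximated with the population stability index (PSI), which compares how a score or feature is distributed across bins at deployment time versus now. The sketch below is one such approach under stated assumptions: inputs are per-bin proportions that each sum to 1, and any alert threshold is something to tune rather than a fixed standard.

```python
import math


def population_stability_index(expected: list[float], actual: list[float]) -> float:
    """PSI between two binned distributions given as proportions.

    Computes sum((actual - expected) * ln(actual / expected)) over bins.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    eps = 1e-6  # floor to avoid log(0) on empty bins
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total
```

A PSI of zero means the distributions match; larger values mean the population being scored has moved away from the one the model was validated on, which is a prompt to re-test before trusting its outputs.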

Human Oversight Requirements. Define which decisions require human review. High-stakes decisions like rejecting leads, flagging fraud, or adjusting pricing should have human oversight mechanisms. Define escalation paths for edge cases the AI cannot confidently handle.

Feedback Loops. Build systems that learn from outcomes. When a lead flagged as fraudulent turns out to be legitimate, that feedback should improve the model. When a high-scored lead fails to convert, that outcome should inform future scoring. Closed-loop systems improve continuously.

Audit Capabilities. Maintain the ability to audit AI decisions retrospectively. When a consumer, partner, or regulator asks why a particular decision was made, you should be able to provide clear explanation. This requires logging decision inputs, outputs, and the model version in use.
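
A minimal sketch of that logging requirement, assuming an append-only list of JSON lines stands in for durable storage. The function names, the `lead_id` key, and the version string format are illustrative choices, not an established convention.

```python
import json
from datetime import datetime, timezone


def record_decision(
    log: list[str],
    lead_id: str,
    model_version: str,
    inputs: dict,
    output: dict,
) -> dict:
    """Append one model decision to an append-only log as a JSON line."""
    entry = {
        "lead_id": lead_id,
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }
    log.append(json.dumps(entry, sort_keys=True))
    return entry


def decisions_for(log: list[str], lead_id: str) -> list[dict]:
    """Return every logged decision for a lead, oldest first."""
    return [e for e in map(json.loads, log) if e["lead_id"] == lead_id]
```

Storing the exact inputs next to the model version is what makes a later explanation possible: the same version can be re-run on the same inputs to reproduce the decision.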

Ethical Considerations

Beyond compliance, ethical AI deployment builds trust with consumers, partners, and the broader ecosystem.

Fairness. AI systems should not discriminate against protected classes. This requires testing for disparate impact across race, gender, age, disability status, and other protected characteristics. Fairness isn’t just legal compliance; it’s trust infrastructure.
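
A basic disparate impact test compares acceptance rates between groups. The sketch below computes the ratio of the lowest group rate to the highest; comparing that ratio to 0.8 (the "four-fifths" heuristic from U.S. employment practice) is an assumption borrowed for illustration, and the right threshold and legal standard for lead scoring should come from counsel.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to accepted / total. `outcomes` maps group -> (accepted, total)."""
    return {group: accepted / total for group, (accepted, total) in outcomes.items()}


def disparate_impact_ratio(outcomes: dict[str, tuple[int, int]]) -> float:
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any accepted outcomes")
    return min(rates.values()) / highest
```

If one group is accepted 80 times out of 100 and another 60 times out of 100, the ratio is 0.75, which would fall below a 0.8 cutoff and warrant a closer look at the model's features.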

Transparency. When AI makes decisions affecting consumers, appropriate transparency is required. This doesn’t mean explaining neural network architectures. It means providing meaningful information about how decisions are made and what factors influence outcomes.

Privacy. AI systems process sensitive personal information. Governance must ensure this processing respects privacy principles: data minimization, purpose limitation, storage limitation, and security requirements. Privacy-preserving AI techniques like federated learning and differential privacy may be appropriate for sensitive applications.

Accountability. Clear accountability structures ensure someone is responsible for AI outcomes. When an AI system causes harm, there should be clear ownership and remediation paths. Diffuse accountability allows problems to persist.

Implementing Governance

Effective governance requires organizational commitment, not just policies.

Executive Sponsorship. AI governance requires executive-level sponsorship to overcome resistance from teams optimizing for speed or cost. Without senior leadership commitment, governance becomes checkbox compliance rather than substantive protection.

Cross-Functional Oversight. Governance committees should include technical, legal, compliance, and business perspectives. AI decisions that seem optimal technically may create legal exposure. Decisions that seem legally safe may be technically impractical. Cross-functional oversight catches issues that single-perspective review misses.

Training and Culture. Teams deploying AI need training on governance requirements and the reasoning behind them. Governance perceived as bureaucratic overhead gets circumvented. Governance understood as trust protection gets embraced.

Continuous Improvement. Governance frameworks should evolve as AI capabilities, regulatory requirements, and best practices develop. Static governance becomes obsolete. Build review cycles that update requirements based on emerging risks and opportunities.

Ethical Lead Generation: Beyond Compliance

Compliance sets the floor. Trust architecture requires building well above it. Ethical lead generation creates sustainable competitive advantage that compliance-only approaches cannot match.

The Compliance Trap

Many practitioners treat compliance as the goal rather than the baseline. They ask “what’s the minimum we need to do to avoid litigation?” rather than “what practices build lasting trust?”

This approach creates several problems:

Regulatory Lag. Regulations follow industry practices, often by years. Practices that are currently compliant may become non-compliant as regulators catch up. Operating at the compliance floor means constant disruption as requirements tighten.

Consumer Perception. Consumers don’t distinguish between technically compliant and genuinely trustworthy. Practices that meet legal requirements but violate consumer expectations damage trust regardless of compliance status.

Partner Quality. High-quality buyers increasingly evaluate supplier ethics, not just compliance. Operating at compliance minimum attracts bottom-tier buyers while premium buyers seek ethical partners.

AI Evaluation. AI systems increasingly evaluate ethical dimensions beyond compliance. Consumer complaint patterns, review sentiment, and outcome data all contribute to algorithmic trust scores that mere compliance doesn’t address.

Ethical Standards That Build Trust

Consumer-Centric Consent. Beyond one-to-one consent requirements, design consent flows that consumers actually understand. Use plain language. Present choices clearly. Avoid dark patterns that technically satisfy requirements while confusing consumers. Ethical consent builds trust; manipulative consent destroys it.

Contact Frequency Management. Compliant contact doesn’t mean unlimited contact. Consumers who receive multiple calls daily from multiple buyers become hostile regardless of whether each call was individually consented. Implement frequency caps that respect consumer tolerance, not just legal limits.
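
A frequency cap can be as simple as counting recent contacts across all buyers before releasing another one. In the sketch below the limit of three contacts per rolling day is an arbitrary placeholder, not a recommended or legal figure.

```python
from datetime import datetime, timedelta


def may_contact(
    previous_contacts: list[datetime],
    now: datetime,
    max_contacts: int = 3,
    window: timedelta = timedelta(days=1),
) -> bool:
    """True if another contact stays within the cap for the rolling window.

    `previous_contacts` should include contacts from every buyer, since the
    consumer experiences them as one stream.
    """
    recent = [t for t in previous_contacts if now - window < t <= now]
    return len(recent) < max_contacts
```

Counting across buyers rather than per buyer is the design choice that matters here: each buyer may be within its own limits while the consumer is still being called many times a day.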

Quality Commitment. Don’t sell leads you know are low quality. If validation suggests a lead is unlikely to be contactable, don’t pass it through to buyers who will return it anyway. Short-term revenue from questionable leads damages long-term relationships and algorithmic trust scores.

Outcome Responsibility. Take responsibility for downstream outcomes. If your leads consistently result in consumer complaints, investigate why. If buyers report contact difficulties, diagnose the problem. Outcome responsibility builds trust with partners while generating data that improves operations.

Transparent Pricing. Price leads based on actual value delivered, not maximum extraction. Volume discounts, quality-based pricing, and outcome-based adjustments align your interests with buyer interests. Adversarial pricing damages relationships and invites alternative sourcing.

Operationalizing Ethics

Ethical principles require operational implementation.

Ethics Review Process. Before launching new campaigns, products, or practices, conduct ethics review. Would this practice embarrass us if exposed publicly? Would we be comfortable if our consumers understood exactly what we’re doing? If the answer is no, reconsider.

Consumer Feedback Integration. Build systems that capture and analyze consumer feedback. Complaints, opt-outs, and satisfaction ratings provide early warning of trust problems. Integrate this feedback into operational decisions.

Partner Feedback Loops. Regularly survey buyer partners on quality, ethics, and relationship satisfaction. Their perspective identifies issues invisible from your operational vantage point.

Employee Ethics Training. Train employees on ethical standards and the reasoning behind them. Employees who understand why certain practices are prohibited make better judgment calls in ambiguous situations.

Incentive Alignment. Examine whether compensation structures incentivize ethical behavior. If commissions reward volume without quality adjustment, you’re incentivizing practices that damage trust. Align incentives with long-term value creation.

Building Sustainable Competitive Advantage

Trust architecture creates competitive advantage that compounds over time. Unlike advantages based on technology or tactics, which competitors can copy, trust-based advantage becomes self-reinforcing.

The Compounding Effect

Trust compounds in several dimensions:

Data Advantage. High-trust operations attract better data through voluntary sharing, longer relationships, and richer feedback loops. Better data enables better AI models, which improve outcomes, which build more trust. The virtuous cycle accelerates over time.

Partnership Quality. High-trust operators attract high-quality partners. Premium buyers prefer ethical suppliers. Premium publishers prefer compliant buyers. Partnership quality creates operational advantages that further differentiate from lower-trust competitors.

Algorithmic Authority. AI systems increasingly weight historical performance and external verification. Early trust builders accumulate algorithmic authority that newcomers cannot quickly replicate. The training data that shapes AI evaluation includes years of performance history.

Regulatory Resilience. High-trust operations adapt more easily to regulatory changes. If you’re already operating above compliance minimums, new requirements create less disruption. Competitors operating at the floor face constant adjustment costs.

Crisis Resistance. Trust built over time provides resilience during crises. When something goes wrong, and something always goes wrong, established trust creates grace periods for resolution. Low-trust operators face immediate escalation.

Strategic Investment Priorities

Building sustainable advantage requires strategic investment across multiple horizons.

Phase 1: Data Foundation (2026-2027). Establish data infrastructure that supports trust architecture: data warehouse as Single Source of Truth, server-side tracking for signal recovery, consent management platform, and first-party data strategy. Budget allocation: 30% data infrastructure, 20% server-side tracking, 25% compliance technology, 15% analytics, 10% emerging technology testing.
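
The Phase 1 percentages can be turned into dollar figures with a few lines of arithmetic. The category keys below are paraphrased from the allocation above, and any total budget passed in is a hypothetical input.

```python
# Percentages taken from the Phase 1 allocation above.
PHASE_1_ALLOCATION = {
    "data_infrastructure": 30,
    "server_side_tracking": 20,
    "compliance_technology": 25,
    "analytics": 15,
    "emerging_technology_testing": 10,
}


def allocate(total_budget: int, split: dict[str, int] = PHASE_1_ALLOCATION) -> dict[str, int]:
    """Split a whole-dollar budget by percentage (integer division, so
    totals that are not multiples of 100 may leave a small remainder)."""
    if sum(split.values()) != 100:
        raise ValueError("allocation percentages must sum to 100")
    return {category: total_budget * pct // 100 for category, pct in split.items()}
```

For a hypothetical $200,000 trust-architecture budget, this gives $60,000 for data infrastructure, $40,000 for server-side tracking, $50,000 for compliance technology, $30,000 for analytics, and $20,000 for emerging technology testing.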

Phase 2: Cognitive Layer (2027-2028). Build AI capabilities on the data foundation: AI-powered lead scoring, predictive analytics, dynamic pricing optimization, and fraud detection. Implementation should follow the governance frameworks described in the AI governance section, which should be in place by the end of Phase 1. Budget allocation shifts toward AI tools while maintaining infrastructure investment.

Phase 3: Agentic Capability (2028-2030). Prepare for AI-mediated commerce: API architecture for agent queries, machine-readable trust signals, protocol support for emerging agent standards, and GEO optimization for AI discovery. This phase depends entirely on successful Phase 1 and 2 execution.

Attempting Phase 3 without Phase 1 foundation is the primary failure mode. Organizations that skip foundational work end up rebuilding it later at higher cost under competitive pressure.

Measurement and Optimization

Trust architecture requires measurement to optimize.

Trust Metrics. Develop metrics that track trust dimensions: consent completeness rates, consumer complaint ratios, partner satisfaction scores, regulatory inquiry frequency, and AI visibility indicators. Track these metrics over time to identify trends and opportunities.

Competitive Benchmarking. Monitor competitor trust signals: regulatory actions, industry reputation, partner quality, and AI visibility. Competitive position matters as much as absolute performance.

ROI Analysis. Quantify the return on trust investment: reduced litigation costs, improved partner terms, higher lead pricing, and better buyer retention. Trust investment should generate measurable returns that justify continued allocation.

Continuous Improvement. Use measurement to drive improvement. Identify weak dimensions and target investment accordingly. Trust architecture is never complete; it requires ongoing refinement as requirements and competitive dynamics evolve.

Implementation Roadmap

Trust architecture implementation follows a logical sequence. Each phase builds on previous phases, creating cumulative capability.

Immediate Actions (30 Days)

Audit Current State. Assess existing trust infrastructure across all five layers. Where are the gaps? What’s working? What’s compliant but suboptimal? This audit establishes the baseline for improvement.

Define Metrics. Establish the trust metrics you’ll track: consent rates, complaint ratios, partner satisfaction, regulatory compliance status, and AI visibility indicators. You can’t improve what you don’t measure.

Identify Quick Wins. Some trust improvements require minimal investment: updating disclosures, fixing consent language, documenting processes. Capture these wins while planning larger initiatives.

Assign Ownership. Trust architecture requires clear ownership. Designate a trust architecture leader with cross-functional authority. Without clear ownership, trust becomes everyone’s responsibility and no one’s priority.

Near-Term Initiatives (90 Days)

Consent Infrastructure Upgrade. Implement or upgrade consent management to meet buyer-driven one-to-one consent requirements with complete audit trails. This is the highest-risk gap in most operations.
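A minimal sketch of what per-seller consent capture with an audit trail might look like follows. It assumes an in-memory store and a placeholder certificate ID from whatever verification service you use. The class names, field names, and sample values are hypothetical, and a production system would need durable, access-controlled storage.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentRecord:
    """One consumer's consent to one named seller. Field names are hypothetical."""
    lead_id: str
    seller_name: str
    disclosure_text: str
    captured_at: str           # ISO 8601 timestamp in UTC
    verification_cert_id: str  # placeholder ID from a verification service

    def fingerprint(self) -> str:
        """SHA-256 hash of the record contents, for tamper evidence."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


class ConsentLedger:
    """Append-only, in-memory ledger (illustration only)."""

    def __init__(self) -> None:
        self._records: list[ConsentRecord] = []

    def capture(self, lead_id: str, seller_name: str, disclosure_text: str,
                verification_cert_id: str) -> ConsentRecord:
        record = ConsentRecord(
            lead_id=lead_id,
            seller_name=seller_name,
            disclosure_text=disclosure_text,
            captured_at=datetime.now(timezone.utc).isoformat(),
            verification_cert_id=verification_cert_id,
        )
        self._records.append(record)
        return record

    def has_consent(self, lead_id: str, seller_name: str) -> bool:
        """True only if this lead consented to this specific seller."""
        return any(
            r.lead_id == lead_id and r.seller_name == seller_name
            for r in self._records
        )

    def audit_trail(self, lead_id: str) -> list[dict]:
        """Every consent record for a lead, each with its fingerprint."""
        return [
            {**asdict(r), "fingerprint": r.fingerprint()}
            for r in self._records
            if r.lead_id == lead_id
        ]


ledger = ConsentLedger()
ledger.capture("lead-001", "Acme Insurance",
               "I agree to be contacted by Acme Insurance.", "cert-abc123")
print(ledger.has_consent("lead-001", "Acme Insurance"))
print(ledger.has_consent("lead-001", "Other Insurer"))
```

The key design choice is that consent is keyed by lead and seller together, so consent given to one seller never implies consent to another.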

Schema.org Implementation. Implement comprehensive structured data markup across digital properties. This relatively low-cost initiative creates immediate AI visibility improvements.
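As a starting point, the sketch below generates JSON-LD for a Schema.org `Organization` and wraps it in the script tag that pages conventionally use for structured data. The company name, URLs, and phone number are placeholders, and which properties matter most for your vertical is a judgment call this example does not settle.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str],
                        support_phone: str) -> str:
    """Build JSON-LD for a Schema.org Organization with one contact point."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,
        "contactPoint": {
            "@type": "ContactPoint",
            "contactType": "customer support",
            "telephone": support_phone,
        },
    }
    return json.dumps(data, indent=2)


def as_script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script element used to embed it in HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


# Placeholder values for illustration.
markup = organization_jsonld(
    name="Example Leads Co.",
    url="https://www.example.com",
    same_as=["https://www.linkedin.com/company/example-leads"],
    support_phone="+1-555-0100",
)
print(as_script_tag(markup))
```

Generating the markup from one function, rather than hand-editing it per page, keeps the structured data consistent across properties.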

Process Documentation. Document lead generation, validation, routing, and delivery processes. This documentation serves multiple purposes: operational consistency, training, audit response, and AI transparency.

Partner Feedback Program. Establish systematic partner feedback collection. Monthly surveys or quarterly reviews provide insight into trust perceptions from your most important stakeholders.

Medium-Term Projects (6-12 Months)

Data Warehouse Implementation. Establish Single Source of Truth infrastructure. This foundation enables the advanced analytics and AI capabilities in subsequent phases.

Server-Side Tracking Deployment. Migrate tracking infrastructure to recover 20-40% of lost conversion signals. The improved data quality strengthens all trust measurement capabilities.

Governance Framework Development. Build AI governance infrastructure: documentation requirements, testing protocols, monitoring systems, and oversight structures. This framework prepares for cognitive layer investments.

API Architecture Planning. Design API architecture that will serve AI agent queries. Even if full implementation waits for Phase 3, architectural planning should begin now.
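Architectural planning can start with the shape of the response. The sketch below shows one hypothetical payload an agent-facing endpoint might return. No agent protocol is assumed here: the field names, the `schema_version` value, and the `handle_agent_query` function are all invented for illustration.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class TrustSignalResponse:
    """Hypothetical payload for an agent query. Not based on any standard."""
    vendor_name: str
    licensed_states: list[str]
    consent_model: str
    consent_completeness_rate: float
    complaints_per_thousand_leads: float
    schema_version: str = "0.1-draft"


def handle_agent_query(vendor: TrustSignalResponse, requested_state: str) -> str:
    """Return JSON an agent can parse directly, with no HTML scraping."""
    body = asdict(vendor)
    body["serves_requested_state"] = requested_state in vendor.licensed_states
    return json.dumps(body, sort_keys=True)


# Placeholder vendor profile for illustration.
vendor = TrustSignalResponse(
    vendor_name="Example Leads Co.",
    licensed_states=["TX", "FL", "OH"],
    consent_model="one-to-one",
    consent_completeness_rate=0.97,
    complaints_per_thousand_leads=1.5,
)
print(handle_agent_query(vendor, "TX"))
```

Versioning the payload from the first draft lets you change fields later without breaking whatever agents already consume it.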

Long-Term Transformation (12-24 Months)

AI Capability Deployment. Implement AI-powered scoring, fraud detection, and optimization within established governance frameworks.

Clean Room Pilots. Launch data clean room partnerships with key partners. Learn the capabilities and limitations before broader deployment.

Agent-Ready Infrastructure. Build the interfaces, protocols, and data structures that AI agents will require for commercial interaction.

Continuous Optimization. Trust architecture is never complete. Establish continuous improvement cycles that refine capabilities as requirements and competitive dynamics evolve.

Frequently Asked Questions

What is trust architecture and why does it matter for lead generation?

Trust architecture is the infrastructure that makes your business trustworthy to both human prospects and the AI agents increasingly acting on their behalf. It encompasses data integrity, consent management, transparency mechanisms, machine-readable trust signals, and algorithmic credibility. Trust architecture matters because AI-mediated commerce is projected to reach $3-5 trillion globally by 2030. AI agents evaluate trust through structured data, verified credentials, and historical performance rather than brand awareness or persuasive marketing. Without trust architecture, you become invisible to algorithmic evaluation even as agent-mediated commerce grows.

How does AI change the way trust is established in lead generation?

AI evaluates trust differently than humans. Humans respond to brand familiarity, emotional appeals, social proof, and persuasive design. AI agents process structured data, verify credentials, compare parameters, and select based on explicit criteria. Machine-readable trust signals like Schema.org markup, API documentation, and verified performance metrics replace the psychology-based signals that influence humans. This shift means traditional marketing investments in brand building and persuasive content become less effective as AI agents mediate more commercial decisions.

What are the key components of trust architecture?

Trust architecture comprises five layers: data integrity infrastructure (Single Source of Truth, data quality, identity resolution), consent and compliance framework (granular consent capture, verification, retrieval systems), transparency mechanisms (pricing, quality, process, and outcome transparency), machine-readable trust signals (Schema.org markup, APIs, credential documentation), and algorithmic trust signals (historical performance, external verification, compliance record). Each layer addresses a specific dimension of trust that matters in AI-mediated commerce.

How do consent standards affect trust architecture?

Though the FCC’s one-to-one consent rule was vacated by the Eleventh Circuit in January 2025, many sophisticated buyers still require consumers to give prior express written consent to each seller separately. This creates granular consent requirements that AI systems can evaluate programmatically. Your consent infrastructure must capture consent by recipient, document the consent moment with verification certificates, and maintain retrieval systems that can produce complete audit trails. Weak consent becomes both a litigation risk and an algorithmic trust deficit, as AI agents increasingly verify consent status before processing leads.

What is Generative Engine Optimization and why does it matter?

Generative Engine Optimization (GEO) is the practice of optimizing content for AI citation rather than traditional search ranking. As discovery shifts from Google to AI platforms, being included in AI-generated answers becomes as important as search ranking. LLMs typically cite 2-7 domains per response, far fewer than Google’s 10+ search results. GEO requires structuring content for extraction, including citable facts, building external authority, and implementing structured data. Industry projections suggest LLM traffic will overtake traditional Google search by end of 2027, making GEO increasingly critical.

How should lead generators approach AI governance?

AI governance requires documentation of all production models (training data, features, limitations, bias vectors), testing protocols before deployment (performance validation, bias testing, stress testing), monitoring infrastructure for production systems (performance degradation, drift detection, anomaly alerting), human oversight requirements for high-stakes decisions, feedback loops that enable continuous learning, and audit capabilities for retrospective decision explanation. Research shows AI project failure rates exceeding 80%, often due to governance gaps. Effective governance prevents failures while ensuring AI deployment strengthens rather than undermines trust.

What distinguishes ethical lead generation from mere compliance?

Compliance sets the floor. Ethical lead generation builds well above it by designing consent flows consumers actually understand, implementing contact frequency caps that respect consumer tolerance rather than just legal limits, refusing to sell leads known to be low quality, taking responsibility for downstream outcomes, and pricing based on value delivered rather than maximum extraction. Ethical practices build sustainable competitive advantage because they create trust with consumers, attract premium partners, improve algorithmic evaluation, and provide regulatory resilience as requirements tighten.

How does trust architecture create sustainable competitive advantage?

Trust architecture creates compounding advantage through data accumulation (high-trust operations attract better data through voluntary sharing and richer feedback loops), partnership quality (premium buyers and publishers prefer ethical partners), algorithmic authority (AI systems weight historical performance that newcomers cannot quickly replicate), regulatory resilience (operating above compliance minimum reduces disruption from new requirements), and crisis resistance (established trust provides grace periods when problems occur). Unlike tactical advantages that competitors can copy, trust-based advantage becomes self-reinforcing over time.

What’s the relationship between first-party data and trust architecture?

First-party data, information you collect directly from prospects and customers, becomes the most reliable foundation for targeting, personalization, and AI training as third-party data degrades. First-party data strategy requires value exchange design (offering benefits in return for information), progressive profiling (collecting data over time rather than all at once), zero-party data capture (information prospects explicitly share), and data activation infrastructure (connecting the warehouse to activation platforms). First-party data is more trustworthy to AI evaluation because its provenance is clear and verifiable.

How should lead generators prioritize trust architecture investments?

Follow a phased approach. Phase 1 (2026-2027) establishes the data foundation: warehouse as Single Source of Truth, server-side tracking, consent management, and first-party data strategy. Phase 2 (2027-2028) builds the cognitive layer: AI-powered lead scoring, predictive analytics, dynamic pricing optimization, and fraud detection, all deployed within established governance frameworks. Phase 3 (2028-2030) develops agentic capability: API architecture for agent queries, machine-readable trust signals, and protocol support. Attempting Phase 3 without the Phase 1 foundation is the primary failure mode. The sequence matters because each phase enables the next.

Key Takeaways

- Trust architecture is the infrastructure that makes your business discoverable, verifiable, and selectable by both human prospects and AI agents. Without it, you become invisible as AI-mediated commerce grows toward $3-5 trillion globally by 2030.

- AI evaluates trust differently than humans. Algorithms process structured data, verify credentials, and compare parameters rather than responding to brand awareness or persuasive marketing. The psychology-based trust signals you built for humans don’t transfer to algorithmic evaluation.

- Trust architecture comprises five layers: data integrity infrastructure, consent and compliance framework, transparency mechanisms, machine-readable trust signals, and algorithmic trust signals. Each layer addresses a specific dimension of trust that matters in AI-mediated commerce.

- Though the FCC’s one-to-one consent rule was vacated by the Eleventh Circuit in January 2025, buyer-driven one-to-one consent requirements create explicit, granular standards that AI systems can evaluate programmatically. Consent infrastructure must capture, verify, and retrieve consent at the individual recipient level with complete audit trails.

- AI governance is essential because AI systems make decisions affecting consumers, partners, and regulatory standing. Governance requires model documentation, testing protocols, monitoring infrastructure, human oversight, feedback loops, and audit capabilities.

- Ethical lead generation builds above compliance minimums by designing consent consumers understand, implementing frequency caps that respect tolerance, refusing to sell low-quality leads, taking outcome responsibility, and pricing based on value delivered.

- Trust architecture creates compounding competitive advantage through data accumulation, partnership quality, algorithmic authority, regulatory resilience, and crisis resistance. Unlike tactical advantages, trust-based advantage becomes self-reinforcing over time.

- Implementation follows a logical sequence: data foundation (Phase 1), cognitive layer (Phase 2), agentic capability (Phase 3). Attempting advanced phases without foundational work is the primary failure mode.

- First-party data becomes the most reliable foundation as third-party data degrades. First-party data strategy requires value exchange design, progressive profiling, zero-party data capture, and activation infrastructure.

- The window for building trust architecture is narrow. Early movers establish algorithmic authority that newcomers cannot quickly replicate. The competitive moat widens with every passing quarter.

Statistics and projections current as of December 2025. AI capabilities, regulatory requirements, and market adoption continue to evolve rapidly. Consult legal counsel for compliance guidance specific to your situation.