Lead quality means different things to different buyers. What one insurance carrier calls a “premium lead” another rejects as substandard. The mortgage lender celebrating 4% conversion rates operates in a different reality than the solar installer demanding 15% appointment-set rates. Understanding what buyers actually measure – and why those metrics matter – separates operators who command premium pricing from those trapped in commodity competition.

This disconnect between seller perception and buyer reality costs the lead generation industry billions annually. Generators assume validation equals quality. Buyers know validation is table stakes – the real question is whether leads convert to revenue. Bridging this gap requires understanding quality from the buyer’s perspective, then building systems that deliver what they actually need.

What Is Lead Quality Really?

Quality extends far beyond pass/fail validation. A lead can pass every technical check – valid phone number, deliverable email, real address, proper consent documentation – and still represent zero value to a buyer who cannot reach the consumer or close the sale.

Validation confirms the lead is real. Quality predicts whether it will convert.

This distinction matters because the economics are fundamentally different. Validation costs pennies per lead and happens in milliseconds. Quality emerges over days or weeks as buyers attempt contact, conduct conversations, and close sales. The feedback loop between generation and outcome creates the information asymmetry that defines buyer-seller relationships.

Consider what buyers actually experience. A mortgage lender purchases 100 leads at $65 each, investing $6,500 in acquisition. All 100 leads passed validation – phone numbers work, addresses verify, consent certificates exist. But when loan officers start dialing:

- 62 consumers answer or return calls (62% contact rate)

- 34 engage in meaningful conversation about their financing needs

- 8 submit applications

- 3 close loans

That 3% conversion rate is the quality metric that matters. The lender paid $6,500 to generate 3 loans – $2,167 per closed loan before operational costs. Whether that works depends on loan profitability, not whether phone numbers validated correctly.
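
The funnel arithmetic above can be reproduced in a few lines. This is a minimal sketch using the example's numbers; the `FunnelSnapshot` class and its field names are hypothetical, not a standard reporting schema.

```python
from dataclasses import dataclass


@dataclass
class FunnelSnapshot:
    """Outcome counts for one batch of purchased leads (hypothetical structure)."""
    leads_purchased: int
    price_per_lead: float
    contacted: int
    engaged: int
    applications: int
    closed: int

    def rate(self, count: int) -> float:
        """Share of purchased leads that reached a stage, as a percentage."""
        return 100.0 * count / self.leads_purchased

    @property
    def total_spend(self) -> float:
        return self.leads_purchased * self.price_per_lead

    @property
    def cost_per_close(self) -> float:
        return self.total_spend / self.closed


# Numbers from the mortgage example: 100 leads at $65 each.
batch = FunnelSnapshot(100, 65.0, contacted=62, engaged=34, applications=8, closed=3)
print(f"Contact rate:    {batch.rate(batch.contacted):.0f}%")
print(f"Conversion rate: {batch.rate(batch.closed):.0f}%")
print(f"Cost per close:  ${batch.cost_per_close:,.0f}")
```

Every rate here is computed against leads purchased, which is how the example reports them. A buyer who measures conversion against contacted leads instead will see a different number from the same batch.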

Quality exists on a spectrum. At the high end: consumers actively researching solutions, with genuine intent to purchase, who respond immediately to outreach and engage substantively. These leads convert at double or triple average rates. In the middle: consumers who expressed interest but require nurturing before purchasing – they represent the bulk of lead volume. At the low end: consumers who submitted information reluctantly, perhaps to access gated content, who ignore outreach and never intended to purchase.

The generator’s challenge is identifying where each lead falls on this spectrum before sale, using signals available at capture time. The buyer’s challenge is distinguishing quality from capability – a lead that didn’t convert might have been low quality, or the sales process might have failed a qualified prospect.

The Core Quality Metrics Buyers Track

Sophisticated buyers track quality through four primary metrics, each revealing different aspects of lead performance. Understanding what each metric measures enables generators to speak buyers’ language and build systems that deliver documented value.

Contact Rate

Contact rate measures the percentage of leads where buyers successfully reach the consumer: (Leads Contacted / Leads Attempted) × 100.

Contact rate is the first quality gate. If buyers cannot reach consumers, nothing else matters. A lead with perfect data that never answers the phone generates zero revenue.

What drives contact rate:

Phone number accuracy. Validated numbers that are disconnected, reassigned, or simply incorrect produce zero contact. Line type matters – mobile phones have higher contact rates than landlines because consumers carry them constantly. VoIP numbers may indicate tech-savvy consumers or someone creating disposable contact information.

Consumer intent timing. Leads captured during active shopping moments (the consumer just compared insurance quotes) have higher contact rates than leads captured during passive browsing (the consumer clicked an ad while scrolling social media). The difference can be 20-30 percentage points.

Speed to contact. Industry research consistently shows that leads contacted within one minute have 391% higher conversion rates than leads contacted later. By 30 minutes, the window largely closes. Fast contact improves contact rate because consumers are still engaged with their inquiry.

Multi-channel capture. Leads that provide both phone and email enable multiple contact attempts. SMS confirmation during the capture process validates the phone number works and the consumer is responsive.

Benchmark contact rates by vertical:

| Vertical | Average Contact Rate | Top Performer Rate |
|---|---|---|
| Insurance | 55-65% | 75-85% |
| Mortgage | 50-60% | 70-80% |
| Solar | 45-55% | 65-75% |
| Legal | 60-70% | 80-90% |
| Home Services | 65-75% | 85-95% |

Individual buyer contact rates depend heavily on operational factors: call center staffing, attempt frequency, calling hours, and caller ID presentation. Two buyers purchasing identical leads achieve different contact rates based on how effectively they work the leads.

Conversion Rate

Conversion rate measures the percentage of leads that ultimately purchase: (Customers Acquired / Leads Purchased) × 100.

Conversion rate is the ultimate quality metric because it directly connects lead cost to revenue. A $50 lead that converts at 5% costs $1,000 per customer. A $75 lead that converts at 8% costs $938 per customer. The higher-priced lead is actually cheaper on a per-acquisition basis.
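
The comparison reduces to one division: price per lead over conversion rate. The sketch below uses the two offers from the paragraph above; the function name `cost_per_customer` is illustrative.

```python
def cost_per_customer(price_per_lead: float, conversion_rate: float) -> float:
    """Lead spend needed to win one customer at a given conversion rate."""
    if conversion_rate <= 0:
        raise ValueError("conversion_rate must be positive")
    return price_per_lead / conversion_rate


# The two offers from the text: (price per lead, conversion rate as a fraction).
offers = {"$50 lead at 5%": (50.0, 0.05), "$75 lead at 8%": (75.0, 0.08)}
for label, (price, rate) in offers.items():
    print(f"{label}: ${cost_per_customer(price, rate):,.0f} per customer")
```

This counts lead spend only. Sales labor and other operational costs sit on top of it and can change which offer wins.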

What drives conversion rate:

Consumer qualification. Leads that match buyer criteria – credit score for mortgage, coverage needs for insurance, property characteristics for solar – convert at higher rates than leads that technically qualify but represent edge cases.

Intent strength. Consumers actively comparing options convert better than those passively browsing. Form fields that capture intent signals (timeline to purchase, current situation, specific needs) help identify high-intent prospects.

Competitive timing. First responders win. Research indicates 78% of customers purchase from the first company that responds to their inquiry. Building speed-to-lead workflows is essential for maximizing conversion rates. Leads shared across multiple buyers see lower conversion rates for all except the fastest responder.

Product fit. A consumer seeking minimum coverage at lowest price won’t convert with a premium insurance carrier regardless of lead quality. Qualification questions that reveal fit prevent mismatches.

| Vertical | Average Conversion | Top Performer Rate |
|---|---|---|
| Auto Insurance | 8-12% | 15-20% |
| Health Insurance | 5-8% | 10-15% |
| Mortgage (Purchase) | 2-4% | 5-7% |
| Mortgage (Refinance) | 3-5% | 6-10% |
| Solar | 6-10% | 12-18% |
| Personal Injury Legal | 15-25% | 30-40% |

Conversion rate varies more by buyer capability than any other quality metric. Strong sales processes, compelling offers, and persistent follow-up can double conversion rates on identical lead populations.

Return Rate

Return rate measures leads returned for refund or credit: (Leads Returned / Leads Purchased) × 100.

Return rate is the quality metric generators control most directly. Returns indicate leads that failed basic quality standards – bad contact information, duplicate submissions, consumers who claim they never requested contact, or leads that don’t meet stated buyer criteria.

What drives return rate:

Validation failures. Phone numbers that don’t connect, emails that bounce, addresses that don’t exist. Comprehensive real-time validation eliminates most of these before leads reach buyers.

Fraud and bot traffic. Approximately 30% of leads sold by third-party vendors are fraudulent, according to industry research. Bot-generated submissions, human fraud farms, and incentivized form completions create leads that pass validation but represent no genuine purchase intent.

Duplicate submissions. The same consumer appearing in multiple buyers’ queues generates returns when detected.

Specification mismatches. Leads that don’t meet buyer filters – wrong geography, insufficient credit score, disqualifying characteristics – but were routed anyway due to system errors.

Consumer disputes. “I never filled out this form” is the most concerning return reason because it suggests either fraud or consent problems.

Industry return rate benchmarks:

| Quality Tier | Return Rate | Buyer Relationship Status |
|---|---|---|
| Premium | Under 5% | Preferred partner |
| Standard | 5-10% | Acceptable vendor |
| Below Standard | 10-20% | Probationary status |
| Unacceptable | Over 20% | Relationship at risk |

Return rates above 15% typically trigger pricing renegotiation or volume reduction. Above 25%, relationships terminate. On 1,000 leads per month at $40 each, a 10-point increase in return rate costs $4,000 monthly in direct refunds (100 additional returned leads at $40) – plus the acquisition cost for those leads.

Time to Close

Time to close measures duration from lead delivery to customer conversion.

High-intent leads close faster. Consumers actively shopping (insurance renewal in 30 days, rate lock expiration, imminent move) close faster than those in early research phases. Single-decision-maker purchases close faster than committee decisions.

| Vertical | Average Time to Close | Fast Close (High Quality) |
|---|---|---|
| Auto Insurance | 7-14 days | 1-3 days |
| Mortgage | 30-45 days | 21-30 days |
| Solar | 45-90 days | 30-45 days |
| Legal (PI) | Same day to 7 days | Same day |
| Home Services | 1-7 days | Same day |

Buyers increasingly value speed alongside conversion rate when evaluating sources because faster closes return capital sooner.

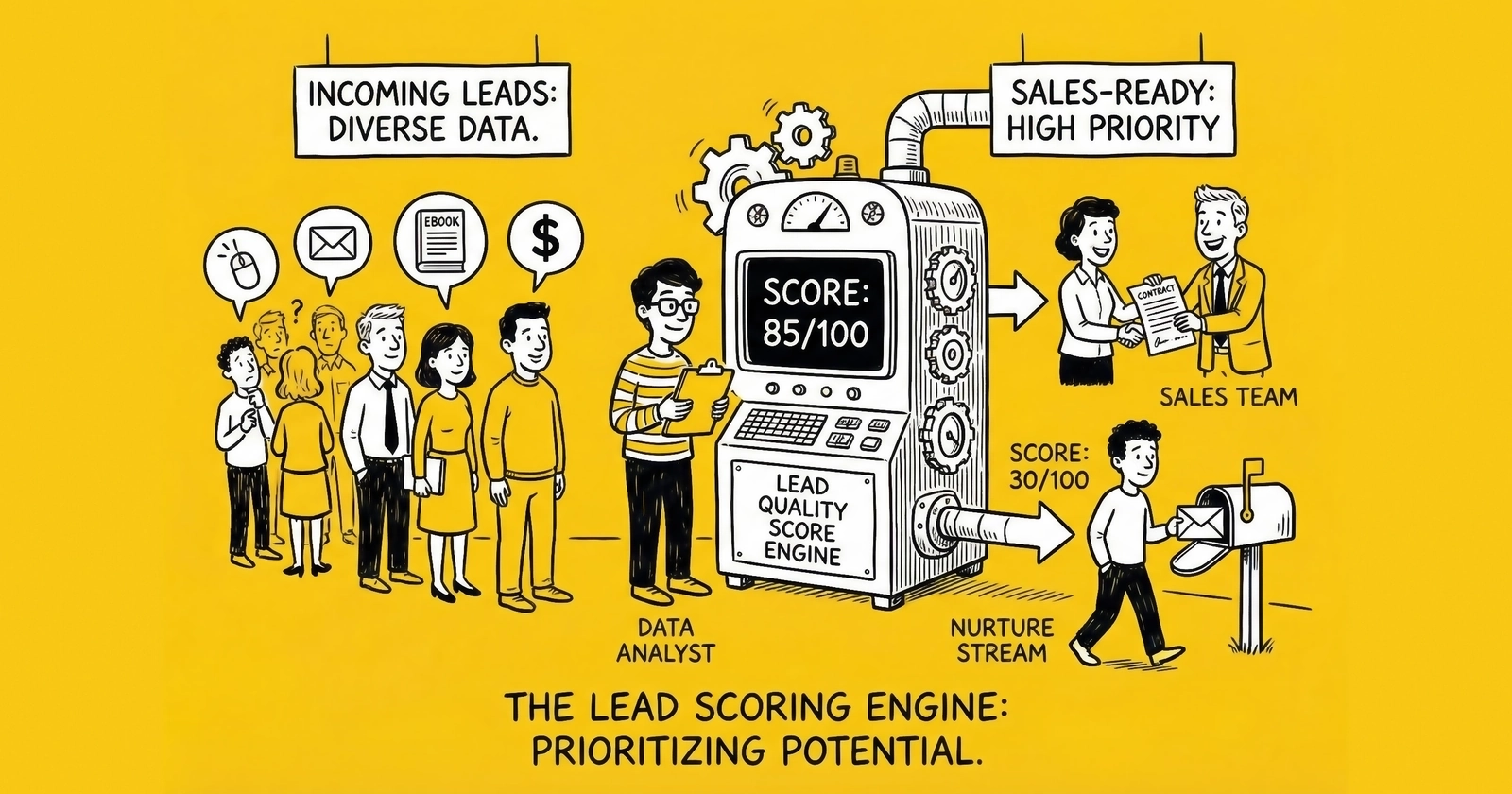

How Lead Scoring Systems Work

Lead scoring transforms raw lead data into conversion probability predictions. The shift from rules-based to predictive models represents one of the most significant capability improvements available.

Rules-Based Scoring

Traditional lead scoring assigns point values to characteristics based on assumed importance. A California lead gets +10 points. A mobile phone number adds +5 points. Prior insurance coverage adds +15 points. The total determines priority.

Rules-based systems work when you understand which characteristics predict conversion and those relationships remain stable. They fail when characteristics interact in non-obvious ways or market conditions change. Humans cannot optimize across hundreds of variables simultaneously.
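
A rules-based scorer is little more than a lookup and a sum. The sketch below uses the three point values from the example above; the lead field names (`state`, `line_type`, `currently_insured`) are assumptions for illustration, not a standard lead schema.

```python
# Point values taken from the example in the text; a real rule set would be
# tuned per buyer and vertical.
POINTS = {
    "california": 10,
    "mobile_phone": 5,
    "prior_coverage": 15,
}


def rules_based_score(lead: dict) -> int:
    """Sum fixed point values for each characteristic the lead exhibits."""
    score = 0
    if lead.get("state") == "CA":
        score += POINTS["california"]
    if lead.get("line_type") == "mobile":
        score += POINTS["mobile_phone"]
    if lead.get("currently_insured"):
        score += POINTS["prior_coverage"]
    return score


lead = {"state": "CA", "line_type": "mobile", "currently_insured": True}
print(rules_based_score(lead))  # 30
```

Each rule contributes independently of the others. That is the limitation described above: the scorer cannot express that a mobile number matters more in one state than in another without someone writing that rule by hand.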

Predictive Lead Scoring

Machine learning models analyze thousands of historical leads with known outcomes to identify patterns that predict conversion. The model considers every available attribute – submitted data, validation results, behavioral signals, source characteristics, timing factors – and learns which combinations correlate with success.

Training data assembly. Collect historical leads with disposition information: contacted/not contacted, converted/didn’t convert, sale value, time to close.

Feature engineering. Transform raw data into model-ready inputs: lead source, geography, stated intent, time of day submitted, form completion speed.

Model training. Algorithms identify patterns across thousands of leads. The model might discover that leads submitted between 10 AM and 2 PM from mobile devices convert at 2x average rate – a pattern no human would identify.

Scoring deployment. The trained model scores new leads in real-time before routing or pricing decisions occur.
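
The four steps can be sketched end to end with a tiny logistic regression written in plain Python. This illustrates the workflow and is not a production model: the training history is invented toy data, the two features (`is_mobile`, `submitted_midday`) are assumptions, and a real system would use far more leads, more features, and an established ML library.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(features, weights, bias):
    """Estimated conversion probability for one lead."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


def train(rows, labels, epochs=2000, learning_rate=0.1):
    """Fit logistic regression weights with batch gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        # Prediction errors for the whole batch, computed before any update.
        errors = [predict(x, weights, bias) - y for x, y in zip(rows, labels)]
        for j in range(len(weights)):
            gradient = sum(e * x[j] for e, x in zip(errors, rows)) / n
            weights[j] -= learning_rate * gradient
        bias -= learning_rate * sum(errors) / n
    return weights, bias


# Steps 1-2: invented toy history. Features are [is_mobile, submitted_midday];
# label 1 means the lead converted.
history = (
    [([1, 1], 1)] * 3 + [([1, 1], 0)]
    + [([1, 0], 1)] + [([1, 0], 0)] * 3
    + [([0, 1], 1)] + [([0, 1], 0)] * 3
    + [([0, 0], 0)] * 4
)
rows = [features for features, _ in history]
labels = [label for _, label in history]

# Step 3: train. Step 4: score new leads before routing or pricing.
weights, bias = train(rows, labels)
hot = predict([1, 1], weights, bias)
cold = predict([0, 0], weights, bias)
print(f"mobile + midday: {hot:.2f}, neither: {cold:.2f}")
```

The scores are probabilities between 0 and 1, so they can feed routing tiers or price adjustments directly. As the text notes, the model needs retraining as outcome data accumulates.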

The impact of predictive scoring:

- Companies using AI-powered lead scoring see 25% average conversion increases

- Businesses report up to 45% conversion rate improvements

- Sales cycles shorten by 28% when teams focus on high-quality leads

- 75% of businesses using AI qualification report significant improvement

Yet only 44% of companies use lead scoring systems. The majority treat all leads the same – a structural inefficiency creating opportunity.

Intent Data Enhancement

Intent data platforms track buying signals: content consumption, product research, competitor visits, technology installations. For B2B operations, this identifies accounts showing purchase intent before form submission.

- 93% of B2B marketers using intent data report increased conversion rates

- 65% say intent signals improve pipeline forecasting accuracy

- 95% link intent data to positive sales outcomes

Intent-enriched leads command premiums because they demonstrably perform better.

Vertical-Specific Quality Indicators

What constitutes “quality” varies dramatically across verticals.

Insurance Lead Quality

Insurance quality centers on coverage need, competitive timing, and qualification factors.

Critical indicators:

Current coverage status. Consumers currently insured but seeking better rates represent higher-quality leads than uninsured consumers who may face underwriting challenges.

Policy renewal timing. Leads captured within 30-60 days of renewal convert at higher rates. Renewal dates create natural purchase windows.

Multi-line opportunity. Consumers interested in bundling auto, home, and other policies represent higher lifetime value.

| Indicator | Premium Quality | Standard Quality |
|---|---|---|
| Currently Insured | Yes | Unknown |
| Renewal Window | Within 45 days | Over 90 days |
| Bundle Interest | Multiple products | Single product |
| Contact Preference | Phone preferred | Email only |

Mortgage Lead Quality

Mortgage quality depends on rate sensitivity, qualification factors, and transaction type.

Critical indicators:

Loan-to-value ratio. Borrowers with significant equity (low LTV) present less risk. LTV above 80% often requires mortgage insurance.

Credit score bands. Prime borrowers (740+) convert at higher rates with premium pricing. Subprime borrowers (below 620) have limited options.

Debt-to-income ratio. Monthly obligations relative to income predict approval probability. High DTI leads face qualification challenges.

| Indicator | Premium Quality | Standard Quality |
|---|---|---|
| Credit Score | 740+ stated | 680-739 stated |
| LTV | Under 70% | 70-80% |
| DTI | Under 35% | 35-43% |
| Timeline | Under 30 days | 60+ days |

Solar Lead Quality

Solar quality requires geographic precision and property-specific qualification.

Critical indicators:

Homeownership verification. Renters cannot install solar. Property ownership must be confirmed, not just claimed.

Utility bill range. Bills under $100/month often indicate insufficient usage to justify solar economics. Premium leads show $200+ bills.

Roof characteristics. Age, material, orientation, and shading affect installation feasibility.

| Indicator | Premium Quality | Standard Quality |
|---|---|---|
| Homeownership | Verified owner | Self-reported |
| Electric Bill | Over $200/month | $100-150/month |
| Roof Age | Under 10 years | 10-20 years |
| Shading | Minimal | Moderate |

Legal Lead Quality

Legal lead quality focuses on case value potential and jurisdiction match.

Critical indicators:

Incident recency. Recent incidents (within statute of limitations, preferably within 6 months) represent actionable cases.

Injury severity. Significant injuries requiring medical treatment have higher settlement value.

Liability clarity. Clear liability enables faster resolution than disputed situations.

The Quality-Volume Tradeoff

Scaling lead volume almost inevitably degrades quality. Understanding why this happens – and implementing countermeasures – determines whether growth becomes sustainable.

Why quality degrades at scale:

Traffic source exhaustion. High-quality sources have finite capacity. The first 100 leads from a new campaign come from high-intent consumers. The next 1,000 require broader, lower-intent audiences. Understanding CPL benchmarks by industry helps set expectations for this quality-volume tradeoff.

Validation shortcuts. Under pressure to meet volume targets, operators loosen requirements. Leads that would have been rejected get accepted.

Source diversification risks. Scaling requires adding new sources. Each introduces unknown quality. A single bad source can contaminate overall metrics.

Buyer capacity limits. Flooding buyer queues past operational capacity means leads age before contact, reducing conversion across all sources.

Managing the tradeoff:

Set quality floors that cannot be compromised for volume. Define minimum metrics (contact rate above 65%, return rate below 10%) that stop scaling when breached.

Track metrics by source, not just aggregate. A source delivering 500 leads with 25% returns harms you more than one delivering 200 leads with 5% returns.

Scale incrementally (20-30% increases) with quality validation between increments. This prevents collapses that damage relationships.
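
One way to make the floors enforceable is to put them in the code that sets next period's volume target. The sketch below uses the example thresholds from this section (contact rate at or above 65%, return rate at or below 10%, steps of 20-30%); the function name and the hold-at-current-volume behavior are assumptions.

```python
CONTACT_RATE_FLOOR = 0.65   # example floor from the text
RETURN_RATE_CEILING = 0.10  # example ceiling from the text


def next_volume_target(current_volume: int, contact_rate: float,
                       return_rate: float, step: float = 0.20) -> int:
    """Raise volume by one increment only while both quality floors hold."""
    if not 0.20 <= step <= 0.30:
        raise ValueError("step should stay within the 20-30% increment range")
    if contact_rate < CONTACT_RATE_FLOOR or return_rate > RETURN_RATE_CEILING:
        return current_volume  # floor breached: hold volume and investigate
    return round(current_volume * (1 + step))


print(next_volume_target(1000, contact_rate=0.70, return_rate=0.06))  # 1200
print(next_volume_target(1000, contact_rate=0.60, return_rate=0.06))  # 1000
```

Holding volume is the mildest response to a breach. An operator could just as reasonably roll back to the previous increment.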

The economics:

| Approach | Volume | Price | Return Rate | Net Revenue |
|---|---|---|---|---|
| High-volume, lower-quality | 10,000 | $35 | 18% | $287,000 |
| Moderate-volume, higher-quality | 7,000 | $48 | 6% | $315,840 |

Higher quality generates 10% more revenue with 30% less volume – and builds buyer preference that enables pricing power.
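
The net revenue figures follow from volume × price × (1 − return rate). A short sketch that reproduces both rows, assuming every returned lead is refunded in full:

```python
def net_revenue(volume: int, price: float, return_rate: float) -> float:
    """Revenue kept after refunding returned leads at full price."""
    return volume * price * (1.0 - return_rate)


high_volume = net_revenue(10_000, 35.0, 0.18)
high_quality = net_revenue(7_000, 48.0, 0.06)
lift = high_quality / high_volume - 1.0

print(f"High-volume:  ${high_volume:,.0f}")
print(f"High-quality: ${high_quality:,.0f}")
print(f"Revenue lift: {lift:.1%}")
```

The calculation leaves out acquisition cost. Generating 3,000 fewer leads also lowers media spend, so the margin gap is likely wider than the revenue gap shown here.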

Building Quality Feedback Loops

Quality systems without feedback are flying blind. True quality management requires continuous learning from outcomes.

Collecting Buyer Feedback

Return reason codes. Standardize why leads get returned:

- Contact failures (bad phone, disconnected, wrong number)

- Consumer disputes (never requested contact, doesn’t remember form)

- Qualification mismatches (wrong geography, doesn’t meet criteria)

- Already purchased (bought elsewhere)

- Not interested (answered but declined engagement)

Each reason implies different root causes. “Bad phone number” indicates validation failures. “Never requested contact” suggests fraud or consent problems.
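
Standardized codes are easiest to enforce as an enumeration, so a buyer's free-text reason cannot slip into the data. In the sketch below the enum names and string values are hypothetical. Only the two root causes stated above are mapped, and everything else is flagged for manual review instead of guessed.

```python
from collections import Counter
from enum import Enum


class ReturnReason(Enum):
    CONTACT_FAILURE = "contact_failure"
    CONSUMER_DISPUTE = "consumer_dispute"
    QUALIFICATION_MISMATCH = "qualification_mismatch"
    ALREADY_PURCHASED = "already_purchased"
    NOT_INTERESTED = "not_interested"


# Root causes named in the text; other reasons are left for manual review.
LIKELY_ROOT_CAUSE = {
    ReturnReason.CONTACT_FAILURE: "validation failure",
    ReturnReason.CONSUMER_DISPUTE: "fraud or consent problem",
}


def summarize_returns(codes):
    """Count returns per reason and attach a likely root cause where known."""
    counts = Counter(ReturnReason(code) for code in codes)
    return [
        (reason.value, count, LIKELY_ROOT_CAUSE.get(reason, "needs review"))
        for reason, count in counts.most_common()
    ]


returns = ["contact_failure", "contact_failure", "consumer_dispute", "not_interested"]
for reason, count, cause in summarize_returns(returns):
    print(f"{reason}: {count} ({cause})")
```

`ReturnReason(code)` raises `ValueError` for any code outside the agreed list. That is deliberate: an unrecognized reason should surface as a data problem and not be counted silently.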

Disposition reporting. Establish data feeds from buyer CRMs showing contact outcomes and conversion status. This data trains scoring models and validates source performance.

Quality scorecards. Request periodic buyer assessments comparing your performance to other sources. Qualitative feedback reveals issues raw numbers miss.

Closing the Loop

Quality data without action is worthless. Build processes that translate feedback into optimization:

Source management. Sources consistently delivering quality earn increased allocation and premium payouts. Persistent problems trigger termination.

Validation refinement. Return patterns reveal gaps. If specific patterns emerge (disconnected numbers from carrier X), add targeted checks.

Model updates. Outcome data feeds scoring models. As market conditions change, models retrain to reflect current patterns.

Negotiating Quality Thresholds with Buyers

Vague agreements like “reasonable quality” invite disputes. Specific thresholds create accountability.

SLA Structures

Return rate caps:

- 0-8%: No action required

- 8-12%: Warning issued, improvement plan required

- 12-18%: Pricing reduction of 10-15%

- Over 18%: Contract pause pending resolution

Contact rate minimums:

- Above 70%: Premium pricing

- 60-70%: Standard pricing

- Below 60%: Reduced pricing or return credits

Dispute resolution mechanisms:

- Return documentation requirements (what evidence buyers must provide)

- Return window limits (3-7 days for contact issues, 30 days for conversion disputes)

- Audit rights (verify return claims if rates seem inflated)

- Escalation procedures (operational contacts to management to mediation)

Pricing for Quality Tiers

| Quality Tier | Metrics | Pricing |
|---|---|---|
| Premium | Return under 5%, contact over 75% | +15-20% |
| Standard | Return 5-10%, contact 65-75% | Base rate |
| Below Standard | Return 10-15%, contact 55-65% | -10-15% |
| Probation | Return over 15%, contact under 55% | -25-30% or pause |

This structure aligns incentives: generators earn more by delivering quality, buyers pay less for substandard leads.
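
The tier table translates into a small classifier. The table does not say what happens when the two metrics point to different tiers. This sketch assumes a source lands in the best tier for which both metrics qualify, so the weaker metric decides. That rule and the handling of exact boundary values are assumptions to settle in the contract.

```python
def quality_tier(return_pct: float, contact_pct: float):
    """Return (tier name, (low, high) price adjustment as fractions of base)."""
    if return_pct < 5 and contact_pct > 75:
        return "Premium", (0.15, 0.20)
    if return_pct <= 10 and contact_pct >= 65:
        return "Standard", (0.0, 0.0)
    if return_pct <= 15 and contact_pct >= 55:
        return "Below Standard", (-0.15, -0.10)
    return "Probation", (-0.30, -0.25)  # the table also allows a pause here


def price_range(base_price: float, adjustment):
    """Apply an adjustment range to the base price per lead."""
    low, high = adjustment
    return base_price * (1 + low), base_price * (1 + high)


tier, adjustment = quality_tier(return_pct=3.0, contact_pct=60.0)
low, high = price_range(40.0, adjustment)
print(f"{tier}: ${low:.2f} to ${high:.2f} per lead")
```

In the usage example the return rate alone would qualify as Premium, but the 60% contact rate places the source in Below Standard.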

Quality Improvement Strategies

Enhanced Validation Protocols

Multi-layer phone validation: Line type, carrier identification, disconnected detection, DNC registry, reassigned number database. Cost: $0.02-0.10 per lead – trivial compared to return costs. See our guide on real-time lead validation for implementation details.

Email deliverability testing: SMTP verification catches typos, deactivated accounts, and nonexistent domains that passed format validation.

Address standardization: CASS-certified validation plus property appends (ownership status, year built, estimated value) for property-related verticals.

Source Quality Management

Monitor every quality metric by source. Aggregate metrics mask variation – a blended 10% return rate might include one source at 3% and another at 25%.
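
The masking effect is easy to demonstrate. The lead and return counts below are invented so that the two sources blend to exactly the 10% described above.

```python
# Hypothetical monthly counts per source: (leads purchased, leads returned).
sources = {
    "source_a": (1500, 45),
    "source_b": (700, 175),
}

total_purchased = sum(purchased for purchased, _ in sources.values())
total_returned = sum(returned for _, returned in sources.values())
blended_rate = total_returned / total_purchased

per_source_rate = {
    name: returned / purchased for name, (purchased, returned) in sources.items()
}

print(f"Blended return rate: {blended_rate:.0%}")
for name, rate in per_source_rate.items():
    print(f"  {name}: {rate:.0%}")
```

The blended figure sits inside the Standard tier while `source_b` on its own breaches the termination threshold. Only the per-source view shows which source is responsible.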

Termination criteria:

- Return rates over 20% for two consecutive weeks

- Fraud detection over 15%

- Repeated consent documentation failures

- Pattern of invalid contact information

Enforce criteria consistently. Short-term revenue loss from terminating a bad source is always less than relationship damage from continued poor quality.
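
Consistent enforcement is easier when the numeric criteria live in code and not in someone's judgment. The sketch below covers only the two measurable thresholds from the list (return rate over 20% for two consecutive weeks, fraud detection over 15%). It reads "two consecutive weeks" as the two most recent weeks, which is an assumption. The consent and contact-information criteria need human review and are left out.

```python
def should_terminate(weekly_return_rates, fraud_rate,
                     return_limit=0.20, fraud_limit=0.15, weeks_required=2):
    """Apply the two numeric termination criteria to one source.

    weekly_return_rates is ordered oldest to newest, as fractions (0.22 = 22%).
    """
    recent = weekly_return_rates[-weeks_required:]
    sustained_returns = (
        len(recent) == weeks_required
        and all(rate > return_limit for rate in recent)
    )
    return sustained_returns or fraud_rate > fraud_limit


print(should_terminate([0.12, 0.22, 0.24], fraud_rate=0.05))  # True
print(should_terminate([0.22, 0.12, 0.24], fraud_rate=0.05))  # False
print(should_terminate([0.05], fraud_rate=0.18))              # True
```

A single bad week does not trigger termination on return rate alone, which matches the two-week wording. A fraud rate over the limit triggers it immediately.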

Pre-Sale Lead Enhancement

SMS verification. After capture but before sale, send SMS asking consumers to confirm. Those who respond demonstrate engagement and verify phone numbers work.

Email engagement tracking. A brief email sequence maintains engagement and filters intent. Opens and clicks indicate higher quality.

AI pre-qualification calls. Voice AI conducts brief qualification conversations. Adds $1-3 per lead but significantly improves quality for premium positioning.

Industry data shows “sales-ready” leads command 2-3x pricing versus raw leads. The premium exists because these leads demonstrably perform better.

Frequently Asked Questions

What is a lead quality score?

A numerical rating predicting likelihood of conversion, generated by analyzing lead characteristics against historical conversion data. Higher scores indicate leads more likely to convert, enabling prioritization and premium pricing.

What contact rate should I expect from quality leads?

Quality leads achieve 65-85% contact rates depending on vertical. Insurance and home services run higher (70-85%); mortgage and solar run lower (60-75%). Below 55% indicates validation problems or low-intent sources.

How do I calculate true cost per converted customer?

Divide total lead cost (acquisition, validation, delivery) by customers converted. If you spend $10,000 on 200 leads and convert 8 customers, cost per conversion is $1,250. Account for returns as well: if 30 of those leads are returned, you paid for 200 but had only 170 sellable, which raises the effective cost per sellable lead from $50 to about $59.

What return rate is acceptable to buyers?

Under 8% is acceptable for quality partnerships. 8-15% triggers concern and may prompt pricing renegotiation. Over 15% risks reduced volume or termination. Premium tier requires under 5%.

How does lead scoring differ from lead validation?

Validation confirms data is accurate – the phone works, email delivers, address exists. Scoring predicts whether validated leads will convert, considering intent signals, timing, source quality, and demographic fit.

How quickly should I contact leads for best conversion?

Contact within one minute is associated with 391% higher conversion. By 30 minutes, the window largely closes. 78% of customers purchase from the first company that responds.

What vertical-specific metrics matter most?

- Insurance: Renewal timing, coverage status, bundling interest

- Mortgage: Credit score, LTV, property type, rate sensitivity

- Solar: Ownership verification, utility bill range, roof characteristics

- Legal: Incident recency, injury severity, jurisdiction match

How do I improve quality without reducing lead volume?

Source-level optimization. Identify highest-quality sources and allocate more budget. Add validation layers that catch problems without rejecting good leads. Scale incrementally with quality validation between increases.

Should I pay more for exclusive leads?

Exclusive leads typically cost 50-100% more but convert at significantly higher rates. Calculate cost per conversion for each type. If exclusives at $80 convert at 10% ($800/customer) while shared at $40 convert at 3% ($1,333/customer), exclusives provide better economics. Our guide on exclusive vs shared leads covers this decision in depth.

Why does my conversion rate differ from what generators claim?

Generators report averages across all buyers. Your rate depends on your sales process, product fit, follow-up speed, and competitive positioning. Two buyers purchasing identical leads achieve different conversion rates.

Key Takeaways

Quality is outcome-dependent, not validation-dependent. A lead can pass every technical check and still represent zero value. Validation is table stakes. Conversion prediction separates quality operations from commodity generators.

The four core metrics tell different stories. Contact rate measures reachability. Conversion rate measures sales effectiveness. Return rate measures data quality. Time to close measures urgency and fit. Track all four.

Predictive scoring outperforms rules-based approaches. Machine learning models identify patterns humans cannot detect. Companies using AI-powered lead scoring see 25% average conversion increases.

Vertical-specific quality indicators matter. What constitutes premium quality in insurance differs from mortgage, solar, and legal. Build qualification around the indicators that predict conversion in your vertical.

Scaling degrades quality unless you manage it. Traffic source exhaustion, validation shortcuts, and buyer capacity limits cause decline. Set quality floors, track at the source level, scale incrementally.

Feedback loops enable continuous improvement. Quality systems without outcome data cannot improve. Establish buyer feedback mechanisms, analyze return patterns, translate insights into action.

SLA structures create accountability. Vague quality agreements invite disputes. Specific thresholds for return rates, contact rates, and dispute resolution protect both parties.

Pre-sale nurturing enables premium positioning. Leads verified through SMS, email engagement, or AI pre-qualification command 2-3x pricing versus raw leads.

Lead quality measurement represents one of the highest-leverage opportunities in lead generation operations. Generators who understand buyer quality metrics and build systems delivering documented performance differentiate themselves from commodity competitors. The information asymmetry that plagues the industry dissolves when both sides commit to quality measurement and continuous improvement.