By 2030, AI agents will generate $3-5 trillion in commerce – shopping, negotiating, and transacting without humans. The lead forms you’ve spent years perfecting may become irrelevant. Here’s what’s actually happening, what it means for your business, and how to prepare while there’s still time.

The most significant transformation since the invention of the web is unfolding. Not in research papers or five-year projections. Now.

In September 2025, OpenAI launched instant checkout capabilities in ChatGPT, starting with Etsy integrations for U.S. users. Shopify support followed quickly. By December, Instacart added full end-to-end shopping – meal planning, cart building, and purchase – without ever leaving the chat interface. More than one million merchants are expected to follow.

This is not a feature update. This is the opening move in a restructuring of how commerce operates at its foundation.

McKinsey projects agentic commerce could generate up to $1 trillion in U.S. retail revenue by 2030, with global projections reaching $3-5 trillion. For context, total U.S. e-commerce sales in 2024 were approximately $1.1 trillion. Agentic commerce is not adding to existing e-commerce – it’s positioned to equal or exceed it within five years.

For lead generation specifically, the implications are existential. The mechanisms you’ve built – forms, landing pages, qualification flows, call centers – were designed for humans navigating browsers. What happens when the “customer” is an AI agent acting on that human’s behalf? What happens when the lead form gets bypassed entirely by an API query? Understanding what lead generation is today provides essential context for seeing where it’s headed.

This guide maps the transformation underway and provides the strategic framework for preparation. Those who understand what’s coming will build the architecture to capture the agentic future. Those who don’t will wonder where their leads went.

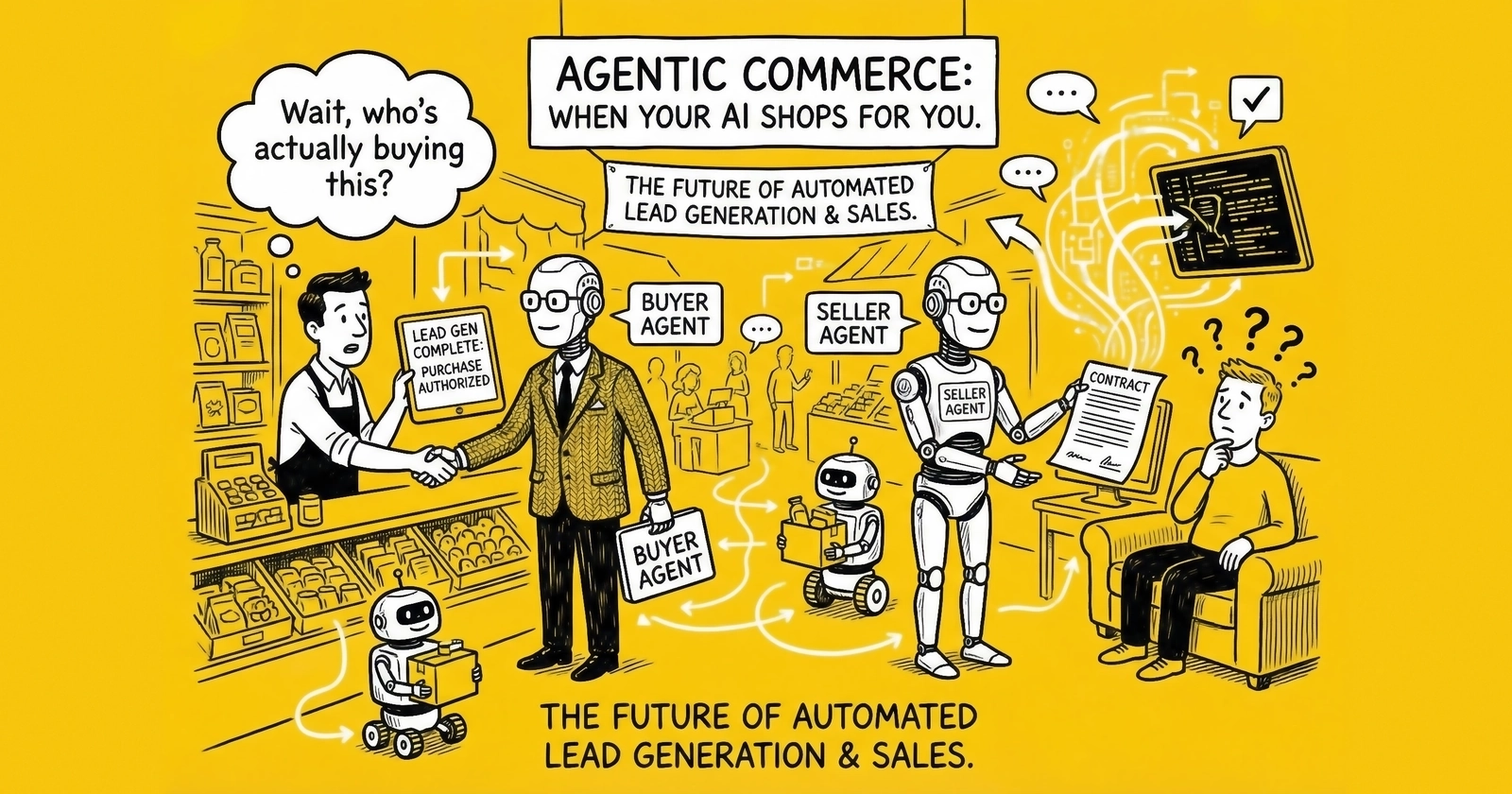

What Is Agentic Commerce?

Agentic commerce is a model in which artificial intelligence agents shop, negotiate, and transact on behalf of humans – autonomously. These are not chatbots answering questions. These are systems that receive a directive (“Find me the best auto insurance for my situation”), execute multi-step tasks across multiple platforms, evaluate options, negotiate terms, and complete purchases, all without human intervention at each step.

The technology matured faster than anyone predicted. ChatGPT now has more than 800 million weekly users. Half of all consumers use AI when searching the internet. Adobe data shows AI-driven traffic to U.S. retail sites surged 4,700% year-over-year in July 2025. The infrastructure for AI agents to act commercially – protocols for payment, authentication, and merchant integration – shipped in late 2025.

The Scale of What’s Coming

McKinsey’s research frames the magnitude:

The U.S. B2C retail market alone could see up to $1 trillion in orchestrated revenue from agentic commerce by 2030. Global projections reach $3-5 trillion. By comparison, total U.S. e-commerce sales in 2024 were approximately $1.1 trillion.

Equally significant: 60% of companies are expected to integrate AI agents into their operations by 2025. This is not experimental adoption by early movers – it’s mainstream deployment across industries. The infrastructure to support agentic commerce is not being built for a hypothetical future; it’s being built to meet current enterprise demand.

The agentic AI market itself is projected at $10 billion by 2026, reflecting 35% compound annual growth. Companies integrating AI agents into operations are expected to see 40% improvement in lead-to-purchase conversion by 2028 – not from better marketing, but from AI handling more of the conversion process itself.

Why Now?

Three forces converged to make agentic commerce viable in 2025:

Model Capability. Large language models crossed the threshold from answering questions to executing tasks. They can now browse websites, fill forms, compare options, and complete transactions. OpenAI’s Operator, launched in January 2025, demonstrated practical autonomous task completion.

Protocol Infrastructure. The major technology companies rolled out competing standards for agent commerce. OpenAI’s Agentic Commerce Protocol (ACP), developed with Stripe, enables secure transactions within chat interfaces. Google’s Agent2Agent (A2A) Protocol enables autonomous agents to communicate and negotiate. Anthropic’s Model Context Protocol (MCP) standardizes how AI systems access external resources.

Payment Integration. Visa, Mastercard, PayPal, and Stripe built the rails for agent-initiated transactions. The technical problem of how an AI agent pays for something – with appropriate authorization, fraud protection, and compliance – has been solved at the infrastructure level.

How the Customer Journey Transforms

McKinsey describes agentic commerce as transforming shopping “from a series of discrete steps – searching, browsing, comparing and buying – into a continuous, intent-driven flow powered by autonomous AI systems.”

Traditional e-commerce assumes humans at every step: humans searching, humans clicking, humans reading, humans deciding, humans purchasing. Each step represents an optimization opportunity – and each step is where you’ve been competing.

Agentic commerce collapses those steps. The human expresses intent: “I need auto insurance that covers my teenager’s new car.” The agent handles everything else: gathering quotes, comparing coverage, negotiating rates, completing applications. The human approves a recommendation. The agent executes.

For lead generators, this is transformation – not evolution. The forms you’ve optimized, the landing pages you’ve tested, the qualification questions you’ve refined – these were designed for human interaction. An AI agent does not read your persuasive copy. It queries your API.

Three Interaction Models for AI Agents

Agentic commerce is manifesting through three distinct interaction models, each with different implications for lead generation:

Model 1: Agent-to-Site

In this model, AI agents browse human-readable websites on behalf of users. The agent navigates your site, processes your content, completes your forms, and executes transactions – but the interface remains designed for humans.

This model is operational today. OpenAI’s Operator browses websites autonomously. Users delegate tasks; the agent completes them. For lead generators, this means your existing infrastructure still functions, but the user experience shifts. The agent fills your form faster than any human. It ignores your persuasive imagery. It processes your disclosures at machine speed.

Optimization in this model means ensuring your site is agent-navigable: clear form structures, logical page flows, minimal friction that would frustrate an autonomous system.

Model 2: Agent-to-Agent

In this model, buyer agents negotiate directly with seller agents. No human interface required. Parameters are exchanged; negotiations occur; transactions complete – machine to machine.

This model is emerging. Google’s A2A Protocol enables agents to communicate as autonomous peers across different platforms. When a consumer’s shopping agent queries a merchant’s sales agent, the interaction happens in structured data exchanges, not webpage visits.

For lead generation, this model is more disruptive. The “conversion moment” migrates upstream – from your landing page to the algorithm’s decision logic. You are no longer optimizing for human psychology. You are optimizing for machine selection criteria.

The lead form potentially disappears entirely. Instead, you expose an API. The buyer’s agent queries your API with parameters: coverage requirements, budget constraints, location data. Your system responds with offers. The negotiation happens in milliseconds.
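As a rough illustration of that exchange, the sketch below models a buyer agent's query and a seller-side handler. Every name in it (`QuoteRequest`, `Offer`, `handle_quote_request`, the catalog, the prices, the ZIP prefixes) is hypothetical and is not part of ACP, A2A, or any other real protocol.

```python
from dataclasses import dataclass, asdict

@dataclass
class QuoteRequest:
    coverage: str              # e.g. "liability" or "full"
    max_monthly_budget: float  # budget constraint set by the consumer
    zip_code: str              # location data

@dataclass
class Offer:
    plan: str
    coverage: str
    monthly_price: float

# Hypothetical catalog; a real system would price per applicant.
CATALOG = [
    Offer("Basic", "liability", 89.0),
    Offer("Standard", "full", 142.0),
    Offer("Premium", "full", 198.0),
]
SERVICE_ZIP_PREFIXES = ("75", "76")  # assumed service area

def handle_quote_request(req: QuoteRequest) -> dict:
    """Return offers matching the agent's parameters as plain data."""
    if not req.zip_code.startswith(SERVICE_ZIP_PREFIXES):
        return {"status": "out_of_area", "offers": []}
    matches = [
        asdict(o) for o in CATALOG
        if o.coverage == req.coverage and o.monthly_price <= req.max_monthly_budget
    ]
    matches.sort(key=lambda o: o["monthly_price"])
    return {"status": "ok" if matches else "no_match", "offers": matches}

response = handle_quote_request(QuoteRequest("full", 150.0, "75201"))
```

The response is structured data throughout, so the buyer's agent can compare it against other providers without parsing any page copy.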

Model 3: Brokered Interactions

In this model, platform intermediaries connect consumer agents with merchant agents, providing trust verification, transaction facilitation, and dispute resolution.

This model mirrors how lead aggregation already works – but with agents replacing humans at both ends. A consumer deploys their insurance-shopping agent. The agent connects to a marketplace (like current lead exchanges) where merchant agents compete for the business. The marketplace verifies credentials, enforces terms, and facilitates transactions.

For practitioners already in the aggregation and distribution business, this model represents potential continuity – if they build the infrastructure to intermediate agent interactions rather than human ones. The evolution of ping-post systems toward agent-to-agent transactions is already visible.

The Agentic Commerce Timeline

The transformation is accelerating faster than initial projections suggested:

2024-2025: Foundation Phase

Protocols emerged. OpenAI launched Operator in January 2025 and the Agentic Commerce Protocol in September 2025. Google released the A2A protocol and the Agent Payments Protocol (AP2). Payment processors enabled agent transactions. ChatGPT shopping integrations went live with Shopify and Etsy merchants.

This phase established the technical infrastructure. The building blocks now exist for AI agents to discover products, negotiate terms, authenticate users, process payments, and complete transactions.

2026-2027: Early Adoption

Expect widespread merchant integration with agentic protocols. The million-plus Shopify merchants with ChatGPT checkout access will be joined by major retailers building direct agent integrations. Consumer adoption will accelerate as AI assistants become default shopping companions.

The 60% of companies integrating AI agents by 2025 will have operational experience by this phase – experience that shapes how B2B agentic commerce develops. Early adopters will establish patterns that latecomers must follow.

By the end of 2027, industry analysts project LLM traffic will overtake traditional Google search. The shift from browser-based to agent-mediated commerce will be visible in traffic patterns and conversion data.

2027-2028: Mainstream B2B Integration

B2B commerce follows consumer patterns with a lag. By 2028, expect AI agents to handle routine procurement, vendor evaluation, and service selection. The “leads” your business generates may increasingly come from AI systems querying your infrastructure rather than humans filling forms.

2029-2030: Market Penetration

McKinsey’s projections mature: $1 trillion U.S. B2C, $3-5 trillion global. By this point, not having agentic commerce capability is not a competitive disadvantage – it’s operational obsolescence.

How AI Agents as Buyers Change Everything

The transformation creates both existential threats and unprecedented opportunities. Understanding the specific impacts clarifies the strategic response.

Current State vs. Agentic Future

| Current State | Agentic Future |
|---|---|
| Human fills form | Agent queries API |
| Marketing to humans | Marketing to algorithms |
| Brand awareness campaigns | Algorithmic trust signals |
| SEO for Google | GEO for LLMs |
| Website conversion optimization | API response optimization |
| Psychology-based persuasion | Parameter-based matching |
| Lead scoring on behavior | Agent intent parsing |
| Speed-to-contact competition | Instant API response |

The Form May Become Obsolete

The lead capture form – the fundamental mechanism of digital lead generation – was designed for humans who need visual prompts, clear instructions, and step-by-step guidance. An AI agent does not need any of that.

When agents query businesses directly through structured APIs, the form-based capture model transforms into something unrecognizable. The “lead” is not a form submission – it’s an API request containing structured parameters that an agent compiled from conversation with a human.

This does not mean lead generation disappears. It means the mechanism shifts. You are still connecting buyers with sellers. You are still qualifying intent and routing opportunities. But the interface changes fundamentally.

Consider the operational implications:

Lead capture becomes API design. Instead of optimizing form fields and copy, you are optimizing API endpoints and response structures. Understanding API lead posting provides the technical foundation for this transition.

Conversion rate becomes inclusion rate. The metric that matters is not “what percentage of visitors submit forms” but “what percentage of agent queries include you in the consideration set.”

Landing page testing becomes response testing. A/B testing shifts from visual elements to data structure, response time, and parameter completeness.

Human psychology becomes machine criteria. The emotional appeals that persuade humans – urgency, social proof, fear of missing out – do not affect algorithms. Selection criteria become explicit and logical.

Attribution Complexity Multiplies

If a consumer asks ChatGPT for insurance recommendations and ChatGPT surfaces your brand, did your marketing work? If the consumer’s personal AI agent queries your API based on criteria the consumer specified months ago, which campaign gets credit?

Traditional attribution models assume human journeys with identifiable touchpoints. Agent-mediated journeys collapse touchpoints and obscure the paths that led to selection. The attribution challenge you face today intensifies dramatically.

New attribution questions emerge:

GEO attribution. Your content influenced an LLM during training. That LLM now recommends you. How do you attribute revenue to content that trained an AI?

Agent influence attribution. An agent’s selection criteria were shaped by many factors – some within your control (your data quality), some outside it (agent algorithm updates). How do you isolate your contribution?

Multi-agent attribution. The consumer’s agent consulted with the vendor’s agent, which consulted with a marketplace agent. Who influenced the final decision?

The honest answer: current attribution frameworks are not equipped for agentic commerce. New models will emerge, but expect a period of measurement uncertainty.

Speed Advantages Evaporate

In current lead generation, speed-to-contact creates competitive advantage. The first responder wins. But when the “contact” is an API query and the response is instantaneous, speed differentiates nothing.

Competition shifts to selection criteria: Which providers does the agent include in its consideration set? What parameters determine ranking? How does an agent evaluate “trustworthiness” when comparing offers?

The speed-to-contact metric becomes irrelevant because there is no “contact” to respond to. The agent query and your response happen in milliseconds. Everyone responds instantly. The differentiation happens before the query – in whether you are included at all.

Quality Signals Transform

Human buyers evaluate providers through brand familiarity, website professionalism, review sentiment, and social proof. These signals evolved to influence human psychology.

AI agents optimize for different criteria: data structure quality, response completeness, verification credentials, price competitiveness, availability accuracy. The “signals” that win agent consideration may differ substantially from those that win human conversion.

Consider how different signals perform:

Brand recognition. Matters to humans (familiarity equals trust). May matter less to agents (unless brand is a proxy for data quality or verified credentials).

Visual design. Matters to humans (professional appearance signals trustworthiness). Irrelevant to agents (they do not see your website).

Testimonials. Matter to humans (social proof persuades). May matter to agents only if structured as verifiable review data with provenance.

Response time. Matters in human context (speed wins). Table stakes in agent context (everyone is fast).

Data completeness. Moderate importance to humans (they will ask if information is missing). Critical to agents (missing data may mean exclusion from consideration).

Verifiable credentials. Nice-to-have for humans (they often do not check). Essential for agents (may be hard selection criteria).

The organizations that succeed in agentic commerce will build the trust signals that matter to machines, not just the persuasion signals that matter to humans.
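One way to picture the difference is a toy selection routine in which providers missing required fields or a verified credential are dropped before any ranking happens. This is a sketch under invented assumptions: the field names, the `REQUIRED_FIELDS` set, and the price-only ranking are hypothetical, and the criteria real agents apply are not public.

```python
REQUIRED_FIELDS = {"price", "coverage", "service_area"}  # assumed hard requirements

def consideration_set(providers: list) -> list:
    """Drop incomplete or unverified providers, then rank the rest by price."""
    eligible = [
        p for p in providers
        if REQUIRED_FIELDS.issubset(p)            # data completeness
        and p.get("license_verified") is True     # verifiable credential
    ]
    return [p["name"] for p in sorted(eligible, key=lambda p: p["price"])]

providers = [
    {"name": "A", "price": 120, "coverage": "full", "service_area": "TX", "license_verified": True},
    {"name": "B", "price": 95, "coverage": "full", "license_verified": True},   # no service_area
    {"name": "C", "price": 110, "coverage": "full", "service_area": "TX", "license_verified": True},
    {"name": "D", "price": 80, "coverage": "full", "service_area": "TX"},       # unverified
]
shortlist = consideration_set(providers)
```

In this toy example the two cheapest providers never reach the ranking step, because one omits its service area and the other lacks a verified credential.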

Machine-to-Machine Lead Transactions

Major payment processors have built systems enabling AI agents to initiate and complete transactions autonomously. No human fills out a form. No human clicks “submit.” The algorithm decides; the algorithm buys.

What’s Changing

The “conversion moment” – the point where a prospect becomes a lead or customer – is migrating upstream. In traditional lead generation, conversion happens on your landing page when a human submits information. In agentic commerce, conversion happens in the agent’s decision logic before it ever contacts you.

That lead form you spent years perfecting? An AI agent might bypass it entirely, querying your API instead. The persuasive copy, the trust badges, the testimonials – none of it matters to an algorithm evaluating structured parameters.

The Payment Infrastructure

Visa, Mastercard, PayPal, and Stripe have all invested in agent payment capability. Key infrastructure elements include agent authentication (tokenized permissions with transaction limits), fraud prevention (new models based on agent reputation and authorization chains), dispute resolution (frameworks for agent-initiated purchases the human did not want), and spending controls (budgets and approval thresholds that constrain agent purchasing).
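The spending-control idea can be sketched in a few lines. The `AgentMandate` type, its fields, and the `authorize` function below are hypothetical illustrations, not the API of any payment network. They only show how a budget, a per-purchase limit, and an approval threshold might interact.

```python
from dataclasses import dataclass

@dataclass
class AgentMandate:
    """Hypothetical permissions a human grants to a purchasing agent."""
    per_purchase_limit: float   # above this, the purchase is refused outright
    approval_threshold: float   # above this, a human must confirm
    remaining_budget: float     # total the agent may still spend

def authorize(mandate: AgentMandate, amount: float) -> str:
    """Decide whether the agent may complete a purchase of `amount`."""
    if amount > mandate.per_purchase_limit or amount > mandate.remaining_budget:
        return "declined"
    if amount > mandate.approval_threshold:
        return "needs_human_approval"
    mandate.remaining_budget -= amount  # only auto-approved purchases draw down the budget
    return "approved"

mandate = AgentMandate(per_purchase_limit=500.0, approval_threshold=200.0, remaining_budget=600.0)
results = [authorize(mandate, a) for a in (150.0, 300.0, 700.0, 180.0)]
```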

Lead Generation Implications

For lead generators, M2M commerce means:

Form bypass. When agents can query and transact programmatically, the form-based lead capture model becomes optional rather than required. You need to be reachable through API, not just through landing pages.

Instant response requirement. Agent queries expect immediate responses. The 24-hour lead response time that feels fast in human terms is far too slow for machine interactions. API latency becomes a competitive differentiator.

Transparent pricing. Agents optimize on explicit parameters. Hidden fees, unclear pricing, and “call for quote” models disadvantage you in agent consideration sets. Price transparency becomes a selection criterion.

Quality signal evolution. Human buyers evaluate providers through brand, reviews, and gut feel. Agents evaluate through structured data, verified credentials, and quantifiable metrics. The trust signals that win must be machine-readable.

Information Syndication: The New Lead Generation Model

The paradigm shift from “traffic generation” to “information syndication” redefines what lead generation means.

The Mindset Shift

Stop thinking about “lead capture.” Start thinking about “information syndication.”

Your website is not a destination anymore – it’s a source of truth for AI intermediaries. The question is not “how do I get humans to my site?” It’s “how do I ensure AI agents find, trust, and recommend my data?”

This represents a fundamental inversion of the lead generation model. For twenty years, success meant attracting humans to your properties and converting them there. In the agentic model, success means syndicating your information to AI systems that make recommendations on your behalf.

Traditional web metrics become partially obsolete. Page views matter less when agents consume data through APIs. Time-on-site is irrelevant when agents process information in milliseconds. Bounce rate means nothing when the “visitor” is a bot extracting structured data.

The metrics that matter are: inclusion rate (how often do agents recommend you?), citation rate (how often do AI summaries reference your content?), and conversion rate from agent-initiated queries.
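Each of these metrics is a simple ratio. The sketch below computes them from made-up monthly counts. The variable names and numbers are illustrative assumptions, and in practice the inputs would come from your own API logs and an AI-visibility tool.

```python
def rate(numerator: int, denominator: int) -> float:
    """Safe ratio; returns 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0

# Hypothetical monthly counts.
agent_queries_in_category = 4000   # relevant agent queries observed
queries_including_you = 600        # responses that listed you as an option
ai_answers_sampled = 250           # AI summaries sampled for your topics
answers_citing_you = 20            # sampled summaries that referenced your content
agent_initiated_sales = 45         # sales that began with an agent query

inclusion_rate = rate(queries_including_you, agent_queries_in_category)
citation_rate = rate(answers_citing_you, ai_answers_sampled)
agent_conversion_rate = rate(agent_initiated_sales, queries_including_you)
```

With these example numbers, the inclusion rate is 15%, the citation rate is 8%, and the agent-initiated conversion rate is 7.5%.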

From Destination to Source

Consider how this changes lead generation strategy:

SEO optimized for rankings becomes GEO optimized for citations. Traditional SEO aimed to rank number one for target keywords, driving human clicks. GEO aims to be cited by AI systems when users ask relevant questions. The optimization techniques differ significantly.

Landing pages designed for conversion become data structures designed for extraction. Landing pages used psychology, design, and copy to persuade humans. Data structures use schemas, APIs, and structured formats to inform machines. The skillsets are different.

Traffic acquisition becomes information distribution. Media buying drove humans to your site. Information syndication ensures your data reaches AI systems wherever they operate. The channels are different.

Form submissions become API queries. The lead used to arrive as a form submission containing consumer-entered data. The lead may arrive as an API query containing agent-compiled parameters. The technical requirements are different.

Structured Data Imperative

AI agents do not read websites the way humans do. They parse structured data, process API responses, and extract information from machine-readable formats. Sites without structured data become “invisible” to agent-mediated discovery.

The implementation requirements:

Schema markup becomes essential rather than optional. JSON-LD structured data for products, services, offers, and organizations helps AI systems understand and represent your information accurately. For lead generators, particularly important schemas include LocalBusiness, Service, Offer, and AggregateRating.

API-first architecture enables agent queries. “Headless” content systems that serve structured data through APIs position you for agent integration as protocols mature.

Content optimization shifts focus. Rather than writing for human persuasion, content needs to be factually dense, clearly structured, and easily extractable. The TL;DR summary matters more than the narrative journey.

Data freshness matters. AI systems can evaluate recency. Content with recent timestamps and current data signals active maintenance – a trust factor for AI evaluation.
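To make the schema requirement concrete, here is a minimal sketch that builds a JSON-LD block combining the LocalBusiness, Service, Offer, and AggregateRating types and wraps it in the script tag used to embed it in a page. The business details are invented placeholders, and `to_json_ld_script` is a hypothetical helper name. Check the property choices against the Schema.org documentation for your own vertical.

```python
import json

# Hypothetical business details; replace with your own verified data.
service_markup = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "name": "Example Insurance Agency",
    "areaServed": "Texas",
    "aggregateRating": {
        "@type": "AggregateRating",
        "ratingValue": 4.6,
        "reviewCount": 212,
    },
    "makesOffer": {
        "@type": "Offer",
        "price": "89.00",
        "priceCurrency": "USD",
        "itemOffered": {
            "@type": "Service",
            "name": "Auto insurance quote",
            "serviceType": "Auto insurance",
        },
    },
}

def to_json_ld_script(data: dict) -> str:
    """Wrap a dict in the script tag used to embed JSON-LD in a page."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"

snippet = to_json_ld_script(service_markup)
```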

API-First Architecture for the Agentic Future

“Headless” content architecture – separating content management from presentation – becomes strategic infrastructure for agentic commerce.

Why Architecture Matters

Traditional websites couple content with presentation. The information exists as rendered HTML pages designed for human browsers. AI agents can scrape this information, but the process is inefficient and error-prone.

API-first architecture separates concerns. Content lives in structured databases, accessible through documented APIs. Human-facing websites render that content for browsers. Agent-facing endpoints serve the same content in machine-optimized formats.

This architectural shift enables:

Multi-channel publishing. The same content serves human websites, mobile apps, and AI agents through appropriate interfaces. Update the data once; every channel reflects the change.

Real-time accuracy. Agents query current data rather than cached pages. Inventory, pricing, and availability reflect actual state. For lead generation, this means quote availability, pricing tiers, and qualification criteria remain synchronized across all interfaces.

Efficient processing. Agents receive structured responses rather than parsing HTML. The interaction is faster and more reliable. What takes an agent 30 seconds of web scraping takes 200 milliseconds via API.

Extensibility. As new agent protocols emerge (ACP, A2A, AP2, MCP), API-first architecture adapts more easily than tightly-coupled websites. The data layer remains stable while presentation layers evolve.
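A toy version of that separation: one record acts as the source of truth, and two small renderers serve it to browsers and to agents. The record, its fields, and the function names are hypothetical. A real deployment would sit on a content store and a web framework rather than module-level data.

```python
import json
from html import escape

# Single source of truth (hypothetical record from a content store).
PLAN = {"id": "std-auto", "name": "Standard Auto", "monthly_price": 142.0, "states": ["TX", "OK"]}

def render_html(plan: dict) -> str:
    """Human-facing view: presentation wraps the same data."""
    states = ", ".join(plan["states"])
    return (f"<h2>{escape(plan['name'])}</h2>"
            f"<p>From ${plan['monthly_price']:.2f}/month. Available in {escape(states)}.</p>")

def render_json(plan: dict) -> str:
    """Agent-facing view: the record itself, with no presentation layer."""
    return json.dumps(plan, sort_keys=True)

PLAN["monthly_price"] = 135.0                                  # one update to the data...
html_view, json_view = render_html(PLAN), render_json(PLAN)    # ...is reflected in both channels
```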

The Lead Generation API Opportunity

For lead generators, API-first architecture creates competitive opportunities:

Agent-ready quoting. An AI agent shopping for insurance on behalf of a consumer could query your API with the consumer’s parameters and receive a structured quote response. The interaction that currently takes a human filling out a form happens programmatically.

Real-time inventory. When agents shop for services, availability matters. An API can communicate current capacity, turnaround times, and geographic coverage in ways static web pages cannot.

Qualification automation. The qualification questions you currently ask through forms could be answered through API parameters. The agent pre-qualifies the lead before presenting options to the consumer.

Negotiation capability. In agent-to-agent commerce models, your system needs to receive offers, evaluate parameters, and respond with acceptances or counter-proposals. This requires API infrastructure that does not currently exist in most lead generation operations.
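The negotiation step in particular is easier to reason about with an example. The following sketch shows one possible accept, counter, or reject rule. The `Proposal` type, the price floor, the list price, and the split-the-difference counter are all assumptions made for illustration, not a description of how any existing agent protocol negotiates.

```python
from dataclasses import dataclass

@dataclass
class Proposal:
    monthly_price: float
    term_months: int

FLOOR_PRICE = 120.0   # hypothetical lowest acceptable price
LIST_PRICE = 150.0    # hypothetical published price
MIN_TERM = 6          # hypothetical minimum contract length in months

def respond(offer: Proposal) -> tuple:
    """Return (decision, proposal) where decision is "accept", "counter", or "reject"."""
    if offer.term_months < MIN_TERM:
        return ("reject", None)
    if offer.monthly_price >= FLOOR_PRICE:
        return ("accept", offer)
    # Counter halfway between the buyer's offer and list price, never below the floor.
    counter_price = max(FLOOR_PRICE, round((offer.monthly_price + LIST_PRICE) / 2, 2))
    return ("counter", Proposal(counter_price, offer.term_months))
```

For example, `respond(Proposal(100.0, 12))` returns a counter-proposal at 125.0, while a three-month term is rejected regardless of price.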

Implementation Approach

For existing operations, layer agent-ready capabilities onto current infrastructure:

Start with data structure. Map your lead-relevant information into structured schemas: service types, geographic coverage, pricing structures, qualification requirements, availability, and trust signals.

Build API endpoints. Create authenticated endpoints serving structured data. Start with read-only endpoints before implementing transactional ones.

Implement Schema markup. Even without full API architecture, Schema.org markup makes existing pages more machine-readable – the minimum viable preparation.

Document for agents. Provide integration documentation as protocols mature. Think of this as the agentic equivalent of your website.

Version carefully. Agent integrations create dependencies. Plan for versioning from the start.
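The endpoint and versioning steps above might look like this in miniature. The sketch uses a plain function in place of a web framework so it stays self-contained. The route table, the `/v1/services` path, the demo API key, and the response shape are hypothetical choices.

```python
import json

API_KEYS = {"demo-key"}  # hypothetical; load real keys from secure storage
SERVICES = [{"id": "auto", "name": "Auto insurance leads", "states": ["TX", "OK"]}]

def v1_services() -> dict:
    return {"services": SERVICES}

# Read-only routes keyed by (method, path). The version lives in the path so a
# future /v2/ can change the response shape without breaking /v1/ clients.
ROUTES = {("GET", "/v1/services"): v1_services}

def handle(method: str, path: str, api_key: str) -> tuple:
    """Return (status_code, json_body) for a request."""
    if api_key not in API_KEYS:
        return 401, json.dumps({"error": "invalid API key"})
    if method != "GET":
        return 405, json.dumps({"error": "read-only API"})
    handler = ROUTES.get((method, path))
    if handler is None:
        return 404, json.dumps({"error": "unknown endpoint"})
    return 200, json.dumps(handler())

status, body = handle("GET", "/v1/services", "demo-key")
```

Starting read-only keeps the first release low-risk. Transactional routes can be added later under the same versioning scheme.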

The Visibility Imperative

Companies without structured data become “invisible” to agent-mediated discovery. Providers locked in unstructured websites simply do not exist in the agent’s consideration set. This is the agentic equivalent of not appearing in Google search results – except in agent-mediated commerce, the provider that cannot be queried programmatically may not be found at all.

Generative Engine Optimization: Marketing to Algorithms

As discovery shifts from Google to AI platforms, a new discipline has emerged: Generative Engine Optimization (GEO) – optimizing content for AI citation rather than search ranking.

The Discovery Shift

ChatGPT reached 100 million users faster than any consumer application before it. As of late 2025, it has more than 800 million weekly users. Google’s AI Overviews appear on billions of searches monthly – at least 13% of all search results pages. Industry projections suggest LLM traffic will overtake traditional Google search by the end of 2027.

This is not replacing SEO. It’s adding a parallel optimization requirement. Your content needs to rank in traditional search AND be cited by AI systems AND be queryable by AI agents. Each requires different optimization approaches. Our guide to SEO for lead generation covers traditional optimization, while GEO represents the emerging frontier.

GEO vs. SEO

Traditional SEO optimizes for ranking factors: backlinks, keywords, technical signals, user engagement. The goal is appearing high in search results so users click through to your site.

GEO optimizes for citation factors: content structure, factual density, source authority, semantic clarity. The goal is being included in AI-generated answers – often without users visiting your site at all.

Key differences:

Source selection. LLMs typically cite 2-7 domains per response, far fewer than Google’s 10+ blue links. Getting included in that smaller set requires different signals.

Extraction over ranking. AI systems extract and synthesize information. Content optimized for easy extraction – clear headers, bullet points, summary sections – performs better than narrative content optimized for engagement.

Authority weighting. LLMs heavily weight perceived authority. Wikipedia dominates ChatGPT citations (47.9% of top citations). Authoritative industry sources, research publications, and established platforms receive preferential treatment.

GEO Implementation

Practical optimization for AI citation:

Structure for extraction. Use clear headers, TL;DR summaries, and organized sections that AI can parse and cite. The content immediately following headers should directly answer the implicit question. For lead generation content, this means structuring service pages with explicit capability statements, pricing frameworks, and qualification criteria that AI systems can extract and present.

Include citable facts. Statistics, specific numbers, dated information, and verifiable claims are more likely to be cited than general statements. “30% of leads are fraudulent” cites better than “lead fraud is common.” For lead generators, this means documenting your specific performance metrics, customer outcomes, and operational capabilities in formats AI systems can reference.

Build external authority. AI systems evaluate source credibility partly through external validation. Brand mentions across authoritative sites, Wikipedia presence, and industry citations all contribute to LLM trust signals. Getting mentioned in industry publications, analyst reports, and authoritative reviews improves your likelihood of AI citation.

Implement structured data. Schema markup helps AI systems understand your content’s meaning and context. For lead generation businesses, particularly valuable schemas include:

- Organization schema with your service area, contact information, and credentials

- Service schema detailing your offerings and pricing models

- FAQ schema answering common buyer questions

- Review schema aggregating customer testimonials

Monitor AI visibility. New tools track how often your content is cited in AI responses. Platforms like Profound, Semrush’s AI Toolkit, and others enable tracking brand visibility across AI platforms. Establish baseline visibility metrics now to measure improvement.

Optimize for voice and chat interfaces. As more queries come through voice assistants and chat interfaces, content optimized for natural language queries outperforms keyword-stuffed pages. Write the way people ask questions, not the way traditional SEO suggested.

The Lead Generation GEO Challenge

Lead generators face a particular challenge: content optimized for persuasion is poorly suited for extraction. An AI agent evaluating an insurance lead generator does not need testimonial videos or urgency messaging. It needs coverage types, service areas, pricing structures, and qualification requirements in extractable formats.

Algorithmic Brand Safety: Managing AI Reputation

In an agentic world, brand perception is not just shaped by your marketing – it’s shaped by what AI systems say about you. Managing algorithmic reputation becomes a critical function.

The New Reputation Risk

AI systems can “hallucinate” – generating plausible but false information. When an AI agent tells a consumer incorrect information about your offerings, you have a reputation problem you did not create and may not even know about.

The hallucination risk is real. In documented cases, AI systems have generated false claims about companies – incorrect pricing, fabricated reviews, nonexistent products, and invented controversies. The consumer receiving this information has no way to distinguish hallucination from fact. They act on incorrect information, and you bear the consequences.

More subtly, AI systems absorb sentiment from training data. If online discussions about your brand skew negative, AI responses may reflect that sentiment – steering agents and users away from your offerings based on accumulated perception rather than current reality.

The training data problem creates lag. Improvements you make today do not appear in AI responses until the next training cycle. A reputation crisis that you resolved months ago may continue affecting AI recommendations if the training data captured the crisis but not the resolution.

New Threat Vectors

New risks include competitive manipulation (poisoning training data with negative information), outdated information presented as current, context collapse (criticism for one product applied company-wide), and synthetic content loops where AI systems train on AI-generated content about your brand.

Reputation Management for AI

Monitor AI outputs. Regularly query major AI platforms about your brand. Build a monitoring program that samples AI responses systematically, not just when problems emerge.

Correct misinformation proactively. Surface accurate data through authoritative channels. Update structured data and refresh key content to make accurate information more accessible than inaccurate information.

Manage training data exposure. Publishing accurate, authoritative content improves the training data that influences AI perception. In practice, that means keeping Wikipedia entries up to date, publishing on authoritative platforms, and ensuring your content is well-indexed.

Build trust signals. AI systems evaluate trustworthiness through domain authority, external citations, consistency across sources, and recency of updates. Invest in signals that AI systems weight in credibility assessments.

Prepare for crisis response. Document correct information, establish channels for reaching AI platform providers, and develop communication strategies for customers who received incorrect AI-generated information.
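The monitoring step above can be sketched in a few lines. This is a minimal illustration, not a production tool: it assumes you have already collected AI responses by some means (how you query each platform is out of scope here), and the names `Sample` and `audit_samples`, the brand, and the price are all hypothetical. It reports how often the brand is mentioned and flags responses that quote a price other than the one you know to be correct.

```python
import re
from dataclasses import dataclass


@dataclass
class Sample:
    platform: str  # hypothetical label for the AI platform that was sampled
    prompt: str    # the question that was asked
    response: str  # the text the platform returned


def audit_samples(samples, brand, correct_price):
    """Return (mention_rate, flagged) for a batch of sampled responses.

    mention_rate: share of responses that mention the brand at all.
    flagged: brand-mentioning responses that quote dollar amounts,
             none of which match correct_price.
    """
    price_pattern = re.compile(r"\$(\d+(?:\.\d{1,2})?)")
    mentioned = [s for s in samples if brand.lower() in s.response.lower()]
    flagged = []
    for s in mentioned:
        prices = {float(p) for p in price_pattern.findall(s.response)}
        if prices and correct_price not in prices:
            flagged.append(s)
    rate = len(mentioned) / len(samples) if samples else 0.0
    return rate, flagged


samples = [
    Sample("platform-a", "best auto insurance?", "Acme Insurance plans start at $49 per month."),
    Sample("platform-b", "best auto insurance?", "Acme Insurance starts at $29."),
    Sample("platform-c", "best auto insurance?", "Consider comparing several providers."),
]
rate, flagged = audit_samples(samples, "Acme Insurance", 49.0)
print(f"mention rate: {rate:.0%}; flagged platforms: {[s.platform for s in flagged]}")
```

Running the same prompts on a schedule and storing the results gives you a baseline, so a sudden drop in mention rate or a new flagged response stands out rather than being discovered by accident.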

Preparing for the Agentic Future: A Practical Roadmap

The transformation timeline provides a window – but the window is measured in years, not decades. Here’s how to prepare:

Immediate Actions (Now)

Audit your data architecture. Can AI agents access structured information about your offerings? If your data exists only as rendered web pages, you are unprepared for agent queries.

Implement Schema markup. The minimum viable preparation is comprehensive Schema.org structured data on all key pages. This improves both GEO visibility and future agent accessibility.

Monitor AI visibility. Start tracking how your brand appears in AI-generated responses. Establish baselines before optimization so you can measure improvement.

Preserve your human infrastructure. Agentic commerce is additive; it does not immediately replace human channels. Continue optimizing for human conversion while building agent readiness.
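For the Schema markup step above, a small sketch may help make "structured data" concrete. It builds a Schema.org JSON-LD document for a hypothetical insurance lead generator and wraps it in the script tag that pages typically embed. The business name, URL, service areas, and price are invented for illustration, and the choice of the `InsuranceAgency` type with `makesOffer` is one reasonable modeling option, not the only one.

```python
import json


def build_jsonld(name, url, areas_served, offers):
    """Build a Schema.org JSON-LD document as a plain dict.

    offers is a list of (service_type, monthly_price_usd) tuples.
    """
    return {
        "@context": "https://schema.org",
        "@type": "InsuranceAgency",
        "name": name,
        "url": url,
        "areaServed": list(areas_served),
        "makesOffer": [
            {
                "@type": "Offer",
                "itemOffered": {"@type": "Service", "serviceType": service_type},
                "price": f"{price:.2f}",
                "priceCurrency": "USD",
            }
            for service_type, price in offers
        ],
    }


def render_script_tag(doc):
    """Wrap the JSON-LD document in the script tag used to embed it in a page."""
    return '<script type="application/ld+json">\n' + json.dumps(doc, indent=2) + "\n</script>"


# Hypothetical example values.
doc = build_jsonld(
    "Example Insurance Co.",
    "https://www.example.com",
    ["TX", "OK"],
    [("Auto insurance", 49.0)],
)
print(render_script_tag(doc))
```

Generating the markup from the same data source that feeds your pages keeps the structured version and the human-readable version from drifting apart.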

Medium-Term Preparation (2026-2027)

Build API capability. Develop endpoints that can serve structured data about your products, services, pricing, and availability. Even before agent protocols mature, API infrastructure positions you for integration.

Track protocol development. Monitor ACP, A2A, AP2, and MCP progress. Understand which protocols are gaining adoption in your vertical and prepare integration plans.

Experiment with agent interactions. As agent shopping tools become available, test how they evaluate your offerings. Identify gaps in your data or presentation that disadvantage you in agent consideration.

Develop algorithmic trust strategy. Understand what signals AI agents use to evaluate trustworthiness. Build those signals systematically – not just for current visibility, but for future agent selection.
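To illustrate the API capability described above, here is a minimal sketch of an endpoint that answers a structured query with structured data. It uses only the Python standard library and the WSGI interface; the `/offers` path, the query parameters, and the catalog contents are all hypothetical, and no agent protocol (ACP, A2A, AP2, MCP) is implemented here.

```python
import json
from urllib.parse import parse_qs

# Hypothetical catalog; in practice this would come from your own systems.
CATALOG = [
    {"coverage_type": "auto", "states": ["TX", "OK"], "monthly_price_usd": 49.0, "min_age": 18},
    {"coverage_type": "home", "states": ["TX"], "monthly_price_usd": 89.0, "min_age": 18},
]


def find_offers(coverage_type, state):
    """Return catalog entries matching the coverage type and service area."""
    return [
        offer for offer in CATALOG
        if offer["coverage_type"] == coverage_type and state in offer["states"]
    ]


def app(environ, start_response):
    """Minimal WSGI app answering GET /offers?coverage_type=auto&state=TX."""
    params = parse_qs(environ.get("QUERY_STRING", ""))
    coverage = params.get("coverage_type", [""])[0].lower()
    state = params.get("state", [""])[0].upper()
    if environ.get("PATH_INFO") != "/offers" or not coverage or not state:
        # For brevity, every malformed request gets the same 400 response.
        status = "400 Bad Request"
        body = {"error": "expected /offers?coverage_type=...&state=..."}
    else:
        status = "200 OK"
        body = {"offers": find_offers(coverage, state)}
    payload = json.dumps(body).encode("utf-8")
    start_response(status, [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(payload))),
    ])
    return [payload]

# To try it locally, serve `app` with the standard library:
#   from wsgiref.simple_server import make_server
#   make_server("127.0.0.1", 8000, app).serve_forever()
```

The point of the sketch is the shape of the exchange: parameters in, machine-readable offers out, with no landing page or form in between.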

Strategic Positioning (2028+)

Deploy agent-ready infrastructure. Integrate fully with the dominant agentic commerce protocols, and expose endpoints that agents can query, negotiate with, and transact through.

Develop agent-specific optimization. Just as you currently A/B test for human conversion, you will need to optimize for agent selection. Understand the parameters agents weight and position accordingly.

Maintain human capability. Not all commerce will shift to agents simultaneously. Different demographics, verticals, and use cases will adopt at different rates. Maintain capability across channels.

Frequently Asked Questions

1. What exactly is agentic commerce?

Agentic commerce is a model where AI agents shop, negotiate, and complete transactions on behalf of humans – autonomously. Unlike chatbots that answer questions, these systems receive a goal (“Find me the best auto insurance”), execute multi-step tasks across platforms, compare options, and can complete purchases without human intervention at each step. OpenAI, Google, and Anthropic have all released protocols enabling this capability, with live integrations on Shopify, Etsy, and Instacart as of late 2025.

2. How big will agentic commerce become?

McKinsey projects agentic commerce could generate $1 trillion in U.S. B2C retail revenue by 2030, with global projections reaching $3-5 trillion. For context, total U.S. e-commerce in 2024 was approximately $1.1 trillion. The agentic AI market itself is projected at $10 billion by 2026 with 35% compound annual growth. This is not incremental expansion – it’s a parallel commercial architecture emerging alongside traditional e-commerce.

3. Will AI agents completely replace lead forms?

Lead forms will not disappear immediately, but their role will diminish. In the Agent-to-Site model (operational today), agents still complete forms – just faster and without reading persuasive content. In the Agent-to-Agent model (emerging), agents bypass forms entirely, querying APIs directly. The transition will be gradual: human-centric forms remain relevant for segments and use cases that adopt slowly, while API-first infrastructure becomes essential for capturing agent-mediated opportunities.

4. What is machine-to-machine (M2M) commerce in lead generation?

M2M commerce occurs when AI buyer agents negotiate directly with seller agents without human interface. Parameters are exchanged, negotiations occur, and transactions complete – all machine to machine. For lead generation, this means the “conversion moment” migrates from your landing page to the algorithm’s decision logic. Major payment processors (Visa, Mastercard, PayPal, Stripe) have built infrastructure enabling agents to initiate transactions autonomously with appropriate authentication and fraud controls.

5. What is Generative Engine Optimization (GEO)?

GEO optimizes content for AI citation rather than search engine ranking. As discovery shifts from Google to AI platforms – ChatGPT has 800 million weekly users, and LLM traffic is projected to overtake Google search by end of 2027 – being cited in AI responses becomes as important as ranking in search results. GEO requires structuring content for extraction, including citable facts, building external authority, and implementing comprehensive Schema.org markup. LLMs typically cite only 2-7 domains per response, making inclusion in that small set critical.

6. How do I make my business visible to AI agents?

Start with structured data. Implement Schema.org markup (JSON-LD format) for products, services, pricing, and organization information. Build API endpoints that can serve structured responses to agent queries. Ensure your content is factually dense, clearly organized, and easily extractable. Monitor how AI systems represent your brand and correct misinformation proactively. Companies without machine-readable data become invisible to agent-mediated discovery – the agentic equivalent of not appearing in Google search results.

7. What protocols should I prepare for?

Four major protocols are emerging. OpenAI’s Agentic Commerce Protocol (ACP), developed with Stripe, enables transactions within ChatGPT. Google’s Agent-to-Agent (A2A) Protocol enables autonomous agent communication. Anthropic’s Model Context Protocol (MCP) standardizes how AI systems access external resources. Google’s Agent Payments Protocol (AP2) covers agent-initiated payments. Track which protocols gain adoption in your vertical and prepare integration plans accordingly. The protocols are not mutually exclusive, so you may need to support more than one.

8. How does attribution work in agentic commerce?

Attribution becomes significantly more complex. If ChatGPT recommends your brand based on content that influenced its training, which campaign gets credit? If a consumer’s agent queries your API based on preferences specified months ago, what drove the conversion? Traditional attribution models assume human journeys with identifiable touchpoints. Agent-mediated journeys collapse touchpoints and obscure decision paths. New attribution frameworks are needed; expect a period of measurement uncertainty as the industry adapts.

9. What is algorithmic brand safety?

Algorithmic brand safety involves managing how AI systems represent your brand. AI can hallucinate – generating plausible but false information about your pricing, products, or reputation. Training data lag means improvements you make today may not appear in AI responses until the next training cycle. Competitive manipulation through poisoned training data is an emerging threat. Monitor AI outputs about your brand regularly, correct misinformation through authoritative channels, and build trust signals that AI systems weight in credibility assessments.

10. How much time do I have to prepare?

The window is measured in years, not months – but the timeline is compressed. Foundation-phase infrastructure shipped in 2024-2025. Early adoption will accelerate through 2026-2027. Mainstream B2B integration arrives 2027-2028. Market penetration reaches critical mass by 2029-2030. Practitioners who build agent-ready infrastructure now – structured data, API architecture, GEO optimization, algorithmic trust signals – will capture compounding advantages. Those who wait until agent commerce is dominant will rebuild from scratch while competitors operate at scale.

Key Takeaways

- McKinsey projects agentic commerce will generate $1 trillion in U.S. B2C revenue and $3-5 trillion globally by 2030 – equal to or exceeding current total e-commerce. ChatGPT’s 800 million weekly users and live shopping integrations signal mainstream adoption is underway.

- Three interaction models are emerging: Agent-to-Site (AI browses human websites), Agent-to-Agent (machine-to-machine negotiation), and Brokered Interactions (platform intermediaries connecting buyer and seller agents).

- AI agents will bypass lead forms, querying APIs directly with structured parameters. The “conversion moment” migrates from landing page optimization to algorithmic selection criteria. Operators without API infrastructure become invisible to agent-mediated commerce.

- Speed-to-contact advantages evaporate when everyone responds instantly. Competition shifts to inclusion rate – whether agents consider you at all – and the trust signals that influence algorithmic selection.

- Generative Engine Optimization (GEO) becomes a parallel discipline to SEO. LLMs cite only 2-7 domains per response, and industry projections suggest LLM traffic will overtake traditional Google search by end of 2027.

- API-first architecture and comprehensive Schema.org markup transform from nice-to-have to competitive requirement. Companies without machine-readable data become invisible to agent-mediated discovery.

- Algorithmic brand safety requires monitoring AI outputs, correcting misinformation proactively, and building trust signals that matter to machines – not just persuasion signals that matter to humans.

- The timeline is compressed: foundation phase complete (2024-2025), early adoption underway (2026-2027), mainstream B2B integration arriving (2027-2028), market penetration reaching critical mass by 2029-2030. The window for preparation is open, but shrinking.

Sources

- Model Context Protocol - Anthropic’s open standard for connecting AI assistants to external data sources and tools, enabling the agent-to-system integration described in agentic commerce architectures.

- Schema.org - The structured data vocabulary referenced for enabling machine-readable content that AI agents can parse directly, essential for agent-to-site interaction patterns.

- Google Developers: Structured Data - Technical documentation for implementing the structured data markup that enables AI agents and search engines to understand and process business information.

- Meta AI Research - Research publications on large language models and AI agent capabilities underlying the agentic commerce projections.

- IBM: Machine Learning - Technical reference for the machine learning concepts powering AI agent decision-making, including the neural network architectures behind autonomous systems.

Statistics, protocol information, and market projections current as of December 2025. Agentic commerce capabilities and adoption continue to evolve rapidly.