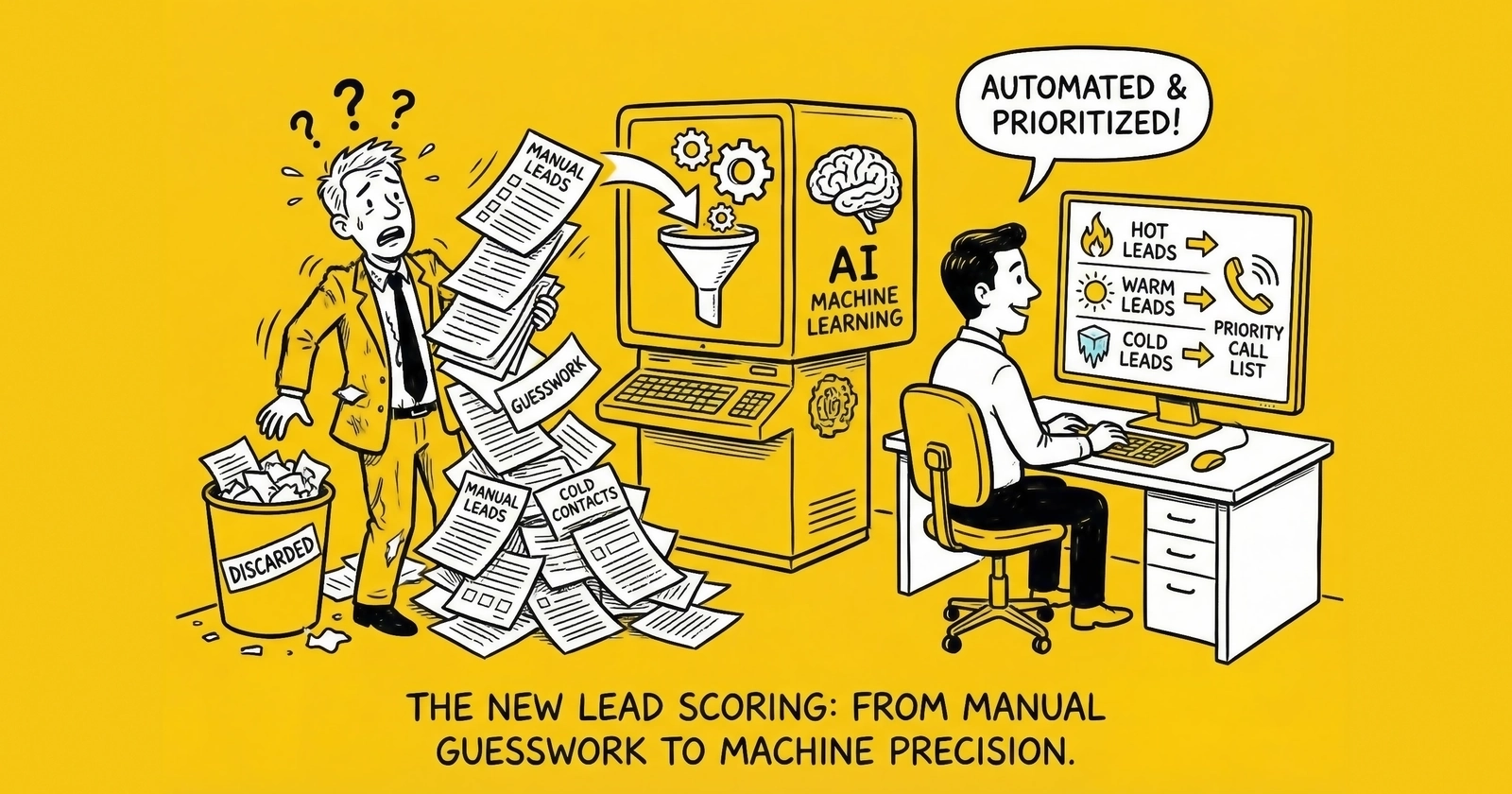

Predictive lead scoring separates operators who optimize from those who guess. Machine learning models find patterns invisible to human intuition, enabling 15-40% improvements in conversion rates by focusing resources on leads most likely to buy.

Your sales team calls leads in the order they arrive. The first lead of the morning might be a tire-kicker who browsed your site for 30 seconds and submitted a form to access a free guide. The twentieth lead might be a decision-maker with budget authority who spent 12 minutes comparing your pricing page against competitors and submitted a detailed request for consultation.

Both leads look identical in your CRM. Same fields populated. Same timestamp priority. Same treatment.

This is the lead prioritization problem, and it costs businesses millions annually in misallocated sales resources, slower response times to high-value prospects, and conversion rates that plateau despite increasing lead volume. Traditional scoring methods, based on demographic filters and arbitrary point systems, capture only the most obvious patterns. They miss the subtle behavioral signatures that actually predict conversion.

Machine learning changes the equation fundamentally. Rather than humans guessing which characteristics matter, algorithms analyze thousands of conversion outcomes and identify patterns that would never occur to even experienced practitioners. The result: lead prioritization that actually predicts who will buy, not just who looks good on paper.

This article provides the complete framework for implementing AI-powered lead scoring: how these systems work, what data feeds them, how to build and maintain scoring models, and the realistic outcomes operators achieve. Whether you are building internal scoring capability or evaluating vendor solutions, understanding these mechanics separates strategic adoption from expensive disappointment.

The Business Case for Predictive Lead Scoring

Before diving into technical architecture, let us establish why AI lead scoring matters operationally and financially. The investment required, both in technology and organizational change, demands a clear understanding of the payoff.

The Priority Problem

Every lead generation operation faces the same fundamental constraint: limited sales capacity meeting variable lead quality. A sales team can only work so many leads per day. Response time matters enormously. Speed-to-lead research consistently shows that leads contacted within five minutes convert at dramatically higher rates than those contacted in 30 minutes or more, a principle explored in depth in our speed-to-lead guide.

But not all leads deserve equal speed. A lead with 5% conversion probability should not receive the same immediate attention as a lead with 35% conversion probability. When sales teams treat all leads equally, they systematically under-prioritize high-value opportunities while over-investing in leads that will never convert.

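The math compounds quickly. Consider an operation generating 500 leads daily with a sales team capable of contacting 300 within the first hour. Without scoring, those 300 contacts are essentially random selections from the 500, so they reach about 60% of the leads that would eventually convert. With effective scoring, those same 300 contacts target the leads with the highest conversion probability and can reach nearly all eventual converters. In this example that caps the gain at roughly 1.67x the first-hour conversions; the multiple grows as capacity shrinks relative to volume, and none of it requires adding sales headcount.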
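The arithmetic behind that example can be sketched in a few lines. The number of true converters and the share a model captures are illustrative assumptions, not figures from this article; only the 500 leads and 300 contacts come from the scenario above.

```python
# Illustrative assumption: 50 of the 500 daily leads would convert if contacted promptly.
DAILY_LEADS = 500
CONTACT_CAPACITY = 300
TRUE_CONVERTERS = 50  # hypothetical value chosen for the example


def expected_conversions(share_of_converters_reached: float) -> float:
    """Conversions won when the contacted set contains this share of all converters."""
    return TRUE_CONVERTERS * share_of_converters_reached


# Random selection reaches converters in proportion to capacity: 300 / 500 = 60%.
random_share = CONTACT_CAPACITY / DAILY_LEADS
random_conversions = expected_conversions(random_share)

# A hypothetical model that ranks 90% of converters inside the top 300 scores.
scored_conversions = expected_conversions(0.90)

# Upper bound: a perfect ranking reaches every converter.
max_uplift = 1 / random_share

print(f"random: {random_conversions:.0f}, scored: {scored_conversions:.0f}")
print(f"uplift: {scored_conversions / random_conversions:.2f}x (ceiling {max_uplift:.2f}x)")
```

The ceiling depends only on the ratio of capacity to volume: a team able to reach 100 of 500 leads has far more to gain from scoring than one able to reach 300.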

Quantifying the Improvement

Industry research on AI-powered lead scoring implementations reveals consistent patterns. Organizations implementing predictive scoring report 15-40% improvements in conversion rates, depending on baseline sophistication and data quality. Sales cycle length decreases as representatives focus on prospects with genuine buying signals rather than nurturing leads that will never progress.

The efficiency gains extend beyond conversion rates. When sales teams trust the prioritization system, they stop wasting time second-guessing assignments or cherry-picking leads based on intuition. Follow-up sequences align with predicted intent levels. Marketing qualified leads (MQLs) more reliably translate to sales qualified leads (SQLs) because the definition of “qualified” is based on actual outcome data rather than arbitrary thresholds.

Perhaps most importantly, predictive scoring creates feedback loops that improve over time. Every lead that converts or fails to convert feeds additional training data to the model. A model that has been retrained continuously for six months typically outperforms its initial deployment by 20-30% in prediction accuracy. The system gets smarter as you use it.

What Traditional Scoring Misses

Traditional lead scoring assigns points based on demographic fit and explicit engagement. These rules capture obvious patterns that any experienced sales manager would recognize. They do not capture the subtle behavioral signatures that actually differentiate buyers from non-buyers.

Machine learning models find patterns like these:

- Leads who view the pricing page twice with 24-48 hour gaps convert at 3x the rate of single-view leads

- Leads arriving via branded search terms convert at 2.4x the rate of generic category terms

- Leads completing forms in 45-90 seconds convert at higher rates than both faster submissions (potential bots) and slower submissions (potential confusion)

- Leads from certain email domains systematically outperform based on organizational culture characteristics invisible in demographic data

No human would discover these patterns without systematic analysis of thousands of outcomes. The patterns exist in the data, waiting for algorithms sophisticated enough to find them.

How Machine Learning Lead Scoring Works

Understanding the mechanics of predictive scoring helps operators make better implementation decisions, evaluate vendor claims, and maintain systems effectively over time.

The Foundation: Outcome Data

Every machine learning model requires training data connecting inputs (lead characteristics) to outputs (conversion outcomes). For lead scoring, this means historical leads with known results: which leads converted to customers, which progressed to opportunities but stalled, which never engaged after initial contact.

The quality and quantity of this training data determine everything that follows. Models trained on 500 leads produce unreliable predictions. Models trained on 50,000 leads with complete outcome tracking produce actionable intelligence. The minimum threshold for meaningful model development is typically 5,000-10,000 leads with documented outcomes, though more data consistently produces better results.

Outcome definition matters significantly. “Conversion” might mean different things in different contexts:

- Form submission to qualified lead (marketing conversion)

- Qualified lead to sales accepted lead (sales conversion)

- Sales accepted lead to closed deal (revenue conversion)

- Closed deal to successful customer (value conversion)

Each stage can be predicted, but models optimized for earlier stages may not predict later stages accurately. A lead likely to become sales accepted is not necessarily likely to become a paying customer. Organizations must decide which outcome matters most for their operational context and train models accordingly.

Feature Engineering: What the Model Sees

Raw lead data rarely predicts conversion directly. Feature engineering transforms raw inputs into signals that reveal underlying patterns.

Consider a simple data point: the lead arrived at 3:47 PM on a Tuesday. Raw timestamp data tells the model little. Engineered features derived from that timestamp tell much more:

- Hour of day (3 PM falls in afternoon business hours)

- Day of week (Tuesday is typically high-engagement midweek)

- Minutes past the hour (47 might indicate end-of-meeting timing)

- Days from month end (proximity to budget cycles)

- Deviation from typical submission time for this lead source

Each derived feature gives the model another dimension for pattern recognition. Sophisticated scoring systems might derive dozens of features from a single raw data point.
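As a minimal sketch of the timestamp example above, the function below derives several of the listed features from a single `datetime`. The function name, the `typical_hour` parameter (a stand-in for a per-source average submission hour), and the business-hours definition are assumptions made for illustration.

```python
import calendar
from datetime import datetime


def timestamp_features(submitted_at: datetime, typical_hour: float) -> dict:
    """Derive model inputs from one submission timestamp.

    typical_hour is a hypothetical average submission hour for the lead's source.
    """
    days_in_month = calendar.monthrange(submitted_at.year, submitted_at.month)[1]
    hour_fraction = submitted_at.hour + submitted_at.minute / 60
    return {
        "hour_of_day": submitted_at.hour,
        "day_of_week": submitted_at.weekday(),  # Monday=0 ... Sunday=6
        "minutes_past_hour": submitted_at.minute,
        "days_to_month_end": days_in_month - submitted_at.day,
        # Assumed definition of business hours: 9:00-17:00, Monday to Friday.
        "is_business_hours": 9 <= submitted_at.hour < 17 and submitted_at.weekday() < 5,
        "hours_from_source_typical": round(hour_fraction - typical_hour, 2),
    }


# A Tuesday at 3:47 PM; the specific date is arbitrary.
features = timestamp_features(datetime(2024, 3, 12, 15, 47), typical_hour=11.0)
print(features)
```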

The most predictive features typically fall into several categories:

Behavioral features capture how leads interact with your digital properties: page views, time on site, scroll depth, return visits, content consumption patterns, and form interaction dynamics. These features often predict conversion better than any demographic data because they reveal actual intent rather than assumed fit.

Temporal features capture timing patterns: when leads engage, how quickly they progress through content, the time between touchpoints, and response latency to communications. Urgency signals often hide in temporal data invisible to casual observation.

Source features encode origin characteristics: traffic channel, campaign, keyword, referrer, device type, and geographic origin. Different sources attract different lead quality even when leads appear identical on other dimensions. Understanding first-party versus third-party leads provides context for how source influences quality.

Firmographic features describe organizational characteristics for B2B leads: company size, industry, technology stack, recent funding, hiring velocity, and organizational structure. These features predict organizational readiness and budget authority.

Engagement features track interactions with marketing communications: email opens, click patterns, webinar attendance, content downloads, and social engagement. The pattern of engagement often matters more than any single interaction.

Model Architecture Options

Several machine learning approaches apply to lead scoring, each with distinct characteristics.

Logistic regression remains surprisingly effective for lead scoring despite being among the simplest approaches. The model predicts probability of binary outcomes (will convert / will not convert) based on weighted feature contributions. Logistic regression’s primary advantage is interpretability. You can see exactly which features drive predictions and how much each contributes. This transparency helps operators understand and trust the system while identifying data quality issues that might compromise predictions.

Random forest models aggregate predictions from many decision trees, each trained on different data subsets. The ensemble approach reduces overfitting and handles non-linear relationships that logistic regression misses. Random forests typically achieve higher accuracy than logistic regression but sacrifice some interpretability. Feature importance rankings show which inputs matter most, but the interaction effects between features become difficult to trace.

Gradient boosting (implementations like XGBoost, LightGBM, and CatBoost) builds trees sequentially, with each new tree correcting errors from previous trees. These models often achieve the highest accuracy in lead scoring applications but require more careful tuning to prevent overfitting. Gradient boosting has become the default choice for many production scoring systems.

Neural networks can capture arbitrarily complex patterns but require substantially more training data and computational resources. For most lead scoring applications, the marginal accuracy gains from neural networks do not justify the additional complexity. Neural networks become relevant only at very large scale with hundreds of input features and millions of training examples.

The practical recommendation for most operations: start with logistic regression for interpretability and baseline performance, then evaluate whether gradient boosting improvements justify the reduced transparency. Only consider neural networks if you have dedicated data science resources and clear evidence that simpler models leave significant accuracy on the table.
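To make the interpretability argument concrete, the sketch below scores one lead the way a fitted logistic regression would: a weighted sum of features passed through the logistic function. The coefficients and feature names are hypothetical and hand-picked for illustration, not learned from data.

```python
import math

# Hypothetical coefficients, as if learned by a logistic regression; not real results.
INTERCEPT = -3.0
WEIGHTS = {
    "pricing_page_views": 0.8,
    "branded_search": 0.9,
    "phone_valid": 1.2,
}


def score_lead(lead: dict) -> tuple:
    """Return conversion probability and each feature's additive log-odds contribution."""
    contributions = {name: w * lead.get(name, 0.0) for name, w in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1 / (1 + math.exp(-log_odds))
    return probability, contributions


probability, contributions = score_lead(
    {"pricing_page_views": 2, "branded_search": 1, "phone_valid": 1}
)
print(f"p(convert) = {probability:.2f}")
for name, value in sorted(contributions.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {value:+.1f} log-odds")
```

Because each contribution is simply weight times value, a sales manager can read off why a lead scored the way it did. Tree ensembles do not offer that breakdown without additional tooling.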

Training and Validation

Model training follows established machine learning practices with considerations specific to lead scoring.

Data splitting separates historical leads into training sets (typically 70-80% of data) used to build the model and validation sets (20-30%) used to evaluate performance on data the model has not seen. This separation prevents the model from memorizing training examples rather than learning generalizable patterns.

For lead scoring specifically, temporal validation often matters more than random splitting. Leads from January through September might train the model, with October through December reserved for validation. This approach tests whether patterns discovered in historical data predict future behavior rather than just interpolating within the training period.
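A temporal split of this kind might look like the following sketch. The record layout (`created`, `converted`) and the cutoff date are assumptions for the example.

```python
from datetime import date


def temporal_split(leads: list, cutoff: date) -> tuple:
    """Train on leads created before the cutoff; validate on leads created on or after it."""
    train = [lead for lead in leads if lead["created"] < cutoff]
    validation = [lead for lead in leads if lead["created"] >= cutoff]
    return train, validation


# Hypothetical records: one lead per month of a single year.
leads = [
    {"id": month, "created": date(2024, month, 15), "converted": month % 4 == 0}
    for month in range(1, 13)
]

# January through September trains the model; October through December validates it.
train, validation = temporal_split(leads, cutoff=date(2024, 10, 1))
print(len(train), "training leads;", len(validation), "validation leads")
```

Unlike a random split, no validation lead predates any training lead, so the evaluation mimics scoring genuinely new leads.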

Class imbalance presents challenges in lead scoring because conversion rates are typically low. If only 5% of leads convert, a model that predicts “will not convert” for every lead achieves 95% accuracy while being completely useless. Techniques like oversampling the minority class, undersampling the majority class, or adjusting class weights during training address this imbalance.
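The sketch below reproduces the 95% accuracy trap on hypothetical labels and then computes class weights with the common "balanced" heuristic, n_samples / (n_classes * class_count). The data and function name are illustrative assumptions.

```python
from collections import Counter

# Hypothetical labels: 5% of 1,000 leads converted (1), the rest did not (0).
labels = [1] * 50 + [0] * 950

# A useless model that always predicts "will not convert".
always_negative = [0] * len(labels)
accuracy = sum(p == y for p, y in zip(always_negative, labels)) / len(labels)
converters_found = sum(p == 1 and y == 1 for p, y in zip(always_negative, labels))


def balanced_class_weights(y: list) -> dict:
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(y)
    return {cls: len(y) / (len(counts) * n) for cls, n in counts.items()}


weights = balanced_class_weights(labels)
print(f"accuracy={accuracy:.0%}, converters found={converters_found}")
print(weights)
```

With these weights, each misclassified converter costs the model about 19 times as much as a misclassified non-converter during training, which counteracts the pull toward always predicting the majority class.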

Performance metrics must align with operational goals. Accuracy alone misleads when classes are imbalanced. More relevant metrics include:

- Precision: Of leads the model identifies as high-quality, what percentage actually convert?

- Recall: Of leads that actually convert, what percentage did the model identify as high-quality?

- AUC-ROC: Overall model discrimination ability, measuring how well the model separates converters from non-converters regardless of threshold

- Lift: How much better does model-guided prioritization perform compared to random selection?

A model achieving 3x lift in the top decile, meaning the top 10% of scored leads convert at 3x the baseline rate, provides substantial operational value even if absolute precision remains modest.
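The following sketch computes precision, recall, and top-decile lift directly from scores and outcomes. The 20 scored leads are made-up data, and the 0.70 threshold is an arbitrary choice for the example.

```python
def precision_recall(scores: list, outcomes: list, threshold: float) -> tuple:
    """Precision and recall when leads scoring at or above threshold are flagged high-quality."""
    flagged = [y for s, y in zip(scores, outcomes) if s >= threshold]
    true_positives = sum(flagged)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / sum(outcomes) if sum(outcomes) else 0.0
    return precision, recall


def top_decile_lift(scores: list, outcomes: list) -> float:
    """Conversion rate of the top 10% of scored leads divided by the overall rate."""
    ranked = [y for _, y in sorted(zip(scores, outcomes), key=lambda pair: -pair[0])]
    top = ranked[: max(1, len(ranked) // 10)]
    return (sum(top) / len(top)) / (sum(outcomes) / len(outcomes))


# Hypothetical scores for 20 leads, 4 of which converted (a 20% baseline).
scores = [0.95, 0.90, 0.80, 0.75, 0.70, 0.60, 0.55, 0.50, 0.45, 0.40,
          0.35, 0.30, 0.28, 0.25, 0.20, 0.15, 0.12, 0.10, 0.05, 0.02]
outcomes = [1, 1, 0, 1, 0, 0, 0, 0, 1, 0,
            0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

precision, recall = precision_recall(scores, outcomes, threshold=0.70)
lift = top_decile_lift(scores, outcomes)
print(f"precision={precision:.2f} recall={recall:.2f} top-decile lift={lift:.1f}x")
```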

Data Requirements and Integration

Effective lead scoring depends entirely on data quality. The most sophisticated algorithms produce garbage when fed garbage. Understanding what data matters and how to integrate it determines whether implementation succeeds or fails.

Essential Data Categories

Lead attributes form the foundation: contact information, company details, demographic data, and any information collected through forms or enrichment. The challenge is not collecting this data but normalizing it for model consumption. “Enterprise” means different things to different organizations. Job titles follow no standard taxonomy. Industry classifications vary by data source.

Standardization protocols must convert messy real-world data into consistent model inputs. Map job titles to seniority categories. Normalize company size to defined ranges. Convert industries to standardized classification codes. This preprocessing work often requires more effort than model development itself.
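A minimal sketch of such standardization rules follows. The keyword patterns, seniority categories, and employee-count bands are hypothetical; a production taxonomy would be considerably larger and tuned to your own data.

```python
import re

# Hypothetical keyword-to-seniority rules, checked in order.
SENIORITY_RULES = [
    (r"\b(chief|ceo|cfo|cto|coo|founder|owner)\b|(?<!vice )\bpresident\b", "executive"),
    (r"\b(vp|vice president|head|director)\b", "director_plus"),
    (r"\b(manager|lead|supervisor)\b", "manager"),
]

# Hypothetical employee-count bands: (inclusive upper bound, label).
SIZE_BANDS = [(50, "small"), (1000, "mid_market"), (float("inf"), "enterprise")]


def seniority(job_title: str) -> str:
    """Map a free-text job title to a seniority category."""
    title = job_title.lower()
    for pattern, level in SENIORITY_RULES:
        if re.search(pattern, title):
            return level
    return "individual_contributor"


def size_band(employee_count: int) -> str:
    """Map a raw employee count to a defined size range."""
    for upper_bound, label in SIZE_BANDS:
        if employee_count <= upper_bound:
            return label
    return "unknown"


for title in ["Chief Technology Officer", "Vice President, Marketing", "Sr. Marketing Manager"]:
    print(title, "->", seniority(title))
print(size_band(12), size_band(1000), size_band(25000))
```

Rule order matters here: the lookbehind keeps "Vice President" out of the executive bucket. Edge cases like that are why this preprocessing tends to absorb more effort than expected.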

Behavioral data tracks interactions across your digital ecosystem. Website analytics capture page views, session duration, and navigation patterns. Marketing automation platforms track email engagement, content downloads, and campaign responses. CRM systems record sales interactions, meeting outcomes, and communication history.

Integrating behavioral data requires technical infrastructure connecting disparate systems. Customer data platforms (CDPs) increasingly serve this integration function, unifying identity across touchpoints and providing consolidated behavioral profiles for scoring models.

Validation data from external services adds quality signals often more predictive than self-reported information. Phone validation reveals line type, carrier, and connection status. Email validation indicates deliverability and domain reputation. Address verification confirms location accuracy. Fraud scores identify suspicious submission patterns. Our guide to lead validation across phone, email, and address covers these verification techniques in detail.

These validation signals correlate strongly with conversion outcomes. A lead with a disconnected phone number will never convert through phone-based sales regardless of how qualified they appear on demographic dimensions. Incorporating validation results dramatically improves model performance.

Third-party enrichment appends additional context unavailable from direct interactions. Firmographic data providers add company details, technology usage, and organizational characteristics. Intent data vendors identify accounts showing buying signals across the web. Contact data services enhance thin profiles with additional attributes.

Enrichment addresses the “partial form” problem where leads provide minimal information during initial capture. A lead who submits only email address can be enriched with company, title, industry, and estimated revenue, transforming a nearly-useless record into a scorable profile.

Integration Architecture

Data integration for lead scoring follows one of several architectural patterns.

Batch processing extracts data from source systems periodically (nightly or hourly), transforms it into analytical format, and loads it into a data warehouse or scoring platform. This approach works for operations where real-time scoring is not critical and where leads can wait hours for scores. The advantage is simpler integration; the disadvantage is scoring latency.

Real-time streaming scores leads at the moment of submission, enabling immediate prioritization and routing decisions. This requires event-driven architecture where form submissions trigger scoring workflows that complete within seconds. For operations where speed-to-lead matters, real-time scoring is essential. The implementation complexity and infrastructure costs are substantially higher than batch processing.

Hybrid approaches score leads in real-time based on immediately available data, then re-score periodically as additional data accumulates. An initial score based on form data and immediate validation might update 24 hours later incorporating behavioral data from subsequent site visits. This pattern captures the urgency benefits of real-time scoring while improving accuracy over time.

Integration with CRM systems requires bidirectional data flow. Scores must push to CRM records where sales teams can act on them. Outcome data must flow back to scoring systems for model retraining. This bidirectional integration often proves the most challenging technical component, particularly with legacy CRM implementations.

Data Quality Governance

The adage “garbage in, garbage out” applies with particular force to machine learning systems. Data quality issues tolerable in traditional reporting become model-breaking in predictive applications.

Completeness measures whether required fields are populated. Missing values must be handled systematically through imputation, exclusion, or special encoding.

Accuracy measures whether field values represent reality. Email addresses that pass format validation might be undeliverable. Phone numbers might belong to the wrong person. Validation against external sources provides accuracy checks.

Consistency measures whether the same reality appears identically across records. “IBM” and “International Business Machines” should resolve to a single entity. Without normalization, models treat these as distinct companies.

Timeliness measures whether data arrives when needed. Behavioral data arriving 48 hours after lead submission cannot inform real-time scoring.
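Two of these checks, completeness and consistency, are straightforward to sketch. The required-field list, the alias table, and the sample records below are assumptions for illustration; real entity resolution usually relies on a maintained reference list rather than a hand-written dictionary.

```python
import re

REQUIRED_FIELDS = ["email", "phone", "company"]  # hypothetical required fields

# Hypothetical alias table mapping cleaned names to one canonical entity.
COMPANY_ALIASES = {"international business machines": "ibm"}


def completeness(records: list) -> dict:
    """Share of records with a non-empty value for each required field."""
    return {
        field: sum(1 for r in records if r.get(field)) / len(records)
        for field in REQUIRED_FIELDS
    }


def canonical_company(name: str) -> str:
    """Lowercase, strip punctuation and common legal suffixes, then apply known aliases."""
    cleaned = re.sub(r"[^\w\s]", "", name.lower())
    cleaned = re.sub(r"\b(inc|corp|corporation|llc|ltd)\b", "", cleaned)
    cleaned = " ".join(cleaned.split())
    return COMPANY_ALIASES.get(cleaned, cleaned)


records = [
    {"email": "a@example.com", "phone": "555-0100", "company": "IBM"},
    {"email": "b@example.com", "phone": "", "company": "International Business Machines Corp."},
    {"email": "c@example.com", "phone": None, "company": "I.B.M."},
    {"email": "", "phone": "555-0101", "company": "Acme, Inc."},
]

print(completeness(records))
print({canonical_company(r["company"]) for r in records})
```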

Organizations attempting sophisticated scoring without quality foundations consistently fail, blaming technology when the problem was always data.

Building Your Scoring Model: Step by Step

Implementation follows a structured process from data preparation through production deployment. Each phase has specific deliverables and common failure modes worth understanding in advance.

Phase 1: Discovery and Data Audit

Before writing any code, audit available data sources and define project scope.

Inventory existing systems containing lead and customer data: CRM platforms, marketing automation tools, website analytics, call tracking systems. Document what data each contains and what integration challenges exist.

Define the target outcome precisely. What does “conversion” mean? At what pipeline stage do you need prediction? A model predicting qualified opportunities within 30 days differs fundamentally from one predicting eventual customer lifetime value.

Assess data sufficiency against minimum requirements. How many historical leads exist with documented outcomes? Are there sufficient examples of both positive and negative outcomes for model training?

Identify data gaps that might limit model performance. If you lack behavioral tracking, models will rely entirely on demographic features. Understanding these gaps upfront helps set realistic expectations.

This discovery phase typically requires 2-4 weeks and often reveals that data preparation will consume more effort than model development itself.

Phase 2: Data Preparation

Transform raw data into model-ready format through systematic preprocessing.

Extract data from source systems into a unified analytical environment. Data warehouses (Snowflake, BigQuery, Redshift) or specialized ML platforms provide appropriate infrastructure. The extraction must be reproducible, documented, and scheduled for periodic updates.

Clean and normalize raw data to consistent formats. Deduplicate records that might represent the same lead across systems. Standardize categorical values to consistent taxonomies. Handle missing values through imputation, exclusion, or special encoding. Convert timestamps to consistent time zones.

Engineer features from raw inputs. Create derived variables that capture patterns invisible in raw data. Time-based features, interaction combinations, rolling aggregates, and category encodings all transform data into more predictive formats. Feature engineering is often the highest-leverage activity in the entire process. A well-engineered feature set frequently matters more than algorithm selection.

Create training datasets with appropriate outcome labels. Join lead attributes to conversion outcomes. Handle the time dimension carefully: features should only include information available at the time of scoring, not future information that would not exist in production use.
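One way to enforce that time boundary is to compute every feature "as of" the scoring timestamp, as in the sketch below. The event schema and feature names are hypothetical.

```python
from datetime import datetime


def features_as_of(events: list, scored_at: datetime) -> dict:
    """Aggregate only events that had already happened when the lead was scored."""
    visible = [e for e in events if e["at"] <= scored_at]
    return {
        "page_views": sum(1 for e in visible if e["type"] == "page_view"),
        "pricing_views": sum(1 for e in visible if e.get("page") == "/pricing"),
    }


# Hypothetical event log for one lead; the last two events happen after scoring.
events = [
    {"type": "page_view", "page": "/pricing", "at": datetime(2024, 5, 1, 9, 0)},
    {"type": "page_view", "page": "/guide", "at": datetime(2024, 5, 1, 9, 4)},
    {"type": "page_view", "page": "/pricing", "at": datetime(2024, 5, 3, 14, 0)},
    {"type": "demo_booked", "at": datetime(2024, 5, 4, 10, 0)},
]

form_submitted = datetime(2024, 5, 1, 9, 5)
print(features_as_of(events, scored_at=form_submitted))
```

Counting the later pricing-page visit or the booked demo would inflate offline performance, because neither exists when a new lead is scored in production.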

Data preparation typically consumes 40-60% of total project effort. Organizations consistently underestimate this phase, creating project overruns when preparation takes longer than naive estimates assumed.

Phase 3: Model Development

With clean, engineered data in hand, actual model development can proceed.

Establish baseline performance before building sophisticated models. What conversion rate does random lead selection achieve? What performance does a simple rule-based approach deliver? These baselines provide context for evaluating model improvements.

Split data appropriately for training and validation. Reserve holdout sets that the model never sees during development. Consider temporal splits that test prediction on future periods rather than interpolation within training periods.

Train candidate models using multiple algorithms. Logistic regression, random forest, and gradient boosting are standard candidates for lead scoring. Compare performance across algorithms to identify the best approach for your specific data characteristics.

Tune hyperparameters for best-performing models. Grid search or Bayesian optimization finds parameter combinations that maximize validation performance without overfitting to training data. This tuning typically improves performance by 5-15% over default parameters.

Evaluate on holdout data after tuning is complete. The holdout set provides unbiased performance estimates since it was never used for training or tuning decisions. If holdout performance differs substantially from validation performance, overfitting may have occurred.

Analyze feature importance to understand what drives predictions. Which inputs matter most? Do the important features make business sense, or might they reflect data leakage or spurious correlations? This analysis builds trust in model behavior and identifies potential issues.

Model development might take 2-6 weeks depending on data complexity and team experience. The process is inherently iterative; expect multiple cycles of feature engineering and model refinement before achieving satisfactory performance.

Phase 4: Production Deployment

Models only create value when deployed to production systems where they inform operational decisions.

Build a scoring pipeline that applies the model to new leads. Real-time scoring requires API infrastructure processing requests within latency constraints. Batch scoring requires scheduled jobs processing accumulated leads at regular intervals.

Integrate with operational systems where scores will be consumed. CRM systems need score fields populated automatically. Routing systems need score-based rules configured.

Establish monitoring for model performance in production. Track prediction accuracy by comparing scores to subsequent outcomes. Monitor for score distribution drift. Alert when performance degrades below acceptable thresholds.
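Score distribution drift can be tracked in several ways. The sketch below uses the Population Stability Index (PSI), which is one common choice rather than something this article prescribes. The bin count, the floor for empty bins, and the sample score lists are assumptions.

```python
import math


def population_stability_index(baseline: list, current: list, bins: int = 10) -> float:
    """PSI between two sets of scores in [0, 1], using equal-width bins."""

    def bin_shares(scores: list) -> list:
        counts = [0] * bins
        for s in scores:
            counts[min(int(s * bins), bins - 1)] += 1
        # Floor each share at a small value so empty bins do not produce log(0).
        return [max(c / len(scores), 1e-6) for c in counts]

    expected, actual = bin_shares(baseline), bin_shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical score samples: deployment-time scores versus a shifted recent batch.
baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [min(0.99, s + 0.3) for s in baseline_scores]

print(f"no drift: {population_stability_index(baseline_scores, baseline_scores):.3f}")
print(f"shifted:  {population_stability_index(baseline_scores, shifted_scores):.3f}")
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating. Treat those cutoffs as conventions to calibrate against your own data, not guarantees.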

Deployment typically requires 2-4 weeks of engineering and configuration work, plus ongoing operational overhead.

Phase 5: Continuous Improvement

Initial deployment is the beginning, not the end. Models require ongoing attention.

Retrain periodically as new outcome data accumulates. Monthly or quarterly retraining cycles are typical, though optimal frequency depends on how quickly your market changes.

Incorporate new features as additional data sources become available. Intent data, additional behavioral tracking, or enrichment services all potentially improve model performance.

Monitor for degradation and investigate performance changes. Model performance naturally degrades as the world changes. Prompt detection prevents accuracy decay from compounding.

Continuous improvement transforms scoring from a project into a capability. Organizations neglecting maintenance find initial benefits erode within 6-12 months.

Implementation Options: Build vs. Buy

Organizations pursuing AI lead scoring face a fundamental choice between building custom solutions and adopting vendor platforms. Neither approach is universally superior; the right choice depends on organizational context.

Building In-House

Custom development makes sense when you have specialized requirements that vendor platforms cannot accommodate, data science talent available to build and maintain systems, competitive advantage from proprietary scoring methodology, and sufficient scale to justify infrastructure investment.

The advantages of custom development include complete control over model architecture and features, deep integration with existing systems, proprietary methodology that competitors cannot replicate, and no ongoing vendor licensing costs.

The disadvantages are substantial: significant upfront investment (typically $200,000-500,000 for initial development), ongoing data science staffing requirements ($150,000-300,000 annually for qualified resources), infrastructure costs for model training and serving, and slower time-to-value compared to platform adoption.

Custom development is rarely appropriate for organizations processing fewer than 50,000 leads annually. The investment simply does not pay back at smaller scale.

Vendor Platforms

Specialized lead scoring vendors offer pre-built platforms that accelerate deployment while reducing technical complexity. These platforms are appropriate when you need faster time-to-value, lack dedicated data science resources, prefer predictable subscription costs over variable development costs, or need proven methodology without experimentation risk.

Leading platforms in the space include Salesforce Einstein, HubSpot Predictive Lead Scoring, Marketo, 6sense, Demandbase, and specialized providers like Infer, MadKudu, and Lattice Engines (now part of Dun & Bradstreet).

Platform advantages include rapid deployment (weeks rather than months), vendor-maintained models with automatic updates, proven methodology across many implementations, and predictable subscription pricing.

Platform disadvantages include limited customization for specialized requirements, dependency on vendor roadmap and viability, potential integration challenges with existing systems, and ongoing licensing costs that accumulate over time.

For most mid-market organizations processing 10,000-100,000 leads annually, vendor platforms provide the better path. The economics favor proven platforms over speculative custom development. Our guide on AI-powered lead scoring with predictive models provides deeper technical coverage of model architectures.

Hybrid Approaches

Some organizations adopt hybrid approaches: vendor platforms for foundation scoring while building custom models for specific high-value use cases. This works well when organizations have some data science capability but not enough for comprehensive custom development.

Realistic Expectations and Common Pitfalls

Implementing AI lead scoring delivers substantial benefits, but those benefits materialize only when organizations approach implementation realistically. Many deployments fail not from technical problems but from unrealistic expectations or organizational barriers.

What AI Scoring Can and Cannot Do

AI scoring can predict conversion probability more accurately than human intuition or rule-based systems. It can identify patterns in data that humans would never discover. It can enable consistent, scalable prioritization that does not degrade when volume increases or personnel changes.

AI scoring cannot fix fundamental lead quality problems. If your leads are systematically unqualified, scoring will predict their low conversion probability accurately but cannot transform them into better leads. Scoring optimizes prioritization of the leads you have; it does not improve the leads themselves. For context on what constitutes quality, see our guide to understanding lead quality scores.

AI scoring cannot compensate for data deficiencies. If you lack behavioral tracking, models cannot learn behavioral patterns. If outcome data is incomplete or inaccurate, models train on wrong signals. The technology amplifies existing data assets; it does not create them.

AI scoring cannot replace sales judgment entirely. Edge cases, relationship factors, and contextual knowledge that sales representatives possess should complement model predictions rather than being overridden by them. The most effective implementations use scores to inform human decisions rather than automate them completely.

Common Implementation Failures

Insufficient outcome data undermines many projects. Organizations attempt sophisticated modeling with a few hundred or a couple of thousand training examples when the practical minimum is 5,000-10,000 and tens of thousands are preferable. The resulting models overfit to noise rather than learning genuine patterns.

Data leakage occurs when training data includes information that would not be available at scoring time. A model trained with outcome data available as features will appear to perform brilliantly during development but fail completely in production where outcomes are not yet known.

Ignoring drift causes gradual performance degradation. Market conditions change, lead sources evolve, and buyer behavior shifts. Models trained on historical patterns become decreasingly relevant over time. Organizations that deploy models without monitoring and retraining find that initial benefits evaporate within months.

Organizational resistance prevents adoption even when models perform well. Sales teams that distrust scores will ignore them or actively subvert recommended prioritization. Successful implementation requires change management that builds trust and demonstrates value, not just technical deployment.

Over-optimization on intermediate metrics creates models that perform well on accuracy measures but fail to improve business outcomes. A model might achieve high AUC while providing minimal lift in actual conversion rates. Evaluation metrics must connect to operational impact, not just statistical performance.

Measuring Success

Define success criteria before implementation begins. What improvement in conversion rate justifies the investment? What change in sales efficiency demonstrates value? What reduction in wasted effort on low-probability leads counts as success?

Track multiple metrics that together paint a complete picture:

- Conversion rate improvement, comparing scored versus unscored lead handling

- Speed-to-lead improvement for high-scored leads

- Sales cycle reduction for opportunities originating from high-scored leads

- Revenue per lead, compared across score bands

- Sales team adoption, measured by actual usage of scores in the workflow
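Two of the metrics above, conversion rate and revenue per lead by score band, can be computed from a simple export of scored leads. The sketch below assumes a hypothetical three-band split and invented records; the band thresholds are illustrative only.

```python
from collections import defaultdict

def band_for(score):
    """Hypothetical three-band split; thresholds are illustrative only."""
    if score >= 0.6:
        return "high"
    if score >= 0.3:
        return "medium"
    return "low"

def metrics_by_band(leads):
    """Return conversion rate and revenue per lead for each score band."""
    totals = defaultdict(lambda: {"leads": 0, "conversions": 0, "revenue": 0.0})
    for lead in leads:
        t = totals[band_for(lead["score"])]
        t["leads"] += 1
        t["conversions"] += lead["converted"]
        t["revenue"] += lead["revenue"]
    return {
        band: {
            "conversion_rate": t["conversions"] / t["leads"],
            "revenue_per_lead": t["revenue"] / t["leads"],
        }
        for band, t in totals.items()
    }

# Invented sample records for illustration.
leads = [
    {"score": 0.80, "converted": 1, "revenue": 1000.0},
    {"score": 0.70, "converted": 0, "revenue": 0.0},
    {"score": 0.40, "converted": 1, "revenue": 500.0},
    {"score": 0.35, "converted": 0, "revenue": 0.0},
    {"score": 0.33, "converted": 0, "revenue": 0.0},
    {"score": 0.31, "converted": 0, "revenue": 0.0},
    {"score": 0.20, "converted": 0, "revenue": 0.0},
    {"score": 0.10, "converted": 0, "revenue": 0.0},
]
report = metrics_by_band(leads)
print(report)
```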

Give implementation sufficient time to demonstrate impact. Lead scoring benefits compound over retraining cycles; initial deployment rarely shows full potential. Evaluate after 6-12 months of operation, not after 6-12 weeks.

Integration with Lead Distribution

For organizations operating in the lead generation and distribution ecosystem, scoring integration with distribution platforms creates additional value beyond internal prioritization.

Scoring for Routing Decisions

Lead distribution platforms route leads to buyers based on match criteria and pricing. Traditional routing considers stated buyer preferences and delivers leads meeting those specifications. Score-enhanced routing adds predicted conversion probability to the matching equation.

A lead matching Buyer A’s criteria with 15% predicted conversion probability might route instead to Buyer B, who accepts broader criteria but achieves higher conversion on leads similar to this one. The scoring system knows from historical patterns that Buyer B will convert this particular lead at 25%, making them the better match despite less obvious demographic fit.

This scoring-informed routing optimizes outcomes across the distribution network rather than just matching leads to stated filters.
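The Buyer A versus Buyer B example can be sketched as a simple selection over per-buyer predictions. The `route_lead` helper and buyer identifiers are hypothetical, and a real platform would likely also weigh price, caps, and exclusivity, which this sketch ignores.

```python
def route_lead(predicted_conversion, eligible_buyers):
    """Pick the eligible buyer with the highest predicted conversion for this lead."""
    candidates = {
        buyer: probability
        for buyer, probability in predicted_conversion.items()
        if buyer in eligible_buyers
    }
    if not candidates:
        return None  # no buyer's criteria match this lead
    return max(candidates, key=candidates.get)

# Predicted conversion for one lead, per buyer (figures from the example above).
predictions = {"buyer_a": 0.15, "buyer_b": 0.25}

chosen = route_lead(predictions, eligible_buyers={"buyer_a", "buyer_b"})
only_a = route_lead(predictions, eligible_buyers={"buyer_a"})
print(chosen, only_a)
```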

Premium Tier Pricing

Scores enable premium tier structures where higher-probability leads command higher prices. Buyers willing to pay a premium for leads with documented higher conversion probability get priority access to top-scored leads. Budget-conscious buyers access lower-scored leads at reduced prices.

This tiered structure aligns incentives across the marketplace. Lead generators earn more for producing higher-quality leads. Buyers pay prices proportional to expected value. The marketplace as a whole operates more efficiently than flat-pricing alternatives.

Implementing premium tiers requires transparency about scoring methodology and historical score-to-outcome correlation. Buyers will not pay premiums based on claims; they need evidence that high scores actually predict high conversion in their specific context.
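As a rough illustration of pricing proportional to expected value, the sketch below prices each tier at a share of its historical conversion rate times the buyer's value per conversion. The tier names, conversion rates, value per conversion, and the 50% buyer margin are all invented assumptions, not market figures.

```python
def tier_price(conversion_rate, value_per_conversion, buyer_margin=0.5):
    """Price a lead at a share of its expected value to the buyer (illustrative rule)."""
    expected_value = conversion_rate * value_per_conversion
    return round(expected_value * (1 - buyer_margin), 2)

# Hypothetical observed conversion rates per tier, from historical dispositions.
observed_conversion = {"premium": 0.25, "standard": 0.10, "budget": 0.04}
value_per_conversion = 400.0  # assumed value of one converted lead to the buyer

prices = {
    tier: tier_price(rate, value_per_conversion)
    for tier, rate in observed_conversion.items()
}
print(prices)
```

Publishing the observed conversion rate next to each tier price is one way to give buyers the score-to-outcome evidence they need.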

Feedback Loops

Distribution systems collecting disposition data from buyers create feedback loops that improve scoring over time. When Buyer A reports a lead converted, that outcome feeds back to update the model. When Buyer B returns a lead as unqualified, that negative signal also contributes.

These feedback loops require technical integration between distribution and scoring systems. Models improve substantially with diverse outcome data from across the buyer network.
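At its simplest, the feedback loop is a step that folds buyer-reported dispositions into the labels used for the next retraining run. This is a sketch under assumed names; the disposition status strings and lead identifiers are hypothetical.

```python
def apply_dispositions(training_labels, dispositions):
    """Fold buyer-reported outcomes into the label set used for the next retraining."""
    label_for = {"converted": 1, "returned_unqualified": 0}  # assumed status names
    updated = dict(training_labels)  # leave the original labels untouched
    for lead_id, status in dispositions:
        if status in label_for:  # skip statuses that are not final outcomes
            updated[lead_id] = label_for[status]
    return updated

existing_labels = {"lead-001": 0}
buyer_reports = [
    ("lead-002", "converted"),             # e.g. reported by Buyer A
    ("lead-003", "returned_unqualified"),  # e.g. returned by Buyer B
    ("lead-004", "contact_attempted"),     # not final, so ignored
]
labels = apply_dispositions(existing_labels, buyer_reports)
print(labels)
```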

The Future of AI Lead Scoring

Several developments will reshape scoring capabilities over the next 2-5 years.

Intent data integration increasingly combines third-party signals (accounts researching competitors across multiple publications) with first-party behavioral data. A lead from an account showing active category research carries a different conversion probability than one from an account with no external intent signals.

Natural language processing extracts predictive signals from unstructured data: form comments, chat transcripts, email content, and call recordings. A lead who writes “urgent need before Q2 budget close” signals differently than “just exploring options.” NLP captures these distinctions at scale.

Multi-touch attribution integration will incorporate account journey context into individual scoring. A lead who is the third touch from an account with two previous engaged contacts scores differently than a first-touch lead from an unknown account.

Explainable AI addresses growing demands for model transparency. Techniques like SHAP values provide post-hoc explanations of individual predictions, enabling sales representatives to understand the basis for a score and identify relevant talking points. Explainability also supports regulatory compliance in contexts where automated decisioning faces disclosure requirements.
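For a linear model such as logistic regression, a per-lead explanation can be sketched without any library: each feature's contribution to the log-odds is its weight times the lead's deviation from the average lead. Under an assumption of independent features this matches what SHAP reports for linear models. The weights, means, and feature names below are invented for illustration.

```python
def linear_contributions(weights, feature_means, lead):
    """Per-feature contribution to the log-odds score, relative to an average lead."""
    return {
        name: weights[name] * (lead[name] - feature_means[name])
        for name in weights
    }

# Hypothetical fitted weights and training-set feature means.
weights = {"pricing_page_minutes": 0.30, "email_opens": 0.10, "company_size_log": 0.50}
means = {"pricing_page_minutes": 2.0, "email_opens": 3.0, "company_size_log": 4.0}
lead = {"pricing_page_minutes": 12.0, "email_opens": 1.0, "company_size_log": 4.0}

contributions = linear_contributions(weights, means, lead)
# The feature with the largest absolute contribution is the main score driver.
top_reason = max(contributions, key=lambda name: abs(contributions[name]))
print(top_reason, contributions)
```

A representative seeing that pricing-page time drives the score has an obvious opening for the first call.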

Key Takeaways

- AI lead scoring enables 15-40% conversion rate improvements by identifying patterns that human intuition misses and enabling systematic prioritization that scales without degradation.

- Data is everything. Model sophistication matters less than data quality and quantity. Organizations with 50,000 or more leads with complete outcome tracking can build powerful scoring systems. Organizations with sparse, incomplete data will struggle regardless of algorithm choice.

- Start simple, iterate continuously. Logistic regression with well-engineered features often outperforms complex neural networks with raw data. Begin with interpretable approaches, establish baselines, then add complexity only when simpler methods reach their limits.

- Build vs. buy depends on scale and resources. Custom development makes sense for organizations with dedicated data science resources processing 50,000 or more leads annually. Vendor platforms serve most mid-market organizations more efficiently.

- Integration matters as much as modeling. A brilliant model that does not connect to operational systems creates no value. Plan integration from the start, including CRM score propagation, sales workflow incorporation, and outcome feedback collection.

- Maintenance is not optional. Models degrade without retraining. Market changes invalidate historical patterns. Organizations must commit to ongoing monitoring and periodic retraining as a permanent operational function, not a project that ends at deployment.

- Measure business outcomes, not just model metrics. High AUC scores mean nothing if conversion rates do not improve. Define success in operational terms and evaluate against those criteria throughout implementation.

- Feature engineering often delivers more value than algorithm selection. Invest time in deriving behavioral, temporal, and engagement features from raw data before experimenting with complex model architectures.

Frequently Asked Questions

How many leads do I need before AI lead scoring makes sense?

A minimum of 5,000-10,000 leads with documented conversion outcomes provides the training data foundation for meaningful models. Below this threshold, sample sizes are insufficient for reliable pattern detection, and models tend to overfit to noise rather than learn generalizable signals. Operations processing 1,000-5,000 leads monthly typically accumulate sufficient data within 6-12 months of systematic outcome tracking. If you are not currently tracking outcomes, start immediately; the data collection period is often the binding constraint on implementation timeline.

What conversion rate improvement should I expect from implementing AI scoring?

Organizations implementing predictive scoring typically see 15-40% improvement in conversion rates for prioritized leads, with the range depending on baseline sophistication and data quality. Operations that previously used no scoring or only basic demographic rules tend toward the higher end of improvement. Operations that already had sophisticated rule-based scoring see more modest gains. The improvement compounds with retraining: expect 20-30% additional improvement between initial deployment and a mature system after 6-12 months of continuous learning.

How long does implementation take from start to production?

Typical implementation timelines range from 8-16 weeks for vendor platform adoption to 4-8 months for custom development. Vendor platforms require data integration, configuration, and validation but skip the model development phase. Custom development adds data preparation, feature engineering, model training, and validation cycles that extend the timeline substantially. The most common surprise is data preparation duration: organizations consistently underestimate how long it takes to clean, normalize, and engineer features from messy real-world data.

Should I build scoring in-house or buy a vendor platform?

The decision depends on scale, resources, and competitive considerations. Vendor platforms make sense for organizations processing 10,000-100,000 leads annually without dedicated data science resources. These platforms offer faster time-to-value and proven methodology at predictable subscription costs. Custom development makes sense for organizations processing 50,000 or more leads annually with data science talent available, particularly when proprietary scoring methodology provides competitive differentiation. For many mid-market organizations, the practical answer is vendor platforms; the investment in custom development rarely justifies the effort at smaller scale.

How does AI scoring work with my existing CRM and marketing automation?

Integration typically works through API connections or native platform capabilities. Major CRM platforms (Salesforce, HubSpot, Microsoft Dynamics) have established integration patterns for receiving scores from external systems or generating scores through built-in AI features. Scores populate standard fields or custom objects in your CRM, where they inform lead routing, sales workflow prioritization, and reporting. The technical integration is usually straightforward; the operational challenge is ensuring sales teams actually use the scores in their workflow rather than reverting to intuition-based prioritization.

What happens when the model makes wrong predictions?

All prediction models make errors; the goal is minimizing error rates, not eliminating them. High-scored leads will sometimes fail to convert, and low-scored leads will sometimes become valuable customers. The key is calibration: if your model says a lead has 30% conversion probability, roughly 30% of leads with that score should actually convert over a large sample. Well-calibrated models make reliable predictions in aggregate even when individual predictions miss. When models systematically over-predict or under-predict, retraining with updated data typically corrects the miscalibration.
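A calibration check can be sketched by grouping leads into probability bins and comparing the mean predicted probability with the observed conversion rate in each bin. The data below is invented so that the model is perfectly calibrated; real tables will show gaps.

```python
def calibration_table(probabilities, outcomes, n_bins=5):
    """Compare mean predicted probability with observed conversion rate per bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probabilities, outcomes):
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[index].append((p, y))
    rows = []
    for members in bins:
        if not members:
            continue  # skip bins with no leads
        predicted = sum(p for p, _ in members) / len(members)
        observed = sum(y for _, y in members) / len(members)
        rows.append((round(predicted, 3), round(observed, 3), len(members)))
    return rows

# Ten leads scored 0.3 (three converted) and ten scored 0.7 (seven converted).
probabilities = [0.3] * 10 + [0.7] * 10
outcomes = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0] + [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]

rows = calibration_table(probabilities, outcomes)
for predicted, observed, count in rows:
    print(predicted, observed, count)
```

Each row reads as (mean predicted probability, observed conversion rate, number of leads); a large gap between the first two values signals miscalibration in that score range.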

How often should I retrain my scoring models?

Monthly or quarterly retraining cycles are typical, though optimal frequency depends on how quickly your market and lead characteristics change. Faster-changing environments benefit from more frequent retraining. Stable environments can operate effectively with quarterly cycles. The more important discipline is monitoring model performance continuously and triggering retraining when performance degrades below acceptable thresholds, regardless of scheduled cycle. Production monitoring should track prediction accuracy by comparing scores to subsequent outcomes and alert when accuracy deteriorates.
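A performance-triggered retraining check might look like the following sketch, which compares the Brier score on recently resolved leads against a baseline from validation. The baseline value and tolerance are assumptions to be set per operation, and Brier score is only one of several reasonable monitoring metrics.

```python
def brier_score(probabilities, outcomes):
    """Mean squared gap between predicted probability and the 0/1 outcome (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probabilities, outcomes)) / len(outcomes)

def needs_retraining(baseline_brier, recent_probs, recent_outcomes, tolerance=0.02):
    """Flag retraining when recent error exceeds the validation baseline by the tolerance."""
    return brier_score(recent_probs, recent_outcomes) > baseline_brier + tolerance

baseline = 0.10  # assumed Brier score measured at validation time

recent_probs = [0.9, 0.8, 0.2, 0.1]
healthy_outcomes = [1, 1, 0, 0]   # predictions still line up with outcomes
degraded_outcomes = [0, 1, 0, 1]  # confident predictions are now missing

degraded_brier = brier_score(recent_probs, degraded_outcomes)
alert_healthy = needs_retraining(baseline, recent_probs, healthy_outcomes)
alert_degraded = needs_retraining(baseline, recent_probs, degraded_outcomes)
print(degraded_brier, alert_healthy, alert_degraded)
```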

Can AI scoring help with leads that provide minimal information?

Yes, through third-party data enrichment that appends additional attributes to sparse leads. A lead who provides only an email address can be enriched with company, title, industry, and firmographic details through data providers. This enrichment transforms nearly useless sparse records into profiles with sufficient features for meaningful scoring. The enrichment cost (typically $0.10-$0.50 per lead) almost always pays for itself through improved prioritization of enriched leads.

How does integrating intent data improve scoring?

Third-party intent data identifies accounts showing buying signals across the web, such as researching competitors, reading category content, or downloading relevant materials from other publishers. Integrating intent signals with first-party behavioral data typically improves scoring accuracy by 15-25% because the model sees buying readiness signals that would otherwise be invisible. Intent data integration requires vendors who collect cross-site signals and technical infrastructure to match leads against intent databases. The commercial and technical infrastructure for intent integration has matured substantially and is now accessible to mid-market organizations, not just enterprises.

How do I get my sales team to actually use lead scores?

Adoption requires demonstrating value and building trust. Start by showing historical analysis: what conversion rates did high-scored leads achieve compared to low-scored leads? If the data supports differentiated outcomes, share that evidence widely. Then make scores visible and actionable in the tools sales representatives already use rather than requiring them to access separate systems. Finally, track adoption metrics and celebrate wins when high-scored leads convert quickly. Resistance typically melts when representatives see that following scores actually makes their numbers easier to hit. Forcing adoption through mandate without building trust tends to generate workarounds rather than genuine usage.

This article draws on research and operational experience in lead generation and distribution. Statistics and platform information current as of late 2025.