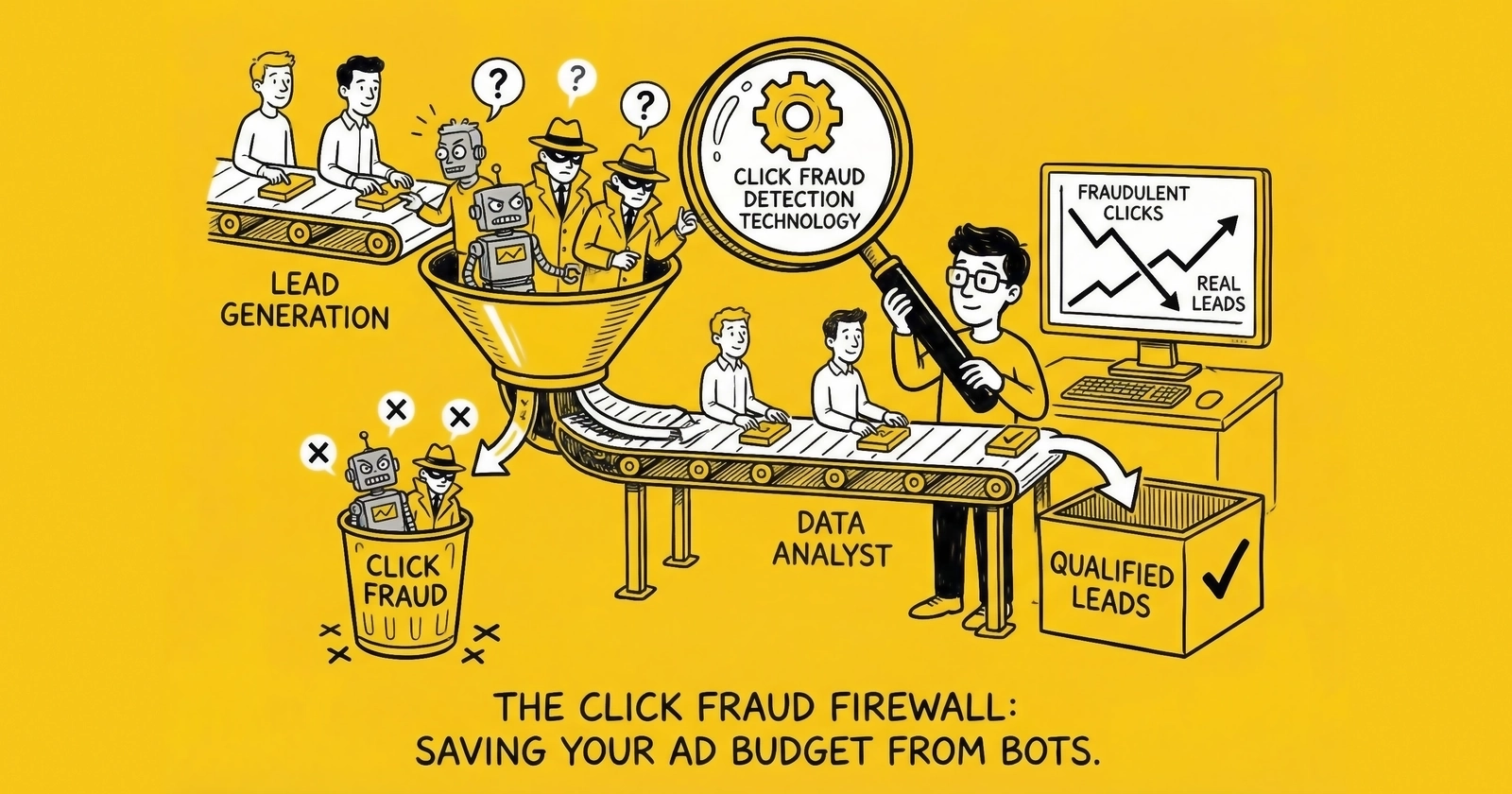

A deep dive into the detection systems, machine learning models, and technical architecture that separate profitable lead generation operations from those bleeding budget to fraudsters. Learn exactly how modern detection technology works – and how to implement it.

Every lead generation operation that runs paid traffic is engaged in an arms race it may not fully understand. On one side: your campaigns, designed to attract genuine consumers with purchase intent. On the other: an evolving ecosystem of bots, click farms, and sophisticated fraud operations designed to extract your ad budget without delivering any value.

The fraud side is winning more often than most practitioners realize. Industry research consistently shows that 14-25% of paid clicks are fraudulent, with lead generation verticals experiencing rates at the higher end of that range. When your cost per click runs $15-50 in competitive verticals like insurance, legal, and solar, that fraud rate means $7-12.50 of every $50 in ad spend goes directly to fraudsters. CPL benchmarks by industry help put that financial exposure in context.

But here is what separates successful operators from those slowly bleeding out: the technology they deploy to detect and prevent fraud before it destroys their margins. This guide examines that technology in detail – the detection methods, machine learning models, implementation patterns, and architectural decisions that determine whether you catch fraud at pennies per detection or pay for it at dollars per fraudulent lead.

The Technical Landscape of Click Fraud Detection

Click fraud detection has evolved from simple IP blocking to sophisticated multi-signal analysis powered by machine learning. Understanding this evolution helps you evaluate solutions and implement effective defenses.

First Generation: Rule-Based Detection

Early click fraud detection operated on explicit rules. If an IP address clicked more than three times in an hour, block it. If traffic came from a known datacenter range, reject it. If the user agent string matched a known bot signature, deny access.

Rule-based detection still forms the foundation of most prevention systems. These rules catch obvious fraud – and obvious fraud still accounts for a significant portion of invalid clicks. Google and Meta filter millions of basic bot clicks daily using rule-based systems before they ever bill advertisers.

Common rule-based detection parameters:

| Detection Rule | What It Catches | Limitations |
|---|---|---|
| IP velocity (clicks per hour) | Aggressive bots, competitors | Misses distributed attacks |
| Datacenter IP ranges | Simple bots, server-based attacks | Misses residential proxy networks |
| Known bad IPs/devices | Previously identified fraud sources | Does not catch new sources |
| User agent patterns | Bot frameworks with known signatures | Sophisticated bots spoof agents |
| Geographic mismatches | Traffic from untargeted countries | Misses domestic fraud |
| Session timing thresholds | Instant clicks, zero time on site | Misses human click farms |

Rule-based detection remains essential but insufficient. Modern fraudsters study detection rules and engineer around them. A bot that clicks three times per hour per IP, rotates through residential proxies, and includes realistic user agent strings passes most rule-based filters.
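The rules in the table can be combined into a single pass over each click. The sketch below is illustrative only: the thresholds, the `DATACENTER_RANGES` list (a documentation range used as a placeholder), the `BOT_UA_MARKERS` substrings, and the `Click` record are all assumptions, not vetted values. Real systems load ranges and signatures from IP intelligence feeds.

```python
import ipaddress
from dataclasses import dataclass

# Illustrative values only; production systems load these from IP intelligence feeds.
DATACENTER_RANGES = [ipaddress.ip_network("203.0.113.0/24")]  # placeholder range
BOT_UA_MARKERS = ("headlesschrome", "python-requests", "curl/", "phantomjs")
MAX_CLICKS_PER_HOUR = 3
MIN_TIME_ON_SITE_S = 1.0
TARGET_COUNTRIES = {"US"}

@dataclass
class Click:                    # hypothetical record assembled per click
    ip: str
    user_agent: str
    country: str
    clicks_last_hour: int       # from a velocity counter
    time_on_site_s: float

def rule_violations(click: Click) -> list[str]:
    """Return the name of every rule the click violates (empty list = passes)."""
    reasons = []
    if click.clicks_last_hour > MAX_CLICKS_PER_HOUR:
        reasons.append("ip_velocity")
    addr = ipaddress.ip_address(click.ip)
    if any(addr in net for net in DATACENTER_RANGES):
        reasons.append("datacenter_ip")
    ua = click.user_agent.lower()
    if any(marker in ua for marker in BOT_UA_MARKERS):
        reasons.append("bot_user_agent")
    if click.country not in TARGET_COUNTRIES:
        reasons.append("geo_mismatch")
    if click.time_on_site_s < MIN_TIME_ON_SITE_S:
        reasons.append("too_fast")
    return reasons

bot = Click("203.0.113.7", "python-requests/2.31", "US", 1, 0.2)
print(rule_violations(bot))  # ['datacenter_ip', 'bot_user_agent', 'too_fast']
```

Returning the list of triggered rules rather than a single yes/no keeps the output usable as input features for the statistical and ML layers described below.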

Second Generation: Statistical Anomaly Detection

Statistical approaches analyze traffic patterns to identify anomalies that deviate from legitimate baseline behavior. Rather than explicit rules, these systems learn what normal looks like and flag deviations.

Key statistical methods include:

Distribution Analysis: Legitimate traffic follows predictable distributions. Click volume by hour of day follows a predictable daily cycle, peaking during business hours and dipping overnight. Geographic distribution tracks the population of the targeted regions. Device mix reflects market share statistics. When traffic significantly deviates from these expected distributions, anomaly detection flags it for review.
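One common way to test whether traffic "significantly deviates" from an expected distribution is Pearson's chi-square goodness-of-fit statistic. The sketch below compares a campaign's click counts per time bucket with a baseline share per bucket; the four-bucket day and the baseline shares are made-up assumptions you would replace with profiles estimated from clean historical traffic.

```python
def chi_square_statistic(observed: list[int], expected_share: list[float]) -> float:
    """Pearson chi-square statistic: sum of (observed - expected)^2 / expected.

    expected_share is the baseline fraction of clicks per bucket (for example per
    hour of day); it is rescaled so expected counts sum to the observed total.
    """
    if len(observed) != len(expected_share):
        raise ValueError("observed and expected_share must have the same length")
    if any(share <= 0 for share in expected_share):
        raise ValueError("every bucket needs a positive baseline share")
    total = sum(observed)
    if total == 0:
        return 0.0
    share_sum = sum(expected_share)
    stat = 0.0
    for obs, share in zip(observed, expected_share):
        expected = total * share / share_sum
        stat += (obs - expected) ** 2 / expected
    return stat

# Hypothetical 4-bucket day (night, morning, afternoon, evening).
baseline = [0.10, 0.30, 0.35, 0.25]   # shares from clean historical traffic
campaign = [40, 30, 20, 10]           # today's clicks, heavy overnight
print(round(chi_square_statistic(campaign, baseline), 1))  # 105.4
```

With four buckets there are 3 degrees of freedom and the 1% critical value is about 11.34, so this campaign would be flagged; with 24 hourly buckets (23 degrees of freedom) the 1% cutoff is about 41.64. The approximation assumes reasonably large expected counts per bucket (a common guideline is at least 5).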

Velocity Scoring: Beyond simple threshold rules, velocity scoring examines acceleration patterns. A sudden 300% increase in clicks from a previously quiet IP range, even if each individual IP stays below detection thresholds, indicates coordinated fraud activity.
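A minimal sketch of velocity scoring at the IP-range level: clicks are aggregated per /24 subnet so that a burst spread across many individually quiet IPs still surfaces. The /24 grouping, the smoothing constant, the minimum volume, and the 4x ratio (a 300% increase) are illustrative assumptions, and the code assumes IPv4.

```python
import ipaddress
from collections import Counter

def subnet_key(ip: str, prefix: int = 24) -> str:
    """Collapse an IPv4 address to its enclosing network, e.g. 198.51.100.0/24."""
    return str(ipaddress.ip_network(f"{ip}/{prefix}", strict=False))

def accelerating_subnets(prev_window: list[str], curr_window: list[str],
                         ratio_threshold: float = 4.0, min_clicks: int = 20,
                         smoothing: float = 1.0) -> dict[str, float]:
    """Flag subnets whose click count grew by at least ratio_threshold between windows.

    prev_window and curr_window hold the click IPs from two equal-length windows.
    Additive smoothing keeps the ratio finite for subnets with no prior traffic,
    and min_clicks ignores small subnets where ratios are mostly noise.
    """
    prev = Counter(subnet_key(ip) for ip in prev_window)
    curr = Counter(subnet_key(ip) for ip in curr_window)
    flagged = {}
    for net, count in curr.items():
        if count < min_clicks:
            continue
        ratio = (count + smoothing) / (prev[net] + smoothing)
        if ratio >= ratio_threshold:
            flagged[net] = ratio
    return flagged
```

In a usage run where 30 different IPs in 198.51.100.0/24 each click once (after only 5 clicks in the previous window), no single IP trips a per-IP limit, yet the subnet's ratio of 31/6 exceeds the threshold and it is flagged.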

Correlation Analysis: Legitimate users exhibit consistent patterns across dimensions. A user on a mobile device typically shows mobile screen resolution, mobile browser behavior, and geographic location consistent with mobile usage. When correlations break – desktop browsing patterns from claimed mobile devices, or US timezone activity from claimed UK locations – statistical detection identifies the inconsistency.

Clustering Algorithms: Traffic can be grouped by behavioral similarity. Legitimate traffic naturally clusters around common user archetypes (mobile researchers, desktop comparers, quick converters). Fraudulent traffic often forms unusual clusters with distinctive patterns. K-means clustering, DBSCAN, and hierarchical clustering all find application in identifying these anomalous groupings.

Statistical detection catches fraud that follows patterns, even when individual instances pass rule-based filters. Its weakness: truly sophisticated fraud that mimics legitimate distribution patterns.

Third Generation: Machine Learning Detection

Machine learning models represent the current state of the art in click fraud detection. These systems ingest hundreds of features per click, learn complex non-linear relationships between features and fraud probability, and continuously improve as they encounter new fraud patterns.

How ML detection works:

Feature Engineering: Every click generates hundreds of potential features. IP characteristics (type, location, velocity, reputation). Device characteristics (fingerprint, screen properties, installed fonts). Behavioral characteristics (mouse movement patterns, click timing, scroll behavior). Session characteristics (pages visited, time on site, form interactions). Network characteristics (TLS fingerprint, HTTP headers, connection timing).

The art of ML fraud detection lies in engineering features that capture fraud signals without creating excessive noise. Raw features are combined, normalized, and transformed to maximize predictive value.

Model Architecture: Most production fraud detection systems employ ensemble methods – multiple models whose outputs are combined for final classification. Random forests, gradient boosting machines (XGBoost, LightGBM), and neural networks each capture different aspects of fraud behavior. Ensemble combinations typically outperform any single model type.

Training Paradigms: Fraud detection models train on labeled historical data – clicks known to be fraudulent or legitimate based on downstream outcomes. The challenge is label quality: how do you know which historical clicks were truly fraudulent? Approaches include:

- Confirmed fraud (clicks from known bot IPs, failed form submissions)

- Inferred fraud (clicks that never converted despite high conversion probability)

- Adversarial training (synthetic fraud patterns added to training data)

- Semi-supervised learning (leveraging unlabeled data alongside limited labeled examples)

Real-Time Inference: Production systems must score clicks in milliseconds. Model optimization for inference speed – pruning, quantization, distillation – enables real-time detection without introducing latency that would degrade user experience.

Continuous Learning: Fraud patterns evolve constantly. Models that trained on 2023 fraud patterns underperform against 2025 techniques. Production systems implement continuous learning pipelines that retrain models on recent data, test new models against holdout sets, and gradually roll out improvements.

Core Detection Technologies in Depth

Beyond the generational evolution of detection approaches, several specific technologies deserve detailed examination. These form the building blocks of comprehensive fraud detection systems.

IP Intelligence and Reputation

IP analysis remains foundational to fraud detection, but modern IP intelligence goes far beyond simple blacklists.

IP Type Classification: The distinction between residential, mobile, datacenter, and proxy IPs provides the first layer of risk assessment. Services like MaxMind, IP2Location, and Digital Element classify IPs by type with high accuracy, and the same lookups can feed lead validation and verification services. Datacenter traffic is not inherently fraudulent – corporate networks and VPN users generate legitimate datacenter traffic – but datacenter origin combined with other risk signals substantially increases fraud probability.

Autonomous System Number (ASN) Analysis: IPs belong to numbered autonomous systems operated by specific organizations. Some ASNs are associated primarily with legitimate consumer ISPs; others are known to host proxy services, VPN providers, or hosting infrastructure commonly used for fraud. ASN reputation scoring adds context beyond individual IP analysis.

IP Velocity and History: Real-time tracking of IP behavior across multiple clients enables velocity-based detection. An IP that clicked on 50 different advertisers in the past hour exhibits patterns inconsistent with legitimate browsing. Cross-client intelligence, where detection platforms share anonymized IP behavior data, substantially improves detection compared to single-advertiser analysis.

Geolocation Confidence: IP geolocation is not binary. Detection systems score geolocation confidence using multiple data sources, ISP registration data, and historical location patterns. When a click claims New York but IP evidence points to Mumbai with 85% confidence, that mismatch is a strong fraud signal even though the location estimate is not certain.

Implementation considerations: IP intelligence APIs typically charge $0.001-$0.01 per lookup. At scale, these costs become significant. Caching strategies that store lookups for 24-48 hours reduce API costs while maintaining detection accuracy, since IP characteristics rarely change faster than daily.
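A minimal sketch of that caching strategy, assuming a hypothetical `lookup_fn` that wraps whichever IP intelligence API you use (no vendor SDK is shown). Entries expire after a configurable TTL, 24 hours by default; the injectable clock exists only to make expiry testable.

```python
import time
from typing import Any, Callable

class TTLCache:
    """Caches IP intelligence lookups for ttl_seconds to cut per-lookup API costs."""

    def __init__(self, lookup_fn: Callable[[str], Any], ttl_seconds: float = 24 * 3600,
                 clock: Callable[[], float] = time.monotonic):
        self._lookup = lookup_fn    # hypothetical wrapper around an IP intelligence API
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}
        self.api_calls = 0          # paid lookups actually made

    def get(self, ip: str) -> Any:
        now = self._clock()
        hit = self._entries.get(ip)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]           # fresh cached result, no API cost
        self.api_calls += 1
        result = self._lookup(ip)
        self._entries[ip] = (now, result)
        return result
```

This in-process dictionary never evicts entries; a shared deployment would more likely use a store with built-in key expiry, such as Redis, so every scoring node benefits from the same cached lookups.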

Device Fingerprinting

Device fingerprinting identifies unique devices based on their configuration characteristics. When implemented properly, fingerprinting persists across sessions and browsing modes, enabling detection of repeat fraud attempts even when IPs change.

Canvas Fingerprinting: Browsers render graphics using the HTML5 canvas element. Because of differences in graphics hardware, drivers, and rendering engines, the same drawing instructions produce subtly different outputs on different devices. These differences, hashed into a fingerprint, identify devices with high uniqueness. A canvas fingerprint persists even when cookies are cleared, although different browsers on the same device usually produce different canvas output.

WebGL Fingerprinting: Similar to canvas, WebGL (Web Graphics Library) renders 3D graphics with device-specific variations. The combination of GPU vendor, renderer string, and rendering output creates additional fingerprint entropy.

Audio Context Fingerprinting: The Web Audio API processes audio signals with hardware-dependent characteristics. The same audio processing code produces measurably different outputs on different devices, adding another fingerprint dimension.

Font Detection: The set of installed fonts varies by device. JavaScript can detect which fonts are available by measuring rendering width of test characters in each font. The specific font set creates a fingerprint component.

Browser Property Collection: Screen resolution, color depth, timezone, language settings, installed plugins, Do Not Track settings, and numerous other browser-accessible properties combine to create fingerprint distinctiveness.

Fingerprint Entropy and Uniqueness: A single fingerprint component may have only dozens of possible values. Combined, hundreds of components create fingerprints with entropy sufficient to uniquely identify most devices. Research suggests that browser fingerprints are unique for 80-95% of devices.
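The entropy argument can be made concrete. Shannon entropy, in bits, measures how much a component narrows down which device you are looking at, and the surprisal of one value (-log2 of its frequency) measures how much that particular device reveals. If components were independent their entropies would add, and about 33 bits would single out one device among roughly 8 billion (2^33 is about 8.6 billion). Components are correlated in practice, so summed entropy overstates real uniqueness. The timezone sample below is made up.

```python
import math
from collections import Counter

def shannon_entropy_bits(values: list[str]) -> float:
    """Entropy H = -sum(p * log2(p)) over the observed values of one component."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())

def surprisal_bits(value: str, values: list[str]) -> float:
    """Bits revealed by one device's value: -log2 of that value's frequency."""
    return -math.log2(values.count(value) / len(values))

# Made-up sample of a timezone component across 8 visitors.
tz = ["America/New_York"] * 4 + ["America/Chicago"] * 2 + ["America/Denver", "America/Los_Angeles"]
print(shannon_entropy_bits(tz))              # 1.75
print(surprisal_bits("America/Denver", tz))  # 3.0
```

A rare value (Denver here) reveals more than the average component does, which is why uncommon configurations are far easier to re-identify than common ones.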

Anti-Fingerprinting Countermeasures: Privacy-focused browsers like Brave and Firefox implement fingerprinting protection that randomizes or standardizes certain properties. Detection systems must account for these countermeasures – a fingerprint that changes every session may itself be a fraud signal if inconsistent with the browser’s claimed identity.

Implementation architecture: JavaScript-based fingerprinting executes in the user’s browser and transmits fingerprint data to your server. Server-side storage enables cross-session matching. Hashing the fingerprint (rather than storing raw component data) reduces storage requirements and addresses privacy concerns while maintaining matching capability.
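A minimal sketch of the server-side hashing step, assuming the browser script has already sent its components as a dictionary (the component names and values are hypothetical). Serializing with sorted keys makes the hash independent of field order, and SHA-256 yields a fixed-length key for cross-session matching.

```python
import hashlib
import json

def fingerprint_hash(components: dict) -> str:
    """Hash fingerprint components into a stable hex key for storage and matching."""
    canonical = json.dumps(components, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Hypothetical components reported by the client-side script on two visits.
seen: dict[str, int] = {}
for payload in (
    {"canvas": "a91f03", "webgl_renderer": "ANGLE (NVIDIA)", "tz": "America/Chicago", "screen": "1920x1080"},
    {"screen": "1920x1080", "tz": "America/Chicago", "webgl_renderer": "ANGLE (NVIDIA)", "canvas": "a91f03"},
):
    key = fingerprint_hash(payload)
    seen[key] = seen.get(key, 0) + 1
print(len(seen), max(seen.values()))  # 1 2 -> the same device seen twice
```

Exact hashing has a cost: any changed component (a browser update, a new monitor) produces a new key, so matching across such changes requires keeping some components separately rather than only the combined hash.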

Behavioral Analysis

The most sophisticated fraud detection examines how users interact with pages, not just what data they submit. Behavioral analysis detects fraud that passes all device and network checks.

Mouse Movement Analysis: Human mouse movements follow characteristic patterns – curved trajectories, micro-adjustments as the cursor approaches targets, acceleration and deceleration curves that reflect motor control. Bot mouse movements are often linear, lack micro-corrections, or exhibit perfectly constant velocity. Machine learning models trained on movement vectors can distinguish human from automated interaction with 85-95% accuracy.
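Two simple trajectory features illustrate what such models consume: straightness (straight-line distance divided by path length, where 1.0 is a perfectly straight line) and the coefficient of variation of point-to-point speed (near 0 for constant-velocity movement). The sample format, a list of `(x, y, t_ms)` tuples, is an assumption; production models learn from many more features rather than fixed cutoffs.

```python
import math
import statistics

def trajectory_features(points: list[tuple[float, float, float]]) -> dict[str, float]:
    """Straightness and speed variability from (x, y, t_ms) mouse samples."""
    if len(points) < 3:
        raise ValueError("need at least three samples")
    path_len, speeds = 0.0, []
    for (x0, y0, t0), (x1, y1, t1) in zip(points, points[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        path_len += step
        if t1 > t0:
            speeds.append(step / (t1 - t0))   # pixels per millisecond
    if not speeds:
        raise ValueError("timestamps must increase")
    (xs, ys, _), (xe, ye, _) = points[0], points[-1]
    direct = math.hypot(xe - xs, ye - ys)
    mean_speed = statistics.fmean(speeds)
    return {
        # 1.0 = perfectly straight path; human paths tend to curve
        "straightness": direct / path_len if path_len else 1.0,
        # ~0 = constant velocity; humans accelerate and decelerate
        "speed_cv": statistics.pstdev(speeds) / mean_speed if mean_speed else 0.0,
    }

bot = [(0, 0, 0), (10, 0, 10), (20, 0, 20), (30, 0, 30)]
human = [(0, 0, 0), (8, 5, 12), (20, 7, 30), (29, 2, 41), (30, 0, 60)]
print(trajectory_features(bot))    # {'straightness': 1.0, 'speed_cv': 0.0}
print(trajectory_features(human))
```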

Keystroke Dynamics: When users type, they exhibit unique timing patterns – dwell time (how long a key is held), flight time (time between releasing one key and pressing the next), and error correction patterns. Bots typing at perfectly constant speed or with suspiciously uniform timing patterns fail keystroke analysis.
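A minimal sketch of extracting dwell and flight times, assuming the page records `(key, down_ms, up_ms)` for each keystroke in typing order. Near-zero variation in both series is the "suspiciously uniform timing" described above; the 0.1 coefficient-of-variation cutoff is an assumption for illustration, not a published standard.

```python
import math
import statistics

def _cv(xs: list[float]) -> float:
    """Coefficient of variation: standard deviation relative to the mean."""
    mean = statistics.fmean(xs)
    sd = statistics.pstdev(xs)
    if mean == 0:
        return 0.0 if sd == 0 else math.inf
    return sd / abs(mean)

def keystroke_timing(events: list[tuple[str, float, float]]) -> dict[str, float]:
    """events: (key, down_ms, up_ms) in typing order.

    Dwell = how long each key is held (up - down).
    Flight = gap from releasing one key to pressing the next (next down - previous up);
    it can be negative when the next key is pressed before the last is released.
    """
    if len(events) < 3:
        raise ValueError("need at least three keystrokes")
    dwell = [up - down for _, down, up in events]
    flight = [nxt[1] - prev[2] for prev, nxt in zip(events, events[1:])]
    return {"mean_dwell_ms": statistics.fmean(dwell),
            "dwell_cv": _cv(dwell), "flight_cv": _cv(flight)}

def looks_scripted(events: list[tuple[str, float, float]], min_cv: float = 0.1) -> bool:
    """Flag typing whose dwell and flight times are both nearly constant."""
    t = keystroke_timing(events)
    return t["dwell_cv"] < min_cv and t["flight_cv"] < min_cv

scripted = [("a", 0, 50), ("b", 100, 150), ("c", 200, 250), ("d", 300, 350)]
typed = [("j", 0, 95), ("o", 140, 210), ("h", 330, 400), ("n", 445, 560)]
print(looks_scripted(scripted), looks_scripted(typed))  # True False
```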

Scroll Behavior: Legitimate users scroll with variable velocity, pause on content, and scroll incrementally rather than directly to page bottom. Bots often do not scroll at all, or scroll with mechanical uniformity that reveals their nature.

Session Navigation Patterns: Human browsing sessions follow logical patterns – users read content, click links to learn more, compare options, and eventually convert or leave. Bot sessions often proceed directly to conversion points without the exploratory behavior that precedes legitimate purchase decisions.

Temporal Patterns: The timing between page load and form completion, between field completions, and between page navigations all carry behavioral signals. A form completed in 2 seconds (impossible for human reading and typing) or with suspiciously uniform inter-field timing indicates automation.

Implementation architecture: Behavioral analysis requires JavaScript that captures interaction events and transmits them for analysis. This creates trade-offs: comprehensive event capture enables better detection but increases page weight and may impact performance. Sampling strategies that capture sufficient behavior without overwhelming bandwidth balance these concerns.

Network and Protocol Analysis

Analysis at the network protocol level reveals fraud signals invisible to application-layer detection.

TLS Fingerprinting (JA3/JA4): When clients establish TLS connections, they advertise supported cipher suites, extensions, and parameters in a ClientHello message. The specific combination creates a fingerprint that identifies the client software. A request claiming to be Chrome but presenting a JA3 fingerprint matching a Python requests library reveals client spoofing.
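A minimal sketch of how a JA3 fingerprint is computed once a TLS terminator or packet parser has extracted the ClientHello fields (parsing the handshake itself is assumed and out of scope). Following the JA3 method, the decimal values of the TLS version, cipher suites, extensions, elliptic curves, and point formats are joined with dashes within a field and commas between fields, GREASE values (RFC 8701) are dropped, and the resulting string is MD5-hashed. Because JA3 keeps extensions in the order sent, Chrome's randomized extension ordering (enabled in Chrome 110) produces varying JA3 hashes for the same browser; JA4 sorts cipher suites and extensions before hashing, which avoids that problem.

```python
import hashlib

def is_grease(value: int) -> bool:
    """GREASE values (RFC 8701) have equal bytes ending in 0xA: 0x0A0A, 0x1A1A, ..., 0xFAFA."""
    return (value >> 8) == (value & 0xFF) and (value & 0x0F) == 0x0A

def ja3(version: int, ciphers: list[int], extensions: list[int],
        curves: list[int], point_formats: list[int]) -> tuple[str, str]:
    """Return (JA3 string, MD5 hex digest) for ClientHello fields given as integers."""
    def field(values: list[int]) -> str:
        # Decimal values, dash-separated, in the order the client sent them.
        return "-".join(str(v) for v in values if not is_grease(v))
    ja3_string = ",".join([str(version), field(ciphers), field(extensions),
                           field(curves), field(point_formats)])
    return ja3_string, hashlib.md5(ja3_string.encode("ascii")).hexdigest()

# Hypothetical fields from one ClientHello (0x0A0A is a GREASE placeholder).
s, digest = ja3(771, [0x0A0A, 4865, 4866, 49195], [0x0A0A, 0, 23, 65281, 10, 11],
                [29, 23, 24], [0])
print(s)  # 771,4865-4866-49195,0-23-65281-10-11,29-23-24,0
```

Detection then compares `digest` against known values for the claimed client; a User-Agent claiming Chrome paired with a hash known to belong to a scripting library is the mismatch described above.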

HTTP Header Analysis: Legitimate browsers send consistent, predictable HTTP headers. Automated clients often omit standard headers, include headers in unusual order, or present header combinations that no legitimate browser produces.

TCP/IP Fingerprinting: Operating systems implement TCP/IP stacks with characteristic variations in window sizes, options, and timing. Passive OS fingerprinting can identify claimed-browser mismatches (claiming to be Chrome on Windows while exhibiting Linux TCP behavior).

Connection Timing: Network characteristics like TCP handshake timing, time-to-first-byte, and request spacing carry information about client location and nature. Requests that arrive with datacenter-like timing precision despite claiming residential origin merit additional scrutiny.

Encrypted Traffic Analysis: Even with encrypted payloads, traffic analysis of packet sizes, timing, and patterns can identify automated behavior. This emerging area shows promise for detecting sophisticated bots that otherwise evade application-layer detection.

Machine Learning Model Architecture for Fraud Detection

Building effective ML-based fraud detection requires careful architectural decisions across data pipeline, model design, and production deployment.

Feature Engineering for Fraud Detection

The features you engineer determine detection effectiveness more than model choice. Fraud detection feature engineering follows established patterns.

Categorical Encoding: Features like IP type, browser name, and country require encoding for ML consumption. One-hot encoding works for low-cardinality features; target encoding or embedding layers handle high-cardinality features like ASN or city.
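A minimal sketch of smoothed target encoding for a high-cardinality feature such as ASN: each category is replaced by a blend of its observed fraud rate and the global rate, weighted by how many examples the category has. The smoothing weight `m` is a tuning assumption, and the ASNs used in the example are from the range reserved for documentation. To avoid label leakage, fit the encoding on training data only (or out-of-fold).

```python
from collections import defaultdict

def fit_target_encoding(categories: list[str], labels: list[int],
                        m: float = 10.0) -> tuple[dict[str, float], float]:
    """Smoothed target encoding: (positives + m * prior) / (count + m) per category.

    Categories with few examples are pulled toward the global fraud rate (the prior);
    m controls how many examples it takes before a category's own rate dominates.
    """
    prior = sum(labels) / len(labels)
    counts: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for cat, y in zip(categories, labels):
        counts[cat] += 1
        positives[cat] += y
    encoding = {cat: (positives[cat] + m * prior) / (counts[cat] + m) for cat in counts}
    return encoding, prior

def encode(cat: str, encoding: dict[str, float], prior: float) -> float:
    """Unseen categories (for example a new ASN) fall back to the global fraud rate."""
    return encoding.get(cat, prior)

asns = ["AS64500"] * 8 + ["AS64501"] * 2
fraud = [1] * 6 + [0] * 2 + [0, 0]
enc, prior = fit_target_encoding(asns, fraud, m=2.0)
print({k: round(v, 2) for k, v in enc.items()}, prior)  # {'AS64500': 0.72, 'AS64501': 0.3} 0.6
```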

Temporal Features: Time-based features capture fraud patterns that vary by hour, day, or week. Click hour, day of week, time since campaign start, and time since last click from same IP all carry predictive signal.

Velocity Features: Aggregated counts over time windows – clicks per IP in last hour, clicks per device fingerprint in last day, clicks per campaign in last minute – capture fraud bursts invisible at individual-click level.
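A minimal in-memory sketch of a sliding-window velocity counter, keyed by any entity (IP, fingerprint hash, campaign). It assumes timestamps arrive in non-decreasing order. A production system would typically keep these counters in a shared store so every scoring node sees the same counts; that part is omitted.

```python
from collections import defaultdict, deque

class SlidingWindowCounter:
    """Counts events per key within the trailing window_seconds."""

    def __init__(self, window_seconds: float):
        self.window = window_seconds
        self._events: dict[str, deque] = defaultdict(deque)

    def add(self, key: str, ts: float) -> int:
        """Record an event at time ts (seconds, non-decreasing) and return the window count."""
        q = self._events[key]
        q.append(ts)
        while q and q[0] <= ts - self.window:
            q.popleft()             # drop events that fell out of the window
        return len(q)

clicks_per_ip_hour = SlidingWindowCounter(3600)
for t in (0, 1000, 3599, 3600):
    count = clicks_per_ip_hour.add("198.51.100.7", t)
print(count)  # 3: the click at t=0 is exactly one hour old and has expired
```

The returned count can be used directly as the "clicks per IP in last hour" feature at scoring time.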

Ratio Features: Proportional features often outperform raw counts. The ratio of datacenter to residential IPs in a campaign, the ratio of mobile to desktop traffic, and similar proportional measures normalize for volume differences.

Interaction Features: Combinations of features often reveal fraud that individual features miss. The interaction of datacenter IP with zero time-on-site, or high velocity with known bad ASN, captures signals stronger than component features alone.

Embedding Features: For high-dimensional categorical data like IP addresses, learned embeddings that map similar entities to nearby vectors capture latent fraud relationships that explicit features miss.

Model Selection and Ensemble Design

Different model types excel at different fraud patterns. Production systems typically ensemble multiple approaches.

Gradient Boosting (XGBoost, LightGBM, CatBoost): These models excel at tabular data with mixed feature types. They handle missing values gracefully, provide feature importance rankings, and train quickly on moderate-sized datasets. Gradient boosting typically forms the backbone of fraud detection ensembles.

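Random Forests: While generally less accurate than gradient boosting, random forests are more resistant to overfitting and work well as ensemble components. Their raw probability estimates are often poorly calibrated, so calibration (for example, Platt scaling or isotonic regression) is usually applied before their scores are combined or thresholded.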

Neural Networks: Deep learning models capture complex non-linear patterns in behavioral and sequence data. LSTM networks excel at temporal patterns in session behavior. Autoencoders detect anomalies by learning to reconstruct legitimate traffic and flagging inputs with high reconstruction error.

Isolation Forests: For unsupervised anomaly detection, isolation forests identify outliers without requiring labeled fraud data. They complement supervised models by catching novel fraud patterns that labeled training data may not include.

Ensemble Strategies: Combining model outputs through voting, averaging, or stacking typically improves detection by 5-15% over single best models. Stacking – training a meta-model on base model outputs – captures complementary strengths while limiting individual model weaknesses.

Training Pipeline Design

Fraud detection model training requires handling imbalanced data, avoiding label leakage, and managing temporal shifts.

Class Imbalance: Fraud typically represents 15-25% of traffic – significant but still minority. Techniques for handling imbalance include:

- Oversampling minority class (SMOTE, ADASYN)

- Undersampling majority class

- Class weights that penalize misclassifying minority examples

- Threshold optimization that prioritizes recall over precision
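The class-weight option can be computed directly. The sketch uses the inverse-frequency heuristic `n_samples / (n_classes * count)`, the same formula scikit-learn applies for `class_weight="balanced"`; with 20% fraud, each fraud example counts four times as much as each legitimate one.

```python
from collections import Counter

def balanced_class_weights(labels: list[int]) -> dict[int, float]:
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

def sample_weights(labels: list[int]) -> list[float]:
    """Per-example weights, e.g. for a model's fit(..., sample_weight=...) argument."""
    w = balanced_class_weights(labels)
    return [w[y] for y in labels]

labels = [1] * 20 + [0] * 80   # 20% fraud
print(balanced_class_weights(labels))  # {1: 2.5, 0: 0.625}
```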

Temporal Splitting: Time-series cross-validation prevents leakage of future information into training. Training on January data and validating on February data simulates real deployment where models must generalize to future fraud patterns.
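A minimal sketch of time-ordered splitting, assuming each record carries a `ts` datetime. `temporal_split` makes a single train/validation cut; `expanding_window_folds` produces time-series cross-validation folds in which each fold trains on everything before a cutoff and validates on the following period.

```python
from datetime import datetime

def temporal_split(records: list[dict], cutoff: datetime) -> tuple[list[dict], list[dict]]:
    """Train on records strictly before cutoff, validate on records at or after it."""
    train = [r for r in records if r["ts"] < cutoff]
    valid = [r for r in records if r["ts"] >= cutoff]
    return train, valid

def expanding_window_folds(records: list[dict], cutoffs: list[datetime]):
    """Yield (train, valid): train on all data before each cutoff, validate until the next."""
    ordered = sorted(records, key=lambda r: r["ts"])
    for start, end in zip(cutoffs, cutoffs[1:]):
        train = [r for r in ordered if r["ts"] < start]
        valid = [r for r in ordered if start <= r["ts"] < end]
        yield train, valid

clicks = [{"ts": datetime(2025, m, 15), "month": m} for m in (1, 2, 3)]
for train, valid in expanding_window_folds(
        clicks, [datetime(2025, 2, 1), datetime(2025, 3, 1), datetime(2025, 4, 1)]):
    print([r["month"] for r in train], "->", [r["month"] for r in valid])
# [1] -> [2]
# [1, 2] -> [3]
```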

Feature Drift Monitoring: Fraud patterns shift as attackers adapt. Monitoring feature distributions over time identifies when model inputs no longer resemble training data, signaling need for retraining.
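One widely used drift statistic is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its current distribution: PSI = Σ (a_i − e_i) · ln(a_i / e_i), where e_i and a_i are the baseline and current shares of bin i. A common rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift; those cutoffs are conventions, not guarantees. The sketch assumes the bins are already computed and floors shares at a small epsilon to avoid log(0).

```python
import math

def psi(expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current histogram."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("histograms must use the same bins")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    total = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_share = max(e / e_total, eps)
        a_share = max(a / a_total, eps)
        total += (a_share - e_share) * math.log(a_share / e_share)
    return total

# Hypothetical share of datacenter IPs: 50/50 split at training time, 80/20 today.
print(round(psi([50, 50], [80, 20]), 3))  # 0.416
```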

Label Quality Management: Labels come from multiple sources with varying reliability. Confirmed fraud (known bot IPs, failed honeypots) provides high-confidence labels. Inferred fraud (zero conversion, immediate bounce) is noisier. Weighting examples by label confidence improves model quality.

Production Deployment Considerations

Moving fraud detection from training notebooks to production requires addressing latency, reliability, and observability.

Latency Requirements: Click fraud detection must score requests in 10-50 milliseconds to avoid impacting ad auctions or page load. Techniques for meeting this budget include:

- Feature precomputation (looking up IP intelligence before model inference)

- Model distillation (training smaller models to mimic larger ones)

- Quantization (reducing numerical precision for faster inference)

- Batching (processing multiple requests together for GPU efficiency)

Reliability Architecture: Fraud detection failure should not block legitimate traffic. Architectures typically include:

- Fallback to rule-based detection if ML scoring fails

- Circuit breakers that disable detection during outages

- Graceful degradation that allows traffic when confidence is low

- Redundant model serving with automatic failover
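A minimal sketch of the fallback and circuit-breaker items above. `ml_score` and `rule_score` are hypothetical callables standing in for your model client and rule engine. After `max_failures` consecutive model errors the breaker opens and scoring goes straight to the rules for `cooldown_s` seconds before the model is tried again; the clock is injectable only for testing.

```python
import time
from typing import Callable

class FallbackScorer:
    """Scores with the ML model, falling back to rules when the model fails."""

    def __init__(self, ml_score: Callable[[dict], float], rule_score: Callable[[dict], float],
                 max_failures: int = 3, cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.ml_score, self.rule_score = ml_score, rule_score
        self.max_failures, self.cooldown_s, self.clock = max_failures, cooldown_s, clock
        self.failures = 0           # consecutive model errors
        self.open_until = 0.0       # breaker stays open until this time

    def score(self, click: dict) -> tuple[float, str]:
        """Return (fraud score, which path produced it)."""
        if self.clock() < self.open_until:
            return self.rule_score(click), "rules (breaker open)"
        try:
            result = self.ml_score(click)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.open_until = self.clock() + self.cooldown_s
                self.failures = 0
            return self.rule_score(click), "rules (model error)"
        self.failures = 0
        return result, "model"
```

In a real service, a model call that exceeds the latency budget would also be treated as a failure (for example by having the model client raise on timeout), so slow scoring degrades to rules instead of delaying the page.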

Observability and Debugging: Understanding why models flag specific clicks requires:

- Feature logging for flagged requests

- SHAP or LIME explanations identifying influential features

- Dashboards showing detection rates, false positive rates, and feature distributions

- A/B testing infrastructure for evaluating model changes

Implementing Click Fraud Detection: Practical Architecture

Translating detection technology into working systems requires integration with your lead generation infrastructure.

Architecture Options by Scale

Small Operations (Under $10,000/month ad spend): Third-party solutions like ClickCease, Lunio, or TrafficGuard provide turnkey detection without infrastructure investment. These platforms integrate via JavaScript tags and API connections to ad platforms, automating IP exclusion and providing detection dashboards.

- Cost: $79-500/month depending on volume

- Implementation time: 1-2 hours

- Typical detection rate: 60-80% of fraud

Mid-Market Operations ($10,000-100,000/month ad spend): Enterprise fraud platforms (CHEQ, TrafficGuard Enterprise) provide more sophisticated detection with dedicated support. Custom integration, advanced behavioral analysis, and cross-channel protection justify higher costs at this scale.

- Cost: $500-5,000/month

- Implementation time: 1-2 weeks

- Typical detection rate: 75-90% of fraud

Enterprise Operations (Over $100,000/month ad spend): Custom detection systems built on fraud detection APIs (Fingerprint.js, HUMAN, Arkose Labs) combined with in-house ML models provide maximum control and detection capability. This approach requires engineering investment but enables competitive differentiation.

- Cost: $5,000-50,000/month including engineering time

- Implementation time: 2-6 months

- Typical detection rate: 85-95% of fraud

Integration Architecture

Fraud detection integrates at multiple points in the lead generation flow:

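Pre-Click Detection (Ad Platform Level): IP exclusion lists uploaded to Google Ads keep known fraud sources from seeing and clicking your ads again. Meta does not offer IP-level exclusions, so protection there typically relies on excluding flagged visitors through custom audiences. Automated systems sync exclusion lists hourly, adding newly detected fraud sources.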

Landing Page Detection (JavaScript Layer): Client-side JavaScript captures device fingerprints, behavioral signals, and session characteristics, which are sent to fraud detection services for real-time scoring.

Form Submission Detection (Server Layer): Server-side validation on form submission enables blocking fraudulent leads before they enter your system. Honeypot fields, timing analysis, and cross-referencing with pre-click signals enable comprehensive detection.
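A minimal sketch of two of these server-side checks. The honeypot is a form field hidden from humans with CSS, so any value in it suggests a bot. For timing, the server embeds an HMAC-signed render timestamp in the form so the client cannot forge how long the form was open. The field names, the secret, and the 3-second minimum are assumptions; cross-referencing with pre-click signals is not shown.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"replace-with-a-real-secret"  # hypothetical signing key; load from config
MIN_FILL_SECONDS = 3.0                   # assumed minimum plausible completion time
HONEYPOT_FIELD = "website_url"           # hypothetical field hidden from humans via CSS

def sign_render_time(ts: float) -> str:
    """Token embedded in the form at render time: '<timestamp>.<hmac>'."""
    ts_str = f"{ts:.3f}"
    return ts_str + "." + hmac.new(SECRET, ts_str.encode(), hashlib.sha256).hexdigest()

def check_submission(form: dict, now: Optional[float] = None) -> list[str]:
    """Return reasons to reject the lead; an empty list means these checks passed."""
    now = time.time() if now is None else now
    reasons = []
    if form.get(HONEYPOT_FIELD):             # humans never see it, so it stays blank
        reasons.append("honeypot_filled")
    ts_str, _, sig = form.get("render_token", "").rpartition(".")
    expected = hmac.new(SECRET, ts_str.encode(), hashlib.sha256).hexdigest()
    if not ts_str or not hmac.compare_digest(sig.encode(), expected.encode()):
        reasons.append("bad_render_token")   # missing or forged timestamp
    elif now - float(ts_str) < MIN_FILL_SECONDS:
        reasons.append("too_fast")           # faster than a human can read and type
    return reasons
```

A signed timestamp can still be replayed, so a fuller version would also expire tokens and bind them to the session.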

Post-Submission Detection (Async Analysis): Leads that pass real-time checks undergo additional async analysis. IP reputation lookups, identity verification, and cross-referencing with known fraud databases identify fraud that real-time processing missed.

Feedback Loop Integration: Conversion data, buyer returns, and quality complaints feed back into detection systems. Leads that never convert or that buyers reject as fraudulent provide training signal for detection models. Understanding lead return policies helps structure these feedback loops.

Data Pipeline for Detection

Effective fraud detection requires data infrastructure that captures, stores, and processes detection signals.

Event Collection: Every page load, click, scroll, and form interaction generates events. Collection infrastructure (Google Tag Manager, Segment, or custom event collectors) captures these events with sub-second latency.

Feature Store: Pre-computed features (IP reputation, historical velocity, device fingerprint match) are kept in feature stores that support low-latency retrieval during scoring, typically within a millisecond to a few milliseconds. Redis, DynamoDB, or dedicated feature store platforms (Feast, Tecton) provide this capability.

Model Serving: ML models deploy behind model serving infrastructure (TensorFlow Serving, TorchServe, or cloud-native solutions like AWS SageMaker Endpoints) that handle load balancing, autoscaling, and version management.

Feedback Processing: Conversion events, return data, and quality signals flow through streaming pipelines that update training datasets and trigger model retraining when performance degrades.

Detection Metrics and Performance Measurement

Evaluating fraud detection effectiveness requires understanding appropriate metrics and their trade-offs.

Core Detection Metrics

True Positive Rate (Recall): The percentage of actual fraud that your system detects. Higher recall means catching more fraud, but typically increases false positives.

False Positive Rate: The percentage of legitimate traffic incorrectly flagged as fraud. High false positive rates mean blocking real customers – often worse than letting some fraud through.

Precision: Of clicks flagged as fraud, what percentage are actually fraudulent? Low precision means wasting investigation effort on false alarms.

Area Under ROC Curve (AUC-ROC): The overall discriminative ability of your detection system across all threshold settings. AUC-ROC of 0.9 or higher indicates strong detection capability.

Precision-Recall AUC: For imbalanced fraud detection, precision-recall curves often provide more meaningful evaluation than ROC curves.
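A small standard-library sketch of these metrics on labeled outcomes (1 = fraud). Recall, false positive rate, and precision come from the confusion matrix at one threshold; ROC AUC is computed threshold-free as the probability that a randomly chosen fraud click scores higher than a randomly chosen legitimate one (ties count half), which equals the area under the ROC curve. The pairwise loop is for illustration and does not scale to large datasets.

```python
def confusion_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Recall (true positive rate), false positive rate, and precision."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return {
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }

def roc_auc(y_true: list[int], scores: list[float]) -> float:
    """Pairwise (Mann-Whitney) AUC: share of fraud/legit pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y = [1, 1, 0, 0, 0]
scores = [0.9, 0.4, 0.6, 0.2, 0.1]
print(confusion_metrics(y, [int(s >= 0.5) for s in scores]))
print(roc_auc(y, scores))  # 5 of 6 pairs ranked correctly
```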

Business-Oriented Metrics

Fraud Savings: Total ad spend prevented from reaching fraudulent clicks. Calculated as (detected fraud clicks) x (average CPC).

Detection Cost per Dollar Saved: The ratio of detection system costs to fraud prevented. Healthy systems operate at $0.02-0.10 per dollar saved.
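A worked example of both formulas with hypothetical numbers: 1,200 detected fraud clicks at a $25 average CPC and a $1,500 monthly detection cost, which lands inside the healthy range quoted above.

```python
def fraud_savings(detected_fraud_clicks: int, avg_cpc: float) -> float:
    """Ad spend kept away from fraudulent clicks: detected clicks x average CPC."""
    return detected_fraud_clicks * avg_cpc

def cost_per_dollar_saved(detection_cost: float, savings: float) -> float:
    """Detection spend divided by fraud prevented."""
    return detection_cost / savings

saved = fraud_savings(1200, 25.0)
ratio = cost_per_dollar_saved(1500.0, saved)
print(f"${saved:,.0f} saved at ${ratio:.2f} per dollar saved")
# $30,000 saved at $0.05 per dollar saved
```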

Lead Quality Improvement: Downstream lead quality metrics (contact rate, conversion rate, buyer return rate) compared before and after detection implementation.

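False Block Rate: Estimated revenue lost to incorrectly blocked legitimate traffic. Estimating it requires a holdout test: disable blocking for a random sample of traffic and compare that sample's downstream outcomes with those of the protected traffic.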

Setting Detection Thresholds

The threshold at which fraud scores trigger blocking involves business trade-offs:

Conservative Thresholds (High Score Required to Block): Minimize false positives, letting some fraud through. Appropriate when lead values are high and blocking legitimate traffic is costly.

Aggressive Thresholds (Lower Scores Trigger Blocks): Maximize fraud detection at the cost of blocking some legitimate traffic. Appropriate when fraud rates are very high or lead values are moderate.

Dynamic Thresholds: Adjusting thresholds based on context – tighter during suspicious traffic spikes, looser during normal periods – balances detection and false positive costs.

Most operations find optimal thresholds through iterative testing, starting conservative and gradually tightening until false positive rates become problematic.
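One way to make the threshold trade-off explicit is to pick the score cutoff that minimizes expected cost on a labeled validation set, where a missed fraud click costs roughly its CPC and a wrongly blocked legitimate visitor costs the expected value of the lost lead. The labels, scores, and cost figures below are hypothetical placeholders.

```python
def best_threshold(y_true: list[int], scores: list[float],
                   cost_missed_fraud: float, cost_false_block: float) -> tuple[float, float]:
    """Return (threshold, total_cost); clicks with score >= threshold are blocked."""
    candidates = sorted(set(scores)) + [float("inf")]   # inf = block nothing
    best = (float("inf"), float("inf"))
    for th in candidates:
        cost = 0.0
        for t, s in zip(y_true, scores):
            blocked = s >= th
            if t == 1 and not blocked:
                cost += cost_missed_fraud       # fraud got through and was paid for
            elif t == 0 and blocked:
                cost += cost_false_block        # a real prospect was turned away
        if cost < best[1]:
            best = (th, cost)
    return best

y = [1, 1, 0, 0, 0]
scores = [0.9, 0.4, 0.6, 0.2, 0.1]
print(best_threshold(y, scores, cost_missed_fraud=25, cost_false_block=100))  # (0.9, 25.0)
print(best_threshold(y, scores, cost_missed_fraud=25, cost_false_block=10))   # (0.4, 10.0)
```

High lead values push the cutoff up (a conservative threshold); cheap leads push it down (an aggressive one), mirroring the trade-off described above.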

Benchmarking Detection Performance

Industry benchmarks provide context for evaluating your detection effectiveness:

| Metric | Average Performance | Top Quartile | Elite (Top 10%) |
|---|---|---|---|
| Fraud detection rate | 60-70% | 75-85% | 90%+ |
| False positive rate | 2-3% | 1-2% | Under 1% |
| Detection latency | 50-100ms | 20-50ms | Under 20ms |
| Cost per dollar saved | $0.05-0.10 | $0.03-0.05 | Under $0.03 |

Emerging Detection Technologies

The fraud detection landscape continues evolving. Several emerging technologies show promise for next-generation detection.

AI-Powered Behavioral Biometrics

Advanced behavioral analysis goes beyond mouse movements to capture unique biometric signatures in how users interact with devices. Typing rhythm, swipe patterns on mobile, and gait detection from device accelerometers create biometric profiles that are extremely difficult to replicate programmatically.

These systems can identify the same human user across devices and sessions based on behavioral consistency, enabling detection of fraud rings where multiple accounts exhibit identical behavioral signatures.

Zero-Knowledge Proof Identity Verification

Privacy-preserving cryptographic techniques enable verification of user properties without revealing underlying data. A user can prove they have previously verified their identity without transmitting any identifying information. This technology may enable fraud prevention that respects privacy requirements while still distinguishing verified humans from bots.

Federated Learning for Cross-Client Detection

Fraud patterns learned at one client could benefit all clients, but sharing fraud data raises privacy and competitive concerns. Federated learning enables collaborative model training in which models update locally and only aggregated, anonymized gradients are shared across participants. This architecture may enable industry-wide fraud detection intelligence without compromising individual advertiser data.
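The aggregation step at the core of federated averaging (FedAvg) is simple: each participant trains locally and sends only its updated parameters (or gradients), and a coordinator averages them weighted by each participant's local example count. The sketch uses plain lists as parameter vectors and omits the secure aggregation and privacy protections a real deployment would likely add.

```python
def federated_average(updates: list[tuple[list[float], int]]) -> list[float]:
    """Weighted average of client parameter vectors; weights = local example counts."""
    if not updates:
        raise ValueError("no client updates")
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    avg = [0.0] * dim
    for params, n in updates:
        if len(params) != dim:
            raise ValueError("all clients must share the model shape")
        for i, p in enumerate(params):
            avg[i] += p * n / total
    return avg

# Two hypothetical advertisers: 100 and 300 local training examples.
print(federated_average([([1.0, 0.0], 100), ([0.0, 1.0], 300)]))  # [0.25, 0.75]
```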

Hardware-Attested Identity

Trusted Platform Modules (TPMs) and similar hardware security components can cryptographically attest that requests originate from genuine hardware devices rather than emulated environments. As browsers and operating systems expose attestation capabilities, fraud detection can leverage hardware-level identity verification that software alone cannot spoof.

Vendor Comparison: Detection Technology Platforms

The market for fraud detection technology includes platforms at various sophistication and price levels.

ClickCease / Lunio

Technology approach: Rule-based detection with statistical anomaly detection. JavaScript tag captures device and behavioral signals. Automated IP exclusion list sync with Google Ads and Meta.

Strengths: Easy implementation, affordable pricing, effective against obvious fraud. Good for operations under $50,000/month spend.

Limitations: Less sophisticated ML capabilities, limited cross-client intelligence, primarily focused on Google and Meta platforms.

Pricing: $79-500/month based on spend volume.

CHEQ

Technology approach: Enterprise ML-based detection using proprietary models trained on billions of interactions. Behavioral analysis, device intelligence, and network-level detection combined in ensemble scoring.

Strengths: Sophisticated detection across all digital channels. Strong behavioral analysis. Comprehensive reporting and integration.

Limitations: Enterprise pricing excludes smaller operations. Implementation requires more effort than simpler solutions.

Pricing: $2,000-10,000+/month enterprise pricing.

TrafficGuard

Technology approach: Real-time ML detection with particular strength in mobile and affiliate traffic. SDK integration for mobile apps provides deep behavioral capture.

Strengths: Strong mobile detection. Affiliate fraud expertise. Multi-platform coverage.

Limitations: Desktop web detection less mature than mobile. Requires SDK integration for mobile benefits.

Pricing: $499+/month for mid-market plans.

HUMAN (formerly White Ops)

Technology approach: Deep network and behavioral analysis for bot detection. Specializes in distinguishing human from automated traffic at massive scale.

Strengths: Exceptional bot detection accuracy. Enterprise-grade scalability. Real-time decisioning.

Limitations: Focused on bot detection rather than all fraud types. Requires technical integration.

Pricing: Enterprise pricing based on volume.

Fingerprint.js

Technology approach: Device identification platform rather than complete fraud solution. Provides the fingerprinting layer that detection systems build upon.

Strengths: High identification accuracy (99.5%, as reported by the vendor). Identifies returning visitors across sessions. Flexible integration for custom detection.

Limitations: Not a complete fraud solution – must build detection logic on top. Requires engineering investment.

Pricing: Free tier available; paid plans from $99/month.

Frequently Asked Questions

What detection rate should I expect from fraud prevention technology?

Enterprise-grade solutions typically achieve 75-90% detection rates against general click fraud. The remaining fraud often involves sophisticated human-powered click farms or novel attack vectors that detection systems have not yet learned. No solution catches all fraud – the goal is catching enough that remaining fraud costs less than enhanced detection would cost.

How quickly can detection systems identify new fraud patterns?

Rule-based systems require manual updates – days to weeks to address new patterns. ML-based systems with continuous learning pipelines can identify and adapt to new patterns within hours to days, depending on how quickly new patterns generate sufficient training signal.

Does fraud detection slow down my landing pages?

Well-implemented detection adds 10-50ms of latency – imperceptible to users. Poorly implemented solutions that block page rendering on synchronous API calls can add 200ms or more, noticeably impacting user experience. Evaluate implementation architecture when selecting vendors.
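One way to keep detection off the critical rendering path is to give the scoring call a hard latency budget and fail open when the budget is exceeded. The sketch below assumes a server-side handler and a hypothetical asynchronous scoring service, simulated here by `fast_scorer` and `slow_scorer`. The 50 ms budget mirrors the upper end of the range above. `asyncio.wait_for` cancels the call once the budget expires.

```python
import asyncio
from typing import Awaitable, Callable, Optional

Scorer = Callable[[str], Awaitable[float]]


async def score_with_budget(scorer: Scorer, click_id: str,
                            budget_s: float = 0.05) -> Optional[float]:
    """Return the fraud score, or None if scoring misses the latency budget.

    None means "fail open": serve the page now instead of blocking
    rendering on a slow synchronous call.
    """
    try:
        return await asyncio.wait_for(scorer(click_id), timeout=budget_s)
    except asyncio.TimeoutError:
        return None


async def fast_scorer(click_id: str) -> float:
    """Hypothetical scoring service that responds immediately."""
    await asyncio.sleep(0)
    return 0.12


async def slow_scorer(click_id: str) -> float:
    """Hypothetical scoring service that takes 300 ms, well over budget."""
    await asyncio.sleep(0.3)
    return 0.97


async def main() -> None:
    print(await score_with_budget(fast_scorer, "click-1"))  # 0.12
    print(await score_with_budget(slow_scorer, "click-2"))  # None (fail open)


asyncio.run(main())
```

Failing open trades a little missed fraud for guaranteed page speed. Clicks that time out can still be scored after the page is served. Operations under heavy attack may prefer to fail closed instead.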

How do I know if my detection system is causing false positives?

A/B testing with detection disabled for a random sample of traffic helps estimate false positive rates. Compare conversion rates between the detection-on and detection-off groups. If the detection-on group shows significantly lower conversion rates that cannot be explained by blocked fraud, false positives may be too high.
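A standard two-proportion z-test is one reasonable way to decide whether a conversion gap is significant. The sketch below uses only the standard library. The visitor and conversion counts are hypothetical. It also assumes conversions are counted only after downstream lead validation, so that fake leads do not inflate the detection-off group.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: groups A and B convert at the same rate."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)          # common rate under the null
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))        # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical test: detection on (A) vs. detection off (B), validated conversions only.
z, p = two_proportion_z_test(conv_a=380, n_a=10_000, conv_b=420, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these counts, z comes out near -1.44 and p near 0.15. A 0.4-point gap on 10,000 visitors per group is therefore not yet conclusive. You would need a larger holdout or a longer test before concluding that false positives are too high.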

Can sophisticated fraudsters bypass machine learning detection?

Sophisticated fraudsters continuously study detection systems and engineer workarounds. ML detection makes fraud more expensive by requiring fraudsters to invest in more realistic behavior simulation, rotating infrastructure, and anti-detection techniques. This increases their costs, reducing fraud profitability and frequency even when some attacks succeed.

What is the ROI of investing in fraud detection technology?

With 14-25% fraud rates on lead generation traffic, even modest detection effectiveness generates strong ROI. A $500/month detection platform that catches 50% of fraud on $50,000 monthly spend prevents $3,500-6,250 in fraudulent clicks. That is a 7-12.5x return on the platform fee, or 600-1,150% net ROI. Enterprise solutions at $5,000/month break even once they prevent $5,000 in fraud. On the same $50,000 budget, that means catching roughly 40-71% of the $7,000-12,500 in fraudulent spend, which is below the 75-90% detection rates enterprise-grade solutions typically achieve.
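The arithmetic above can be sketched as a small helper. It uses the paragraph's own figures and assumes every prevented fraudulent click would otherwise have been billed at full cost. The helper reports both gross return (prevented fraud divided by platform cost) and net ROI (prevented fraud minus platform cost, divided by platform cost), since the two are easy to conflate.

```python
def detection_roi(monthly_spend: float, fraud_rate: float,
                  detection_rate: float, platform_cost: float) -> dict[str, float]:
    """Estimate monthly savings and returns from a fraud detection platform."""
    fraud_spend = monthly_spend * fraud_rate        # spend lost to fraud without detection
    prevented = fraud_spend * detection_rate        # portion the platform stops
    return {
        "prevented": prevented,
        "gross_return": prevented / platform_cost,  # e.g. 7.0 means 7x the fee
        "net_roi": (prevented - platform_cost) / platform_cost,
        # detection rate at which savings exactly cover the platform fee
        "breakeven_detection_rate": platform_cost / fraud_spend,
    }


for fraud_rate in (0.14, 0.25):
    small = detection_roi(50_000, fraud_rate, 0.50, 500)
    enterprise = detection_roi(50_000, fraud_rate, 0.50, 5_000)
    print(
        f"fraud {fraud_rate:.0%}: $500 platform prevents ${small['prevented']:,.0f} "
        f"({small['gross_return']:.1f}x gross, {small['net_roi']:.0%} net); "
        f"$5,000 platform breaks even at {enterprise['breakeven_detection_rate']:.0%} detection"
    )
```

This prints $3,500 and $6,250 in prevented fraud (7.0x and 12.5x gross, 600% and 1150% net). It also prints break-even detection rates of 71% and 40% for the $5,000 platform.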

Should I build custom fraud detection or buy a platform?

Operations under $100,000/month ad spend should buy platforms, because the engineering investment in custom solutions exceeds platform costs. Above $100,000/month, the economics shift: custom solutions can provide better detection, competitive differentiation, and long-term cost advantages. Hybrid approaches that combine vendor platforms with custom ML layers provide intermediate options.

How does fraud detection interact with privacy regulations?

Fraud detection typically operates under legitimate interest provisions in GDPR and similar regulations, as it protects both businesses and consumers from harmful activity. However, data retention policies, user transparency requirements, and specific implementation details require legal review. Vendor platforms typically handle much of this compliance work, while custom solutions require explicit privacy engineering. For broader privacy compliance context, see our guide on consent and PEWC requirements.

What happens when I block traffic at the ad platform level versus the landing page level?

Ad platform blocking (IP exclusion lists) prevents fraudulent clicks from occurring – you do not pay for blocked traffic. Landing page blocking occurs after the click – you pay for the click but prevent the fraudulent lead from entering your system. Both are necessary: platform blocking reduces spend waste, landing page blocking catches fraud that bypasses platform detection.

How do I evaluate which fraud detection platform is right for my operation?

Request trials from multiple vendors and run them simultaneously against the same traffic. Compare detection rates, false positive rates, and integration complexity. Pay particular attention to how each platform handles traffic in your specific vertical – fraud patterns differ significantly between insurance, legal, solar, and home services.
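When trials run side by side, you can score each vendor's flags against the same ground truth. The sketch below assumes you hold fraud labels for a sample of shared click IDs, for example from downstream lead validation or manual review. The IDs, labels, and the `evaluate_vendor` helper are hypothetical. Recall corresponds to the detection rate, and the false positive rate to legitimate traffic wrongly flagged.

```python
from dataclasses import dataclass


@dataclass
class TrialMetrics:
    precision: float            # share of flagged clicks that were truly fraud
    recall: float               # share of confirmed fraud that was flagged
    false_positive_rate: float  # share of legitimate clicks wrongly flagged


def evaluate_vendor(flags: dict[str, bool], labels: dict[str, bool]) -> TrialMetrics:
    """Score one vendor's flags against ground-truth labels for the same click IDs."""
    tp = fp = fn = tn = 0
    for click_id, is_fraud in labels.items():
        flagged = flags.get(click_id, False)   # clicks the vendor never flagged count as clean
        if flagged and is_fraud:
            tp += 1
        elif flagged:
            fp += 1
        elif is_fraud:
            fn += 1
        else:
            tn += 1
    return TrialMetrics(
        precision=tp / (tp + fp) if tp + fp else 0.0,
        recall=tp / (tp + fn) if tp + fn else 0.0,
        false_positive_rate=fp / (fp + tn) if fp + tn else 0.0,
    )


labels = {"c1": True, "c2": True, "c3": False, "c4": False, "c5": True}
vendor_a = {"c1": True, "c2": True, "c3": True}
vendor_b = {"c1": True, "c5": True}
for name, flags in (("Vendor A", vendor_a), ("Vendor B", vendor_b)):
    m = evaluate_vendor(flags, labels)
    print(f"{name}: precision {m.precision:.2f}, recall {m.recall:.2f}, "
          f"FPR {m.false_positive_rate:.2f}")
```

A real comparison needs far more labeled clicks than this toy example, ideally segmented by vertical.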

Key Takeaways

Click fraud detection has evolved through three generations: rule-based systems that catch obvious fraud, statistical anomaly detection that identifies pattern deviations, and machine learning models that capture complex fraud signals across hundreds of features. Modern detection combines all three approaches.

Core detection technologies include IP intelligence, device fingerprinting, behavioral analysis, and network protocol analysis. Each catches different fraud types. Comprehensive detection requires layered implementation that examines traffic from multiple perspectives.

Machine learning fraud detection requires careful architecture: feature engineering that captures fraud signals, ensemble models that combine complementary approaches, training pipelines that handle imbalanced data and temporal drift, and production deployment that maintains low latency.

Implementation complexity scales with operation size. Small operations benefit from turnkey SaaS solutions at $79-500/month. Enterprise operations may justify custom detection systems that provide competitive differentiation and long-term cost advantages.

Detection effectiveness is measurable through both technical metrics (recall, precision, AUC) and business metrics (fraud savings, cost per dollar saved, lead quality improvement). Setting appropriate thresholds involves balancing fraud detection against false positive costs.

Emerging technologies including behavioral biometrics, zero-knowledge proofs, and hardware attestation may enable next-generation detection that is more accurate while remaining privacy-preserving.

The ROI of fraud detection investment is typically 500-1,500%. Even modest detection effectiveness generates strong returns given the 14-25% fraud rates that affect lead generation traffic. The question is not whether to invest in detection, but how much sophistication your operation requires.

Sources

- Juniper Research: Digital Advertising Fraud Study - Research supporting the $84 billion annual ad fraud estimate and 14-25% click fraud rates referenced in this article.
- ClickCease - Click fraud prevention platform offering real-time detection and IP exclusion list management for Google Ads and Meta.
- CHEQ - Enterprise-grade fraud detection platform using ML-based behavioral analysis across digital channels.
- TrafficGuard - Multi-platform fraud prevention with particular strength in mobile and affiliate traffic detection.
- ActiveCampaign - Marketing automation platform with fraud detection integration capabilities for lead validation workflows.

This guide reflects click fraud detection technology as of late 2024 and early 2025. Detection capabilities, vendor offerings, and fraud techniques continue to evolve. Regular review of detection effectiveness and vendor capabilities helps ensure continued protection.