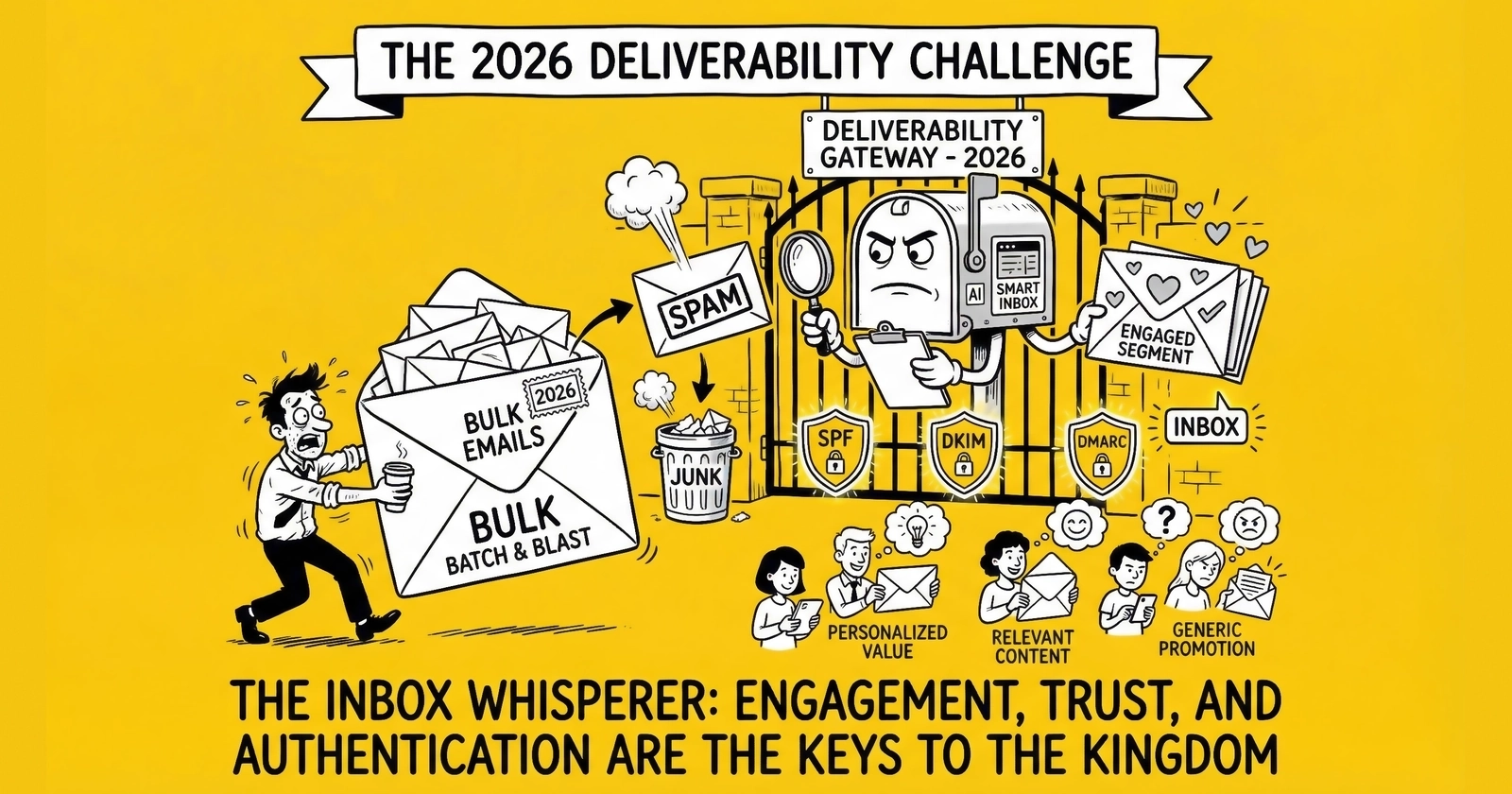

Deliverability is not “email marketing.” It’s whether your infrastructure is allowed to reach a human inbox.

Email deliverability has never been more challenging – or more expensive to ignore.

Global inbox placement sits around 84%, which means one in six legitimate marketing emails fails to reach its intended recipient. That number hides the part operators care about: the gap between top and average performers. The senders who run disciplined programs can hit 95%+ inbox placement, while marginal programs sit in the 80 – 90% band, and struggling senders can fall under 70%.

At 100,000 monthly emails, the gap between 95% and 80% inbox placement is 15,000 messages that never arrive. In lead generation, those aren’t “missed impressions.” They’re missed confirmations, missed follow-ups, missed reactivation attempts, and missed revenue. Deliverability is not a vanity metric. It’s whether your funnel works after you already paid for the lead.
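To make the arithmetic concrete, here is a minimal Python sketch. It assumes inbox placement is a single fraction applied uniformly to monthly volume, and the function names are illustrative rather than taken from any tool.

```python
def missed_messages(monthly_volume: int, placement_rate: float) -> int:
    """Messages that never reach the inbox at a given inbox placement rate."""
    return round(monthly_volume * (1 - placement_rate))


def placement_gap(monthly_volume: int, your_rate: float, benchmark_rate: float) -> int:
    """Extra messages you lose compared with a sender at the benchmark rate."""
    return missed_messages(monthly_volume, your_rate) - missed_messages(monthly_volume, benchmark_rate)


volume = 100_000
for rate in (0.95, 0.84, 0.80, 0.70):
    print(f"{rate:.0%} inbox placement: {missed_messages(volume, rate):,} messages miss the inbox")

print(f"Gap, 80% vs 95%: {placement_gap(volume, 0.80, 0.95):,} messages per month")
```

Swap in your own volume and measured placement to see what the gap costs in messages. Multiplying by your per-message conversion value turns that count into revenue.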

The operational problem is that most teams don’t have instrumentation that matches the reality. Many programs still treat “delivered” as success – even though delivered only means a server accepted the message. That message can still be routed to spam or throttled without your dashboards screaming.

One survey finding shows how deep the confusion runs: 88% of senders can’t correctly define delivery rate. That definitional gap becomes a diagnostic gap. Teams see “delivered” stay flat while conversions drop. They then blame lead quality, copy, or channel mix, when the underlying issue is inbox placement.

What changed is not only that inbox providers got “stricter.” The entire stack moved. Authentication moved from “recommended” to “enforced” for bulk senders, with real rejection behavior. Privacy changes made legacy engagement metrics unreliable, forcing new measurement discipline. AI spam filtering became more behavioral and more adaptive, punishing patterns more than individual messages. Regulatory pressure increased the cost of sloppy consent and sloppy unsub handling.

The scale of provider enforcement also changed what “bad email” looks like. Gmail alone blocks 100 million additional spam messages daily using AI-powered detection. Microsoft reported a 6× improvement in Business Email Compromise detection in Q1 2025 through behavioral analysis. Yahoo’s network maintains around 86% average deliverability, with a filtering approach that emphasizes domain reputation. You don’t have to love the details to see the direction: providers are investing, learning, and tightening.

This guide explains the current deliverability landscape and the playbooks disciplined operators use to stay in the inbox in 2026.

If you haven’t implemented the bulk sender authentication requirements, start with our Email Authentication Compliance Guide. Deliverability is easier to maintain than to recover, but only once you’re compliant.

The Current Deliverability Landscape

Understanding deliverability in 2026 starts with two clarifications teams still get wrong.

First, “delivered” is not “inbox.” Delivered means a receiving server accepted the message. That message can still be routed to spam, silently throttled, or filtered from view. Second, “one bad campaign” can contaminate a domain’s reputation. Providers respond to patterns. They don’t grade your emails individually.
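This distinction is easy to lose in a dashboard, so here is a minimal Python sketch that computes both rates from the same send. The field names and counts are hypothetical. Inbox counts typically come from seed-list or panel measurement, because providers don’t report folder placement for individual messages. Measuring inbox placement against everything sent is also an assumption; some tools divide by accepted mail instead.

```python
from dataclasses import dataclass


@dataclass
class CampaignOutcome:
    sent: int      # messages your system attempted to deliver
    accepted: int  # messages a receiving server accepted (what "delivered" usually counts)
    inbox: int     # accepted messages observed in the inbox, e.g. from seed or panel data

    @property
    def delivery_rate(self) -> float:
        return self.accepted / self.sent

    @property
    def inbox_placement_rate(self) -> float:
        # Assumption: measured against everything sent, not just accepted mail.
        return self.inbox / self.sent

    @property
    def filtered_after_acceptance(self) -> int:
        # Accepted by the server, then routed to spam or otherwise kept out of view.
        return self.accepted - self.inbox


outcome = CampaignOutcome(sent=10_000, accepted=9_800, inbox=8_200)
print(f"Delivery rate:         {outcome.delivery_rate:.1%}")
print(f"Inbox placement rate:  {outcome.inbox_placement_rate:.1%}")
print(f"Accepted but filtered: {outcome.filtered_after_acceptance:,}")
```

In this example, a 98% delivery rate hides 1,600 accepted messages that never reached the inbox.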

This is why deliverability failures feel like “mystery performance drops” inside businesses that don’t instrument email as a delivery pipeline.

A common pattern looks like this: your sending volume is stable, acquisition is stable, and your ESP dashboard shows “delivered” holding steady. But reply rates fall, confirmation emails “don’t arrive,” and the sales team starts saying lead quality is down. What changed wasn’t your lead source. What changed was placement. The mail was accepted, then filtered.

The operational takeaway is uncomfortable but useful: if you only look at delivered and open rate, you can spend weeks optimizing the wrong layer. The faster diagnostic is always the same – what do bounces, deferrals, and provider-domain outcomes look like right now? Inbox providers enforce by throttling and filtering first, and your pipeline metrics show it before your revenue dashboard does.
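As a starting point for that diagnostic, here is a minimal sketch that tallies outcomes per recipient domain from SMTP reply codes. The 2xx/4xx/5xx classes follow RFC 5321. The `(address, reply_code)` event format and the alert thresholds are hypothetical, because every ESP exports delivery logs differently.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def classify(reply_code: int) -> str:
    """Map an SMTP reply code to an outcome using the RFC 5321 reply classes."""
    if 200 <= reply_code < 300:
        return "accepted"
    if 400 <= reply_code < 500:
        return "deferred"  # transient failure: the sending server will retry
    if 500 <= reply_code < 600:
        return "rejected"  # permanent failure: bounce or policy block
    return "unknown"


def outcomes_by_domain(events: Iterable[Tuple[str, int]]) -> Dict[str, Counter]:
    """Tally outcomes per recipient domain from (address, reply_code) events."""
    table: Dict[str, Counter] = defaultdict(Counter)
    for address, code in events:
        domain = address.rsplit("@", 1)[-1].lower()
        table[domain][classify(code)] += 1
    return table


def flag_domains(table, max_deferral_rate=0.05, max_reject_rate=0.02):
    """Return {domain: (deferral_rate, reject_rate)} for domains over either hypothetical threshold."""
    flagged = {}
    for domain, counts in table.items():
        total = sum(counts.values())
        deferral = counts["deferred"] / total
        reject = counts["rejected"] / total
        if deferral > max_deferral_rate or reject > max_reject_rate:
            flagged[domain] = (round(deferral, 3), round(reject, 3))
    return flagged


events = [
    ("a@gmail.com", 250), ("b@gmail.com", 250), ("c@gmail.com", 421),
    ("d@yahoo.com", 250), ("e@yahoo.com", 250),
    ("f@outlook.com", 550), ("g@outlook.com", 250),
]
print(flag_domains(outcomes_by_domain(events)))
```

Grouping by provider domain matters because enforcement usually starts at one provider. A blended average across all domains can hide a single provider that has started throttling.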

Global Deliverability Metrics

Global inbox placement hovers around 84%. But the distribution is the real story:

- Top-performing senders achieve 95%+ inbox placement.

- Average performers see 80 – 90% placement.

- Struggling senders may see less than 70% reach the inbox.

Spam placement rates increased sharply through 2024, and filtering kept tightening in 2025 as Google, Yahoo, and Microsoft implemented and hardened bulk sender enforcement.

One concrete signal of the tightening: spam placement rates nearly doubled from Q1 to Q4 2024. Providers didn’t suddenly “get mean.” They responded to a threat environment where malicious mail volume and sophistication were rising, and where recipient trust depends on keeping inboxes clean. Legitimate senders that behave like marginal senders get filtered like marginal senders.

What Senders Struggle With Most

Senders consistently report three problems: staying out of spam folders, maintaining list hygiene, and reducing bounces.

One deliverability survey quantified the pain:

- 47.9% of senders cite staying out of spam as their top challenge.

- 33.8% struggle with ongoing list quality.

- 28.4% face persistent bounce issues.

The uncomfortable reality is that many teams don’t have a deliverability problem – they have a definition problem. If you don’t distinguish “delivery” from “inbox placement,” you can’t diagnose why revenue dropped when email “looked fine.”

How Major Providers Filter Email

Each major mailbox provider employs sophisticated filtering, but their approaches differ.

Gmail uses TensorFlow-based AI that blocks over 100 million additional spam messages daily. Its RETVec system detects adversarial text manipulation: homoglyphs, leetspeak substitutions, and intentional misspellings designed to evade filters. Gemini Nano provides on-device protection against novel scam patterns. Gmail’s filtering increasingly emphasizes engagement signals: messages from senders that recipients regularly interact with receive preferential treatment.

Microsoft significantly tightened filtering in May 2025, implementing AI-based detection that now rejects (not just filters) non-compliant email. Its improved Business Email Compromise detection shows a 6× improvement in identifying sophisticated impersonation attacks.

Yahoo filters primarily by domain rather than IP address, making domain reputation the critical factor. Their approach maintains approximately 86% average deliverability across their network.

That nuance matters operationally. If you keep telling yourself “we have a dedicated IP so we’re safe,” but your domain reputation is poor, you’re optimizing the wrong variable.

The Reputation Signals Hierarchy

Providers weigh multiple signals, but the hierarchy is stable:

- Complaint rates – users explicitly marking mail as spam is the strongest negative signal.

- Engagement metrics – opens, clicks, replies, forwarding, time spent reading.

- Authentication compliance – SPF/DKIM/DMARC passing and alignment.

- Domain reputation – historical sending behavior tied to your domain.

- IP reputation – trust scores for your sending servers.

- Bounce rates and list hygiene – high bounces signal low-quality lists.

- Sending pattern consistency – sudden volume spikes trigger suspicion.

This hierarchy explains the most painful lesson in deliverability: perfect authentication does not guarantee inbox placement if recipients keep telling providers your mail is unwanted.
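Because complaints sit at the top of the hierarchy, they deserve an explicit threshold check. The sketch below assumes you have complaint counts from feedback loops or provider dashboards. The 0.1% target and 0.3% ceiling follow Gmail’s published bulk sender guidance, and the function names are illustrative. Gmail computes its own spam rate in Postmaster Tools using its own denominator, so a rate computed from your data is an approximation.

```python
def complaint_rate(complaints: int, delivered: int) -> float:
    """Share of delivered messages that recipients reported as spam."""
    return complaints / delivered if delivered else 0.0


def complaint_status(rate: float, target: float = 0.001, ceiling: float = 0.003) -> str:
    """Classify a complaint rate against a target (0.1%) and a hard ceiling (0.3%)."""
    if rate >= ceiling:
        return "critical"
    if rate >= target:
        return "warning"
    return "ok"


for complaints in (45, 120, 200):
    rate = complaint_rate(complaints, 60_000)
    print(f"{complaints} complaints / 60,000 delivered = {rate:.3%} -> {complaint_status(rate)}")
```

Setting the warning level well below the ceiling is deliberate. Passing the target should trigger list and frequency changes while there is still headroom.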

Apple Mail Privacy Protection: The Open Rate Revolution

Apple Mail Privacy Protection (MPP) broke the email measurement model many programs were built on. If your deliverability workflow still depends on “opens,” you are operating with unreliable instrumentation.

MPP, introduced with iOS 15 in September 2021, changes the meaning of an open by pre-loading tracking pixels in ways that don’t reflect human behavior. The result is predictable: open rates become inflated and less correlated with actual engagement.

The mechanics matter because they explain why “open rate optimization” stopped being a stable discipline. MPP can trigger pixel loads automatically, which means a message can register as opened even when a human never read it. For operators who used opens as a primary segmentation signal, that change doesn’t just affect reporting – it affects targeting.

How MPP Works

When a user enables Mail Privacy Protection on an Apple device:

- Apple’s servers preload email content, including tracking pixels, regardless of whether the user opens the email.

- This preloading occurs through Apple proxy servers, masking the recipient’s IP address.

- The tracking pixel registers as “opened” even if the user never views the message.

- Location information derived from IP addresses becomes unreliable.

The result is blunt: open rates for Apple Mail users approach 100%, which makes the metric meaningless for a substantial part of most audiences.

Adoption and Market Impact

MPP adoption among Apple Mail users exceeds 95%. With Apple Mail accounting for roughly half of all email opens (49.29% in Litmus data from January 2025), this isn’t a niche measurement issue. It reshapes how most programs interpret performance.

One industry indicator of the distortion: the DMA reported that open rates jumped from 19% in 2021 (pre-MPP) to 31.83% in 2022 (post-MPP). That rise of nearly 13 percentage points, about two-thirds in relative terms, wasn’t a sudden global improvement in email marketing. It reflected artificial inflation from MPP.

Metrics Made Unreliable

MPP impacts more than “open rate.” It breaks adjacent metrics and workflows that depended on opens.

Open rates become inflated to the point of meaninglessness for Apple Mail users. Aggregate open rates combining Apple and non-Apple users become unreliable. Click-to-open rate (CTOR) collapses as a diagnostic tool because the denominator is inflated. Subject line A/B testing that optimizes for opens can’t reliably measure Apple Mail preferences. Send time optimization based on opens becomes polluted because the “open time” reflects Apple’s prefetch timing, not user behavior. IP-based personalization loses signal because Apple masks IP-derived location.
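A simple model shows why aggregate opens and CTOR both break. The sketch below rests on explicit assumptions: MPP recipients record an “open” close to 100% of the time regardless of reading; everyone shares the same underlying true open rate; and the 45% MPP share and the engagement figures are hypothetical.

```python
def reported_open_rate(true_open_rate: float, mpp_share: float, mpp_recorded_rate: float = 1.0) -> float:
    """Blended open rate when part of the audience has opens recorded by prefetching.

    Assumes MPP recipients register an "open" at mpp_recorded_rate (near 100%)
    regardless of reading, while everyone else registers opens at the true rate.
    """
    return mpp_share * mpp_recorded_rate + (1 - mpp_share) * true_open_rate


def click_to_open_rate(click_rate: float, open_rate: float) -> float:
    return click_rate / open_rate


true_open, click = 0.20, 0.03  # hypothetical true engagement
mpp_share = 0.45               # hypothetical share of recipients behind MPP

reported = reported_open_rate(true_open, mpp_share)
print(f"Reported open rate: {reported:.1%} (true: {true_open:.0%})")
print(f"CTOR on reported opens: {click_to_open_rate(click, reported):.1%}")
print(f"CTOR on true opens:     {click_to_open_rate(click, true_open):.1%}")
```

Clicks are the same in both CTOR lines. Only the inflated denominator changes, which is why CTOR stops working as a diagnostic.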

What This Means Operationally

Two mistakes happen after MPP:

- Teams keep using opens as a segmentation signal and slowly poison their own targeting.

- Teams throw up their hands and stop measuring engagement, which makes reputation drift invisible.

The operator response is not to abandon engagement tracking. It’s to promote signals that still mean something:

- clicks

- replies (high signal where they exist)

- conversions and downstream actions

- unsubscribe rates and complaint proxies

MPP did not remove the need for segmentation. It removed a lazy proxy for segmentation.

The New Measurement Discipline

If you need a mental model: MPP pushes email programs toward behavior-based measurement rather than pixel-based measurement. That’s closer to how providers judge you anyway. Providers don’t care about your dashboard. They care about recipient behavior.

This also changes how you interpret tests. If opens are inflated, A/B testing subject lines based on open rate can become a noisy exercise. The operator move is to promote outcome metrics – clicks, conversions, downstream actions – and to treat “open” as one weak signal among several, not the controlling truth.

Adapting to the Post-MPP World

Programs that adapted did not “stop measuring.” They changed which signals they trusted.

Clicks become the primary engagement metric because they remain reliable across platforms. Reply rate can be high-signal for certain message types where clicks are uncommon. Conversion tracking matters more than any proxy metric. Segmenting by client – separating Apple Mail users from others in reporting – produces cleaner data for the non-Apple segment. Zero-party data collection (preference centers, direct surveys) becomes more valuable because it captures stated preferences rather than inferred behavior. And double opt-in becomes more important because when engagement is harder to interpret, initial list quality matters more.

The practical shift is to treat “engaged” as a composite signal and to stop treating opens as a standalone truth.
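A composite definition can be as simple as ranking signals by reliability. This minimal sketch assumes you store the last date of each event type per subscriber. The 90-day window and the tier names are hypothetical choices. Opens are kept, but they only count as a weak fallback.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Subscriber:
    email: str
    last_click: Optional[date] = None
    last_reply: Optional[date] = None
    last_conversion: Optional[date] = None
    last_open: Optional[date] = None


def engagement_tier(sub: Subscriber, today: date, window_days: int = 90) -> str:
    """Classify a subscriber using strong signals first and opens only as a weak fallback."""
    cutoff = today - timedelta(days=window_days)

    def recent(d: Optional[date]) -> bool:
        return d is not None and d >= cutoff

    if any(recent(d) for d in (sub.last_click, sub.last_reply, sub.last_conversion)):
        return "engaged"
    if recent(sub.last_open):
        return "open-only"  # could be an MPP prefetch; not proof a human read anything
    return "inactive"


today = date(2026, 1, 31)
for sub in (
    Subscriber("clicker@example.com", last_click=date(2026, 1, 10)),
    Subscriber("opener@example.com", last_open=date(2026, 1, 20)),
    Subscriber("lapsed@example.com", last_click=date(2025, 6, 1)),
):
    print(sub.email, engagement_tier(sub, today))
```

Keeping “open-only” as its own tier lets you report on it without letting it drive targeting decisions.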

Engagement-Based Filtering: Virtuous and Vicious Cycles

Providers use engagement signals to infer whether recipients want your mail. That creates feedback loops that compound success and compound failure.

What Providers Measure

Providers evaluate behavior, not just message content:

- whether recipients open messages from you

- time spent reading

- click activity

- reply behavior

- forwarding

- moving messages to folders

- marking as spam or not spam

- deleting without opening

You cannot see all of these signals. But you can infer the direction when your engaged segments remain stable and your marginal segments collapse.

The Virtuous Cycle

High-quality programs experience:

- Relevant content and targeting produce engagement.

- Engagement signals trust to providers.

- Trust produces inbox placement.

- Inbox placement produces more engagement.

- Reputation strengthens over time.

This is why good senders often get away with “less perfect” technical setups – they’ve banked reputation through consistent recipient approval.

The Vicious Cycle

Struggling programs experience the inverse:

- Poor targeting or low-intent acquisition produces low engagement.

- Low engagement signals untrustworthiness.

- Providers increase spam filtering and throttling.

- Fewer recipients see mail, which reduces engagement opportunities.

- Reputation degrades over time.

Breaking the vicious cycle usually requires intervention that feels painful: aggressive list cleaning, frequency reduction, and tighter segmentation.

In the worst cases, the intervention extends beyond list cleaning. Teams may need to reimagine content and offers, and occasionally make infrastructure changes – new domains or IPs with fresh reputation – because the existing reputation debt is too heavy to unwind quickly. This is not a “reset button.” It is a last resort for programs that let the cycle run long enough to become structural.

Sunset Policies: The Discipline Most Teams Avoid

Sunset policies remove chronically disengaged recipients from active mailing. Only 24% of senders use sunset policies consistently, largely because teams resist shrinking lists. For detailed monitoring procedures, see our guide to bulk sender compliance monitoring.

But the math favors the disciplined operator. Mailing 100,000 addresses with 10% engaged and 90% disengaged often produces worse outcomes than mailing 50,000 addresses with 80% engaged. The smaller list achieves better placement, higher engagement, and more conversion opportunity because providers trust the stream.

Sunset policies aren’t about being “nice to the inbox.” They’re about protecting the asset you actually need: the ability to reach people who still respond.
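A sunset rule is easy to express once “engaged” is defined. This minimal sketch assumes each address carries a sign-up date and a last-engagement date, where engagement means a click, reply, or conversion rather than an open. The 180-day window and the grace period for new sign-ups are hypothetical policy choices, not a standard.

```python
from datetime import date, timedelta
from typing import Dict, List, Optional, Tuple


def split_for_sunset(
    records: Dict[str, Tuple[date, Optional[date]]],
    today: date,
    inactive_days: int = 180,
) -> Tuple[List[str], List[str]]:
    """Split addresses into (keep_mailing, sunset).

    records maps email -> (signup_date, last_engaged_date or None), where
    "engaged" means a click, reply, or conversion rather than an open.
    """
    cutoff = today - timedelta(days=inactive_days)
    keep, sunset = [], []
    for email, (signed_up, last_engaged) in records.items():
        recently_engaged = last_engaged is not None and last_engaged >= cutoff
        still_new = signed_up >= cutoff  # grace period so new sign-ups aren't sunset
        (keep if recently_engaged or still_new else sunset).append(email)
    return keep, sunset


records = {
    "a@example.com": (date(2024, 1, 1), date(2026, 2, 1)),
    "b@example.com": (date(2024, 1, 1), date(2025, 3, 1)),
    "c@example.com": (date(2026, 1, 15), None),
    "d@example.com": (date(2023, 5, 1), None),
}
keep, sunset = split_for_sunset(records, today=date(2026, 3, 1))
print("keep mailing:", keep)
print("sunset:", sunset)
```

Sunset addresses can be moved to a suppression list or a low-frequency reactivation track. Either way, they leave the main stream.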

AI and Machine Learning in Spam Filtering

Spam filtering evolved from keyword rules to behavioral prediction. That matters because it makes deliverability feel less deterministic – but it also makes it more predictable if you understand the inputs.

The Evolution of Filtering

Early spam filters relied on relatively simple techniques like keyword blocking, header analysis, blacklists, and heuristic scoring. Modern AI-powered filtering adds layers that are harder to game – behavioral analysis from recipient actions, natural language understanding of intent, network analysis that identifies coordinated campaigns, and faster adaptation to emerging techniques.

Gmail’s AI Capabilities (Why “Tricks” Stop Working)

Gmail’s filtering uses machine learning at massive scale and includes defenses against adversarial manipulation – the tactics spammers use to evade filters by obfuscating text. This is why “avoid spam words” advice is outdated. Providers aren’t looking for words. They’re looking for patterns and intent.

Gmail’s filtering stack includes TensorFlow models trained on massive message volumes to identify spam patterns, RETVec (Resilient and Efficient Text Vectorizer) designed to detect adversarial text meant to evade filters (character substitutions and Unicode tricks), Gemini Nano for on-device protection against novel scam patterns, and continuous learning driven by user feedback and emerging threats.

The AI-Generated Content Challenge

AI-generated content increased the sophistication of phishing and spam. One study of phishing mail between September 2024 and February 2025 found 82.6% of phishing emails contained AI-generated content – more convincing, more personalized, and harder to detect than older spam.

The consequence for legitimate senders is subtle but real: providers become more conservative because the baseline threat is higher. That raises the cost of sloppy acquisition and sloppy list hygiene, because providers have less tolerance for streams that resemble abusive patterns. In the same research window, Gmail and Outlook showed greater vulnerability to AI-generated phishing than Yahoo – a reminder that filtering is not uniform across providers, and that “safe on one provider” doesn’t mean safe everywhere.

Implications for Legitimate Senders

For legitimate programs, AI filtering means:

- content quality and relevance matter more than tricks

- consistency helps – sudden changes in patterns trigger scrutiny

- engagement is paramount because it’s the provider’s strongest “recipient wants this” signal

- deceptive practices backfire because providers can detect manipulation and recipients complain faster

AI didn’t make deliverability random. It made it more dependent on recipient reaction.

Regulatory Frameworks Affecting Deliverability

Email regulatory frameworks directly impact deliverability by shaping consent practices, list management, and sender behavior. Compliance is both a legal requirement and a deliverability best practice.

CAN-SPAM Act (United States)

The CAN-SPAM Act of 2003 establishes baseline requirements for commercial email in the United States.

Requirements include:

- no false or misleading header information

- no deceptive subject lines

- identification of the message as an advertisement

- a valid physical postal address

- clear unsubscribe mechanism

- unsubscribe requests honored within 10 business days

Enforcement is not theoretical. The FTC can impose penalties of up to $50,120 per email, a ceiling that is adjusted annually for inflation. There is no aggregate cap: a single campaign violating CAN-SPAM across millions of recipients could theoretically result in billions in penalties. CAN-SPAM is an opt-out model: you may email recipients until they unsubscribe, provided other requirements are met. It is widely considered a floor, not a ceiling, and meeting CAN-SPAM doesn’t guarantee deliverability.
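The 10-business-day rule is a backstop, not a target. Gmail’s bulk sender guidelines ask senders to honor unsubscribes within two days. This minimal sketch computes the legal deadline. Business days are assumed to be Monday through Friday, and holidays are only skipped if you pass them in.

```python
from datetime import date, timedelta
from typing import FrozenSet


def unsubscribe_deadline(received: date, business_days: int = 10,
                         holidays: FrozenSet[date] = frozenset()) -> date:
    """Latest date to honor an opt-out, counting only Monday-Friday non-holidays."""
    deadline = received
    remaining = business_days
    while remaining > 0:
        deadline += timedelta(days=1)
        if deadline.weekday() < 5 and deadline not in holidays:  # 0-4 = Mon-Fri
            remaining -= 1
    return deadline


received = date(2026, 3, 2)  # a Monday
print("CAN-SPAM deadline:", unsubscribe_deadline(received))
```

If your suppression sync runs slower than the legal deadline, that is a compliance gap. If it runs slower than two days, it is a deliverability gap.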

GDPR (European Union)

The General Data Protection Regulation imposes stricter requirements and an opt-in model.

Requirements include:

- explicit opt-in consent (no pre-checked boxes)

- clear explanation of what recipients will receive

- documentation of consent

- right to access personal data

- right to erasure (“right to be forgotten”)

- data portability requirements

Enforcement includes maximum fines of €20 million or 4% of global annual turnover, whichever is higher. GDPR consent practices often produce higher-quality lists. Recipients who explicitly opt in are more engaged, reducing spam complaints and improving deliverability.

CASL (Canada)

Canada’s Anti-Spam Legislation is among the strictest in the world.

Requirements include:

- express or implied consent required

- clear identification of sender

- valid mailing address

- functional unsubscribe mechanism

- unsubscribe requests honored within 10 business days

CASL also defines consent types, some of which expire:

- express consent: never expires unless withdrawn

- implied consent (from purchase): expires after 2 years

- implied consent (from inquiry): expires after 6 months
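Consent expiry is easy to lose track of in a CRM, so it is worth encoding. This sketch uses the simplified windows listed above. The consent-type labels are hypothetical, and so is the boundary handling: consent is treated as expired on the anniversary date, with month-end dates clamped.

```python
import calendar
from datetime import date

# Expiry windows for implied consent as listed above; express consent has none.
IMPLIED_CONSENT_MONTHS = {"implied_purchase": 24, "implied_inquiry": 6}


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the end of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def consent_is_valid(consent_type: str, obtained: date, today: date,
                     withdrawn: bool = False) -> bool:
    if withdrawn:
        return False  # any consent ends once it is withdrawn
    if consent_type == "express":
        return True   # no expiry unless withdrawn
    expires = add_months(obtained, IMPLIED_CONSENT_MONTHS[consent_type])
    return today < expires


today = date(2026, 2, 27)
print(consent_is_valid("express", date(2019, 6, 1), today))
print(consent_is_valid("implied_inquiry", date(2025, 8, 31), today))
print(consent_is_valid("implied_purchase", date(2024, 1, 15), today))
```

Running a check like this before each send keeps expired implied-consent contacts from slipping into campaigns.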

Enforcement includes penalties up to $10 million CAD per violation for organizations.

The Deliverability – Compliance Connection

Regulatory compliance and deliverability are deeply connected. Proper consent produces engaged subscribers, which reduces spam complaints. Easy unsubscription reduces complaints, because recipients use the unsubscribe link instead of the spam button. List hygiene requirements improve engagement by removing dead and unwilling recipients.
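Making unsubscribe easy starts in the message headers. Gmail and Yahoo’s bulk sender requirements call for one-click unsubscribe as defined in RFC 8058. Here is a minimal sketch using Python’s standard `email` package; the addresses and URLs are placeholders. RFC 8058 also requires a DKIM signature covering both `List-Unsubscribe` headers, and this sketch does not add one.

```python
from email.message import EmailMessage


def build_marketing_message(sender: str, recipient: str, subject: str, body: str,
                            unsubscribe_url: str, unsubscribe_mailto: str) -> EmailMessage:
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    # RFC 2369: machine-readable unsubscribe locations that mail clients can surface.
    msg["List-Unsubscribe"] = f"<{unsubscribe_url}>, <{unsubscribe_mailto}>"
    # RFC 8058: one HTTPS POST to the URL above unsubscribes, with no further steps.
    msg["List-Unsubscribe-Post"] = "List-Unsubscribe=One-Click"
    msg.set_content(body)
    return msg


msg = build_marketing_message(
    sender="news@news.example.com",
    recipient="reader@example.org",
    subject="This week's update",
    body="Hello! ...",
    unsubscribe_url="https://news.example.com/unsubscribe/abc123",
    unsubscribe_mailto="mailto:unsubscribe@news.example.com?subject=abc123",
)
print(msg["List-Unsubscribe"])
print(msg["List-Unsubscribe-Post"])
```

The endpoint behind the HTTPS URL must accept a POST and process the opt-out without asking the recipient to log in or confirm.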

Providers reward legitimacy. Regulators punish illegitimacy. Operators who treat compliance as minimum acceptable behavior tend to experience fewer deliverability emergencies because their lists contain people who actually want the mail. For a broader view of how privacy regulations affect lead operations beyond email, see our coverage of GDPR, CCPA, and privacy technology in lead generation.

Emerging Trends and Future Requirements

The requirement trajectory is consistent: more identity enforcement, less tolerance for ambiguous compliance, and more weight on engagement behavior.

DMARC Policy Strengthening

Publishing a DMARC record with p=none can satisfy minimum requirements in some contexts, but it doesn’t provide enforcement. That minimum is already moving.

Current state: only 7.6% of domains enforce DMARC (p=quarantine or p=reject). The vast majority publish p=none records that provide visibility but no protection.

Expected evolution: requirements will escalate to mandate p=quarantine or p=reject, especially for bulk senders. The operator move is to progress policies deliberately rather than waiting for mandates – monitor (p=none), fix alignment and unknown senders, then ramp enforcement.
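Knowing where a domain sits on that path starts with reading its published record. This minimal sketch parses a DMARC TXT record string and labels the stage. Fetching the record from `_dmarc.<domain>` needs a DNS library and is not shown. The stage names are illustrative, and the `pct` handling follows RFC 7489.

```python
from typing import Dict


def parse_dmarc(record: str) -> Dict[str, str]:
    """Split a DMARC TXT record ("v=DMARC1; p=none; ...") into tag/value pairs."""
    tags: Dict[str, str] = {}
    for part in record.split(";"):
        key, sep, value = part.strip().partition("=")
        if sep:
            tags[key.strip().lower()] = value.strip()
    if tags.get("v") != "DMARC1":
        raise ValueError("not a DMARC record")
    return tags


def enforcement_stage(record: str) -> str:
    """Place a published policy on the monitor -> ramp -> enforce path."""
    tags = parse_dmarc(record)
    policy = tags.get("p", "none").lower()
    pct = int(tags.get("pct", "100"))  # RFC 7489: share of failing mail the policy applies to
    if policy not in ("quarantine", "reject"):
        return "monitoring"
    return "enforcing" if pct == 100 else "ramping"


for rec in (
    "v=DMARC1; p=none; rua=mailto:dmarc-reports@example.com",
    "v=DMARC1; p=quarantine; pct=25; rua=mailto:dmarc-reports@example.com",
    "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com",
):
    print(f"{enforcement_stage(rec):<10} {rec}")
```

Keep the `rua` reporting address at every stage. The aggregate reports it collects are how you confirm that legitimate streams still pass before tightening the policy.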

Timeline prediction: by mid-2026, major providers may require enforcement-level DMARC for bulk senders. The Gmail, Yahoo, and Microsoft bulk sender requirements already enforce baseline DMARC – the question is how quickly they move toward mandatory enforcement policies.

PCI DSS v4.0 DMARC Requirements

PCI DSS v4.0 introduces explicit email authentication requirements for organizations handling payment card data. Its future-dated requirements became mandatory on March 31, 2025:

“The entity implements controls to prevent use of phished credentials, including DMARC with a policy that prevents unauthorized use of the entity’s email domain.”

This requirement effectively mandates DMARC at p=reject for PCI-compliant organizations, adding regulatory pressure on top of provider enforcement.

“No Auth, No Entry”

The direction of travel is toward universal rejection of unauthenticated email. While not yet universal, the trend is clear:

- February 2024: Google and Yahoo mandate authentication for bulk senders.

- May 2025: Microsoft joins with similar requirements.

- 2025 and beyond: smaller providers follow the major providers’ lead.

Organizations treating authentication as optional will find their email increasingly unreliable.

Privacy-Preserving Measurement

Apple MPP demonstrated a broader truth: platform vendors will prioritize user privacy over marketer measurement capabilities. Expect continued evolution:

- additional platforms implementing similar privacy features

- further restrictions on tracking pixels and link tracking

- privacy-preserving aggregate reporting rather than individual tracking

- first-party data becoming the only reliable data source

Smart programs invest in zero-party data collection (explicit preference statements) and first-party behavioral data (on-site and in-app behavior) rather than depending on email tracking alone.

AI Content Detection

As AI-generated content proliferates, detection capabilities will expand.

Current state: AI phishing detection improves rapidly at major providers. Future state: potential requirements for AI content disclosure, similar to existing advertisement disclosure requirements. The operational implication is simple: if you use AI to draft, you still need human review and you should avoid “mass-sameness” patterns that resemble automated abuse.

Strategic Best Practices for Sustainable Deliverability

Most deliverability advice is either too tactical (“change subject lines”) or too generic (“send good content”). Operators need practices that protect a program under real conditions: multiple tools, fluctuating list quality, and periodic pressure to “send more.”

1) Treat Authentication and Suppression as Production Systems

Authentication breaks quietly. Suppression breaks quietly. Both are catastrophic when they break.

Operator rules that prevent outages:

- One owner for identity and compliance configuration.

- Central suppression logic across all sending systems.

- Regular audits for new senders (DMARC reporting) and broken alignment.

- Incident triggers based on deferrals/rejects, not on “engagement down.”

If you want a single operator test: can you name every system allowed to send from your domain, and would you notice within 24 hours if a new one appeared? If not, you’re running email on trust rather than control.
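DMARC aggregate reports are the practical way to answer that question. This minimal sketch assumes an already-decompressed report in the RFC 7489 XML layout (`record/row/source_ip`, `count`, `policy_evaluated`). It also assumes your sender inventory can be expressed as IP networks. The sample report and networks are invented for illustration.

```python
import ipaddress
import xml.etree.ElementTree as ET
from typing import Dict, List

SAMPLE_REPORT = """<feedback>
  <record>
    <row>
      <source_ip>198.51.100.10</source_ip>
      <count>500</count>
      <policy_evaluated><disposition>none</disposition><dkim>pass</dkim><spf>pass</spf></policy_evaluated>
    </row>
    <identifiers><header_from>example.com</header_from></identifiers>
  </record>
  <record>
    <row>
      <source_ip>203.0.113.7</source_ip>
      <count>42</count>
      <policy_evaluated><disposition>none</disposition><dkim>fail</dkim><spf>fail</spf></policy_evaluated>
    </row>
    <identifiers><header_from>example.com</header_from></identifiers>
  </record>
</feedback>"""


def unknown_sources(report_xml: str, known_networks: List[str]) -> Dict[str, Dict[str, int]]:
    """Count passing/failing messages from source IPs outside the known sender inventory."""
    inventory = [ipaddress.ip_network(n) for n in known_networks]
    findings: Dict[str, Dict[str, int]] = {}
    for record in ET.fromstring(report_xml).iter("record"):
        row = record.find("row")
        ip = ipaddress.ip_address(row.findtext("source_ip").strip())
        if any(ip in net for net in inventory):
            continue  # a sender you already know about
        entry = findings.setdefault(str(ip), {"pass": 0, "fail": 0})
        count = int(row.findtext("count"))
        dkim = row.findtext("policy_evaluated/dkim")
        spf = row.findtext("policy_evaluated/spf")
        # DMARC passes when either aligned DKIM or aligned SPF passes.
        entry["pass" if "pass" in (dkim, spf) else "fail"] += count
    return findings


print(unknown_sources(SAMPLE_REPORT, known_networks=["198.51.100.0/24"]))
```

An unknown source that passes DMARC is usually a vendor someone forgot to document. An unknown source that fails is either a misconfigured tool or spoofing, and both deserve a ticket.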

2) Build a Monitoring Cadence

Deliverability requires routine review. The cadence depends on volume.

Daily checks (high volume):

- deferrals and rejects by stream (marketing vs transactional)

- bounce spikes

- unsubscribe spikes

Weekly checks (all bulk senders):

- provider dashboards (Gmail, Yahoo, Microsoft where applicable)

- authentication pass and alignment trends

- blacklist checks where relevant

Monthly checks:

- list hygiene and sunset policy execution

- reactivation outcomes and suppression integrity

- authentication configuration audit (SPF lookup limits, DKIM selectors, DMARC policy)

Cadence is how you catch drift before providers enforce it for you.

One more practical rule: treat “reactivation” and “new acquisition source rollouts” as high-risk windows. During those weeks, your cadence should tighten, because that’s when complaint spikes and throttles are most likely.
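The monthly SPF audit is one of the few checks that is fully mechanical. RFC 7208 caps SPF evaluation at 10 DNS lookups: `include`, `a`, `mx`, `ptr`, `exists`, and the `redirect` modifier count toward the cap, while `ip4`, `ip6`, and `all` do not. This minimal sketch counts lookups in a single record string. It does not follow includes, so the result is a lower bound; a full check would resolve each include with a DNS library.

```python
# Terms that trigger DNS lookups under RFC 7208's limit of 10 (ip4, ip6, all do not).
LOOKUP_TERMS = {"include", "a", "mx", "ptr", "exists", "redirect"}
SPF_LOOKUP_LIMIT = 10


def top_level_lookups(spf_record: str) -> int:
    """Count lookup-triggering terms in one SPF record, without following includes."""
    terms = spf_record.split()
    if not terms or terms[0].lower() != "v=spf1":
        raise ValueError("not an SPF record")
    count = 0
    for term in terms[1:]:
        name = term.lower().lstrip("+-~?")  # drop the qualifier, if any
        for sep in (":", "/", "="):         # "include:x", "a/24", "redirect=x"
            name = name.split(sep, 1)[0]
        if name in LOOKUP_TERMS:
            count += 1
    return count


record = "v=spf1 include:_spf.google.com include:sendgrid.net mx a:mail.example.com ip4:192.0.2.0/24 ~all"
n = top_level_lookups(record)
print(f"{n} top-level lookups (limit {SPF_LOOKUP_LIMIT}); includes may add more")
```

Records tend to creep toward the limit as teams add vendors. Tracking this number monthly catches the creep before SPF starts returning permanent errors.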

3) Operationalize List Hygiene

List quality is the complaint-rate driver. If you treat list hygiene as optional, complaint rates become a lagging indicator that you only see after reputation damage.

Practices that protect programs:

- validate email addresses at point of collection where possible

- validate dormant cohorts before reactivation

- remove hard bounces immediately

- suppress complainers immediately

- implement sunset policies that stop mailing disengaged recipients

List hygiene is not a one-time clean. It’s a set of operating constraints: you either pay the cost continuously (validation, suppression, sunsetting), or you pay it all at once after a deliverability collapse.
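The “suppress immediately” rules only work if every sending system checks the same list. This minimal sketch shows that shape: a single shared list with reasons attached, filtered before each send. The class and reason names are hypothetical. Lowercasing addresses is an assumption, because RFC 5321 technically allows case-sensitive local parts.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set

SUPPRESSION_REASONS = ("hard_bounce", "complaint", "unsubscribe", "sunset")


def normalize(email: str) -> str:
    # Assumption: treat addresses case-insensitively, as most mailbox providers do in practice.
    return email.strip().lower()


@dataclass
class SuppressionList:
    """One shared list that every sending system checks before it sends."""
    entries: Dict[str, Set[str]] = field(default_factory=dict)  # email -> reasons

    def add(self, email: str, reason: str) -> None:
        if reason not in SUPPRESSION_REASONS:
            raise ValueError(f"unknown suppression reason: {reason}")
        self.entries.setdefault(normalize(email), set()).add(reason)

    def is_suppressed(self, email: str) -> bool:
        return normalize(email) in self.entries

    def filter_audience(self, audience: Iterable[str]) -> List[str]:
        return [e for e in audience if not self.is_suppressed(e)]


suppressions = SuppressionList()
suppressions.add("Bounce@Example.com", "hard_bounce")  # suppress immediately on hard bounce
suppressions.add("angry@example.com", "complaint")     # suppress immediately on complaint
print(suppressions.filter_audience(["bounce@example.com", "ok@example.com", " Angry@example.com "]))
```

Recording the reason alongside the address matters later. Sunset entries may be eligible for a careful reactivation, but complaints and hard bounces never are.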

4) Control Volume Changes Like Risk Events

Sudden volume spikes and sudden audience expansion are risk events. They are how good programs become bad programs.

Operator approach:

- ramp volume when testing new sources

- start with engaged cohorts first

- slow down when deferrals rise

- pause when complaint proxies spike

The goal is to avoid feeding negative signals long enough for providers to label your program as abusive.

If you need a simple control mechanism: ramp by cohort. Don’t jump from “recent engagers” to “the full database.” Providers don’t just notice volume changes – they notice that recipient sentiment shifts when you widen the audience.
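Ramping by cohort can be reduced to two small decisions: who gets mail today, and how much tomorrow. In this minimal sketch, the 1.5× growth factor and the 5% deferral threshold are hypothetical. The 0.1% complaint threshold mirrors Gmail’s published spam-rate guidance. Each day’s audience is the top N addresses by engagement recency, so the send widens gradually instead of jumping to the full database.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DayStats:
    deferral_rate: float   # deferred / attempted for the day
    complaint_rate: float  # complaints / delivered for the day


def next_daily_volume(current: int, stats: DayStats, growth: float = 1.5,
                      max_deferral: float = 0.05, max_complaint: float = 0.001,
                      cap: Optional[int] = None) -> int:
    """Decide tomorrow's volume: pause on complaints, slow on deferrals, else grow."""
    if stats.complaint_rate >= max_complaint:
        return 0             # pause and investigate before sending more
    if stats.deferral_rate >= max_deferral:
        return current // 2  # providers are throttling: back off
    proposed = int(current * growth)
    return min(proposed, cap) if cap is not None else proposed


def build_ramp_audience(cohorts: List[List[str]], daily_volume: int) -> List[str]:
    """Fill the day's volume from cohorts ordered most- to least-recently engaged."""
    audience: List[str] = []
    for cohort in cohorts:
        for address in cohort:
            if len(audience) >= daily_volume:
                return audience
            audience.append(address)
    return audience


cohorts = [["a@example.com", "b@example.com"], ["c@example.com", "d@example.com"], ["e@example.com"]]
print(build_ramp_audience(cohorts, 3))
print(next_daily_volume(1000, DayStats(deferral_rate=0.01, complaint_rate=0.0002)))
```

The asymmetry is deliberate: volume grows slowly but drops quickly, so negative signals stop accumulating as soon as they appear.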

5) Prioritize Consent Quality

Move beyond minimum consent requirements. Clear, specific opt-in produces higher-quality lists because recipients understand what they signed up for. That reduces the “I forgot who you are” dynamic that drives spam complaints.

Consent is not a one-time checkbox. It’s an ongoing relationship. Preference centers and frequency controls can reduce complaints by letting recipients choose “less” instead of choosing “spam.”

This is where compliance and deliverability meet. You can be technically compliant and still generate complaints if recipients feel surprised. Clear expectation-setting is the cheapest complaint-reduction strategy available. For operations handling phone outreach alongside email, similar principles apply to TCPA consent capture and documentation.

6) Separate Mail Streams

Use subdomains or separate infrastructure to separate transactional and marketing email. The goal is blast radius control – protecting critical delivery (confirmations, password resets, operational notices) from marketing reputation fluctuations.

Separation only works if you can maintain authentication and monitoring across streams. Fragmentation without discipline creates more failure modes, not fewer.

The practical version of this advice is not “create five subdomains.” It’s “protect the stream that keeps the business functioning.” If confirmations and password resets fail, you don’t just lose marketing performance – you break product and operations.

7) Plan for Privacy Evolution

Don’t depend on tracking capabilities that may disappear. Apple MPP demonstrated that platform vendors will sacrifice marketer measurement to protect user privacy.

Operational response: build first-party data collection, implement preference centers, and create value exchanges that encourage explicit data sharing. Treat email tracking as helpful, not foundational.

MPP is a reminder that you can’t build long-term strategy on vendor-granted tracking. If your segmentation and reporting require pixel accuracy, you’re exposed to future privacy shifts.

8) Stay Current

The email landscape changes continuously. Follow provider announcements, monitor industry publications, and treat deliverability as an evolving discipline requiring ongoing learning. The programs that survive enforcement changes are usually the ones that heard about them months earlier.

Conclusion

Deliverability in 2026 is a discipline, not a tactic. Authentication is mandatory. Engagement behavior drives filtering decisions. Privacy changes reshaped measurement. AI raised the baseline threat, which means providers are less tolerant of sloppy patterns. Regulatory pressure makes consent and opt-out handling more expensive to get wrong.

The programs that consistently reach the inbox do a few unglamorous things exceptionally well: they control identity, they keep complaint rates low through list discipline, they monitor the delivery pipeline, and they treat large sending changes as risk events.

Email still works. But it only works reliably for operators who treat it like infrastructure.

If you take one lesson from the last two years of enforcement changes, make it this: inbox providers are aligning around the same incentives. They reward mail recipients welcome and punish mail recipients reject. Everything else – tools, tactics, templates – is downstream of that reality in 2026.

That framing also clarifies what “optimization” means. It doesn’t mean chasing hacks or rewriting subject lines every week. It means building systems that prevent avoidable negative signals, noticing problems early enough to stop them, and resisting the short-term temptation to mail cohorts that behave like reputation debt. The inbox has turned into a gated channel. The gatekeepers are mostly automated. Your job is to make sure your program looks, behaves, and measures like one that belongs there.

Frequently Asked Questions

What’s the difference between delivery rate and inbox placement?

Delivery rate typically means the receiving server accepted the message. Inbox placement means the message reached the inbox rather than spam. You can have high delivery and low inbox placement – which is why teams think email “worked” while revenue drops.

Why did open rates become unreliable?

Apple Mail Privacy Protection changed how opens are measured by pre-loading tracking pixels. That inflates opens and makes them less correlated with human reading behavior. Operators should weight clicks, replies, conversions, and complaint/unsub signals more heavily.

If we have SPF/DKIM/DMARC, are we safe?

Authentication is the gate, not the guarantee. Providers still filter based on complaints and engagement. A compliant sender with high complaint rates can still be throttled or placed in spam.

Why do reactivation campaigns cause so many incidents?

Many dormant recipients have forgotten they opted in, and some never opted in through high-quality consent flows. Reactivation tends to spike complaints and deferrals quickly, which can damage reputation across streams. If you reactivate, do it with validation, cohort ramping, and daily monitoring.

What is the fastest way to improve deliverability without changing infrastructure?

Reduce negative signals first.

The fastest wins usually come from list discipline rather than platform changes:

- stop mailing chronically disengaged cohorts (sunset)

- validate and suppress risky segments before big sends

- make unsubscribe effortless so recipients don’t use the spam button

- tighten acquisition sources that produce low intent and high complaints

These moves don’t require new tools. They require saying “no” to the campaigns that feel like easy volume but behave like reputation debt.

Do we need to move to DMARC p=reject now?

The direction is clear – enforcement-level DMARC becomes more common over time – but the operator sequence matters. Start with monitoring (p=none), use aggregate reports to identify unknown senders and alignment failures, then progress toward p=quarantine and p=reject once legitimate streams pass reliably.

Rushing to p=reject without a sender inventory is how teams accidentally block their own mail.

How do we think about “staying current” without chasing every deliverability rumor?

Use a simple rule: track changes that affect enforcement, measurement, and identity.

- Enforcement: provider announcements about bulk sender requirements, complaint thresholds, and new rejection behaviors.

- Measurement: privacy changes that invalidate existing metrics (MPP was the template).

- Identity: authentication requirements and policy shifts (DMARC escalation, alignment expectations).

You don’t need to read everything. You need to notice when the floor changes.

Key Takeaways

- Global inbox placement sits around 84%, and the gap between top and average performers is a revenue gap, not a marketing metric.

- Providers prioritize complaint rates and engagement behavior; authentication is required but not sufficient.

- Apple Mail Privacy Protection made open rates less reliable; operators need better engagement proxies and segmentation discipline.

- AI spam filtering punishes patterns and intent, not individual words. Tricks age out quickly.

- The operators who stay in the inbox run deliverability like infrastructure: monitoring cadence, list hygiene, suppression integrity, and controlled volume changes.

Sources

- Google Postmaster Tools - Gmail sender reputation monitoring and deliverability metrics dashboard

- Apple Mail Privacy Protection Support - Official documentation on Mail Privacy Protection features in iOS 15 and macOS Monterey

- Litmus Email Analytics - Source for Apple Mail’s 49.29% share of email opens and email client market share data

- DMARC.org - Official resource for Domain-based Message Authentication, Reporting and Conformance standards

- Validity Sender Reputation - Email deliverability benchmarks including 84% global inbox placement rate

- Forrester Research - Source for engagement-based filtering research and data-driven marketing benchmarks