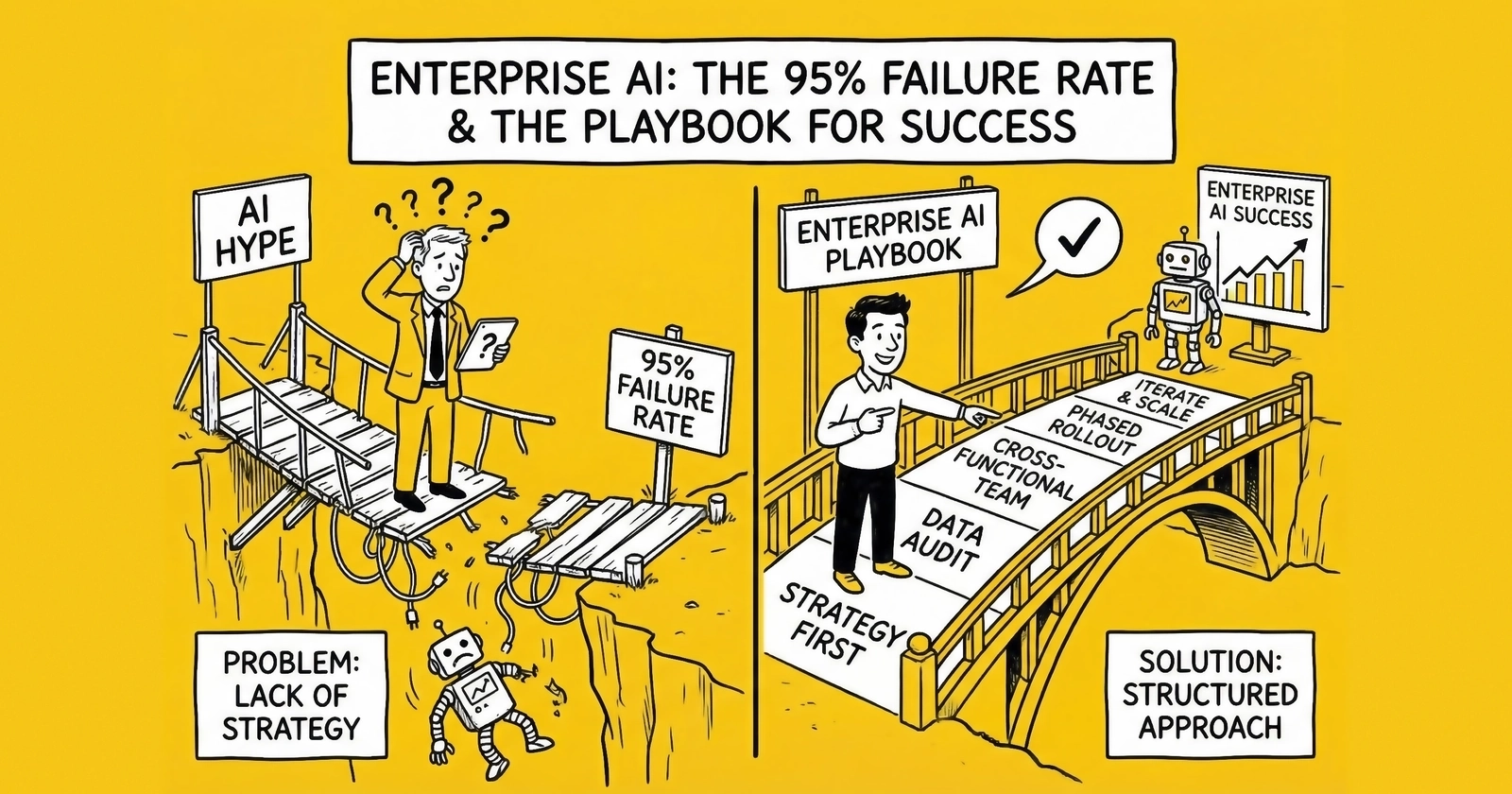

The implementation playbook that separates transformative AI deployments from expensive experiments

The Uncomfortable Truth

The numbers should stop every executive mid-presentation.

MIT’s Project NANDA analyzed 300 public AI deployments, conducted 150 interviews with business leaders, and surveyed 350 employees. Their conclusion: 95% of generative AI pilots fail to deliver measurable P&L impact.

Not “underperform expectations.” Not “require adjustment.” Fail.

This isn’t a technology problem. ChatGPT works. Claude works. The models deliver genuine capability. The failure happens in the space between capability and value – the implementation gap where promising pilots go to die.

The MIT research identified the pattern: “Sixty percent of organizations evaluated enterprise AI tools, but only 20 percent reached pilot stage and just 5 percent reached production.” The funnel narrows dramatically at each stage, with most initiatives stalling long before they demonstrate business value.

Meanwhile, a small cohort of organizations – roughly 6% according to McKinsey – qualify as “AI high performers” generating 5%+ EBIT impact. These organizations report 171% average ROI and $3.70 return per dollar invested. They’re not using different technology. They’re implementing differently.

This article examines what separates the 5% from the 95% – the specific practices, decisions, and organizational patterns that distinguish transformative AI deployments from expensive experiments.

Part I: Why Pilots Fail

The Learning Gap

MIT’s lead researcher Aditya Challapally identified the core issue with a phrase that should be etched into every AI strategy document: “The core barrier to scaling is not infrastructure, regulation, or talent. It is learning.”

Most enterprise AI systems don’t retain feedback, adapt to context, or improve over time. They’re static tools deployed into dynamic environments – frozen capabilities meeting constantly shifting requirements. The same professionals who use ChatGPT daily for personal tasks describe enterprise AI systems as “unreliable,” not because the underlying models are worse, but because enterprise deployments lack the context engineering and adaptation that make consumer tools useful. The contrast shows up in everyday use: a consumer assistant responds to how a question is framed and to the context supplied alongside it, while a static enterprise deployment returns the same answer to the same question no matter what the organization has learned since it was first asked.

This creates what MIT calls “the GenAI Divide”: a fundamental gap between organizations that have figured out how to make AI learn and adapt versus those deploying static systems that never improve. The divide isn’t about technology budgets or talent pools. It’s about whether organizations treat AI as a one-time deployment or an evolving capability – and as AI shifts toward autonomous agents that act rather than just respond, this divide will only widen.

The divide manifests in specific failure patterns that appear with depressing regularity across industries. AI systems that work flawlessly in demos break in production when edge cases multiply and exceptions accumulate. Without mechanisms for learning and adaptation, every edge case requires manual intervention or system modification – and in real business environments, edge cases vastly outnumber the clean examples that demos showcase. Enterprise AI also suffers from what researchers call “context starvation”: systems that operate with limited context, unable to access the full information environment that would enable accurate, relevant responses. The same model that performs brilliantly with complete context fails when starved of necessary information. Finally, AI tools that don’t integrate deeply into existing workflows become optional extras that employees ignore. Standalone AI applications compete for attention against established tools and processes – and employees, already overwhelmed with software, default to what they know.

The Build vs. Buy Trap

MIT’s research revealed a striking pattern: purchasing AI tools from specialized vendors succeeds about 67% of the time, while internal builds succeed only about one-third as often (roughly 22%). Yet “almost everywhere we went, enterprises were trying to build their own tool,” researchers noted. Organizations systematically choose the lower-probability path.

Several factors drive this counterintuitive preference. Building internally feels like maintaining control, but internal builds often mean less control – fewer resources, slower iteration, more technical debt accumulating with each passing month. Organizations assume custom AI will provide competitive advantage, yet in practice most enterprise AI use cases (customer service, document processing, code assistance) don’t require proprietary models. The differentiation comes from implementation quality, not model uniqueness.

Security concerns often mask something else entirely. “We can’t use external AI because of data sensitivity” frequently reflects inadequate evaluation of vendor security rather than genuine risk assessment. Many enterprise AI vendors offer security controls superior to what internal teams can build with their constrained resources. And once internal builds begin, sunk cost psychology takes over. Pilot investments create pressure to continue even when purchased alternatives would perform better. The data is clear: for most use cases, purchasing from specialized vendors delivers higher success probability than internal development.

The Pilot Purgatory Pattern

A distinctive failure mode haunts enterprise AI: pilots that never end. Organizations launch with enthusiasm, initial results show promise, but scaling stalls. The pilot continues indefinitely – consuming resources, demonstrating capability, never delivering enterprise value.

MIT found that 56% of organizations take 6-18 months to move a GenAI project from intake to production. Many never complete the journey. The pilot becomes permanent – a perpetual experiment that justifies its existence through potential rather than results.

Pilot purgatory emerges from several interconnected failures. Without defined metrics for “success,” pilots can’t graduate to production; they accumulate positive anecdotes without demonstrating measurable impact. Organizations lack processes for evaluating pilots and deciding whether to scale, modify, or terminate – so pilots persist by default. Scaling requires commitment, and pilots feel safer with their limited scope and contained risk. Organizations choose perpetual piloting over the vulnerability of full deployment. And pilots compete with other priorities; without forcing functions, they remain perpetually “almost ready” for scale.

The 42% Abandonment Spike

The failure pattern is accelerating. According to industry research, 42% of companies abandoned most AI initiatives in 2025, up sharply from 17% in 2024. This spike reflects accumulated frustration: organizations that launched AI initiatives in 2023-2024 expected results by 2025. When results didn’t materialize, abandonment followed.

The abandonment pattern reveals unrealistic expectations colliding with implementation reality. Timelines that assumed smooth deployment encountered integration complexity. ROI projections that assumed rapid adoption met change management resistance. Technology assumptions that expected plug-and-play encountered data quality issues. The organizations abandoning AI aren’t concluding that AI doesn’t work – they’re concluding that their implementation approach doesn’t work. The distinction matters for what comes next.

Part II: What the 5% Do Differently

They Start with Problems, Not Technology

Failed implementations typically begin with a question: “We need an AI strategy. What can AI do for us?” Successful implementations begin differently: “We have these specific business problems. Can AI solve them better than alternatives?”

The difference sounds subtle but proves decisive. Technology-first thinking generates solutions seeking problems. Problem-first thinking ensures AI investments address genuine business needs with measurable outcomes. MIT’s research found that successful implementations “target specific pain points rather than attempting broad, generic AI deployments.” The 5% identify concrete problems – customer service resolution time, document processing backlog, code review bottleneck – and evaluate AI as one potential solution among alternatives. This approach naturally produces clear success criteria. If the problem is “median customer service resolution time is 40 hours,” success means reducing that number. The metric exists before the pilot begins.
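Because the metric exists before the pilot begins, it can be recorded as data and checked mechanically. The Python sketch below is illustrative only: the `SuccessCriterion` class, the sample resolution times, the 24-hour target, and the deadline are hypothetical values chosen to mirror the 40-hour example, not figures from the research.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass(frozen=True)
class SuccessCriterion:
    """A success definition fixed before the pilot starts (hypothetical structure)."""
    metric: str
    baseline: float          # current-state measurement
    target: float            # value the pilot must reach
    deadline: date           # time-bound: target must be met by this date
    lower_is_better: bool = True

    def is_met(self, observed: float, as_of: date) -> bool:
        """True if the observed value reaches the target on or before the deadline."""
        if as_of > self.deadline:
            return False
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target


# Baseline measured before the pilot (hypothetical sample of resolution times).
baseline_hours = median([38.0, 40.0, 41.5, 36.0, 44.0])
criterion = SuccessCriterion(
    metric="median customer service resolution time (hours)",
    baseline=baseline_hours,
    target=24.0,                 # hypothetical target for illustration
    deadline=date(2026, 6, 30),  # hypothetical deadline
)

# Resolution times observed during the pilot (hypothetical).
pilot_hours = [20.0, 26.0, 22.5, 19.0, 30.0]
print(criterion.is_met(median(pilot_hours), as_of=date(2026, 5, 1)))
```

Fixing the baseline, target, and deadline up front is what lets a pilot graduate or stop on evidence rather than on accumulated anecdotes.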

They Buy Before They Build

The 67% vs. 22% success rate gap between purchased and built solutions reflects more than resource differences – it reflects learning accumulation. Specialized AI vendors have implemented across dozens or hundreds of organizations. They’ve encountered edge cases, refined workflows, developed best practices. Their solutions embed accumulated learning that internal teams would need years to develop.

The 5% recognize this asymmetry. They purchase solutions for common use cases and reserve internal development for genuinely differentiated capabilities. They ask: “What do we need to build that we can’t buy?” rather than “What can we build?” This doesn’t mean avoiding customization. Successful implementations extensively customize purchased solutions – integrating with internal systems, training on proprietary data, adapting to specific workflows. But they customize on top of proven foundations rather than building from scratch.

They Treat AI as Organizational Transformation

Failed implementations treat AI as technology deployment. Successful implementations treat AI as organizational transformation. The distinction appears in resource allocation: research indicates that high-performing organizations invest 70% of AI resources in people and processes, not just technology. They budget for change management, training, workflow redesign, and organizational development alongside technical implementation.

Accenture research shows that organizations with executive buy-in achieve 2.5x higher ROI – but executive buy-in means more than budget approval. It means visible leadership commitment: executives who use AI tools publicly, communicate strategic importance consistently, and hold organizations accountable for adoption. McKinsey’s research reinforces this pattern: “Half of AI high performers intend to use AI to transform their businesses, and most are redesigning workflows.” They’re not adding AI to existing processes; they’re reimagining processes around AI capabilities.

They Empower Line Managers, Not Just AI Labs

MIT identified a critical success factor: “Empowering line managers – not just central AI labs – to drive adoption.” Centralized AI teams can build sophisticated systems, but adoption happens at the operational level – in the daily work of line managers and their teams. When AI deployment is purely a technical initiative, it encounters resistance, indifference, and workarounds that undermine value.

Successful implementations distribute ownership. Line managers participate in use case identification, pilot design, and deployment decisions. They understand how AI fits their workflows because they helped design the integration. This distributed model also accelerates learning: line managers observe how AI performs in real contexts and provide feedback that improves systems. Centralized teams operating at distance from operations miss this feedback loop.

They Establish Governance Before Scaling

Failed implementations often add governance reactively – after problems emerge. Successful implementations establish governance proactively – before scaling begins. Proactive governance addresses the questions that become urgent at scale: Who can use which AI capabilities? What data can AI access? How are permissions managed? How do we validate AI outputs and detect errors? How do we meet regulatory requirements, document AI decisions, and enable audit? What could go wrong, and what containment measures exist?

Organizations that answer these questions before scaling can scale confidently. Organizations that defer governance face scaling barriers that require expensive retrofitting.

They Accept Realistic Timelines

The 42% abandonment spike reflects organizations expecting AI transformation in 12-18 months. Realistic timelines are longer. Research suggests successful organizations expect 2-4 year ROI timelines for enterprise AI transformation – not 2-4 years before any value (pilots can demonstrate value in months), but 2-4 years before AI delivers transformative enterprise-level impact.

This patience enables different decisions: investing in data quality improvements that take time but enable better AI performance, building organizational capabilities alongside technology deployment, allowing adoption curves to develop naturally rather than forcing premature scaling, and learning from early implementations before committing to enterprise rollout. Organizations with unrealistic timelines make short-term decisions that undermine long-term success. They skip data preparation, rush change management, force premature scaling, and abandon initiatives before they mature.

Part III: The Implementation Framework

Phase 1: Strategic Foundation (Weeks 1-6)

Objective: Align AI initiatives with business strategy and identify high-value opportunities.

Key Activities:

- Problem Inventory: Catalog business problems that might benefit from AI. Interview operational leaders across functions. Focus on pain points with measurable costs: time, errors, delays, missed opportunities.

- Opportunity Assessment: Evaluate each problem against these criteria:
  - Business impact (revenue, cost, risk)
  - Data availability (exists, accessible, quality)
  - Technical feasibility (proven approaches exist)
  - Organizational readiness (change capacity, leadership support)

- Prioritization: Select 2-3 initial opportunities that balance:
  - High probability of success (good data, proven approaches, receptive organization)
  - Meaningful business impact (worth the investment)
  - Learning value (informs broader AI strategy)

- Success Definition: For each selected opportunity, define:
  - Specific metrics that indicate success
  - Baseline measurements (current state)
  - Target improvements (realistic, time-bound)
  - Methods for measurement

Deliverables:

- Prioritized opportunity portfolio

- Success criteria for initial implementations

- Preliminary business cases with ROI projections

Common Mistakes:

- Selecting too many initial opportunities (spreading resources thin)

- Choosing technically interesting problems without business impact

- Defining success vaguely (“improve customer experience”)

- Skipping baseline measurement
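The assessment and prioritization steps can be made explicit with a weighted scoring matrix. This is a sketch under stated assumptions: the 1-5 scale, the weights, the minimum-score rule, and the example opportunities are all hypothetical and would be replaced with an organization’s own judgments.

```python
from dataclasses import dataclass

# Hypothetical weights for the four assessment criteria (they must sum to 1.0).
WEIGHTS = {
    "business_impact": 0.35,
    "data_availability": 0.25,
    "technical_feasibility": 0.20,
    "organizational_readiness": 0.20,
}
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9


@dataclass
class Opportunity:
    name: str
    scores: dict[str, int]  # each criterion scored 1 (poor) to 5 (strong)

    def weighted_score(self) -> float:
        return sum(weight * self.scores[c] for c, weight in WEIGHTS.items())


def prioritize(opportunities: list[Opportunity], limit: int = 3,
               minimum: int = 2) -> list[Opportunity]:
    """Rank by weighted score and keep at most `limit` candidates.

    Opportunities scoring below `minimum` on any single criterion are excluded,
    so a high impact score cannot hide, for example, missing data.
    """
    eligible = [o for o in opportunities if min(o.scores.values()) >= minimum]
    ranked = sorted(eligible, key=lambda o: o.weighted_score(), reverse=True)
    return ranked[:limit]


candidates = [
    Opportunity("Support ticket triage", {"business_impact": 4, "data_availability": 5,
                                          "technical_feasibility": 4, "organizational_readiness": 4}),
    Opportunity("Contract review", {"business_impact": 5, "data_availability": 3,
                                    "technical_feasibility": 3, "organizational_readiness": 3}),
    Opportunity("Demand forecasting", {"business_impact": 5, "data_availability": 1,
                                       "technical_feasibility": 4, "organizational_readiness": 4}),
    Opportunity("Invoice processing", {"business_impact": 3, "data_availability": 4,
                                       "technical_feasibility": 5, "organizational_readiness": 3}),
]
for opp in prioritize(candidates):
    print(f"{opp.name}: {opp.weighted_score():.2f}")
```

The cap of three reflects the “2-3 initial opportunities” guidance; the per-criterion floor is one way to favor a high probability of success over raw impact.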

Phase 2: Vendor and Technology Selection (Weeks 7-12)

Objective: Identify the right solutions for selected opportunities, favoring purchase over build.

Key Activities:

- Market Scan: For each opportunity, identify:
  - Commercial solutions specifically designed for this use case
  - Platform capabilities from existing vendors (Microsoft, Google, Salesforce)
  - Open-source alternatives with commercial support
  - Build requirements if no suitable purchase option exists

- Evaluation Criteria: Assess options against:
  - Functional fit (does it solve the specific problem?)
  - Integration capability (connects to existing systems?)
  - Security and compliance (meets organizational requirements?)
  - Total cost of ownership (licensing, implementation, maintenance)
  - Vendor viability (will they exist in 3 years?)

- Proof of Concept: For top candidates, conduct limited POCs:
  - Use real (or realistic) data
  - Test against actual workflows
  - Involve end users in evaluation
  - Measure against defined success criteria

- Decision: Select solutions based on POC results, favoring:
  - Proven solutions over novel approaches
  - Vendor solutions over internal builds
  - Integration capability over feature richness

Deliverables:

- Solution selection for each opportunity

- Implementation approach (configure, customize, or build)

- Vendor contracts and agreements

- Technical architecture decisions

Common Mistakes:

- Evaluating based on demos rather than POCs with real data

- Selecting based on features rather than integration capability

- Underestimating total cost of ownership

- Defaulting to build without seriously evaluating buy options
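Underestimating total cost of ownership is easier to avoid when every option is costed over the same horizon with the same categories. A minimal sketch, assuming a three-year horizon (matching the vendor-viability question) and hypothetical cost figures; the `Option` fields are illustrative groupings of the licensing, implementation, and maintenance costs named above, not a standard TCO model.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    one_time: float            # implementation, integration, initial data work
    annual_licensing: float    # subscription or license fees (0 for internal builds)
    annual_maintenance: float  # support, upkeep, staff time to keep it running

    def tco(self, years: int = 3) -> float:
        """Total cost of ownership over `years`, undiscounted for simplicity."""
        return self.one_time + years * (self.annual_licensing + self.annual_maintenance)


# Hypothetical figures for illustration only.
options = [
    Option("Vendor platform", one_time=150_000,
           annual_licensing=120_000, annual_maintenance=40_000),
    Option("Internal build", one_time=600_000,
           annual_licensing=0, annual_maintenance=250_000),
]
for opt in sorted(options, key=lambda o: o.tco()):
    print(f"{opt.name}: ${opt.tco():,.0f} over 3 years")
```

Internal builds often show zero licensing cost, which is why maintenance belongs in the comparison; a fuller model would also discount future years.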

Phase 3: Pilot Implementation (Weeks 13-24)

Objective: Deploy initial solutions in controlled environments, validate value, and learn.

Key Activities:

- Data Preparation: Before deployment:
  - Assess data quality for AI use case
  - Clean and prepare training/tuning data
  - Establish data pipelines for ongoing operation
  - Address privacy and security requirements

- Technical Implementation:
  - Configure or customize selected solutions
  - Integrate with existing systems
  - Implement monitoring and logging
  - Establish feedback mechanisms

- Change Management:
  - Communicate pilot purpose and scope to affected teams
  - Train pilot users on new tools and workflows
  - Establish support channels for questions and issues
  - Identify pilot champions who can assist peers

- Controlled Deployment:
  - Deploy to limited scope (team, region, use case subset)
  - Monitor performance against success criteria
  - Collect user feedback systematically
  - Document issues and refinements

- Iteration:
  - Address issues identified during pilot
  - Refine based on user feedback
  - Adjust workflows based on observed behavior
  - Update training and documentation

Deliverables:

- Deployed pilot solutions

- Performance data against success metrics

- User feedback and satisfaction data

- Refined implementation ready for scaling

Common Mistakes:

- Inadequate data preparation (causes poor AI performance)

- Insufficient change management (causes low adoption)

- Deploying too broadly initially (creates unmanageable scope)

- Not iterating based on feedback (locks in early problems)
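Deploying to a limited scope is often implemented as an allowlist check in front of the AI feature, so the pilot boundary is explicit and auditable rather than informal. A minimal sketch; the `PilotScope` class and the team and region names are hypothetical.

```python
from collections.abc import Iterable
from dataclasses import dataclass, field


@dataclass
class PilotScope:
    """Explicit pilot boundary: only listed teams in listed regions get the AI feature."""
    teams: set[str] = field(default_factory=set)
    regions: set[str] = field(default_factory=set)

    def is_enabled(self, team: str, region: str) -> bool:
        return team in self.teams and region in self.regions

    def expand(self, teams: Iterable[str] = (), regions: Iterable[str] = ()) -> None:
        """Widen the scope incrementally; each call is a deliberate, reviewable change."""
        self.teams.update(teams)
        self.regions.update(regions)


scope = PilotScope(teams={"support-tier1"}, regions={"emea"})
print(scope.is_enabled("support-tier1", "emea"))  # inside the pilot
print(scope.is_enabled("support-tier2", "emea"))  # outside the pilot
```

The same check can carry over to the phased rollout in Phase 4, where each expansion to a new group or region becomes an explicit step rather than an open-ended switch.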

Phase 4: Scale and Optimize (Weeks 25-52)

Objective: Expand successful pilots to enterprise deployment while maintaining performance.

Key Activities:

- Scale Decision: Evaluate pilot results against success criteria:
  - Did the pilot achieve target metrics?
  - Is user adoption sustainable?
  - Can the solution scale technically?
  - Does the business case remain valid?

- Scaling Strategy: For solutions that passed evaluation:
  - Define rollout sequence (which groups/regions next?)
  - Plan resource requirements (support, training, infrastructure)
  - Establish governance for scaled operation
  - Set timeline with milestones

- Phased Rollout:
  - Expand to additional user groups incrementally
  - Maintain monitoring throughout scaling
  - Adjust based on performance at each phase
  - Build internal expertise progressively

- Optimization:
  - Analyze performance data across deployment
  - Identify improvement opportunities
  - Implement enhancements based on accumulated learning
  - Establish continuous improvement processes

- Value Realization:
  - Measure business impact at enterprise scale
  - Document ROI and lessons learned
  - Communicate results to stakeholders
  - Inform planning for additional AI initiatives

Deliverables:

- Enterprise-scale deployment

- Documented business impact and ROI

- Operational playbook for ongoing management

- Roadmap for additional AI initiatives

Common Mistakes:

- Scaling solutions that didn’t achieve pilot success criteria

- Attempting enterprise-wide deployment at once (vs. phased)

- Reducing support during scaling (when more is needed)

- Not measuring value at scale (losing sight of business impact)
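The scale decision works best as an explicit gate with exactly three outcomes, which is also a direct defense against pilot purgatory: every pilot review ends in scale, modify, or terminate. The mapping below is a hypothetical policy, not a rule from the research: any unmet criterion blocks scaling, and a pilot whose business case no longer holds is terminated.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    SCALE = "scale"
    MODIFY = "modify"
    TERMINATE = "terminate"


@dataclass
class PilotReview:
    met_target_metrics: bool    # Did the pilot achieve target metrics?
    adoption_sustainable: bool  # Is user adoption sustainable?
    scales_technically: bool    # Can the solution scale technically?
    business_case_valid: bool   # Does the business case remain valid?


def scale_decision(review: PilotReview) -> Decision:
    """Hypothetical gate mapping the four scale-decision questions to an outcome."""
    if not review.business_case_valid:
        # Without a valid business case, further iteration cannot justify the spend.
        return Decision.TERMINATE
    if all((review.met_target_metrics, review.adoption_sustainable,
            review.scales_technically)):
        return Decision.SCALE
    # The business case holds but something fell short: fix it before scaling.
    return Decision.MODIFY


print(scale_decision(PilotReview(True, True, False, True)).value)
```

A real gate would likely also cap the number of consecutive MODIFY outcomes, since repeated modification without a final decision is pilot purgatory by another name.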

Part IV: Critical Success Factors

Executive Sponsorship

Every successful enterprise AI implementation has visible executive sponsorship – not passive approval, but active championship. Effective executive sponsors communicate AI strategic importance across the organization, use AI tools publicly to model expected behavior, allocate resources and protect AI budgets during constraints, remove organizational barriers that impede implementation, and hold teams accountable for adoption and results.

Research indicates 2.5x higher ROI in organizations with strong executive sponsorship. The mechanism is straightforward: executive attention aligns organizational energy, unlocks resources, and overcomes resistance.

Data Foundation

Poor data quality causes 85% of AI project failures according to industry research. No amount of sophisticated modeling compensates for inadequate data. Enterprise data fragmentation compounds this challenge – AI systems can’t access data scattered across siloed systems. Data readiness requires quality (accurate, complete, consistent data verified for the specific AI use case, not just theoretical quality), accessibility (AI systems can reach required data – APIs exist, permissions allow access, latency permits real-time use), and governance (clear ownership, documented lineage, privacy compliance, security controls).

Organizations that skip data preparation pay later – in poor AI performance, expensive rework, and failed initiatives. Organizations that invest in data foundation accelerate AI success.
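Verifying quality for the specific use case can start with simple, automatable checks on the records the AI system will actually consume. A minimal sketch using only the standard library; the field names, the 95% completeness and 1% duplicate thresholds, and the choice of checks are assumptions, and real readiness work also covers accuracy, consistency, accessibility, and lineage.

```python
from collections import Counter


def completeness(records: list[dict], fields: list[str]) -> dict[str, float]:
    """Share of records with a non-empty value for each required field."""
    total = len(records)
    return {f: sum(1 for r in records if r.get(f) not in (None, "")) / total
            for f in fields}


def duplicate_rate(records: list[dict], key: str) -> float:
    """Share of records whose key value appears more than once."""
    counts = Counter(r.get(key) for r in records)
    return sum(n for n in counts.values() if n > 1) / len(records)


def readiness_issues(records: list[dict], fields: list[str], key: str,
                     min_completeness: float = 0.95,
                     max_duplicates: float = 0.01) -> list[str]:
    """List every check that fails its (hypothetical) threshold."""
    if not records:
        return ["no records to assess"]
    issues = []
    for f, share in completeness(records, fields).items():
        if share < min_completeness:
            issues.append(f"{f}: {share:.0%} complete (needs {min_completeness:.0%})")
    rate = duplicate_rate(records, key)
    if rate > max_duplicates:
        issues.append(f"{key}: {rate:.0%} of records duplicated")
    return issues


# Hypothetical support-ticket extract.
tickets = [
    {"id": 1, "category": "billing", "resolution": "refund issued"},
    {"id": 2, "category": "", "resolution": "password reset"},
    {"id": 2, "category": "", "resolution": "password reset"},
    {"id": 3, "category": "shipping", "resolution": None},
]
for issue in readiness_issues(tickets, ["category", "resolution"], key="id"):
    print(issue)
```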

Change Management

AI changes how people work. Without change management, people resist, work around, or ignore AI tools. Effective change management for AI includes clear, honest communication about what’s changing, why it matters, and how it affects roles – addressing fears directly rather than pretending they don’t exist. It requires training that goes beyond tool mechanics to workflow integration: how does AI fit into daily work? What decisions does it support? When should humans override AI recommendations? It demands accessible support during transition, with champions within teams who can assist peers and quick response to issues. And it needs feedback loops – mechanisms for users to report problems, suggest improvements, and influence AI development. People adopt tools they help shape.

Research shows only 37% of organizations invest adequately in change management for AI initiatives. The remaining 63% that underinvest help explain the 95% failure rate.

Governance Infrastructure

Governance enables scaling. Without governance, organizations either avoid scaling (missing value) or scale recklessly (creating risk). Enterprise AI governance requires a policy framework with clear rules for AI development, deployment, and operation – what’s permitted, what’s prohibited, what requires approval. It requires review processes that evaluate AI systems before deployment and during operation, covering technical, ethical, and compliance dimensions. It demands monitoring that provides visibility into AI behavior and performance, detecting problems before they escalate. It needs clear accountability with named individuals responsible for each AI deployment. And it requires incident response processes for addressing AI failures, errors, or harms – containment, remediation, communication.

Organizations that establish governance early can scale confidently. Organizations that defer governance create barriers that impede scaling later.
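A policy framework that separates permitted, prohibited, and approval-required uses can be expressed as data and checked in code, which also makes it easy to audit. A sketch under assumptions: the capability names, data classifications, and rulings below are hypothetical examples, not a recommended policy.

```python
from enum import Enum


class Ruling(Enum):
    PERMITTED = "permitted"
    REQUIRES_APPROVAL = "requires approval"
    PROHIBITED = "prohibited"


# Hypothetical policy table: (AI capability, data classification) -> ruling.
POLICY = {
    ("summarization", "public"): Ruling.PERMITTED,
    ("summarization", "internal"): Ruling.PERMITTED,
    ("summarization", "confidential"): Ruling.REQUIRES_APPROVAL,
    ("automated_decision", "internal"): Ruling.REQUIRES_APPROVAL,
    ("automated_decision", "personal"): Ruling.PROHIBITED,
}


def evaluate(capability: str, classification: str) -> Ruling:
    """Look up the ruling; any combination not explicitly listed needs human review."""
    return POLICY.get((capability, classification), Ruling.REQUIRES_APPROVAL)


print(evaluate("summarization", "confidential").value)
```

Defaulting unlisted combinations to approval rather than permission is a deliberate design choice: new uses surface for review instead of slipping through unnoticed.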

Part V: Measuring Success

Leading Indicators

Before enterprise impact materializes, leading indicators reveal whether implementation is on track. Adoption metrics matter most: active users divided by eligible users shows adoption rate, sessions per user per week reveals engagement depth, features used versus features available indicates capability utilization, and declining support requests suggest maturation. Quality metrics track AI output accuracy against baselines, error rate trends (which should decrease over time), override rates showing human corrections of AI recommendations, and user satisfaction scores. Operational metrics cover system availability and reliability, response time and latency, integration health, and data pipeline performance.
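Several of these leading indicators are simple ratios over usage logs. A minimal sketch, assuming a hypothetical event log with one record per AI interaction (user ID, week label, and whether a human overrode the output) and treating each record as one session; real telemetry schemas will differ.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    user_id: str
    week: str         # week label, e.g. "2025-W40" (hypothetical format)
    overridden: bool  # True if a human corrected or rejected the AI output


def adoption_rate(log: list[Interaction], eligible_users: int) -> float:
    """Active users divided by eligible users."""
    return len({i.user_id for i in log}) / eligible_users


def sessions_per_user_per_week(log: list[Interaction]) -> float:
    """Average sessions per active user-week (one record counts as one session)."""
    user_weeks = {(i.user_id, i.week) for i in log}
    return len(log) / len(user_weeks) if user_weeks else 0.0


def override_rate(log: list[Interaction]) -> float:
    """Share of AI outputs that humans overrode."""
    return sum(i.overridden for i in log) / len(log) if log else 0.0


log = [
    Interaction("ana", "2025-W40", False),
    Interaction("ana", "2025-W40", True),
    Interaction("ben", "2025-W40", False),
    Interaction("ana", "2025-W41", False),
]
print(f"adoption {adoption_rate(log, eligible_users=10):.0%}, "
      f"sessions/user/week {sessions_per_user_per_week(log):.2f}, "
      f"overrides {override_rate(log):.0%}")
```

Trends matter more than single readings: error and override rates should fall over time, so in practice these functions would be computed per period and compared.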

Lagging Indicators

Enterprise impact manifests in business outcomes. Efficiency metrics include time savings (hours saved per user, per process), cost reduction (labor, error correction, delays), and throughput increase (volume processed, capacity gained). Quality metrics track error reduction (defect rate, rework rate), consistency improvement (variance reduction), and compliance improvement (violation reduction). Business metrics capture revenue impact (new revenue, retained revenue), customer satisfaction (NPS, CSAT), and employee satisfaction (engagement, retention).

ROI Calculation

Enterprise AI ROI calculation follows standard investment analysis. Benefits (annual, ongoing) include labor cost savings (hours saved × loaded cost per hour), error cost reduction (errors avoided × cost per error), revenue impact (incremental revenue attributable to AI), and capacity value (additional volume × margin per unit). Costs (initial and ongoing) cover technology (licensing, infrastructure, implementation), people (AI team, training, change management), data (preparation, ongoing maintenance), and operations (monitoring, support, governance).

The formula is straightforward: ROI = (Annual Benefits - Annual Costs) / Total Investment. High-performing organizations achieve 5:1 returns on AI investments. Average organizations achieve 3:1 returns. Below 2:1 indicates implementation problems requiring attention.
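The formula translates directly into code. The sketch below follows the benefit and cost categories listed above; every input value is hypothetical, and reading “Annual Costs” as recurring costs and “Total Investment” as the one-time initial cost is an assumption, since the formula leaves that split open.

```python
from dataclasses import dataclass


@dataclass
class Benefits:
    hours_saved: float          # per year
    loaded_cost_per_hour: float
    errors_avoided: float       # per year
    cost_per_error: float
    incremental_revenue: float  # attributable to AI, per year
    additional_volume: float    # extra units handled per year
    margin_per_unit: float

    def annual(self) -> float:
        labor = self.hours_saved * self.loaded_cost_per_hour
        errors = self.errors_avoided * self.cost_per_error
        capacity = self.additional_volume * self.margin_per_unit
        return labor + errors + self.incremental_revenue + capacity


def roi(annual_benefits: float, annual_costs: float, total_investment: float) -> float:
    """ROI = (Annual Benefits - Annual Costs) / Total Investment."""
    return (annual_benefits - annual_costs) / total_investment


# Hypothetical inputs for illustration only.
benefits = Benefits(
    hours_saved=20_000, loaded_cost_per_hour=60,
    errors_avoided=1_500, cost_per_error=200,
    incremental_revenue=250_000,
    additional_volume=10_000, margin_per_unit=15,
)
annual_costs = 400_000      # recurring: licensing, support, monitoring, data upkeep
total_investment = 900_000  # one-time: implementation, data preparation, training
print(f"Annual benefits: ${benefits.annual():,.0f}")
print(f"ROI: {roi(benefits.annual(), annual_costs, total_investment):.0%}")
```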

Part VI: Recovery Playbook

For organizations with struggling AI initiatives, recovery is possible. The 42% abandonment rate reflects premature surrender, not inevitable failure.

Diagnose the Problem

Before fixing, understand what’s broken. Technology problems mean AI performs poorly even with good data and integration – requiring different models, better training, or vendor change. Data problems mean AI has insufficient or low-quality data – requiring data preparation, pipeline improvement, and quality investment. Integration problems mean AI works in isolation but doesn’t connect to workflows – requiring integration development, API work, and middleware. Adoption problems mean AI works but people don’t use it – requiring change management, training, and workflow redesign. Governance problems mean AI works but can’t scale due to risk concerns – requiring governance development, security hardening, and compliance work.

Most struggling initiatives have multiple problems. Prioritize based on impact and feasibility.
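The five categories can be turned into a structured checklist, so that diagnosis produces a list of problems rather than a single guess. A sketch with hypothetical yes/no observations; in practice each answer would come from evidence such as evaluation results, data audits, integration reviews, and usage logs.

```python
from dataclasses import dataclass


@dataclass
class Observations:
    performs_well_on_good_data: bool  # output quality holds with clean data and integration
    data_sufficient: bool             # enough data of adequate quality
    connected_to_workflows: bool      # embedded where people actually do the work
    people_use_it: bool               # adoption at or above target
    cleared_to_scale: bool            # security, risk, and compliance sign-off


def diagnose(obs: Observations) -> list[str]:
    """Return every problem category that applies; most initiatives have several."""
    checks = [
        ("technology", obs.performs_well_on_good_data),
        ("data", obs.data_sufficient),
        ("integration", obs.connected_to_workflows),
        ("adoption", obs.people_use_it),
        ("governance", obs.cleared_to_scale),
    ]
    return [category for category, ok in checks if not ok]


print(diagnose(Observations(True, False, True, False, True)))
```

The output only names the problems; ordering them by impact and feasibility remains a judgment call.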

Reset Expectations

Failed AI initiatives often began with unrealistic expectations. Recovery requires resetting expectations based on realistic assessments: add 6-12 months to original timelines, reduce ROI projections by 30-50% initially (rebuilding as results improve), narrow scope to highest-probability use cases, and increase resources if original allocation was inadequate.
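The timeline and ROI adjustments are simple arithmetic. A minimal sketch applying them to a hypothetical original plan of 18 months and a 200% projected ROI; the function name and return shape are illustrative.

```python
def reset_expectations(original_months: int, original_roi: float
                       ) -> tuple[tuple[int, int], tuple[float, float]]:
    """Apply the recovery rules of thumb: add 6-12 months, cut ROI projections 30-50%.

    Returns ((min_months, max_months), (low_roi, high_roi)).
    """
    months = (original_months + 6, original_months + 12)
    roi_range = (original_roi * (1 - 0.50), original_roi * (1 - 0.30))
    return months, roi_range


months, roi_range = reset_expectations(original_months=18, original_roi=2.0)
print(f"Timeline: {months[0]}-{months[1]} months")
print(f"Initial ROI projection: {roi_range[0]:.0%}-{roi_range[1]:.0%}")
```

Presenting the reset as a range rather than a single number also leaves room to rebuild projections as results improve.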

Communicate reset expectations clearly. Stakeholders with realistic expectations remain supportive through challenges. Stakeholders expecting miracles become obstacles.

Narrow Focus

Struggling initiatives often fail because they’re too broad. Recovery frequently requires narrowing: from enterprise rollout to single business unit, from multiple use cases to single use case, from custom build to vendor solution, from ambitious capabilities to basic functionality. Narrow focus enables concentrated resources, faster iteration, and clearer success criteria. Success in narrow scope builds credibility and capability for eventual expansion.

Invest in Foundations

Many failed initiatives skipped foundational investments. Recovery requires backfilling. Pause AI development to address data quality, accessibility, and governance – this feels like backward progress but enables forward progress. Add training, communication, and support that was missing, addressing the human factors that impede adoption. Establish the policies, processes, and monitoring that enable confident scaling. Foundation investment delays value realization but makes value realization possible.

Declare Victory Appropriately

Recovery doesn’t require achieving original ambitious goals. It requires demonstrating value that justifies continued investment. Define achievable success criteria for the recovery phase: pilot adoption above threshold, user satisfaction above target, performance improvements demonstrated, and foundation for scaling established. Achieve those criteria, communicate success, and build momentum for the next phase.

Key Takeaways

- 95% of enterprise AI pilots fail to deliver measurable P&L impact, according to MIT Project NANDA research. The failure happens in implementation, not in technology capability.

- Buy beats build by roughly 3:1: purchasing AI from specialized vendors succeeds 67% of the time versus 22% for internal builds, yet organizations systematically choose the lower-probability path.

- The “GenAI Divide” stems from learning: organizations that make AI systems learn and adapt succeed; those deploying static systems that never improve join the 95%.

- Pilot purgatory is common: 56% of organizations take 6-18 months to move a GenAI project from intake to production, and many never complete the journey because of unclear success criteria and governance gaps.

- High performers invest 70% of AI resources in people and processes, not just technology, budgeting for change management, training, and workflow redesign alongside technical implementation.

- Executive sponsorship delivers 2.5x higher ROI through visible leadership commitment, public AI tool usage, consistent communication, and accountability for adoption.

- Data quality causes 85% of AI project failures; no amount of sophisticated modeling compensates for inadequate data. Verify quality for specific use cases, not against theoretical standards.

- 2-4 year ROI timelines are realistic for enterprise transformation, leaving room for data quality investment, organizational capability building, and natural adoption curves.

- The implementation framework spans 52 weeks across four phases: Strategic Foundation (weeks 1-6), Vendor and Technology Selection (weeks 7-12), Pilot Implementation (weeks 13-24), and Scale and Optimize (weeks 25-52).

- Recovery is possible through diagnosis (technology, data, integration, adoption, or governance problems), expectation reset, narrowed focus, foundation investment, and appropriate victory declaration.

Sources

- MIT Project NANDA (MIT Media Lab) - “The GenAI Divide: State of AI in Business 2025,” research analyzing 300 AI deployments showing 95% fail to deliver P&L impact

- McKinsey & Company - AI high performer analysis showing 6% of organizations achieve 171% ROI and 5%+ EBIT impact

- Accenture AI Maturity and Transformation - Research on executive sponsorship delivering 2.5x higher ROI

Implementation statistics, failure rates, and success patterns are current as of late 2025. Organizational circumstances vary; the framework should be adapted to the specific context.