How lead generation teams can effectively collaborate with AI systems across content creation, campaign optimization, lead scoring, and customer engagement while maintaining human oversight and quality.

The question facing lead generation operations is no longer whether to use AI but how to structure collaboration between human teams and AI systems for optimal results. Deloitte research indicates that while 23% of marketing activities currently incorporate AI, this percentage is expected to double within three years. For lead generation operators, effective human-AI collaboration determines whether AI investments produce measurable returns or become expensive experiments.

The distinction matters: AI works best when applied to specific tasks within human-directed workflows, not when treated as an autonomous replacement for human judgment. Organizations achieving measurable AI ROI consistently report that success comes from thoughtful integration – assigning appropriate tasks to AI while preserving human oversight for strategic decisions, quality control, and relationship management.

This analysis examines how lead generation operations can structure effective human-AI collaboration across five core functions: content creation, campaign optimization, lead scoring and routing, customer engagement, and strategic planning. For background on how agentic AI is transforming enterprise operations, see our detailed analysis. The framework emphasizes practical implementation rather than theoretical capability.

The Collaboration Framework

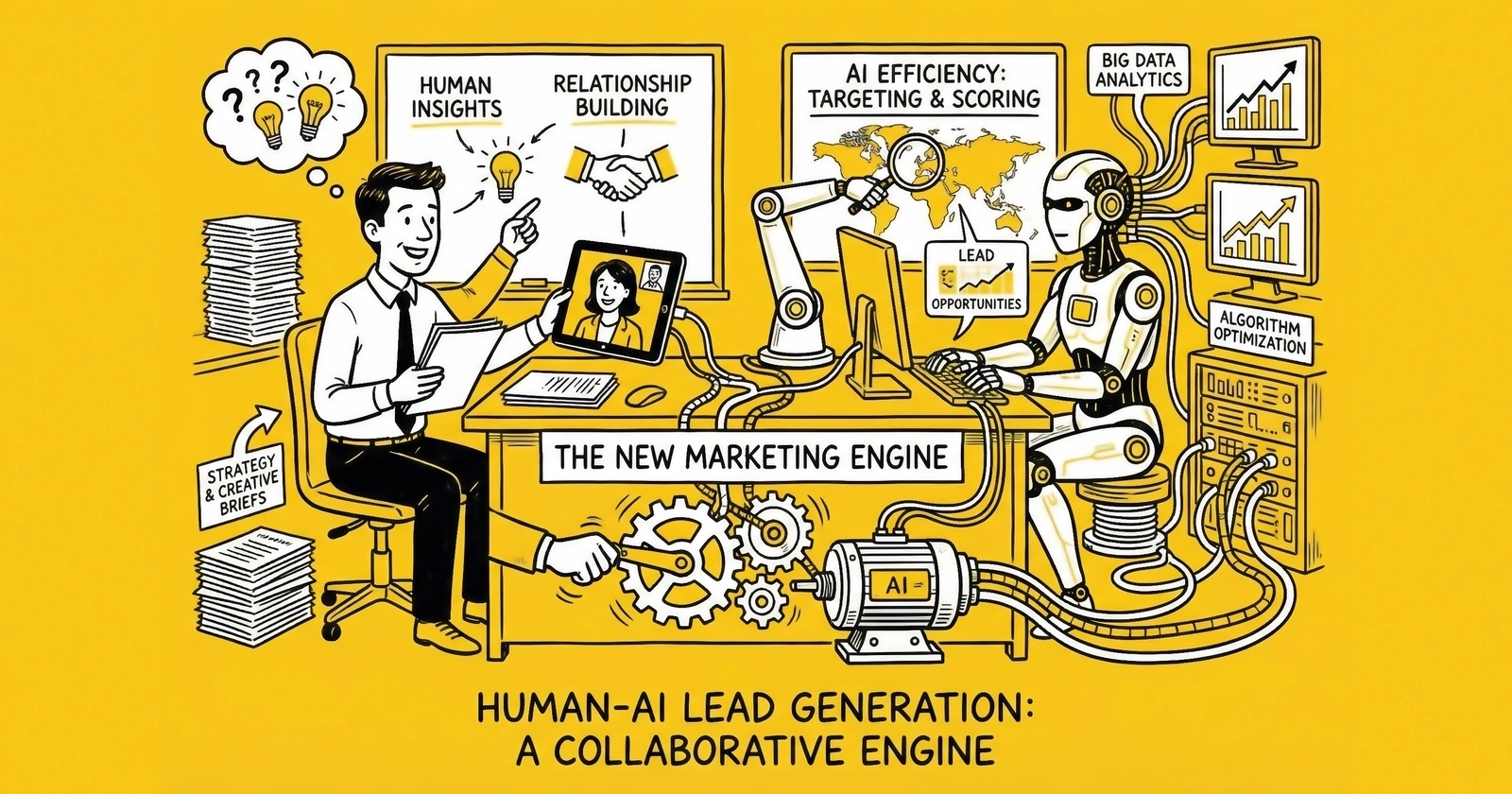

Effective human-AI collaboration requires clear understanding of what each party contributes. The framework divides work based on relative strengths:

AI Excels At:

- Processing large data volumes quickly

- Identifying patterns across multidimensional datasets

- Generating variations at scale (content, creative, messaging)

- Continuous monitoring without fatigue

- Consistent execution of defined rules

- Rapid iteration and testing

Humans Excel At:

- Strategic judgment and prioritization

- Understanding context and nuance

- Relationship building and trust development

- Ethical decision-making

- Creative conceptualization

- Quality assessment and brand protection

The collaboration model assigns tasks based on these strengths rather than defaulting all work to either party. Most effective implementations follow a pattern: AI generates, processes, or recommends; humans review, refine, and decide.

The Human-in-the-Loop Principle

The most effective AI implementations in lead generation maintain human oversight at critical decision points – what researchers call “human-in-the-loop” design. This doesn’t mean humans review every AI action; it means humans establish guardrails, monitor outcomes, and intervene when AI operates outside acceptable parameters.

For lead generation, critical human oversight points include:

- Content approval before publication

- Campaign budget threshold decisions

- Quality standards definition and enforcement

- Compliance rule establishment

- Buyer relationship management

- Strategic direction setting

AI operates within these human-defined boundaries, handling volume and velocity while humans ensure direction and quality.
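
As a minimal sketch of this principle, the check below decides whether a proposed AI action stays inside human-defined boundaries or must be escalated. The `Guardrails` fields, the action dictionary shape, and the 500.0 budget limit are illustrative assumptions, not part of any specific platform.

```python
from dataclasses import dataclass


@dataclass
class Guardrails:
    """Human-defined limits (hypothetical fields chosen for illustration)."""
    max_daily_budget: float                 # budget threshold set by a human owner
    content_requires_approval: bool = True  # content approval before publication


def needs_human_review(action: dict, rails: Guardrails) -> bool:
    """Return True when a proposed AI action falls outside the guardrails."""
    if action["type"] == "publish_content":
        return rails.content_requires_approval
    if action["type"] == "set_budget":
        return action["amount"] > rails.max_daily_budget
    # Unknown action types are escalated rather than silently allowed.
    return True


rails = Guardrails(max_daily_budget=500.0)
for proposed in (
    {"type": "set_budget", "amount": 350.0},
    {"type": "set_budget", "amount": 900.0},
    {"type": "publish_content"},
):
    print(proposed, "-> human review:", needs_human_review(proposed, rails))
```

Escalating unknown action types by default reflects the idea that AI handles volume only inside boundaries humans have explicitly defined.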

Content Creation Collaboration

Content marketing serves lead generation through landing pages, educational content, email sequences, and advertising creative. AI content generation tools promise efficiency gains – research suggests AI can produce content 50 times faster than manual creation. The collaboration challenge is capturing this efficiency while maintaining quality and brand consistency.

The Content Collaboration Workflow

Effective human-AI content collaboration follows a structured workflow:

- Phase 1: Human Direction. Humans define content requirements: topic, audience, tone, key messages, constraints, and success criteria. This strategic direction ensures AI output aligns with business objectives.

- Phase 2: AI Generation. AI produces initial drafts, variations, or outlines based on human direction. The volume advantage appears here – AI can generate multiple approaches rapidly for human evaluation.

- Phase 3: Human Review and Refinement. Humans evaluate AI output against quality standards, selecting promising approaches and providing refinement guidance. This preserves quality control while capturing efficiency.

- Phase 4: AI Iteration. AI incorporates human feedback, producing refined versions that address identified issues. The iteration cycle continues until human standards are met.

- Phase 5: Human Approval. Humans make final approval decisions before publication, ensuring brand consistency and quality standards.
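
The phases above can be sketched as a simple generate-and-review loop. This is an illustration under stated assumptions: `generate` and `review` are hypothetical callables standing in for an AI drafting tool and a human reviewer, and `max_rounds` is an arbitrary cap on iterations.

```python
from typing import Callable, Optional


def content_workflow(
    brief: str,
    generate: Callable[[str, Optional[str]], str],
    review: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Run generate/review rounds; return the approved draft, or None if none is approved."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        draft = generate(brief, feedback)  # Phases 2 and 4: AI drafts or revises
        feedback = review(draft)           # Phase 3: reviewer returns feedback, or None to approve
        if feedback is None:
            return draft                   # Phase 5: human approval
    return None                            # never approved: nothing is published


# Stand-ins for the AI tool and the human reviewer (purely illustrative).
def fake_generate(brief: str, feedback: Optional[str]) -> str:
    return f"{brief} (revised: {feedback})" if feedback else brief


def fake_review(draft: str) -> Optional[str]:
    return None if "revised" in draft else "add compliance disclaimer"


print(content_workflow("Compare home insurance quotes", fake_generate, fake_review))
```

Returning `None` when the round limit is reached keeps the default outcome "do not publish", so approval always remains an explicit human decision.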

Landing Page Content at Scale

For lead generation, landing page content benefits particularly from AI collaboration. Rather than testing three headline variations, operators can generate dozens of variations for testing. The workflow:

- Human defines the value proposition, target audience, and compliance requirements

- AI generates 20-30 headline variations, body copy alternatives, and CTA options

- Human reviews for quality, compliance, and brand fit, selecting 8-10 for testing

- AI formats approved variations for A/B testing implementation

- Human monitors test results and makes scaling decisions

This workflow produces more testing volume without sacrificing human judgment on quality and compliance.

Email Sequence Development

Lead nurturing sequences benefit from similar collaboration patterns:

- Human defines the nurturing strategy, sequence stages, and key messages

- AI drafts individual emails for each sequence stage

- Human reviews for tone, accuracy, and brand consistency

- AI generates subject line variations for testing

- Human approves final sequences and monitors performance

The human contribution focuses on strategy and quality; AI handles volume and variation.

Quality Control in AI Content

AI-generated content requires specific quality control measures:

- Factual Accuracy: AI can generate plausible but incorrect statements. Human review must verify facts, statistics, and claims before publication.

- Brand Voice Consistency: AI may drift toward generic phrasing. Human editors enforce brand voice standards that AI cannot reliably maintain.

- Compliance Review: Regulated industries (insurance, mortgage, legal) require compliance review that AI cannot perform. Human compliance officers must approve content before publication.

- Originality Verification: AI may produce content similar to training data. Human review ensures originality and avoids potential copyright issues.

Campaign Optimization Collaboration

Paid media campaigns – the primary traffic source for many lead generation operations – benefit from human-AI collaboration in bidding, targeting, and creative optimization.

Algorithmic Bidding with Human Oversight

Platform algorithms (Google, Meta, Microsoft) now handle most bidding decisions. The human role shifts from setting individual bids to defining objectives and constraints:

Human Responsibilities:

- Define campaign objectives and KPIs

- Set budget constraints and risk parameters

- Establish target CPA or ROAS thresholds

- Monitor performance against business goals

- Intervene when performance deviates significantly

AI Responsibilities:

- Real-time bid adjustments based on signals

- Audience expansion within defined parameters

- Creative rotation based on performance

- Budget allocation across ad sets

- Continuous optimization toward objectives

The collaboration succeeds when humans provide clear objectives and appropriate constraints while trusting AI to optimize within those boundaries.

Creative Testing at Scale

AI enables creative testing volume that manual processes cannot match:

- Human creates core creative concepts and brand guidelines

- AI generates variations (copy, imagery, format) within guidelines

- Platform AI tests variations and identifies winners

- Human reviews winning creative for quality and brand fit

- Human decides which winners to scale and when to refresh

This cycle produces faster creative iteration and performance improvement while maintaining human creative direction.

Anomaly Detection and Intervention

AI excels at monitoring campaign performance continuously – detecting anomalies that human monitoring would miss:

- Sudden CPL increases

- Conversion rate drops

- Budget pacing issues

- Quality score degradation

- Audience saturation signals

Effective collaboration routes anomaly alerts to human decision-makers who can investigate causes and determine appropriate responses. AI detects; humans diagnose and decide.
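
One simple way to illustrate the "AI detects" half is a z-score check on daily cost per lead (CPL). This is a sketch only: the z-score method, the threshold of 3.0, and the sample figures are assumptions for illustration, and ad platforms use their own detection logic.

```python
from statistics import mean, stdev


def cpl_anomaly(history: list[float], today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's CPL when it sits more than z_threshold standard deviations above the recent mean."""
    if len(history) < 2:
        return False  # not enough data to estimate normal variation
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu  # flat history: any increase is unusual
    return (today - mu) / sigma > z_threshold


recent_cpl = [42.0, 40.5, 43.1, 41.8, 39.9, 42.6, 41.2]  # made-up daily CPL values
if cpl_anomaly(recent_cpl, today=58.0):
    print("Alert: CPL spike detected, route to campaign owner for diagnosis")
```

The function only raises the flag. Investigating the cause and choosing a response stays with the human who receives the alert.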

Lead Scoring and Routing Collaboration

Lead scoring represents a mature AI application in lead generation – predictive models that evaluate conversion probability based on lead characteristics and behavior. Human-AI collaboration determines whether scoring improves or complicates operations.

Model Development Collaboration

Effective scoring models emerge from human-AI collaboration:

- Humans define what “good lead” means – the business criteria that indicate value

- Data teams prepare historical data connecting lead characteristics to outcomes

- AI identifies patterns predicting conversion that humans wouldn’t detect

- Humans validate that identified patterns make business sense

- AI generates scores for new leads based on validated patterns

- Humans monitor model performance and trigger retraining when needed

The collaboration balances AI pattern recognition with human business judgment – AI finds patterns, humans verify they’re meaningful. For detailed implementation approaches, see our guide on AI lead scoring with machine learning prioritization.

Dynamic Routing Decisions

AI-powered routing systems can evaluate leads against multiple buyer criteria simultaneously, optimizing across dimensions that rule-based systems cannot handle:

AI handles:

- Real-time evaluation against buyer criteria

- Predicted conversion probability per buyer

- Current buyer capacity and performance

- Dynamic pricing based on predicted value

Humans handle:

- Buyer relationship management

- Exception handling for unusual situations

- Performance review and buyer communication

- Strategic decisions about buyer mix

The collaboration enables optimization speed while preserving relationship quality that AI cannot maintain.
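
A minimal routing sketch, assuming each buyer exposes a bid, remaining capacity, and a model-predicted conversion probability. The `Buyer` fields and the ranking rule (bid multiplied by predicted conversion) are hypothetical choices for illustration. Real routing systems weigh more criteria.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Buyer:
    name: str
    bid: float                   # price the buyer pays per lead
    remaining_capacity: int      # leads the buyer can still accept today
    predicted_conversion: float  # model estimate for this lead with this buyer


def route_lead(buyers: list[Buyer]) -> Optional[Buyer]:
    """Pick the eligible buyer with the highest bid-weighted predicted conversion."""
    eligible = [b for b in buyers if b.remaining_capacity > 0]
    if not eligible:
        return None  # no eligible buyer: treat as an exception for a human to handle
    return max(eligible, key=lambda b: b.bid * b.predicted_conversion)


buyers = [
    Buyer("A", bid=40.0, remaining_capacity=5, predicted_conversion=0.10),
    Buyer("B", bid=55.0, remaining_capacity=0, predicted_conversion=0.12),
    Buyer("C", bid=35.0, remaining_capacity=3, predicted_conversion=0.15),
]
chosen = route_lead(buyers)
print(chosen.name if chosen else "escalate to human")
```

Returning `None` instead of forcing a match leaves unusual situations to the people who manage buyer relationships.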

Feedback Loop Management

Continuous model improvement requires structured feedback:

- AI scores leads at submission

- Leads route to buyers based on scores and criteria

- Buyers report conversion outcomes (or systems track them)

- AI incorporates outcome data into model retraining

- Humans review model performance metrics

- Humans decide whether to adjust scoring weights or thresholds

Human oversight ensures the feedback loop improves business outcomes, not just statistical metrics. Our sales team lead quality feedback loops guide covers how to structure this collaboration effectively.
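
As a rough sketch of the human review step in this loop, the check below compares conversion rates for high-scoring and low-scoring leads and flags the model when the gap shrinks. The 0.5 score cutoff and the 1.5 minimum lift are arbitrary assumptions, and the lead data is invented for illustration.

```python
def conversion_rate(outcomes: list[bool]) -> float:
    """Share of leads that converted (0.0 for an empty list)."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def review_needed(leads: list[tuple[float, bool]], cutoff: float = 0.5, min_lift: float = 1.5) -> bool:
    """Flag the model for human review when high scores stop predicting conversion.

    leads: (score, converted) pairs built from buyer-reported outcomes.
    """
    high = [converted for score, converted in leads if score >= cutoff]
    low = [converted for score, converted in leads if score < cutoff]
    high_rate, low_rate = conversion_rate(high), conversion_rate(low)
    if low_rate == 0:
        return high_rate == 0  # nothing converted at all: worth a look
    return high_rate / low_rate < min_lift


last_month = [(0.9, True), (0.8, True), (0.7, False), (0.6, True),
              (0.4, False), (0.3, True), (0.2, False), (0.1, False)]
print("Review scoring model:", review_needed(last_month))
```

A flag here does not retrain anything automatically. It prompts a person to decide whether weights or thresholds should change.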

Customer Engagement Collaboration

Customer engagement – support, communication, relationship management – represents AI’s most sensitive application. The collaboration challenge is capturing efficiency without sacrificing relationship quality.

Conversational AI Implementation

Chatbots and conversational AI can handle initial lead engagement, qualification questions, and routine inquiries. For implementation approaches, see our guide on ChatGPT conversational lead qualification. Effective implementation defines clear boundaries:

AI handles:

- 24/7 availability for initial engagement

- Standard qualification questions

- FAQ responses

- Appointment scheduling

- Information collection

Humans handle:

- Complex objection handling

- Relationship-critical conversations

- Complaint resolution

- High-value prospect engagement

- Situations requiring empathy or judgment

The handoff between AI and human engagement must be smooth – prospects should not experience jarring transitions that undermine trust.
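
The boundary between AI-handled and human-handled conversations can be expressed as explicit escalation rules. The sketch below is illustrative only: the keyword list, the sentiment scale (assumed to run from -1 to 1), and both thresholds are hypothetical values a team would set for itself.

```python
import re

# Hypothetical trigger words; a real list would come from the team's own escalation policy.
ESCALATION_KEYWORDS = {"complaint", "cancel", "refund", "lawyer"}


def should_hand_off(
    message: str,
    sentiment: float,
    lead_value: float,
    sentiment_floor: float = -0.3,
    high_value_threshold: float = 500.0,
) -> bool:
    """Return True when the conversation should move from the chatbot to a human agent."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    if words & ESCALATION_KEYWORDS:
        return True   # complaint resolution and similar sensitive topics
    if sentiment < sentiment_floor:
        return True   # frustrated prospect: needs empathy and judgment
    if lead_value >= high_value_threshold:
        return True   # high-value prospect engagement
    return False      # routine inquiry: the chatbot continues


print(should_hand_off("What are your hours?", sentiment=0.2, lead_value=120.0))
print(should_hand_off("I want to file a complaint.", sentiment=0.0, lead_value=120.0))
```

Keeping the rules explicit makes the handoff criteria easy for humans to audit and adjust.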

Response Assistance

AI can assist human agents rather than replacing them:

- Suggested responses for common inquiries

- Information retrieval from knowledge bases

- Real-time coaching based on conversation analysis

- Sentiment detection alerting agents to escalation needs

- Next-best-action recommendations

This assistance model preserves human relationships while improving response quality and speed.

Communication Personalization

AI enables personalization at scale – customizing communication based on recipient characteristics:

- Humans define personalization rules and constraints

- AI generates personalized content variations

- AI selects appropriate variations based on recipient data

- Humans review samples to ensure quality

- AI delivers personalized communications at scale

The collaboration produces relevant, personalized communication without requiring human customization of every message.

Strategic Planning Collaboration

Strategic decisions – market positioning, resource allocation, competitive response – represent areas where human judgment remains essential, but AI can inform better decisions.

Data-Driven Strategy Input

AI contributes to strategic planning through:

- Market Analysis: Competitive intelligence monitoring, trend identification from large datasets, opportunity sizing based on market data, and risk assessment from multiple signals.

- Performance Analysis: Historical pattern identification, attribution modeling, cohort performance comparison, and predictive forecasting.

- Scenario Modeling: What-if analysis at scale, sensitivity testing, resource allocation optimization, and risk scenario evaluation.

Humans synthesize AI-generated insights with business context, competitive understanding, and strategic judgment to make decisions.

Planning Process Integration

Effective strategic planning integrates AI analysis:

- AI prepares market analysis, performance data, and trend reports

- Humans interpret data in business context

- AI generates scenario projections based on human assumptions

- Humans evaluate scenarios and select strategic direction

- AI develops detailed execution plans based on strategic direction

- Humans refine plans and allocate resources

The process captures AI analytical capability while preserving human strategic judgment.

Implementation Considerations

Successfully implementing human-AI collaboration requires attention to several operational factors.

Change Management

Teams accustomed to traditional workflows may resist AI integration. Effective change management:

- Demonstrates AI value through quick wins

- Provides training on collaboration workflows

- Addresses concerns about job displacement

- Celebrates early successes

- Iterates based on team feedback

Quality Assurance

AI collaboration requires new quality assurance approaches:

- Sampling review of AI-generated content

- Outcome monitoring for AI decisions

- Escalation triggers for AI anomalies

- Periodic audits of AI performance

- Feedback mechanisms for quality issues
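
Sampling review, the first item above, can be as simple as drawing a random subset of AI-generated items for a human to check. In this sketch the 10% rate and the minimum of five items are assumed defaults, not recommended values.

```python
import math
import random
from typing import Optional


def sample_for_review(item_ids: list[str], rate: float = 0.1, minimum: int = 5,
                      seed: Optional[int] = None) -> list[str]:
    """Pick a random subset of AI-generated items for human quality review."""
    if not item_ids:
        return []
    # Review at least `minimum` items, but never more than exist.
    k = min(len(item_ids), max(minimum, math.ceil(len(item_ids) * rate)))
    return random.Random(seed).sample(item_ids, k)


generated = [f"headline-{i}" for i in range(120)]  # made-up item identifiers
to_review = sample_for_review(generated, seed=7)
print(len(to_review), "items queued for human review")
```

Passing a `seed` makes a given audit sample reproducible, which can help when a periodic audit needs to show which items were checked.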

Governance and Compliance

Regulated industries require specific AI governance:

- Audit trails for AI decisions

- Explainability of AI recommendations

- Compliance review of AI-generated content

- Override capability for human intervention

- Regulatory alignment verification

Skill Development

Human-AI collaboration requires new skills:

- Prompt engineering for effective AI direction

- Quality assessment of AI output

- Workflow design for human-AI integration

- Data interpretation of AI analytics

- Technology management for AI tools

Organizations should invest in skill development alongside AI tool implementation.

Measuring Collaboration Effectiveness

Effective human-AI collaboration should produce measurable improvements:

- Efficiency Metrics: Content production volume increase, campaign optimization speed improvement, response time reduction, and cost per output decrease.

- Quality Metrics: Content quality scores (engagement, conversion), campaign performance improvement, lead scoring accuracy, and customer satisfaction maintenance.

- Team Metrics: Employee satisfaction with AI tools, skill development progress, collaboration workflow adoption, and issue resolution speed.

The goal is efficiency gains without quality degradation – AI should help teams do more good work, not produce more mediocre work.

Case Patterns: Collaboration in Practice

Understanding how human-AI collaboration works in actual operations helps guide implementation.

The Content Velocity Transformation

A lead generation operator struggled to test landing page variations at sufficient velocity. With two content staff, they could produce and test perhaps 10 headline variations monthly across multiple verticals.

Implementation: Introduced AI content generation with human review workflow. Human content staff defined requirements for each vertical – value propositions, compliance constraints, tone guidelines. AI generated 50+ variations per request. Humans reviewed, selecting top performers for testing and providing feedback to improve future generation.

Results: Testing velocity increased from 10 to 100+ variations monthly. Winning content identification accelerated. Human staff time shifted from drafting to strategic direction and quality review. Initial concerns about quality proved unfounded – human review maintained standards while AI handled volume.

Key Insight: The collaboration didn’t reduce human importance; it amplified human impact by multiplying output while preserving judgment at critical points.

The Campaign Optimization Shift

An insurance lead operator ran campaigns manually – individual bid adjustments, daily budget reallocations, keyword modifications based on human analysis. As campaign complexity grew, manual optimization couldn’t keep pace.

Implementation: Shifted to AI-optimized campaigns (Meta Advantage+, Google Performance Max) with human oversight focused on objective setting, constraint definition, and outcome monitoring. Humans defined target CPL, quality minimums, and budget guardrails. AI handled bidding, targeting, and real-time optimization within these constraints.

Results: Campaign performance improved 25% within 60 days. Human time freed from bid management enabled focus on creative testing, strategic planning, and buyer relationship management. Initial discomfort with reduced control gave way to appreciation of better outcomes.

Key Insight: Humans became more effective by defining objectives and constraints rather than making individual optimization decisions AI could handle better.

The Lead Scoring Refinement

A home services operator used rule-based lead scoring that staff felt was outdated but lacked resources to rebuild systematically.

Implementation: Deployed AI lead scoring with human validation workflow. AI analyzed historical data to identify conversion-predicting patterns. Humans reviewed AI-identified patterns for business sense – validating some, rejecting others that reflected correlation without causation. Ongoing human review of edge cases and scoring disputes provided continuous model feedback.

Results: Lead-to-conversion rates improved 15%. Buyer satisfaction increased as they received higher-intent leads. Disputes decreased. Importantly, human oversight caught several patterns AI identified that would have created compliance or fairness issues – the collaboration prevented AI from optimizing toward problematic criteria.

Key Insight: AI found patterns that humans could not have detected manually given the data volume, but humans prevented AI from implementing patterns that made statistical but not business sense.

Common Implementation Challenges

Human-AI collaboration implementations face predictable challenges worth anticipating.

Over-Automation Without Oversight

Some implementations push too much work to AI without adequate human oversight, creating quality and compliance risks.

Symptoms: Published content that doesn’t match brand voice. Campaign decisions that violate business constraints. Scoring that creates compliance concerns. Customer interactions that damage relationships.

Prevention: Build human review into workflows as a non-negotiable step. Start with more human oversight than you think necessary; reduce as confidence builds. Monitor quality metrics to detect automation problems.

Under-Utilization Due to Distrust

Other implementations deploy AI tools that teams don’t actually use, wasting investment on theoretical capability.

Symptoms: AI tools purchased but not integrated into workflows. Teams doing work manually that AI could handle. Resistance to AI recommendations even when data supports them.

Prevention: Demonstrate AI value through quick wins. Involve teams in workflow design. Address concerns directly rather than dismissing them. Provide training that builds confidence.

Misaligned Expectations

Organizations sometimes expect AI to solve problems it can’t address or to work without the human input it requires.

Symptoms: Frustration that AI isn’t “smarter.” Disappointment in AI output quality without recognizing that quality depends on human direction. Unrealistic timelines for AI impact.

Prevention: Set realistic expectations from the start. Emphasize that AI augments rather than replaces human capability. Demonstrate that AI quality depends on human input quality. Plan for gradual improvement rather than immediate transformation.

Workflow Friction

Poorly designed collaboration workflows create friction that slows work rather than accelerating it.

Symptoms: Multiple handoffs that slow progress. Unclear responsibilities between AI and human tasks. Bottlenecks at human review points. Tool switching that interrupts flow.

Prevention: Design workflows with efficiency in mind. Minimize handoffs and context switches. Clarify responsibilities explicitly. Streamline tools and interfaces. Iterate on workflows based on user feedback.

Building AI-Ready Teams

Effective collaboration requires teams with appropriate skills and mindsets.

Essential Team Capabilities

- Prompt Engineering: Ability to communicate effectively with AI systems – providing clear direction, constraints, and context that produces useful output. This skill affects AI output quality directly.

- Quality Assessment: Ability to evaluate AI output critically – recognizing both good and problematic content, identifying patterns that need correction, providing feedback that improves future generation.

- Workflow Design: Ability to structure human-AI collaboration effectively – determining task division, designing review processes, optimizing for both efficiency and quality.

- Outcome Monitoring: Ability to monitor AI-influenced outcomes and detect problems – recognizing when AI decisions aren’t producing expected results, identifying patterns in errors, knowing when to intervene.

Skill Development Approaches

- Training Programs: Structured training on AI tools, prompt engineering, and collaboration workflows. Training should be practical rather than theoretical.

- Learning by Doing: Encourage experimentation with AI tools on low-stakes tasks before applying to production work. Experience builds confidence and capability.

- Cross-Team Learning: Share successes and failures across teams. What one team learns about effective AI collaboration benefits others.

- External Resources: Supplement internal training with external courses, workshops, and communities focused on AI collaboration skills.

Team Structure Considerations

- Centralized Expertise: Consider establishing an AI expertise center that supports teams across the organization – sharing best practices, developing tools, and providing training.

- Distributed Capability: Ensure every team has members capable of effective AI collaboration, not just a small group of specialists.

- Clear Roles: Define who handles AI direction, who reviews output, who monitors outcomes. Ambiguous responsibility creates gaps.

Integration with Existing Operations

AI collaboration must integrate with existing tools, processes, and organizational structures.

Tool Integration

CRM Systems: Connect AI-generated insights with CRM data. AI scoring should flow into systems teams already use rather than requiring separate interfaces.

Marketing Platforms: Integrate AI content generation with existing content management and publishing workflows. AI creative should flow into campaign management tools smoothly.

Communication Tools: Enable AI assistance within communication channels teams use – Slack, email, collaboration platforms. Minimize tool switching.

Process Integration

Review Workflows: Build AI collaboration into existing review and approval processes rather than creating parallel workflows.

Reporting Structures: Include AI-related metrics in existing reporting dashboards. Don’t create separate AI performance tracking that competes for attention.

Quality Systems: Integrate AI output into existing quality assurance processes rather than treating AI work as separate from human work.

Organizational Integration

Department Boundaries: AI collaboration often crosses department boundaries – marketing, technology, operations. Clarify ownership and coordination mechanisms.

Budget Allocation: Determine how AI tool costs and collaboration development are budgeted across teams or centrally.

Success Metrics: Establish how AI collaboration success contributes to team and individual performance evaluation.

Key Takeaways

- Effective human-AI collaboration assigns tasks based on relative strengths: AI excels at processing volume, identifying patterns, and consistent execution; humans excel at strategic judgment, relationship building, and quality assessment.

- The human-in-the-loop principle maintains human oversight at critical decision points including content approval, budget decisions, quality standards, compliance rules, and strategic direction – while AI operates within human-defined boundaries.

- Content creation collaboration follows a five-phase workflow: human direction, AI generation, human review, AI iteration, and human approval – capturing AI efficiency while maintaining quality control.

- Campaign optimization shifts human responsibility from individual bid decisions to objective definition and constraint setting, trusting platform AI to optimize within defined parameters while humans monitor business outcomes.

- Lead scoring collaboration balances AI pattern recognition with human business judgment: AI identifies conversion-predicting patterns from data, humans validate that patterns make business sense, and ongoing feedback loops require human oversight to ensure business outcome improvement.

- Conversational AI requires clear boundary definition between AI-handled interactions (standard questions, scheduling, information collection) and human-handled situations (complex objections, complaints, high-value prospects, empathy-requiring conversations).

- Strategic planning integrates AI analytical capability with human judgment: AI prepares analysis, identifies trends, and models scenarios; humans interpret data in business context, evaluate options, and make strategic decisions.

- Quality assurance for AI collaboration requires new approaches: sampling review of AI output, outcome monitoring for AI decisions, escalation triggers for anomalies, and periodic performance audits.

- Change management determines collaboration success. Teams need training on new workflows, demonstration of AI value through quick wins, and assurance that AI augments rather than replaces human contributions.

- Measure collaboration effectiveness through both efficiency and quality metrics. The goal is enabling teams to do more good work – volume increases that sacrifice quality represent collaboration failure, not success.

Frequently Asked Questions

How do we decide which tasks to assign to AI versus humans?

Evaluate tasks across three dimensions: volume (high-volume tasks benefit most from AI), consistency (tasks requiring consistent execution suit AI), and judgment (tasks requiring nuanced judgment require humans). Content generation, data processing, and pattern recognition favor AI. Strategic decisions, quality assessment, and relationship management favor humans. Most tasks benefit from collaboration – AI handles volume and initial processing while humans provide direction and approval. Start by identifying your team’s highest-volume tasks and evaluate whether AI can handle initial work while humans review and refine.

What happens when AI makes mistakes in lead scoring or routing?

AI mistakes are inevitable and require systematic response. First, implement monitoring that detects performance degradation – conversion rates, buyer satisfaction, return rates – that signals model problems. Second, establish escalation triggers that alert human reviewers when metrics exceed thresholds. Third, maintain override capability for human intervention when AI decisions seem wrong. Fourth, use mistakes as training data to improve models over time. The goal isn’t preventing all AI mistakes but detecting them quickly, limiting impact, and learning from them. Organizations with mature AI operations accept that imperfect AI still outperforms no AI when properly monitored.

How do we maintain brand voice consistency when AI generates content?

Brand voice consistency requires structured inputs and vigilant review. First, develop detailed brand voice guidelines that AI can reference – tone descriptors, example phrases, prohibited terms. Second, include brand context in AI prompts for every content generation request. Third, establish human review as mandatory before any AI content publishes. Fourth, create feedback loops where human editors flag voice inconsistencies and these become training examples. Fifth, consider fine-tuning AI models on your approved content if volume justifies the investment. No AI maintains brand voice perfectly without human oversight, so build review into the workflow rather than expecting AI to handle voice independently.

How do we address team concerns about AI replacing their jobs?

Address concerns directly and honestly. First, acknowledge that AI changes work but emphasize that your implementation augments rather than replaces human contribution. Second, demonstrate how AI handles tedious work while creating opportunity for humans to focus on higher-value activities. Third, invest in skill development that makes team members more valuable with AI tools – prompt engineering, quality assessment, strategic direction. Fourth, involve team members in AI implementation decisions so they shape how AI integrates with their work. Fifth, celebrate successes that demonstrate human-AI collaboration producing better results than either alone. The teams most threatened by AI are those without AI skills; develop your team’s capabilities rather than eliminating positions.

What governance is required for AI in regulated industries like insurance or mortgage leads?

Regulated industries require specific AI governance structures. First, establish audit trails documenting AI decisions affecting consumers – lead scoring, routing, communication. Second, ensure explainability of AI recommendations so compliance officers can understand and verify decision logic. Third, maintain human review requirements for consumer-facing AI content before publication. Fourth, implement override capability ensuring humans can intervene in AI decisions when necessary. Fifth, verify that AI applications comply with industry regulations (TCPA, state insurance rules, RESPA) before deployment. Sixth, conduct periodic audits of AI performance against compliance requirements. Document everything – regulators increasingly expect organizations to demonstrate AI governance.

How do we measure ROI from human-AI collaboration investments?

Measure ROI across multiple dimensions. Efficiency gains include content production volume (before vs. after), campaign optimization speed, response time improvements, and cost per output. Quality maintenance tracks conversion rates, customer satisfaction, error rates, and compliance incidents to ensure efficiency gains don’t sacrifice quality. Skill development measures team capability growth and workflow adoption rates. Calculate investment including AI tool costs, training time, workflow development, and ongoing management. Compare total costs against combined efficiency and quality outcomes. Most organizations find that AI ROI appears gradually as workflows mature – expect 6-12 months before substantial measurable returns.
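The cost-versus-outcome comparison described above can be sketched as simple arithmetic. The example below is a minimal illustration, assuming you can put a dollar value on efficiency and quality outcomes for the period; the `RoiInputs` class, its field names, and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    """Costs and estimated outcome values for one period (all hypothetical)."""
    tool_costs: float
    training_costs: float
    workflow_costs: float
    management_costs: float
    efficiency_value: float   # e.g. value of hours saved, extra output
    quality_value: float      # e.g. conversion lift; negative if quality slipped


def total_investment(x: RoiInputs) -> float:
    """Sum the four cost categories named in the text."""
    return x.tool_costs + x.training_costs + x.workflow_costs + x.management_costs


def roi(x: RoiInputs) -> float:
    """Return (benefit - cost) / cost as a fraction, so 0.5 means 50%."""
    cost = total_investment(x)
    benefit = x.efficiency_value + x.quality_value
    return (benefit - cost) / cost


# Illustrative figures only.
example = RoiInputs(
    tool_costs=12_000,
    training_costs=8_000,
    workflow_costs=5_000,
    management_costs=5_000,
    efficiency_value=36_000,
    quality_value=9_000,
)
print(f"Investment: {total_investment(example):,.0f}")
print(f"ROI: {roi(example):.0%}")
```

Because `quality_value` can be negative, an efficiency gain that comes with lower conversion rates or more compliance incidents reduces the result rather than being hidden by it.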

Should small lead generation operations invest in human-AI collaboration?

Yes, with appropriate scope. Small operations often benefit most from AI collaboration because they lack resources for large teams. Start with high-impact, low-complexity applications: AI-assisted content drafting, automated campaign monitoring alerts, chatbot handling of after-hours inquiries. Use accessible tools (ChatGPT, Claude, built-in platform AI) rather than building custom systems. Focus on augmentation that extends small team capacity rather than automation that requires significant oversight infrastructure. The key for small operations is choosing applications where AI handles volume while limited human resources focus on direction and quality. A three-person team with effective AI collaboration can match the output of a ten-person team without AI.

How does AI collaboration affect competitive dynamics in lead generation?

AI collaboration is becoming table stakes – operators without effective human-AI collaboration increasingly compete at a disadvantage against those who have it. The competitive differentiation shifts from whether you use AI to how effectively you use it. Operators with mature AI collaboration produce more content, test more variations, respond faster, and optimize more effectively than those still operating manually. The gap compounds over time as learning accelerates performance. For competitive positioning, develop AI collaboration capability before competitors in your market reach maturity – the learning curve advantage matters.

What AI tools should lead generation operators prioritize?

Prioritize tools that address your highest-volume, highest-impact tasks. For most operators: content generation tools (ChatGPT, Claude, Jasper) for landing pages and creative at scale; platform AI (Meta Advantage+, Google Performance Max) for campaign optimization; CRM-integrated AI for lead scoring and routing; and chatbot platforms for customer engagement. Avoid tools that solve problems you don’t have or require capabilities you don’t possess. Start with accessible, well-supported tools before considering custom development. The best AI tool is the one your team will actually use effectively.

How do we prevent AI collaboration from creating skill atrophy?

Skill atrophy occurs when teams offload tasks to AI without maintaining underlying capability. Prevention requires intentional design. Rotate team members through AI-assisted and manual tasks so skills stay sharp. Require understanding of AI output rather than blind acceptance – team members should be able to explain why AI recommendations make sense. Build training time into workflows where team members learn from AI patterns rather than just accepting them. Periodically execute tasks manually to validate AI output and maintain capability. The goal is AI augmentation that amplifies human skills, not AI replacement that erodes them.

What governance structures support effective human-AI collaboration?

Governance for human-AI collaboration includes several elements. Clear decision authority defines which decisions AI can make autonomously and which require human approval. Quality standards specify acceptable AI output and review requirements. Escalation procedures define when human intervention is required. Audit capability enables retrospective review of AI decisions. Accountability assignment clarifies who is responsible for AI-influenced outcomes. Continuous monitoring tracks AI performance against standards. For lead generation specifically, compliance review of AI-generated content and AI-influenced decisions is essential. Governance should enable AI efficiency while maintaining human oversight of consequential decisions.

How do we build organizational AI readiness for human-AI collaboration?

Organizational AI readiness requires attention to multiple dimensions. Technical readiness includes data quality, integration capability, and infrastructure that supports AI tools. Skill readiness includes team capability to direct AI, evaluate output, and integrate AI into workflows – this often requires training investment. Cultural readiness includes organizational willingness to trust AI recommendations, adapt workflows, and accept new working patterns. Process readiness includes defined workflows, clear handoffs, and governance structures that accommodate AI involvement. Leadership readiness includes executive understanding of AI capability and limitations, appropriate expectations, and sustained commitment to capability building. Most organizations underestimate the change management required for effective AI collaboration and over-focus on tool selection.

How do we handle situations where AI and human judgment conflict?

When AI recommendations conflict with human judgment, the resolution depends on the stakes and evidence. For low-stakes decisions with good AI performance data, trust AI – humans often overweight intuition relative to data-driven recommendations. For high-stakes decisions or situations AI hasn’t encountered before, trust human judgment – AI may not understand context that humans recognize. For recurring conflicts, investigate the source – either AI models need refinement or human assumptions need updating. The collaborative model assumes humans set boundaries and AI operates within them; when conflict arises, humans retain decision authority while using conflicts as learning opportunities.
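The resolution rules above can be written down as a small decision function, which some teams may find useful as a shared reference. This is a sketch, not a prescribed policy: the function names, the three boolean inputs, and the recurrence threshold are hypothetical, and the fallback to human judgment when a low-stakes decision has no AI performance data is an assumption added here, chosen because humans retain decision authority in the collaborative model.

```python
from enum import Enum


class Resolution(Enum):
    FOLLOW_AI = "follow_ai"
    FOLLOW_HUMAN = "follow_human"


def resolve_conflict(
    high_stakes: bool,
    novel_situation: bool,
    ai_has_track_record: bool,
) -> Resolution:
    """Pick whose judgment to follow when AI and a human disagree."""
    # High stakes or unfamiliar territory: the human may see context AI lacks.
    if high_stakes or novel_situation:
        return Resolution.FOLLOW_HUMAN
    # Low stakes with good AI performance data: follow the recommendation.
    if ai_has_track_record:
        return Resolution.FOLLOW_AI
    # Assumption: with no performance data, default to human authority.
    return Resolution.FOLLOW_HUMAN


def needs_investigation(conflict_count: int, threshold: int = 3) -> bool:
    """Flag recurring conflicts so the source can be examined.

    The threshold of 3 is an arbitrary illustrative value.
    """
    return conflict_count >= threshold


print(resolve_conflict(high_stakes=False, novel_situation=False,
                       ai_has_track_record=True).value)
print(resolve_conflict(high_stakes=True, novel_situation=False,
                       ai_has_track_record=True).value)
```

Whatever the function returns, a flagged recurring conflict is a prompt to check whether the model needs refinement or the human assumption needs updating.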

Sources

- Deloitte Digital. “Human-AI Marketing Collaboration: GenAI in Marketing Operations.” https://www.deloittedigital.com/us/en/insights/research/genai-human-marketing-operations.html

- McKinsey & Company. “The State of AI in 2025.” https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

- Boston Consulting Group. “What CEOs Should Look For in an AI-First CMO.” https://www.bcg.com/publications/2025/what-ceos-should-look-for-in-an-ai-first-cmo

- Gartner. “CMOs’ Top Challenges & Priorities For 2026.” https://www.gartner.com/en/newsroom/press-releases/2025-12-04-cmos-top-challenges-and-priorities-for-2026

- Content Marketing Institute. “B2B Content and Marketing Trends: Insights for 2026.” https://contentmarketinginstitute.com/b2b-research/b2b-content-marketing-trends-research

- Forrester Research. “Predictions 2026: B2B Marketing, Sales, And Product.” https://www.forrester.com/press-newsroom/forrester-b2b-marketing-sales-product-2026-predictions/