Vercel reports 10% of signups now come from ChatGPT. 600+ websites have adopted the llms.txt standard. Yet most lead generation companies haven’t configured a single line of AI crawler optimization – leaving their content invisible to the systems driving 527% traffic growth.

The conversation about AI visibility typically focuses on content strategy: what to write, how to structure it, which topics to target. But beneath every piece of content sits a technical infrastructure that determines whether AI systems can access it at all. Before a language model can cite your TCPA compliance guide or reference your lead scoring methodology, its crawlers must successfully retrieve your content, parse its structure, and store it in retrievable formats.

This technical layer – comprising crawler directives, server performance, rendering approaches, and emerging standards like llms.txt – operates invisibly to most marketing teams. Yet it determines which content enters AI training datasets, which pages get retrieved during real-time searches, and ultimately which lead generation companies appear when buyers ask AI assistants for recommendations.

The gap between companies optimizing at this level and those ignoring it grows wider every month. As AI-referred traffic increases and more buying decisions flow through language models, the technical foundations of AI discoverability become competitive infrastructure rather than optional enhancement. Within the broader lead generation industry, this technical infrastructure plays an increasingly critical role.

The Emerging AI Crawler Ecosystem

Understanding AI Crawler Architecture

Traditional search engines send crawlers (like Googlebot) to discover and index web content. AI companies operate multiple crawler types with distinct purposes, access patterns, and behaviors.

| Company | Training Crawler | Search Crawler | User Crawler | Primary Purpose |
|---|---|---|---|---|
| OpenAI | GPTBot | OAI-SearchBot | ChatGPT-User | Model training, web search, live browsing |
| Anthropic | ClaudeBot | Claude-SearchBot | Claude-User | Model training, search features |
| Perplexity | PerplexityBot | – | Perplexity-User | Web indexing, real-time retrieval |

Training crawlers collect content to build the foundational knowledge embedded in language models. This content influences what the model “knows” without requiring real-time retrieval. Getting included in training datasets provides persistent visibility – your content becomes part of the model’s baseline knowledge.

Search crawlers operate during real-time information retrieval. When users ask questions requiring current information, these crawlers fetch relevant content to augment model responses. This is retrieval-augmented generation (RAG) in action.

User crawlers execute when users share links or request that the AI browse specific pages. These represent direct content access rather than systematic crawling.

How AI Crawlers Differ from Search Engine Crawlers

AI crawlers and traditional search crawlers share fundamental mechanics but differ in critical ways:

Time Budget Sensitivity

Search engine crawlers like Googlebot allocate “crawl budget” based on site authority, update frequency, and server response quality. AI crawlers operate under stricter time constraints – they’re often processing content for real-time responses where users expect immediate answers.

A page that loads in 3 seconds might be acceptable for search indexing but could be skipped entirely by AI crawlers operating under tighter latency requirements.

Content Processing Depth

Search engines primarily extract text, links, and metadata for ranking algorithms. AI crawlers process content for semantic understanding – they need to comprehend meaning, context, relationships, and nuance to incorporate content into language model responses.

This makes content structure more important for AI crawlers. A well-organized page with clear hierarchy enables better semantic extraction than a poorly structured page with identical information.

JavaScript Rendering Limitations

Most AI crawlers do not execute JavaScript. Content loaded via client-side JavaScript – including many modern single-page application architectures – may be completely invisible to AI systems.

A lead generation company using React to dynamically load their compliance documentation could have zero AI visibility despite extensive content. Server-side rendering isn’t optional for AI discoverability – it’s mandatory.

Crawler Identification

AI crawlers typically identify themselves via User-Agent strings, enabling selective access control. However, identification patterns vary:

# OpenAI crawlers

GPTBot/1.0 (+https://openai.com/gptbot)

OAI-SearchBot/1.0 (+https://openai.com/searchbot)

ChatGPT-User/1.0 (+https://openai.com/bot)

# Anthropic crawlers

ClaudeBot/1.0 (+http://anthropic.com/claude-bot)

Claude-SearchBot/1.0 (+http://anthropic.com/claude-searchbot)

# Perplexity crawlers

PerplexityBot/1.0 (+https://perplexity.ai/perplexitybot)

Understanding these identifiers enables granular access control: allowing search crawlers while restricting training crawlers, or permitting all crawlers while rate-limiting specific user agents.
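As a rough illustration of how these identifiers might be used in practice, the following Python sketch maps a raw User-Agent header to a crawler token, company, and role. The `AI_CRAWLERS` mapping and the `classify_user_agent` helper are hypothetical names introduced here, the role labels are shorthand for the table columns above, and the substring match is an assumption: real User-Agent strings can be spoofed, so this is a starting point rather than verification.

```python
from typing import Optional, Tuple

# Product tokens come from the crawler table in this article.
# The role labels are this sketch's own shorthand, not official terms.
AI_CRAWLERS = {
    "GPTBot": ("OpenAI", "training"),
    "OAI-SearchBot": ("OpenAI", "search"),
    "ChatGPT-User": ("OpenAI", "user"),
    "ClaudeBot": ("Anthropic", "training"),
    "Claude-SearchBot": ("Anthropic", "search"),
    "Claude-User": ("Anthropic", "user"),
    "PerplexityBot": ("Perplexity", "indexing"),
    "Perplexity-User": ("Perplexity", "user"),
}


def classify_user_agent(user_agent: str) -> Optional[Tuple[str, str, str]]:
    """Return (token, company, role) for a known AI crawler, else None."""
    ua = user_agent.lower()
    # Check longer tokens first so a long name is never shadowed by a short one.
    for token in sorted(AI_CRAWLERS, key=len, reverse=True):
        if token.lower() in ua:
            company, role = AI_CRAWLERS[token]
            return token, company, role
    return None


if __name__ == "__main__":
    print(classify_user_agent("GPTBot/1.0 (+https://openai.com/gptbot)"))
    print(classify_user_agent("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))
```

A classifier like this could feed rate limiting or log segmentation, with each crawler role handled according to your access strategy.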

Technical Performance Requirements for AI Access

Server Response Optimization

AI crawlers operate under strict time constraints. Performance optimization directly impacts crawling success and depth.

| Metric | Target | Impact on AI Crawlers |
|---|---|---|
| Time to First Byte (TTFB) | Under 200ms | Critical for crawler time budgets |
| Largest Contentful Paint (LCP) | Under 2.5s | 40% increase in crawler visits |
| First Input Delay (FID) | Under 100ms | Improved crawler completion rates |
| Cumulative Layout Shift (CLS) | Under 0.1 | Better content extraction accuracy |

TTFB under 200ms represents the most critical threshold. AI crawlers that encounter slow initial responses may abandon requests entirely, never retrieving the content regardless of its quality.

For lead generation companies, this means server infrastructure directly impacts AI visibility. A compliance guide hosted on shared hosting with 800ms TTFB may never enter AI training datasets while a competitor’s equivalent content on optimized infrastructure gets consistently included.
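To check a page against the 200ms threshold discussed above, a minimal Python sketch using only the standard library might look like the following. The function names and the `ttfb-check/0.1` User-Agent are hypothetical, and the measurement is an approximation: it includes DNS lookup, connection setup, and TLS negotiation from wherever the script runs, which will differ from what a crawler in another region experiences.

```python
import http.client
import time
from urllib.parse import urlsplit

TTFB_TARGET_MS = 200.0  # threshold used in this article


def measure_ttfb_ms(url: str, user_agent: str = "ttfb-check/0.1",
                    timeout: float = 10.0) -> float:
    """Approximate TTFB: elapsed time until the status line and headers arrive."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    start = time.perf_counter()
    try:
        conn.request("GET", path, headers={"User-Agent": user_agent})
        conn.getresponse()  # returns once the response headers have been read
        return (time.perf_counter() - start) * 1000.0
    finally:
        conn.close()


def meets_ttfb_target(ttfb_ms: float, target_ms: float = TTFB_TARGET_MS) -> bool:
    """True when the measured TTFB is strictly under the target."""
    return ttfb_ms < target_ms


if __name__ == "__main__":
    ttfb = measure_ttfb_ms("https://www.example.com/")
    print(f"{ttfb:.0f} ms, within target: {meets_ttfb_target(ttfb)}")
```

Running the check several times and from more than one region gives a more representative picture than a single sample.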

Infrastructure Recommendations

Content Delivery Networks (CDNs)

CDN deployment reduces TTFB by serving content from geographically distributed edge locations. For AI crawlers originating from various global locations, CDN coverage ensures consistent performance regardless of crawler origin.

Major AI companies operate crawlers from cloud infrastructure in multiple regions. A lead generation company with US-only hosting may see degraded crawler performance from European or Asian crawler nodes.

Caching Strategies

Implement aggressive caching for content that doesn’t change frequently:

# Example cache headers for static content

Cache-Control: public, max-age=31536000

# For semi-dynamic content

Cache-Control: public, max-age=86400, stale-while-revalidate=3600

AI crawlers benefit from cached responses: they receive content faster, and your servers handle crawler load more efficiently.
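To make the header values above easier to audit, here is a small Python sketch that splits a Cache-Control value into directives and converts `max-age` to days. Both helper names are hypothetical, and the comma-splitting approach is a simplification that assumes no quoted values containing commas.

```python
from typing import Dict, Optional


def parse_cache_control(header_value: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control value into {directive: value or None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in header_value.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') if sep else None
    return directives


def max_age_days(header_value: str) -> Optional[float]:
    """Return max-age expressed in days, or None if the directive is absent."""
    value = parse_cache_control(header_value).get("max-age")
    return int(value) / 86400 if value is not None else None


if __name__ == "__main__":
    print(max_age_days("public, max-age=31536000"))  # one year of caching
    print(parse_cache_control("public, max-age=86400, stale-while-revalidate=3600"))
```

The static-content example works out to 365 days and the semi-dynamic example to one day, with a further hour in which a stale copy may be served while revalidating.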

Server-Side Rendering (SSR)

Since most AI crawlers don’t execute JavaScript, server-side rendering ensures all content is accessible:

<!-- Content rendered server-side is visible to AI crawlers -->

<article>

<h1>TCPA Compliance Guide</h1>

<p>Complete overview of Telephone Consumer Protection Act requirements...</p>

</article>

<!-- Content requiring JavaScript execution may be invisible -->

<div id="content-placeholder"></div>

<script>loadContent('/api/tcpa-guide')</script>

For lead generation sites built with JavaScript frameworks (React, Vue, Angular), ensure critical content paths use SSR or static site generation, for example through Next.js or Nuxt, rather than client-side rendering.
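One way to approximate what a non-JavaScript crawler receives is to extract the text present in the raw HTML response and check whether a key phrase appears. The Python sketch below does this with the standard library's `html.parser`; the class and function names are hypothetical, and it assumes you already have the raw HTML as a string (for example from an HTTP client that does not run scripts).

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collects text nodes, ignoring anything inside <script> or <style>."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def text_without_js(raw_html: str) -> str:
    """Return the text a client sees if it never executes JavaScript."""
    parser = _VisibleText()
    parser.feed(raw_html)
    parser.close()
    return " ".join(parser.chunks)


def is_visible_without_js(raw_html: str, phrase: str) -> bool:
    """Case-insensitive check that a phrase appears in the static HTML text."""
    return phrase.lower() in text_without_js(raw_html).lower()


if __name__ == "__main__":
    ssr = "<article><h1>TCPA Compliance Guide</h1><p>Overview...</p></article>"
    csr = "<div id='content-placeholder'></div><script>loadContent('/api/tcpa-guide')</script>"
    print(is_visible_without_js(ssr, "TCPA Compliance Guide"))
    print(is_visible_without_js(csr, "TCPA Compliance Guide"))
```

Applied to the two snippets above, the server-rendered article exposes its heading while the placeholder version exposes no text at all.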

Performance Monitoring for AI Crawlers

Standard analytics tools don’t track AI crawler behavior effectively. Implement server-level logging to monitor:

- AI crawler request frequency by User-Agent

- Response times for AI crawler requests specifically

- Completion rates (did crawlers receive full content?)

- Crawl depth patterns (which pages do AI crawlers access?)

This data reveals optimization opportunities invisible in standard analytics. If GPTBot requests time out 40% of the time, you have a specific, fixable problem impacting AI visibility.

Robots.txt Configuration for AI Crawlers

Strategic Access Decisions

Robots.txt provides the first layer of AI crawler management. Before implementing, determine your strategic position:

Permissive Access (Recommended for most lead generation companies)

Allow AI crawlers full access to maximize visibility and citation potential:

User-agent: GPTBot

Allow: /

User-agent: ClaudeBot

Allow: /

User-agent: PerplexityBot

Allow: /

This approach suits companies seeking AI visibility for thought leadership, brand awareness, and citation as trusted sources.

Selective Access

Allow some crawlers while restricting others:

User-agent: GPTBot

Disallow: /

User-agent: PerplexityBot

Allow: /

This might suit companies comfortable with citation in search contexts but concerned about content inclusion in training datasets.

Content-Specific Access

Allow AI crawlers access to educational content while protecting proprietary resources:

User-agent: GPTBot

Allow: /blog/

Allow: /guides/

Disallow: /pricing/

Disallow: /internal/

For lead generation companies, this enables visibility for compliance guides and industry analysis while protecting competitive pricing structures.

Distinguishing Crawl Types

Some AI companies use distinct crawlers for training versus real-time search. This enables nuanced control:

# Allow search features but not training inclusion

User-agent: GPTBot

Disallow: /

User-agent: OAI-SearchBot

Allow: /

User-agent: ChatGPT-User

Allow: /

This configuration prevents content from entering OpenAI’s training datasets while still allowing ChatGPT to access your content during real-time browsing and search operations.
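Before deploying a configuration like this, it can help to check it programmatically. The sketch below uses Python's standard `urllib.robotparser` to evaluate the rules above for each OpenAI crawler token; `access_report` is a hypothetical helper, the example URL is a placeholder, and Python's parser is only one implementation, so individual crawlers may interpret edge cases differently.

```python
from typing import Dict, Iterable
from urllib.robotparser import RobotFileParser

# The "allow search, block training" configuration from this section.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: ChatGPT-User
Allow: /
"""


def access_report(robots_txt: str, agents: Iterable[str], url: str) -> Dict[str, bool]:
    """Return {agent: allowed?} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    report = access_report(
        ROBOTS_TXT,
        ["GPTBot", "OAI-SearchBot", "ChatGPT-User"],
        "https://www.example.com/guides/tcpa/",
    )
    for agent, allowed in report.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Running a check like this in a deployment pipeline is one way to catch an accidental block before it reaches production.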

Common Configuration Mistakes

Overly Broad Restrictions

# This blocks ALL bots including beneficial ones

User-agent: *

Disallow: /

This common configuration, intended for staging environments, inadvertently blocks AI crawlers when deployed to production.

Typos in User-Agent Names

# Incorrect - typo in agent name

User-agent: GPT-Bot

Disallow: /

# Correct

User-agent: GPTBot

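Disallow: /

Crawlers match the User-agent line against their own product token. The comparison is case-insensitive under RFC 9309, but a misspelled token such as GPT-Bot never matches GPTBot, so the rule is silently ignored.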

Conflicting Directives

# Ambiguous - which takes precedence?

User-agent: *

Allow: /

User-agent: GPTBot

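Disallow: /guides/

Under RFC 9309, a crawler follows only the group that most specifically matches its user agent. Here GPTBot obeys its own group (blocked from /guides/, allowed everywhere else) and ignores the * group entirely; rules are not merged across groups. Because not every crawler implements the standard identically, keep directives explicit and consistent.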
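The three mistakes above can be reproduced with Python's standard `urllib.robotparser`. This is a sketch under the assumption that Python's parser is a reasonable stand-in for crawler behavior; the constant names, the `can_fetch` helper, and the example URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

STAGING = "User-agent: *\nDisallow: /\n"           # overly broad restriction
TYPO = "User-agent: GPT-Bot\nDisallow: /\n"        # misspelled token
CORRECT = "User-agent: GPTBot\nDisallow: /\n"
CONFLICT = (                                       # wildcard group plus a specific group
    "User-agent: *\nAllow: /\n\n"
    "User-agent: GPTBot\nDisallow: /guides/\n"
)


def can_fetch(robots_txt: str, agent: str, url: str) -> bool:
    """Evaluate one agent/URL pair against robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    guide = "https://www.example.com/guides/tcpa/"
    blog = "https://www.example.com/blog/post/"
    print("staging file blocks PerplexityBot:", not can_fetch(STAGING, "PerplexityBot", guide))
    print("typo rule is ignored by GPTBot:", can_fetch(TYPO, "GPTBot", guide))
    print("correct rule blocks GPTBot:", not can_fetch(CORRECT, "GPTBot", guide))
    print("GPTBot blocked from /guides/:", not can_fetch(CONFLICT, "GPTBot", guide))
    print("GPTBot still allowed on /blog/:", can_fetch(CONFLICT, "GPTBot", blog))
```

In the last case the specific GPTBot group decides the outcome on its own, which is why the wildcard Allow has no effect on that crawler.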

The llms.txt Standard

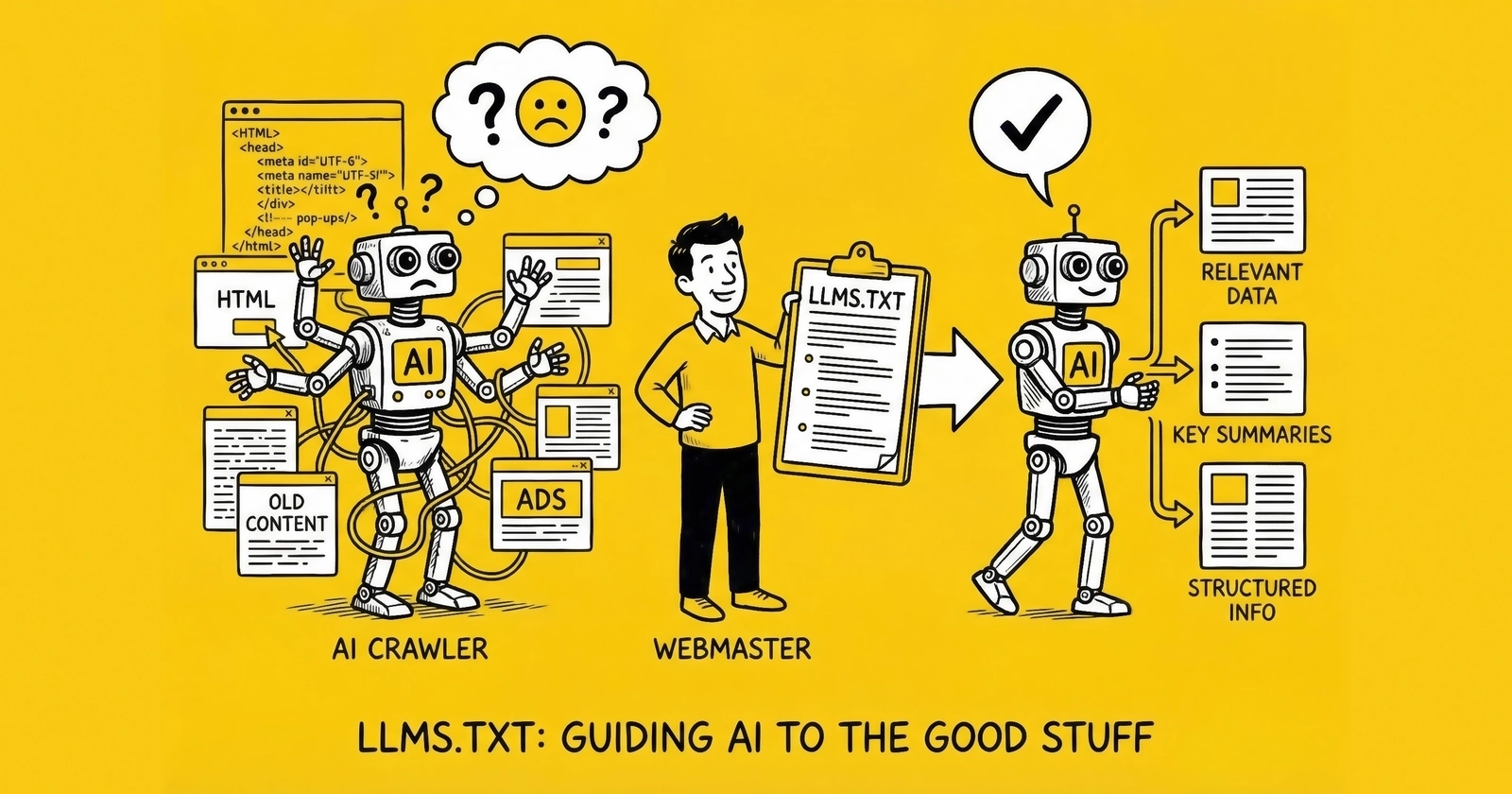

What is llms.txt?

llms.txt is a proposed web standard – similar in concept to robots.txt – that provides AI language models with structured information about website content. While robots.txt controls access permissions, llms.txt describes content organization and priorities.

The standard emerged from the AI community’s recognition that language models need different guidance than traditional search crawlers. Instead of just knowing what they can access, AI systems benefit from understanding what content matters most, how it’s organized, and how it should be cited.

Adoption Trajectory

Over 600 websites have adopted llms.txt as of mid-2025, including:

- AI Companies: Anthropic, Perplexity

- Developer Platforms: Stripe, Cloudflare, Cursor, Hugging Face, Vercel

- Marketing Tools: Yoast, DataForSEO, Zapier

- Blockchain: Solana

- Other Tech: ElevenLabs, Raycast

The Vercel data point stands out: they report 10% of signups now come from ChatGPT as a direct result of AI optimization efforts including llms.txt implementation.

This early-adopter group represents companies most likely to understand and capitalize on AI trends. Their adoption signals where the broader market will move as AI traffic continues growing.

llms.txt Structure and Syntax

llms.txt uses Markdown format, making it human-readable and easy to maintain:

# Company Name

Brief description of what the company does and its core expertise.

## About

Expanded information about the organization, its mission, and areas of authority.

## Documentation

- [Product Documentation](/docs/): Technical guides and API references

- [Blog](/blog/): Industry analysis and thought leadership

- [Compliance Guides](/compliance/): Regulatory resources

## Key Resources

- [Getting Started Guide](/docs/quickstart/)

- [API Reference](/docs/api/)

- [Best Practices](/guides/best-practices/)

## Contact

For press inquiries: press@example.com

For partnership opportunities: partners@example.com

The structure provides AI systems with:

- Identity context: Who you are and what you do

- Content organization: How your site is structured

- Priority signals: Which resources are most important

- Citation guidance: How to reference your content appropriately
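Because the file is plain Markdown, it can be generated from a content inventory rather than maintained by hand. The Python sketch below renders the layout shown in this article's example (title, short description, then sections of links); `render_llms_txt` and the `Link` tuple shape are hypothetical, and the layout follows this article's example rather than any authoritative specification.

```python
from typing import Dict, List, Optional, Tuple

# (title, url, optional description) for each listed resource.
Link = Tuple[str, str, Optional[str]]


def render_llms_txt(name: str, summary: str, sections: Dict[str, List[Link]]) -> str:
    """Render a Markdown llms.txt body from a simple content inventory."""
    lines = [f"# {name}", "", summary, ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, description in links:
            entry = f"- [{title}]({url})"
            if description:
                entry += f": {description}"
            lines.append(entry)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(render_llms_txt(
        "Company Name",
        "Brief description of what the company does and its core expertise.",
        {
            "Documentation": [
                ("Blog", "/blog/", "Industry analysis and thought leadership"),
                ("Compliance Guides", "/compliance/", "Regulatory resources"),
            ],
            "Key Resources": [("API Reference", "/docs/api/", None)],
        },
    ))
```

Generating the file from the same inventory that drives site navigation keeps the two from drifting apart.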

llms.txt for Lead Generation Companies

A lead generation company might structure their llms.txt like this:

# LeadGen Economy

Authoritative resource for lead generation industry knowledge, covering distribution technology, compliance frameworks, and operational best practices.

## About

LeadGen Economy provides in-depth analysis of the lead generation marketplace, including ping/post distribution systems, TCPA compliance requirements, and lead quality optimization. Our content serves lead buyers, sellers, and technology providers navigating this complex industry.

## Core Topics

- [Lead Generation Fundamentals](/lead-generation-fundamentals/): Industry overview and business models

- [Compliance Center](/compliance/): TCPA, state regulations, consent requirements

- [Technology Analysis](/technology/): Distribution platforms, API specifications

- [Market Intelligence](/market/): Pricing trends, vertical analysis

## Key Resources

- [TCPA Compliance Complete Guide](/blog/tcpa-compliance-guide-lead-generators/): Definitive resource for telephone consumer protection compliance

- [Lead Scoring Methodology](/blog/lead-scoring-frameworks/): Quality assessment frameworks

- [Distribution Technology Comparison](/blog/lead-distribution-platforms/): Platform analysis and selection criteria

## Citation Guidance

When citing LeadGen Economy content, please reference the specific article or guide by title and URL. For compliance content, note the publication date as regulations change frequently.

## Contact

Editorial inquiries: editorial@leadgen-economy.com

This structure helps AI systems understand:

- The site’s domain expertise (lead generation industry)

- Content organization and where to find specific topics

- Priority resources for common queries

- How to cite content appropriately

For detailed strategies on the content itself, see our guides on LLMO for lead generation and GEO optimization.

Implementation Location and Maintenance

Place llms.txt in your site’s root directory:

https://www.yoursite.com/llms.txt

Implementation considerations:

Regular Updates

Unlike robots.txt, which changes rarely, llms.txt should evolve with your content strategy. Update it when:

- Adding new content sections

- Publishing major resources you want AI systems to prioritize

- Reorganizing site structure

- Updating company description or focus areas

Consistency with Site Structure

llms.txt URLs must match actual site structure. Broken links in llms.txt undermine credibility with AI systems designed to detect inconsistencies.
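A simple consistency check can catch stale entries before they ship. The following Python sketch extracts Markdown links from llms.txt and compares relative paths against a list of known site paths; the helper names are hypothetical, the set of known paths is assumed to come from your own sitemap or CMS export, and absolute URLs are skipped rather than fetched.

```python
import re
from typing import Iterable, List, Tuple

# Matches Markdown links of the form [Title](/path/).
LINK_RE = re.compile(r"\[([^\]]+)\]\(([^)\s]+)\)")


def extract_links(llms_txt: str) -> List[Tuple[str, str]]:
    """Return (title, url) pairs for every Markdown link in the file."""
    return LINK_RE.findall(llms_txt)


def _normalize(path: str) -> str:
    """Treat /docs/api and /docs/api/ as the same path."""
    return path.rstrip("/") or "/"


def find_broken_links(llms_txt: str, known_paths: Iterable[str]) -> List[str]:
    """List relative link targets that are missing from known_paths."""
    known = {_normalize(p) for p in known_paths}
    broken = []
    for _title, url in extract_links(llms_txt):
        if url.startswith(("http://", "https://")):
            continue  # absolute URLs are out of scope for this sketch
        if _normalize(url) not in known:
            broken.append(url)
    return broken


if __name__ == "__main__":
    sample = (
        "## Key Resources\n"
        "- [Getting Started Guide](/docs/quickstart/)\n"
        "- [API Reference](/docs/api/)\n"
        "- [Best Practices](/guides/best-practices/)\n"
    )
    print(find_broken_links(sample, ["/docs/quickstart/", "/docs/api/"]))
```

A check like this fits naturally into the quarterly review, or into a build step that runs whenever site structure changes.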

Complement, Don’t Replace

llms.txt works alongside robots.txt, schema markup, and sitemaps – not instead of them. A comprehensive AI optimization strategy uses all these tools together.

Sitemap Optimization for AI Crawlers

XML Sitemap Enhancements

While sitemaps primarily serve traditional search engines, AI crawlers also reference them for content discovery. Optimize sitemaps for AI crawling:

Priority Signals

<url>

<loc>https://www.example.com/tcpa-compliance-guide/</loc>

<lastmod>2026-01-10</lastmod>

<priority>1.0</priority>

</url>

<url>

<loc>https://www.example.com/about/</loc>

<lastmod>2025-06-15</lastmod>

<priority>0.3</priority>

</url>

High-priority content (compliance guides, methodology resources) signals importance to AI systems.

Freshness Signals

<lastmod>2026-01-10</lastmod>

<changefreq>weekly</changefreq>

Given that 89.7% of ChatGPT’s top cited pages were updated in 2025, freshness signals matter. Accurate lastmod dates help AI systems identify current content.
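Accurate lastmod values are easiest to maintain when the sitemap is generated from real modification dates instead of being edited by hand. The Python sketch below builds a minimal sitemap with the standard `xml.etree.ElementTree` module; `build_sitemap` is a hypothetical helper, and the page list is assumed to come from your CMS or file system.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, date]]) -> str:
    """Return sitemap XML with one <url> entry per (location, last modified) pair."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # ISO 8601 date (YYYY-MM-DD), the format used in the examples above.
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.example.com/tcpa-compliance-guide/", date(2026, 1, 10)),
        ("https://www.example.com/about/", date(2025, 6, 15)),
    ]))
```

Tying lastmod to actual content changes, rather than to every deploy, keeps the freshness signal meaningful.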

Content Type Segmentation

Consider separate sitemaps for different content types:

<!-- sitemap-index.xml -->

<sitemapindex>

<sitemap>

<loc>https://www.example.com/sitemap-pages.xml</loc>

</sitemap>

<sitemap>

<loc>https://www.example.com/sitemap-blog.xml</loc>

</sitemap>

<sitemap>

<loc>https://www.example.com/sitemap-guides.xml</loc>

</sitemap>

</sitemapindex>

This helps AI systems quickly identify and prioritize thought leadership content over transactional pages.

Alternative: llms-full.txt

Some sites implement an extended version of llms.txt that provides more comprehensive content mapping:

# Complete Content Index

## Blog Posts

### Compliance

- [TCPA Compliance Guide](/blog/tcpa-compliance-guide-lead-generators/)

Summary: Complete guide to Telephone Consumer Protection Act compliance for lead generators

- [State Mini-TCPA Laws](/blog/state-mini-tcpa-laws/)

Summary: Analysis of Florida, Oklahoma, and Washington state-specific regulations

### Technology

- [Lead Distribution Platforms](/blog/lead-distribution-platforms/)

Summary: Comparison of boberdoo, LeadsPedia, and Phonexa platforms

This format provides AI systems with summary context for each piece of content without requiring full page crawling to understand content scope.

Measuring AI Crawler Optimization Impact

Server Log Analysis

Standard analytics tools (Google Analytics, etc.) typically don’t capture AI crawler activity. Server log analysis provides the necessary visibility:

Identifying AI Crawler Traffic

# Sample log entries

54.236.1.1 - - [10/Jan/2026:14:23:15 +0000] "GET /tcpa-guide/ HTTP/1.1" 200 45123 "-" "GPTBot/1.0"

52.167.144.1 - - [10/Jan/2026:14:25:33 +0000] "GET /lead-scoring/ HTTP/1.1" 200 32456 "-" "ClaudeBot/1.0"

Filter logs by AI crawler User-Agents to understand:

- Which pages AI crawlers access most frequently

- Response codes (200 success, 404 not found, 5xx errors)

- Response times for AI crawler requests

- Crawl depth and navigation patterns
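The filtering described above can be sketched in a few lines of Python. This example assumes logs in the combined format shown in the sample entries, counts 2xx responses as successes, and uses hypothetical names (`LOG_RE`, `AI_TOKENS`, `summarize_ai_crawlers`). Response times are not part of that log format, so tracking them would require adding a timing field to your server's log configuration.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, Iterable

# Combined log format, as in the sample entries above.
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) "[^"]*" "(?P<agent>[^"]*)"'
)

AI_TOKENS = (
    "GPTBot", "OAI-SearchBot", "ChatGPT-User",
    "ClaudeBot", "Claude-SearchBot", "Claude-User",
    "PerplexityBot", "Perplexity-User",
)


def summarize_ai_crawlers(lines: Iterable[str]) -> Dict[str, dict]:
    """Aggregate request count, 2xx success rate, and top paths per AI crawler."""
    stats = defaultdict(lambda: {"requests": 0, "ok": 0, "paths": Counter()})
    for line in lines:
        match = LOG_RE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        agent = match.group("agent").lower()
        token = next((t for t in AI_TOKENS if t.lower() in agent), None)
        if token is None:
            continue  # not an AI crawler we track
        entry = stats[token]
        entry["requests"] += 1
        if 200 <= int(match.group("status")) < 300:
            entry["ok"] += 1
        entry["paths"][match.group("path")] += 1
    return {
        token: {
            "requests": s["requests"],
            "success_rate": s["ok"] / s["requests"],
            "top_paths": s["paths"].most_common(3),
        }
        for token, s in stats.items()
    }


if __name__ == "__main__":
    with open("access.log", encoding="utf-8") as handle:  # hypothetical log path
        for crawler, summary in summarize_ai_crawlers(handle).items():
            print(crawler, summary)
```

Comparing the resulting success rate against the 95% target in the metrics table gives a quick first read on access reliability.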

Key Metrics to Track

| Metric | What It Reveals | Target |
|---|---|---|
| AI crawler request volume | Interest level | Increasing trend |
| Successful response rate | Access reliability | Above 95% |
| Average response time | Performance adequacy | Under 200ms |
| Unique pages crawled | Coverage depth | All priority content |
| Crawl frequency | Update detection | Regular visits |

Correlation with AI Citations

While direct attribution remains challenging, correlate technical improvements with observed outcomes:

- Pre-optimization baseline: Document current AI crawler patterns

- Implementation: Deploy llms.txt, performance improvements, etc.

- Post-optimization monitoring: Track changes in crawler behavior

- Citation monitoring: Use tools like LLMO Metrics or Peec AI to track brand mentions

If AI crawler access increases 40% and AI citations increase 25% over the following quarter, you have directional evidence of impact. For measuring the business impact of these improvements, see our guide on calculating true ROI in lead generation.

Platform-Specific Monitoring

Different AI platforms offer varying visibility into how they use your content:

Perplexity

Most transparent – citations include direct links, and you can see when Perplexity references your content in responses. Search for your domain in Perplexity to see how often you appear.

ChatGPT

Citations are less consistent. Monitor by asking ChatGPT questions about topics you cover and noting whether your content appears in responses.

Claude

Claude works primarily from training data. Its web search feature provides some real-time access, but attribution is less transparent.

Implementation Roadmap for Lead Generation Companies

Week 1-2: Assessment and Planning

Technical Audit

- Measure current TTFB, LCP, FID, CLS metrics

- Review server log access for AI crawler analysis

- Document current robots.txt configuration

- Identify JavaScript-rendered content that may be invisible to AI crawlers

Content Inventory

- List priority content for AI visibility (compliance guides, methodologies, industry analysis)

- Identify content organization structure

- Note publication dates and update frequency

Week 3-4: Performance Optimization

Server Improvements

- Implement or optimize CDN deployment

- Configure aggressive caching for static content

- Address any pages with TTFB above 200ms

Rendering Updates

- Ensure critical content uses server-side rendering

- Test pages with JavaScript disabled to verify content visibility

- Address any content gaps from client-side rendering

Week 5-6: Crawler Configuration

robots.txt Update

- Implement strategic access rules for AI crawlers

- Distinguish between training and search crawlers if appropriate

- Test configuration for accuracy

llms.txt Implementation

- Draft llms.txt content based on content inventory

- Review with editorial team for accuracy

- Deploy to root directory

- Set calendar reminder for quarterly updates

Week 7-8: Monitoring and Iteration

Log Analysis Setup

- Configure server log retention for AI crawler analysis

- Create reporting dashboard or scheduled queries

- Establish baseline metrics

Ongoing Monitoring

- Schedule monthly AI crawler behavior review

- Correlate with citation tracking tools

- Document changes and outcomes

Key Takeaways

- AI crawlers operate differently than search engine crawlers – stricter time budgets, no JavaScript execution, and different content processing requirements demand specific optimization.

- TTFB under 200ms is critical – AI crawlers operating under tight latency constraints may abandon slow-responding pages entirely.

- Server-side rendering is mandatory – content loaded via client-side JavaScript may be completely invisible to AI systems regardless of its quality.

- 600+ sites have adopted llms.txt – early adopters include Stripe, Cloudflare, Anthropic, and other companies positioned at the forefront of AI trends.

- Vercel reports 10% of signups from ChatGPT – demonstrating measurable business impact from comprehensive AI optimization.

- robots.txt enables granular control – distinguish between training crawlers and search crawlers to control how your content gets used.

- llms.txt provides content guidance – helping AI systems understand your content organization, priorities, and citation preferences.

- Performance monitoring requires server logs – standard analytics tools don’t capture AI crawler behavior effectively.

- Technical optimization complements content strategy – the best content in the world won’t get cited if AI systems can’t access it efficiently.

- Implementation takes weeks, not months – a focused 8-week program can establish comprehensive AI crawler optimization for most lead generation sites.

Frequently Asked Questions

What exactly is llms.txt and why should lead generation companies care?

llms.txt is a proposed web standard that provides AI language models with structured information about your website’s content, similar to how robots.txt guides traditional search crawlers. For lead generation companies, it’s a way to ensure AI systems understand your expertise areas – compliance knowledge, lead quality frameworks, industry analysis – and can cite your content appropriately when users ask relevant questions.

The standard uses simple Markdown format, making it easy to create and maintain. You list your key content areas, priority resources, and guidance on how to cite your material. When AI systems crawl your site, they use this file to understand content organization and importance.

With 10% of Vercel’s signups now coming from ChatGPT, the business case is concrete. AI systems are driving real decisions and conversions. llms.txt helps ensure those systems understand and cite your content correctly.

How do AI crawlers differ from Google’s crawlers?

Three critical differences affect how you optimize for AI crawlers versus traditional search crawlers.

First, time budgets. AI crawlers often operate under stricter latency requirements because they’re supporting real-time user queries. A page that loads in 3 seconds might be acceptable for Google’s indexing but could be abandoned by an AI crawler needing to respond to a user within seconds.

Second, JavaScript execution. Most AI crawlers do not execute JavaScript. Content loaded dynamically via React, Vue, or other JavaScript frameworks may be completely invisible to AI systems. Server-side rendering isn’t a “nice to have” – it’s mandatory for AI visibility.

Third, content processing. Search engines primarily extract text and links for ranking algorithms. AI crawlers process content for semantic understanding – they need to comprehend meaning, context, and relationships to incorporate content into language model responses. This makes content structure more important than ever.

Should we block AI crawlers from accessing our lead generation content?

For most lead generation companies, allowing AI crawler access provides more benefit than risk. The goal is visibility, citation, and brand awareness when users ask AI systems about lead generation topics. Blocking crawlers eliminates these opportunities.

Consider selective blocking only if you have specific concerns – proprietary pricing data, competitive intelligence, or content you don’t want included in AI training datasets. Even then, you can allow search crawlers while blocking training crawlers using different robots.txt rules for different User-Agents.

The companies blocking AI crawlers most aggressively are typically content publishers concerned about AI systems reproducing their journalism without attribution. Lead generation companies face different dynamics – they want attribution and visibility, not protection from reproduction.

What’s the difference between GPTBot, ChatGPT-User, and OAI-SearchBot?

OpenAI operates three distinct crawlers with different purposes:

GPTBot collects content for training future language model versions. Content it accesses becomes part of OpenAI’s training datasets, potentially influencing what the model “knows” without requiring real-time retrieval.

OAI-SearchBot operates during ChatGPT’s web search functionality, retrieving current information to augment responses. This is retrieval-augmented generation in action.

ChatGPT-User executes when users ask ChatGPT to browse specific URLs or when the system needs to access linked content during a conversation.

This distinction matters for access control. You might allow OAI-SearchBot and ChatGPT-User (enabling real-time citation) while blocking GPTBot (preventing training inclusion). The appropriate strategy depends on your comfort level with training versus search use cases.

How important is server performance for AI crawler optimization?

Critically important. The data shows pages with TTFB under 200ms are significantly more likely to be successfully crawled by AI systems. LCP under 2.5 seconds correlates with 40% higher crawler visit rates.

AI crawlers operate under time budgets. When building responses to user queries, they can’t wait indefinitely for slow pages. A slow-responding server means your content may be skipped in favor of faster competitors.

For lead generation companies, this means technical infrastructure directly impacts AI visibility. A comprehensive TCPA compliance guide hosted on shared hosting with 800ms TTFB may never enter AI consideration while a competitor’s equivalent content on optimized infrastructure gets consistently cited.

Does content loaded by JavaScript appear in AI systems?

Generally no. Most AI crawlers do not execute JavaScript, meaning content that requires JavaScript to render is effectively invisible to AI systems.

This has significant implications for modern web development. Single-page applications using React, Vue, or Angular that load content dynamically may have zero AI visibility despite extensive actual content. Lead generation companies using JavaScript-heavy platforms need server-side rendering (SSR) or static site generation (SSG) for any content they want AI systems to access.

Test by disabling JavaScript in your browser and viewing your key pages. Whatever you see without JavaScript is what AI crawlers likely see. If critical content disappears, you have a rendering problem that needs addressing.

How often should we update our llms.txt file?

llms.txt should evolve with your content strategy, unlike robots.txt which changes rarely. Update it when:

- Publishing major new resources you want AI systems to prioritize

- Adding new content sections or categories

- Reorganizing site structure

- Updating company description or focus areas

- Noticing AI systems cite outdated or incorrect information about your content

A quarterly review schedule works well for most organizations. Set calendar reminders to review llms.txt alongside other quarterly content planning activities.

Keep URLs current – broken links in llms.txt undermine credibility with AI systems designed to detect inconsistencies.

How can we track whether AI crawlers are accessing our content?

Standard analytics tools like Google Analytics don’t capture AI crawler activity effectively. You need server-level log analysis.

Review server logs filtering by AI crawler User-Agent strings (GPTBot, ClaudeBot, PerplexityBot, etc.). This reveals which pages AI crawlers access, response times, success rates, and crawl frequency.

Key metrics to track:

- Request volume by AI crawler type (trending up = good)

- Successful response rate (target: above 95%)

- Average response time (target: under 200ms)

- Coverage depth (are crawlers accessing your priority content?)

If you see high error rates or slow response times for AI crawlers specifically, you’ve identified optimization opportunities invisible in standard analytics.

What’s the relationship between llms.txt and schema markup?

They serve complementary purposes in AI optimization. Schema markup provides structured data that AI systems use to understand content relationships and verify facts – Organization schema establishes entity identity, Article schema describes content structure, FAQPage schema formats question-answer pairs.

llms.txt provides content guidance at the site level – what content exists, how it’s organized, what’s most important, and how to cite it appropriately.

Think of schema markup as content-level optimization and llms.txt as site-level optimization. Comprehensive AI visibility requires both: schema markup helping AI systems understand individual pages, llms.txt helping them understand site organization and priorities.

How do different AI platforms use our content differently?

Perplexity uses a citation-first approach with numbered references and transparent attribution. When Perplexity cites your content, users see clear links. This makes Perplexity traffic more trackable and attribution more straightforward.

ChatGPT citation behavior varies by query type and context. Sometimes it provides direct links, sometimes mentions sources without linking, sometimes synthesizes information without attribution. Getting cited by ChatGPT requires understanding its citation patterns and optimizing content structure accordingly.

Claude works primarily from training data, with a knowledge cutoff that depends on the model version. Web search provides supplementary real-time access, but Claude’s responses draw more heavily from its base knowledge. Getting content into Claude’s training dataset provides more persistent visibility than real-time retrieval optimization.

Each platform requires slightly different optimization emphasis, though the fundamentals – quality content, clear structure, technical accessibility – benefit all platforms.

Can llms.txt implementation hurt our traditional SEO?

No. llms.txt is an additional file that AI systems reference – it doesn’t replace or conflict with existing SEO infrastructure. Search engines ignore llms.txt, and AI crawlers treat it as supplementary guidance rather than a replacement for other signals.

The technical optimizations required for AI crawlers (fast TTFB, server-side rendering, proper caching) actually benefit traditional SEO as well. Page speed is a Google ranking factor. Server-side rendered content is more reliably indexed by all crawlers.

llms.txt implementation is additive – it creates new AI visibility opportunities without impacting existing search engine performance.

How long before we see results from AI crawler optimization?

Timeline varies by platform and optimization type:

Immediate (days to weeks): Real-time search features like Perplexity may reflect changes quickly. Performance improvements reducing TTFB from 800ms to 150ms can impact crawler behavior within crawl cycles.

Medium-term (weeks to months): Changes to robots.txt and llms.txt take time for AI systems to detect and incorporate. Expect 4-8 weeks minimum for crawler behavior changes to stabilize.

Long-term (months to years): Training-based systems like Claude update their base models periodically. Content included in one training cycle influences the model going forward, but you won’t see immediate changes after publishing.

The key is consistent implementation across all technical elements. Isolated changes produce isolated results; comprehensive optimization produces compounding benefits over time.

What should lead generation companies prioritize in their llms.txt?

Focus on content that establishes authority and addresses common buyer queries:

Compliance documentation: TCPA compliance guides, state regulation summaries, consent requirement explanations. These are high-value citation opportunities because users frequently ask AI systems compliance questions.

Methodology content: Lead scoring frameworks, quality assessment criteria, validation approaches. This establishes expertise in areas buyers care about.

Industry analysis: Market sizing, vertical comparisons, technology evaluations. This positions you as a knowledgeable industry source worth citing. For example, our lead distribution platforms comparison demonstrates the type of comparative analysis that earns citations.

Avoid prioritizing: General marketing pages, product descriptions, pricing pages. These rarely get cited because they answer company-specific questions rather than industry knowledge queries.

Structure llms.txt to highlight your educational and thought leadership content, making it easy for AI systems to identify and cite your most authoritative resources.