Machine learning models now predict which leads will convert with 85% accuracy before a single call is made. Here’s how predictive analytics is transforming lead generation economics, the practical applications delivering measurable ROI, and how to implement these systems in your operation.

Lead generation has always been a numbers game. Generate enough volume, apply reasonable quality filters, and statistical averages eventually work in your favor. This approach built a $10 billion industry.

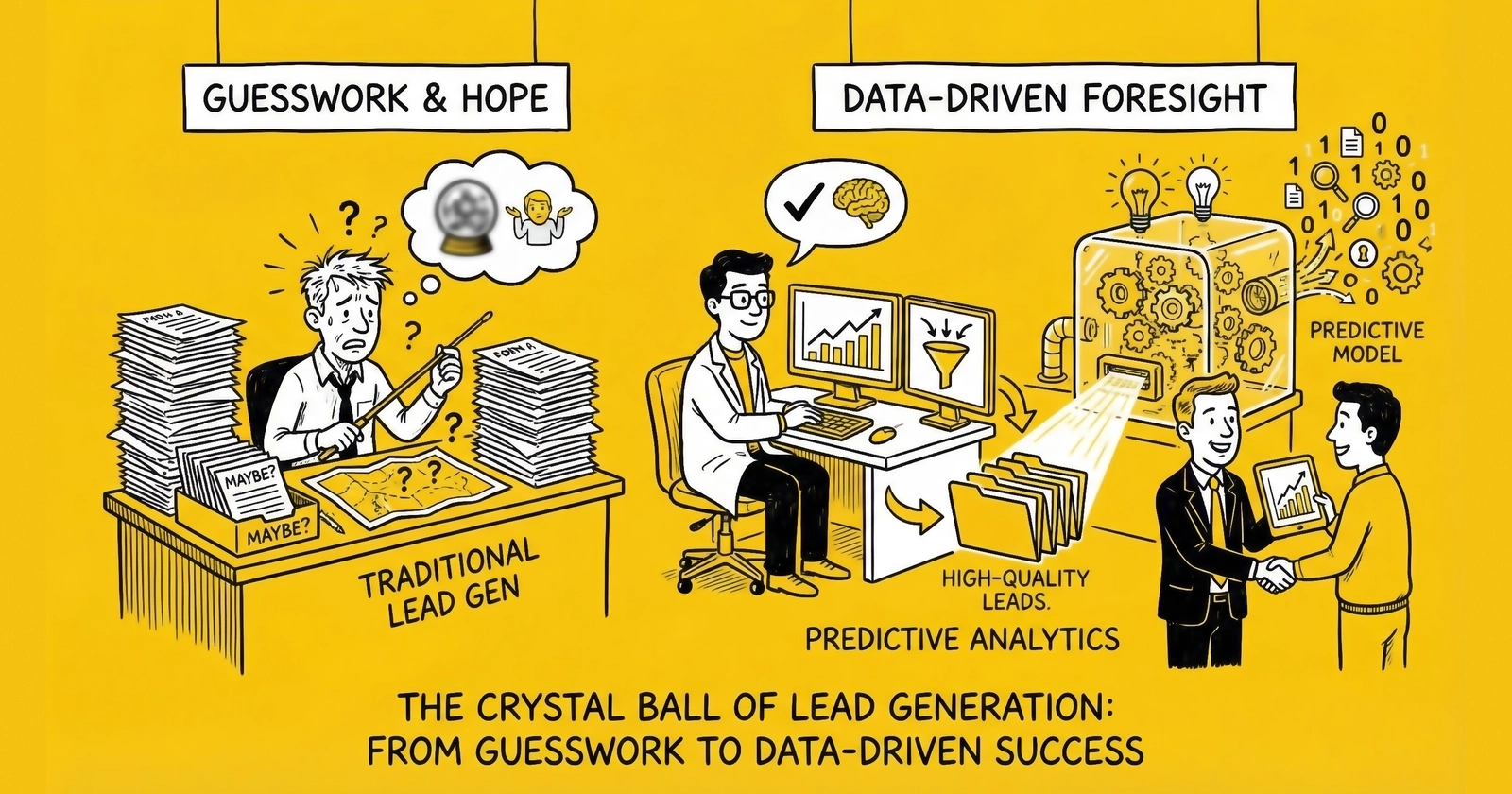

But statistical averages hide massive inefficiency. The same average conversion rate that appears acceptable at the aggregate level masks the reality that 80% of leads never had a chance of converting, while the 20% that could convert often receive the same treatment as everyone else. Sales teams burn through leads with equal effort regardless of potential. Marketing budgets optimize for volume rather than value. The entire apparatus operates on assumptions rather than predictions.

Predictive analytics changes the equation. Instead of treating all leads equally and hoping averages work out, predictive systems identify which leads are most likely to convert, which channels produce genuine intent rather than form-fillers, and which prospects deserve immediate attention versus delayed follow-up. The technology has matured from experimental to operational. Operators implementing predictive analytics report a 40% improvement in lead-to-purchase conversion, a 50% reduction in wasted sales effort, and cost-per-acquisition decreases of 30-60%.

This guide cuts through vendor hype to deliver what operators actually need: a clear-eyed assessment of predictive analytics applications that work now, the data infrastructure required for effective implementation, realistic efficiency gains based on documented results, and a practical implementation roadmap based on operational maturity.

What Is Predictive Analytics in Lead Generation?

Predictive analytics applies statistical modeling and machine learning to historical data to forecast future outcomes. In lead generation, this means analyzing past lead behavior, conversion patterns, and outcome data to predict which future leads are most likely to convert.

The distinction matters because traditional lead scoring assigns fixed point values based on assumptions. A VP title gets 20 points. A pricing page visit adds 15. Downloading a whitepaper adds 10. The weights are arbitrary, based on intuition rather than outcome data. Two leads with identical scores might have dramatically different conversion probabilities because the scoring model treats all VP titles equally, regardless of industry, company size, or behavioral context.

Predictive lead scoring instead analyzes hundreds of signals simultaneously to calculate actual conversion probability based on patterns in your specific data. The model learns that Directors at mid-market companies in manufacturing who engage with case studies after viewing pricing convert at 18%, while VPs at enterprise technology companies with similar engagement convert at only 4%. These patterns emerge from data, not assumptions.

Research from 2024-2026 indicates that companies implementing AI-powered predictive lead scoring report a 40% improvement in lead-to-purchase conversion rates. That improvement comes not from better leads entering the system, but from better prioritization that ensures the highest-potential prospects receive appropriate attention.

How Predictive Models Work

Predictive analytics in lead generation typically employs several modeling approaches:

Regression models predict continuous outcomes like expected revenue or probability scores. Logistic regression remains common for lead scoring because it produces interpretable probability values between 0 and 1.

Classification models categorize leads into discrete groups: hot, warm, cold, or more nuanced segments based on predicted behavior. Random forests and gradient boosting machines handle the non-linear relationships that characterize complex lead behavior.

Neural networks process high-dimensional data where traditional models struggle, particularly useful when combining behavioral sequences, text analysis, and structured data.

Ensemble methods combine multiple models to improve prediction accuracy beyond what any single approach achieves.

The common thread: every approach learns from historical outcome data which patterns distinguish leads that convert from those that don’t. The model then applies those learned patterns to score new leads in real time.
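To make the learn-then-score loop concrete, here is a minimal sketch of logistic regression trained by gradient descent in plain Python. The three features, the eight historical leads, and the learning-rate settings are all invented for illustration; a production model would use far more data and, most likely, an established library rather than hand-written training code.

```python
import math

def sigmoid(z: float) -> float:
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Fit weights and a bias by batch gradient descent on log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            err = p - y  # gradient of log loss with respect to the logit
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def score(lead, weights, bias) -> float:
    """Return the predicted conversion probability for one lead."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, lead)))

# Hypothetical features: [visited_pricing, requested_demo, email_open_rate]
history = [
    [1, 1, 0.8], [1, 0, 0.6], [0, 1, 0.7], [1, 1, 0.3],
    [0, 0, 0.1], [0, 0, 0.4], [1, 0, 0.0], [0, 0, 0.2],
]
converted = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = closed-won, 0 = lost

weights, bias = train_logistic(history, converted)
hot = score([1, 1, 0.9], weights, bias)
cold = score([0, 0, 0.1], weights, bias)
print(f"engaged lead: {hot:.2f}  unengaged lead: {cold:.2f}")
```

The point of the sketch is the shape of the workflow: outcomes in, weights learned, probabilities out. The weights come from the data rather than from anyone's intuition about what a pricing-page visit is worth.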

The Data Requirements

Predictive analytics is only as good as the data feeding the models. Effective lead scoring requires:

Outcome data. The model needs to learn what “success” looks like. For most lead generation, this means conversion to sale, but might also include intermediate outcomes: qualified, proposal sent, closed-won, or customer lifetime value. The richer the outcome data, the more nuanced the predictions.

Lead attribute data. Demographics (title, company size, industry), firmographics (revenue, growth rate, technology stack), and contact information quality all influence conversion probability.

Behavioral data. What did the lead do before converting? Page visits, content engagement, email opens, time on site, form completion patterns, and interaction sequences all carry predictive signal.

Channel and source data. Where did the lead originate? Which campaign, keyword, or referral source? Source quality varies dramatically, and predictive models learn these patterns.

Temporal data. When did interactions occur? Recency matters. A lead who engaged heavily last week differs from one who engaged six months ago.
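One common way to encode recency is to let each interaction's weight decay with age. The sketch below uses an exponential half-life; the 30-day half-life and the point values per event are assumptions chosen for illustration, not recommended settings.

```python
from datetime import date

HALF_LIFE_DAYS = 30  # assumption: an interaction loses half its weight every 30 days

def recency_weight(event_day: date, today: date,
                   half_life_days: float = HALF_LIFE_DAYS) -> float:
    """Return a weight of 1.0 for today, 0.5 after one half-life, and so on."""
    age_days = (today - event_day).days
    return 0.5 ** (age_days / half_life_days)

def engagement_score(events, today: date) -> float:
    """Sum hypothetical event points, each discounted by its age."""
    return sum(points * recency_weight(day, today) for day, points in events)

today = date(2025, 6, 30)
# Same three interactions (hypothetical point values), different timing.
fresh = [(date(2025, 6, 23), 10), (date(2025, 6, 24), 15), (date(2025, 6, 25), 25)]
stale = [(date(2024, 12, 28), 10), (date(2024, 12, 29), 15), (date(2024, 12, 30), 25)]

print(f"engaged last week:      {engagement_score(fresh, today):.1f}")
print(f"engaged six months ago: {engagement_score(stale, today):.1f}")
```

A decayed score like this can be fed to a model as a single feature, which is simpler than asking the model to interpret raw timestamps.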

The challenge for many operations is that this data lives in disconnected systems. Marketing automation captures behavior. CRM captures sales outcomes. Lead distribution platforms capture routing and acceptance. Financial systems capture revenue. Without integration, predictive models lack the feedback loops necessary for accurate prediction.

Core Applications of Predictive Analytics

Predictive analytics applies across the lead generation lifecycle, from acquisition through conversion. Each application delivers measurable efficiency gains.

Predictive Lead Scoring

The flagship application. Predictive lead scoring replaces assumption-based point systems with data-driven conversion probability.

Traditional scoring might assign:

- VP or C-level title: 20 points

- Company size over 500 employees: 15 points

- Downloaded whitepaper: 10 points

- Visited pricing page: 15 points

- Demo request: 25 points

Two leads scoring 65 points each are treated identically, regardless of the specific combination of attributes or whether those attributes actually predict conversion in your business.
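The tie problem is easy to reproduce with the point table above. In this sketch the attribute keys and the two lead profiles are hypothetical; with these particular profiles the tie lands at 60 points, but the same thing happens at any total.

```python
# Point values taken from the traditional scoring table above.
POINTS = {
    "vp_or_c_level": 20,
    "over_500_employees": 15,
    "downloaded_whitepaper": 10,
    "visited_pricing": 15,
    "requested_demo": 25,
}

def rule_score(attributes: set) -> int:
    """Add up fixed points for every attribute the lead has."""
    return sum(POINTS[name] for name in attributes)

# Two hypothetical leads with different profiles.
lead_a = {"vp_or_c_level", "over_500_employees", "requested_demo"}
lead_b = {"vp_or_c_level", "visited_pricing", "requested_demo"}

print(rule_score(lead_a), rule_score(lead_b))
```

Both leads receive the same score, so a queue sorted by points cannot tell them apart, even if one profile historically converts several times as often as the other.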

Predictive scoring processes hundreds of signals to produce a single probability: this lead has a 73% chance of converting based on patterns in your historical data. The model might learn that demo requests from Directors at mid-market SaaS companies who previously engaged with case studies convert at 82%, while demo requests from VPs at enterprise healthcare companies who found you through paid search convert at only 23%. Same “demo request,” radically different outcomes.

Documented results include companies reporting 40% improvement in lead-to-purchase conversion by focusing sales effort on the 20% of leads generating 80% of revenue. The improvement comes from prioritization, not lead quality changes.

Conversion Prediction

Beyond scoring individual leads, predictive analytics forecasts conversion rates across segments, channels, and time periods.

Channel optimization. Predictive models identify which traffic sources produce leads that actually convert, not just leads that look good on submission. A source might deliver leads at $30 CPL with impressive form completion, but if those leads convert at 2% while a $50 CPL source converts at 12%, the more expensive source is far more valuable. Attribution complexity makes this difficult to assess with simple analytics. Predictive models incorporating multi-touch journeys surface these patterns.

Segment performance. Which buyer personas convert best? Which industries? Which company sizes? Predictive analytics quantifies these differences with statistical confidence, enabling budget allocation based on expected outcomes rather than assumptions.

Timing optimization. When do leads convert? Predictive models identify optimal contact timing, not just “within five minutes” but specific windows when conversion probability peaks for different lead types. Some leads convert best with immediate contact; others benefit from nurture before sales engagement.
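The channel comparison above comes down to one division: cost per lead over conversion rate gives cost per converted customer. A small sketch using the figures from the channel optimization example (the function name is just illustrative):

```python
def cost_per_conversion(cost_per_lead: float, conversion_rate: float) -> float:
    """Return the lead spend needed, on average, to produce one conversion."""
    if conversion_rate <= 0:
        raise ValueError("conversion_rate must be positive")
    return cost_per_lead / conversion_rate

cheap_source = cost_per_conversion(cost_per_lead=30, conversion_rate=0.02)
pricey_source = cost_per_conversion(cost_per_lead=50, conversion_rate=0.12)

print(f"$30 CPL at 2%:  ${cheap_source:,.2f} per conversion")
print(f"$50 CPL at 12%: ${pricey_source:,.2f} per conversion")
```

The $30 source costs roughly $1,500 per conversion while the $50 source costs roughly $417, which is why ranking sources by CPL alone points budget in the wrong direction.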

Churn and Lifetime Value Prediction

For operations focused on customer retention alongside acquisition, predictive analytics extends beyond initial conversion:

Churn prediction. Which customers are likely to leave? Early warning signals enable intervention before churn occurs. A 10% reduction in churn often delivers more value than a 10% increase in new acquisition.

Lifetime value forecasting. What is this lead actually worth? Not just initial sale, but total expected revenue over the relationship. LTV prediction enables appropriate acquisition investment. A lead expected to generate $50,000 lifetime value justifies more aggressive acquisition spending than one expected to generate $500.

Expansion opportunity scoring. Which existing customers are likely to upgrade or purchase additional products? Cross-sell and upsell predictions focus limited sales capacity on highest-probability opportunities.

Fraud Detection

Predictive analytics has become essential for fraud prevention in lead generation.

Bot detection. Machine learning identifies non-human form submissions through patterns invisible to rule-based systems: mouse movement anomalies, keystroke dynamics, form completion velocity, and behavioral sequences that distinguish bots from humans.

Synthetic identity detection. Fraudsters create fake identities that pass individual validation checks. Predictive models identify synthetic identities through pattern analysis across multiple leads: phone numbers ported recently, addresses that don’t match public records, email domains created within 24 hours.

Duplicate detection. Beyond exact-match deduplication, predictive models identify “John Smith” at “123 Main St” and “J Smith” at “123 Main Street” as the same person through fuzzy matching and entity resolution.

Litigator identification. Phone numbers associated with TCPA litigation can be flagged before contact, protecting operations from professional plaintiffs.

The ROI is direct. Every fraudulent lead identified and rejected before purchase saves the cost of that lead plus downstream costs of returns, chargebacks, and potential litigation. For verticals where leads cost $50-200+, preventing fraud directly impacts profitability.
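The duplicate-detection case above can be approximated with normalization plus string similarity. This is a simplified sketch using Python's standard `difflib`; the abbreviation table, the first-initial rule, and the 0.9 threshold are all assumptions, and real entity resolution typically weighs more signals (phone, email, device) than name and address alone.

```python
import re
from difflib import SequenceMatcher

# Hypothetical, deliberately tiny abbreviation table.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd"}

def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse common address abbreviations."""
    tokens = re.sub(r"[^a-z0-9 ]", "", text.lower()).split()
    return " ".join(ABBREVIATIONS.get(token, token) for token in tokens)

def names_compatible(a: str, b: str) -> bool:
    """Same last name and same first initial, so 'J Smith' fits 'John Smith'."""
    ta, tb = normalize(a).split(), normalize(b).split()
    if not ta or not tb:
        return False
    return ta[-1] == tb[-1] and ta[0][0] == tb[0][0]

def address_similarity(a: str, b: str) -> float:
    """Similarity ratio between 0 and 1 on the normalized addresses."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def likely_duplicate(lead_a: dict, lead_b: dict, threshold: float = 0.9) -> bool:
    return (names_compatible(lead_a["name"], lead_b["name"])
            and address_similarity(lead_a["address"], lead_b["address"]) >= threshold)

first = {"name": "John Smith", "address": "123 Main St"}
second = {"name": "J Smith", "address": "123 Main Street"}
other = {"name": "Jane Doe", "address": "45 Oak Avenue"}

print(likely_duplicate(first, second))
print(likely_duplicate(first, other))
```

Note the obvious weakness: "Jane Smith" at the same address would also match "J Smith". Rules like these generate candidate pairs; a trained model or a human review step usually makes the final call.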

Channel and Campaign Optimization

Predictive analytics transforms budget allocation from intuition to optimization.

Budget reallocation. Models identify which channels deliver leads that convert at acceptable economics, not just leads that submit forms. A channel might appear to deliver $40 CPL leads, but if those leads convert at 3% while another channel’s $60 leads convert at 15%, the second channel delivers far better ROI. Predictive analytics surfaces these patterns that simple analytics miss.

Campaign forecasting. Before launching campaigns, predictive models estimate expected conversion rates, costs, and revenue based on historical patterns from similar campaigns. This enables go/no-go decisions before spending.

Creative optimization. Which creative elements correlate with downstream conversion, not just click-through? Predictive models identify patterns where specific headlines, images, or offers attract leads that actually buy, even if click rates appear similar.

Bid optimization. Real-time predictive scoring can adjust bids based on estimated lead value. A lead from a high-value segment might justify $50 CPC while a low-value segment justifies only $10. Dynamic bid adjustment based on predicted value improves overall economics.

The Technology Stack for Predictive Analytics

Implementing predictive analytics requires infrastructure spanning data collection, storage, processing, and activation. The components must work together for predictions to drive actual decisions.

Data Collection and Integration

The foundation is unified data capturing lead attributes, behavior, and outcomes in a format models can process.

CRM integration. Salesforce, HubSpot, or equivalent must capture lead stage progression and conversion outcomes. Without closed-loop reporting from CRM, predictive models lack the outcome data they need to learn.

Marketing automation integration. Marketo, Pardot, ActiveCampaign, or equivalent provides behavioral data: email engagement, web visits, content consumption, and campaign interactions.

Lead distribution platform integration. boberdoo, LeadsPedia, Phonexa, or equivalent provides routing data, buyer acceptance rates, and quality feedback that enriches predictions.

Analytics integration. Google Analytics 4, Mixpanel, or equivalent captures web behavior and acquisition source data.

Financial integration. Eventually connecting to revenue systems enables LTV-based predictions rather than just conversion predictions.

The challenge is connecting these systems. Customer data platforms (CDPs) like Segment, mParticle, or RudderStack help centralize data collection. Data warehouses like Snowflake, BigQuery, or Redshift provide the analytical foundation. Without integration, predictive models operate on partial information.

Predictive Analytics Platforms

Several platform categories enable predictive lead scoring, each with distinct strengths for different operational contexts.

Purpose-built lead scoring platforms. 6sense, Demandbase, MadKudu, and similar tools provide predictive scoring designed specifically for B2B lead generation. They combine proprietary intent data with your first-party data to predict buying readiness.

CRM-native scoring. Salesforce Einstein, HubSpot Predictive Lead Scoring, and similar tools embed prediction capabilities within CRM platforms. The advantage is tight integration; the limitation is typically less sophisticated modeling than specialized tools.

Marketing automation scoring. Marketo’s predictive scoring, Pardot’s Einstein extensions, and similar tools add prediction to automation platforms. Again, integration advantages with modeling limitations.

Custom machine learning. For sophisticated operations, custom models built with Python/scikit-learn, TensorFlow, or cloud ML services (AWS SageMaker, Google Cloud AI, Azure ML) provide maximum flexibility. The trade-off is significant technical investment.

CDPs with scoring. Platforms like Segment, Amplitude, and others increasingly embed predictive capabilities, enabling scoring within the data platform itself.

Vendor Recommendations by Operational Maturity

Selecting the right predictive scoring platform depends on your current operational sophistication, data infrastructure, and budget constraints. The following recommendations reflect real-world implementations across lead generation operations of varying sizes.

For Operations Just Starting (Under $50K Monthly Ad Spend)

Begin with native CRM scoring capabilities rather than specialized tools. If you run HubSpot, enable HubSpot Predictive Lead Scoring in Professional or Enterprise tiers. The scoring uses your existing contact and engagement data to calculate conversion likelihood without additional integration work. HubSpot’s native scoring typically produces measurable prioritization improvements within 30 days of activation, assuming sufficient historical conversion data exists.

For Salesforce environments, Einstein Lead Scoring provides similar native capabilities. Einstein requires Sales Cloud Einstein licensing (approximately $50 per user monthly) but eliminates integration complexity. The model trains on your opportunity data and produces scores that update daily. Operations with at least 1,000 historical conversions typically see reliable predictions within 60 days of enabling Einstein.

At this stage, avoid specialized platforms. The integration overhead and subscription costs exceed the value for smaller operations. Focus instead on ensuring your CRM captures complete conversion data so that when you graduate to specialized tools, your data foundation supports sophisticated modeling.

For Growth Operations ($50K-$250K Monthly Ad Spend)

This operational scale justifies specialized scoring platforms that augment CRM data with external signals. MadKudu offers the most accessible entry point for B2B lead generation, with pricing starting around $1,000 monthly for lower volumes. MadKudu excels at combining demographic data, firmographic data, and behavioral signals into composite scores that predict both conversion likelihood and customer fit.

Implementation typically requires 2-4 weeks for data connection and model training. MadKudu integrates natively with major marketing automation platforms and CRMs, reducing technical overhead. The platform’s pre-built models for common B2B scenarios (SaaS, professional services, technology) accelerate time to value compared to custom builds.

For operations with strong technical teams, Clearbit Reveal combined with custom scoring logic offers a middle path. Clearbit provides company identification and enrichment data that feeds your own scoring models. This approach costs less than full predictive platforms while delivering the firmographic and technographic signals that improve B2B lead scoring accuracy.

For Scaled Operations ($250K+ Monthly Ad Spend)

At scale, the choice between 6sense and Demandbase dominates the conversation. Both platforms combine account identification, intent signal aggregation, and predictive scoring into comprehensive demand generation infrastructure.

6sense emphasizes intent data breadth, aggregating signals from publisher networks, review sites, and search behavior to identify accounts actively researching solutions. Their Revenue AI platform provides account-level predictions that identify which accounts are in-market, which personas within those accounts are engaged, and what topics they are researching. Pricing varies significantly based on account volume and feature access, typically ranging from $30,000 to $200,000 annually for enterprise deployments.

Demandbase focuses on account identification accuracy and advertising integration. Their platform excels at connecting anonymous website visitors to specific accounts and enabling account-based advertising across display, social, and connected TV channels. For operations where account-based marketing aligns with sales strategy, Demandbase’s advertising capabilities often justify the investment. Enterprise pricing typically starts around $50,000 annually and scales with usage.

Both platforms require significant implementation investment, typically 3-6 months to full deployment including data integration, sales team training, and process adaptation. The ROI case depends on account deal values. Operations selling deals under $25,000 annually rarely justify these platforms; operations selling six-figure contracts often see positive ROI within 12 months.

For Consumer Lead Generation

The B2B-focused vendors above serve enterprise sales. Consumer lead generation requires different tools. For insurance, mortgage, solar, and similar consumer verticals, predictive scoring typically happens within lead distribution platforms rather than separate scoring systems.

Platforms like boberdoo, LeadsPedia, and Phonexa offer built-in scoring capabilities that evaluate leads at submission based on historical conversion patterns by geography, time of day, traffic source, and qualification attributes. These scores inform routing decisions, buyer pricing, and quality filtering.

For operations requiring more sophisticated consumer scoring, custom machine learning models typically outperform off-the-shelf alternatives. Consumer lead scoring depends heavily on industry-specific signals (utility bills for solar, credit indicators for mortgage, coverage gaps for insurance) that generalized platforms handle poorly. Investment in custom models using cloud ML services often delivers better results than adapting B2B-focused platforms to consumer contexts.

Evaluation Framework

When evaluating predictive scoring vendors, apply this framework:

First, assess data requirements against your current capabilities. Platforms requiring data you do not capture or cannot integrate deliver zero value regardless of their algorithmic sophistication. Map vendor data requirements against your actual data availability before engaging in sales conversations.

Second, calculate total cost of ownership including implementation, training, and ongoing management. A platform priced at $50,000 annually may cost $100,000 in the first year once implementation consulting and internal resource allocation are counted. Vendors often quote base pricing that excludes necessary professional services.

Third, demand customer references in your specific vertical and operational scale. A platform that performs well for enterprise software companies may fail in consumer lead generation. Request references who can speak to actual results rather than platform capabilities.

Fourth, negotiate pilot periods before annual commitments. Most vendors will provide 60-90 day pilots at reduced pricing to demonstrate value. Pilots should include specific success metrics defined in advance so evaluation is objective rather than subjective.

Activation and Orchestration

Predictions only create value when they drive action. The activation layer connects predictions to operational systems:

Real-time scoring. As leads enter the system, they receive scores that determine immediate routing, prioritization, and treatment.

Dynamic segmentation. Predicted conversion probability segments audiences for differentiated marketing treatment.

Sales routing. High-value predictions route to senior sales resources; lower-value predictions route to automated nurture or inside sales.

Bid management. Predicted lead value feeds back to advertising platforms to adjust bids in real-time.

Alert triggering. Score changes or threshold crossings generate alerts for sales action.

Without activation, predictive models become expensive dashboards that don’t change outcomes.
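In its simplest form, the activation layer is a function from score to action. The sketch below shows threshold-based sales routing; the queue names and the 0.60 and 0.25 cutoffs are hypothetical and would need to be tuned against your own capacity and conversion data.

```python
# Hypothetical cutoffs; tune them against real capacity and outcomes.
SENIOR_THRESHOLD = 0.60
INSIDE_SALES_THRESHOLD = 0.25

def route_lead(conversion_probability: float) -> str:
    """Pick a destination queue from a predicted conversion probability."""
    if not 0.0 <= conversion_probability <= 1.0:
        raise ValueError("conversion_probability must be between 0 and 1")
    if conversion_probability >= SENIOR_THRESHOLD:
        return "senior_sales"
    if conversion_probability >= INSIDE_SALES_THRESHOLD:
        return "inside_sales"
    return "automated_nurture"

for probability in (0.82, 0.40, 0.04):
    print(f"{probability:.2f} -> {route_lead(probability)}")
```

Keeping the thresholds in named constants makes them easy to adjust as the model is recalibrated or as team capacity changes.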

Implementation Roadmap

Predictive analytics implementation follows a logical progression from foundation to optimization. Attempting advanced applications without data foundations wastes resources and delivers poor results.

Phase 1: Data Foundation (Months 1-3)

Before building models, you need data they can learn from.

Audit existing data. What outcome data do you have? Can you connect leads to eventual sales outcomes? How far back does reliable data extend? Predictive models typically need 6-12 months of outcome data with sufficient conversion volume for statistical reliability.

Establish tracking. If outcome data is missing or incomplete, establish tracking before attempting prediction. You cannot predict what you don’t measure.

Clean historical data. Data quality issues compound in predictive models. Deduplicate records, standardize formats, and resolve inconsistencies before model training.

Integrate systems. Connect marketing, sales, and distribution data into a unified view. CDPs or data warehouses provide the foundation.

Document data definitions. What constitutes a “lead”? A “conversion”? A “qualified opportunity”? Consistent definitions ensure consistent predictions.

Phase 2: Basic Scoring (Months 3-6)

With data foundations in place, implement initial predictive scoring.

Start with conversion prediction. The simplest and highest-value application predicts which leads will convert. Begin here before attempting more sophisticated applications.

Use platform tools. If your CRM or marketing automation offers predictive scoring, start there. The integration is already solved, and results come faster than custom builds.

Establish baselines. Before predictive scoring, measure current conversion rates by segment. You need baselines to quantify improvement.

Implement A/B testing. Route 50% of leads through predictive prioritization and 50% through existing processes. Measure the difference in conversion rates and sales efficiency.

Train sales teams. Predictive scores change nothing if sales teams ignore them. Training on score interpretation and trust-building through visible wins builds adoption.
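The 50/50 test described above needs two pieces: a stable way to assign leads to arms and a way to judge whether the difference in conversion is more than noise. The following is one possible sketch, assuming leads have a string ID; the hash-based split and the two-proportion z-test are standard techniques, while the counts in the example are made up.

```python
import hashlib
import math

def assign_arm(lead_id: str) -> str:
    """Deterministically split leads roughly 50/50 by hashing their ID."""
    digest = hashlib.sha256(lead_id.encode("utf-8")).hexdigest()
    return "predictive" if int(digest, 16) % 2 == 0 else "control"

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical results: 70 of 1,000 predictive-arm leads converted vs 50 of 1,000.
z, p_value = two_proportion_z_test(70, 1000, 50, 1000)
print(assign_arm("lead-000123"))
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

Hashing the lead ID means the same lead always lands in the same arm, even if it is re-submitted. The example counts also show why sample size matters: a lift from 5% to 7% on 1,000 leads per arm falls just short of the conventional 0.05 significance level.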

Phase 3: Optimization (Months 6-12)

With basic scoring operational, extend to optimization applications.

Add behavioral signals. Initial models often rely on demographic data. Adding behavioral sequences (content engagement patterns, website journeys, email interactions) typically improves prediction accuracy by 20-40%.

Implement dynamic scoring. Scores should update as leads engage. A cold lead that suddenly visits your pricing page five times should see its score rise in real time.

Extend to channel optimization. Which acquisition channels produce leads that actually convert? Predictive analysis of channel-to-conversion paths enables budget reallocation.

Add fraud detection. Predictive models identifying bot traffic, synthetic identities, and professional litigators protect revenue and reduce wasted effort.

Implement LTV prediction. Move beyond conversion prediction to lifetime value prediction, enabling acquisition investment proportional to expected returns.

Phase 4: Advanced Applications (Months 12-24)

Mature implementations extend prediction across the customer lifecycle.

Account-level prediction. For B2B, move from lead-level to account-level prediction. Modern B2B purchases involve 6-10 stakeholders; account-level scoring aggregates signals across the buying group.

Predictive demand generation. Which content, offers, and campaigns will generate conversions, not just engagement? Predictive models optimize marketing mix.

Churn prediction. Identify at-risk customers before they leave, enabling proactive retention intervention.

Next-best-action prediction. What should sales do next with each lead? Predictive models recommend actions: call now, send case study, schedule demo, add to nurture.

Results and ROI Benchmarks

Predictive analytics delivers measurable improvements across multiple dimensions. These benchmarks reflect documented results from implementations across industries.

Conversion Rate Improvements

Companies implementing AI-powered predictive lead scoring report 40% improvement in lead-to-purchase conversion rates. This improvement comes from prioritization: focusing sales effort on the 20% of leads generating 80% of revenue.

The mechanism is straightforward. Without prediction, sales teams work leads in arrival order or based on crude scoring. High-potential leads receive the same treatment as low-potential leads. Predictive scoring identifies which leads deserve immediate attention, enabling appropriate resource allocation.

B2B companies using predictive scoring report routing the remaining 80% of lower-probability leads to automated nurture rather than letting them consume sales capacity.

Efficiency Gains

Predictive analytics reduces wasted effort across the lead lifecycle.

50% reduction in unqualified lead pursuit. Sales teams stop chasing leads that never had conversion potential. Time redirects to high-probability opportunities.

30-60% reduction in customer acquisition cost. Better prioritization means fewer touches required per conversion. Budget concentrates on channels and campaigns that produce converting leads.

40% improvement in sales productivity. Reps spend time on likely buyers rather than unlikely ones. The same team capacity produces more revenue.

Quality Improvements

Predictive fraud detection delivers direct ROI through prevented losses.

15-25% reduction in fraudulent leads. Machine learning identifies bots, synthetic identities, and professional litigants that rule-based systems miss.

20-35% reduction in return rates. Predictive quality scoring identifies leads likely to be returned before purchase, enabling seller intervention or pricing adjustment.

Improved buyer satisfaction. Buyers receiving higher-quality leads renew and expand relationships. The virtuous cycle of quality compounds over time.

Reality Check

These numbers require context:

Starting point matters. A 40% relative improvement on a 5% baseline raises conversion to 7%, a gain of only 2 percentage points. Impressive relative improvement; modest absolute change.

Implementation quality varies. Off-the-shelf tools without customization underperform. The best results come from models trained on your specific data.

Time to value varies. Predictive models require learning periods. Expect 3-6 months before meaningful improvement, with ongoing optimization thereafter.

Human oversight remains essential. Fully automated systems without oversight generate compounding errors. Predictions inform decisions; they don’t replace judgment.
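The relative-versus-absolute distinction is worth working through once with real numbers. A small sketch (the function name and the 1,000-lead volume are illustrative):

```python
def apply_relative_lift(baseline_rate: float, relative_lift: float):
    """Return (new_rate, absolute_change) after a relative improvement."""
    new_rate = baseline_rate * (1 + relative_lift)
    return new_rate, new_rate - baseline_rate

new_rate, absolute_change = apply_relative_lift(baseline_rate=0.05, relative_lift=0.40)
leads = 1000  # hypothetical monthly volume
print(f"new conversion rate: {new_rate:.1%}")
print(f"absolute change: {absolute_change * 100:.1f} percentage points")
print(f"extra conversions per {leads} leads: {round(absolute_change * leads)}")
```

A 40% lift on a 5% baseline means 70 conversions per 1,000 leads instead of 50. Whether those 20 extra conversions justify the investment depends entirely on what each one is worth.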

Common Implementation Challenges

Predictive analytics implementation fails more often than it succeeds. Understanding common failure modes enables avoidance.

Data Quality Issues

Garbage in, garbage out applies with particular force to predictive analytics.

Missing outcome data. If you can’t connect leads to eventual conversion outcomes, models cannot learn what success looks like. Many operations lack closed-loop reporting linking marketing leads to sales outcomes.

Inconsistent definitions. What constitutes a “lead” varies across systems. What constitutes “qualified” or “converted” changes over time. Inconsistent definitions produce inconsistent predictions.

Survivorship bias. Models trained only on leads that received follow-up miss patterns in leads that were ignored. The model learns what predicts conversion among worked leads, not among all leads.

Small sample sizes. Statistical reliability requires sufficient data. Operations with fewer than 1,000 conversions annually struggle to build reliable models. The patterns don’t have enough examples to distinguish signal from noise.

Model Drift

Predictive models degrade over time as conditions change.

Market changes. Economic conditions, competitive dynamics, and buyer preferences shift. Models trained on historical data may not predict accurately in changed conditions.

Product changes. New offerings, pricing changes, and positioning shifts alter conversion patterns. Models need retraining to reflect current products.

Process changes. Modified sales processes, new qualification criteria, and changed routing logic all affect which leads convert. The model’s training data no longer represents current operations.

Data drift. The leads entering the system differ from historical leads in ways that affect prediction accuracy. New acquisition channels, changed marketing messages, and evolved buyer profiles all contribute.

Addressing drift requires ongoing monitoring and periodic retraining. Predictions should be validated against actual outcomes regularly, with model updates when degradation appears.
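One simple way to validate predictions against outcomes is a calibration check: group leads by predicted score and compare the average prediction with the observed conversion rate in each group. The sketch below is a minimal version under assumed settings (five equal-width buckets, a 10-point tolerance); the data is invented to show a well-calibrated period and a drifted one.

```python
def calibration_report(predictions, outcomes, n_buckets: int = 5):
    """Compare mean predicted probability with observed conversion per score bucket."""
    buckets = [[] for _ in range(n_buckets)]
    for p, converted in zip(predictions, outcomes):
        index = min(int(p * n_buckets), n_buckets - 1)
        buckets[index].append((p, converted))
    report = []
    for index, items in enumerate(buckets):
        if not items:
            continue  # skip score ranges with no leads
        mean_predicted = sum(p for p, _ in items) / len(items)
        observed = sum(c for _, c in items) / len(items)
        report.append({"bucket": index, "leads": len(items),
                       "predicted": mean_predicted, "observed": observed,
                       "gap": observed - mean_predicted})
    return report

def needs_retraining(report, tolerance: float = 0.10) -> bool:
    """Flag the model if any bucket's observed rate strays beyond the tolerance."""
    return any(abs(row["gap"]) > tolerance for row in report)

# Hypothetical data: ten low-scored and ten high-scored leads per period.
scores = [0.1] * 10 + [0.9] * 10
last_quarter = [1] + [0] * 9 + [1] * 9 + [0]      # 10% and 90% converted
this_quarter = [1] + [0] * 9 + [1] * 4 + [0] * 6  # high scores now convert at 40%

print(needs_retraining(calibration_report(scores, last_quarter)))
print(needs_retraining(calibration_report(scores, this_quarter)))
```

Running a check like this on a schedule turns "periodic retraining" from a calendar reminder into a response to measured degradation.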

Organizational Adoption

The best model delivers zero value if nobody uses it.

Sales resistance. Reps who believe in their own judgment resist following algorithmic recommendations. Building trust requires demonstrating wins.

Process inertia. Existing workflows don’t incorporate prediction. Changing processes requires effort that organizations resist.

Accountability gaps. Who owns predictive analytics? Marketing implemented it but Sales must use it. Without clear ownership, nobody drives adoption.

Measurement gaps. If you can’t measure the improvement from predictive scoring, you can’t demonstrate value. Skeptics remain skeptical.

Successful implementation requires executive sponsorship, clear ownership, A/B testing to demonstrate value, and ongoing communication of results.
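For the A/B testing step, a standard two-proportion z-test is one simple way to check whether a scored group really converts better than a control group. The sketch below uses only the Python standard library; the function name and the example counts are hypothetical, and a real test would also need random assignment and a pre-agreed sample size.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates.

    conv_a / n_a: conversions and leads in the control group.
    conv_b / n_b: conversions and leads in the group worked by score.
    Returns (z, p_value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; test is undefined")
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return z, 2 * (1 - cdf)

# Hypothetical pilot: 1,000 leads per arm, 50 vs. 70 conversions.
z, p_value = two_proportion_z_test(50, 1000, 70, 1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

With these made-up numbers the p-value lands at roughly 0.06, which is a useful reminder that even a visible lift can fall short of conventional significance at small volumes.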

Technical Complexity

Building and maintaining predictive analytics requires capabilities many operations lack.

Data engineering. Connecting disparate systems, maintaining pipelines, and ensuring data quality requires specialized skills.

Data science. Building, validating, and optimizing models requires statistical expertise most marketing teams lack.

MLOps. Deploying models to production, monitoring performance, and managing retraining requires operational infrastructure.

Integration. Connecting predictions to activation systems requires technical work beyond model building.

Organizations often underestimate the ongoing investment required. Building a model is 20% of the work; operationalizing and maintaining it is 80%.

The Future of Predictive Analytics in Lead Generation

Predictive analytics is evolving rapidly. Current capabilities are table stakes; emerging capabilities will reshape competition.

Real-Time Prediction

Today’s predictive scoring often operates in batches: leads are scored periodically rather than instantaneously. Emerging systems score in real time as leads engage.

In-session scoring. As a visitor navigates your site, their conversion probability updates with each action. The visitor consuming case studies after viewing pricing receives different treatment than one bouncing after 10 seconds.

Dynamic pricing. Real-time value prediction enables real-time pricing. Lead prices adjust based on predicted conversion probability, segment value, and competitive dynamics.

Instant routing. Millisecond scoring enables instant routing to optimal handlers. High-value leads reach senior resources immediately; lower-value leads enter automated sequences.
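Once a score is available at request time, the routing step itself can be very small. The following is a minimal sketch, assuming the model returns a conversion probability between 0 and 1. The `Lead` class, the handler names, and both thresholds are hypothetical and would need tuning against your own conversion data.

```python
from dataclasses import dataclass

# Hypothetical cut-offs, not recommendations.
SENIOR_THRESHOLD = 0.70
STANDARD_THRESHOLD = 0.30

@dataclass
class Lead:
    lead_id: str
    score: float  # predicted conversion probability in [0, 1]

def route(lead: Lead) -> str:
    """Return the name of the handler that should receive this lead."""
    if lead.score >= SENIOR_THRESHOLD:
        return "senior_rep"
    if lead.score >= STANDARD_THRESHOLD:
        return "standard_queue"
    return "automated_nurture"

for lead in (Lead("a1", 0.85), Lead("b2", 0.45), Lead("c3", 0.10)):
    print(lead.lead_id, route(lead))
```

Keeping the thresholds as named constants makes it easier to A/B test different cut-offs without touching the routing logic.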

Prescriptive Analytics

Predictive analytics answers “what will happen?” Prescriptive analytics answers “what should we do about it?”

Next-best-action recommendations. Beyond scoring, systems recommend specific actions: call now, send specific content, adjust offer, schedule demo. The system moves from prediction to prescription.

Automated optimization. Systems don’t just recommend actions; they execute them. Campaign budgets adjust automatically based on predicted outcomes. Lead routing adapts without human intervention.

Simulation and scenario planning. Before taking action, systems simulate expected outcomes across alternatives. What happens if we increase budget here versus there? Predictions enable informed experimentation.

Agentic Commerce Integration

As AI agents increasingly mediate commerce, predictive analytics must evolve.

Agent behavior prediction. When AI agents shop on behalf of consumers, predicting agent behavior differs from predicting human behavior. Different signals matter; different patterns emerge.

API-first prediction. As forms give way to API queries, scoring must operate on structured parameters rather than behavioral observation. The data inputs change.

Algorithmic trust signals. Predictions about which providers agents will recommend become relevant. What makes a provider algorithmically trustworthy differs from what makes them humanly persuasive.

Privacy-First Prediction

Increasing privacy regulation and tracking prevention challenge predictive analytics.

First-party data reliance. As third-party cookies disappear and tracking prevention grows, predictions must rely more heavily on first-party data you collect directly.

Aggregated modeling. Privacy-preserving techniques enable prediction without individual-level tracking. Cohort-based approaches and differential privacy maintain utility while protecting privacy.
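To illustrate the differential privacy idea, the sketch below adds Laplace noise to cohort-level lead counts before they are shared or modeled. It assumes each lead belongs to exactly one cohort, so a single lead changes any count by at most 1. The function names and the `epsilon` value are illustrative, and a production system should rely on a vetted privacy library rather than Python's general-purpose random generator.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution centered on zero.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_cohort_counts(counts, epsilon, rng=None):
    """Return noisy counts per cohort.

    Assumption: sensitivity is 1 (one lead affects one count by one),
    so the noise scale is 1 / epsilon. Smaller epsilon means more noise.
    """
    rng = rng or random.Random()
    scale = 1.0 / epsilon
    return {cohort: n + laplace_noise(scale, rng)
            for cohort, n in counts.items()}

# Hypothetical cohorts keyed by acquisition channel.
true_counts = {"paid_search": 1200, "organic": 800, "referral": 150}
print(private_cohort_counts(true_counts, epsilon=0.5))
```

Large cohorts stay useful after noise is added, while very small cohorts become unreliable, which is the intended trade-off.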

Server-side intelligence. Server-side tracking recovers signals lost to client-side blocking, maintaining prediction accuracy despite browser restrictions.

Frequently Asked Questions

1. What is predictive lead scoring and how does it differ from traditional lead scoring?

Traditional lead scoring assigns fixed point values based on assumptions about what matters: job titles receive 20 points, pricing page visits add 15 points, and so forth. The weights are arbitrary, based on intuition rather than outcome data. Two leads with identical scores might have dramatically different conversion probabilities.

Predictive lead scoring uses machine learning to analyze historical data and identify patterns that actually predict conversion in your specific business. The model might learn that Directors at mid-market manufacturing companies who engage with case studies convert at 18%, while VPs at enterprise technology companies with similar engagement convert at only 4%. These patterns emerge from data, not assumptions. Companies implementing predictive lead scoring report 40% improvement in lead-to-purchase conversion by focusing effort on leads most likely to buy.

2. How much data do I need to implement predictive analytics effectively?

Effective predictive analytics requires sufficient data for statistical reliability. At minimum, you need 6-12 months of historical data with at least 1,000 conversion events (leads that became customers). Models trained on smaller datasets cannot reliably distinguish signal from noise.

Beyond volume, you need connected data: lead attributes (demographics, firmographics), behavioral data (engagement patterns, content consumption), and outcome data (which leads converted and at what value). If you cannot connect leads to eventual sales outcomes, models cannot learn what success looks like. Many implementations fail not from insufficient volume but from fragmented data that prevents learning.

3. What ROI can I realistically expect from predictive analytics?

Documented results show companies achieving 40% improvement in lead-to-purchase conversion rates, 30-60% reduction in customer acquisition cost, and 50% reduction in time spent on unqualified leads. However, results vary significantly based on starting point and implementation quality.

A 40% improvement on a 5% baseline conversion rate lifts the absolute rate to 7%: impressive in relative terms, but only a two-percentage-point gain in absolute terms. Operations that are already well optimized see smaller gains than those with significant inefficiency. Implementation quality matters enormously: off-the-shelf tools without customization tend to underperform custom models trained on your specific data. Expect 3-6 months before meaningful improvement, with continued optimization thereafter.
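The relative-versus-absolute distinction is easy to check with a few lines of arithmetic. This sketch reuses the 5% baseline and 40% relative lift from the answer above; the volume of 10,000 leads is a hypothetical figure added only to turn rates into counts.

```python
def apply_relative_lift(baseline_rate, relative_lift):
    """Return the new conversion rate after a relative improvement."""
    return baseline_rate * (1 + relative_lift)

baseline = 0.05          # 5% baseline conversion rate
improved = apply_relative_lift(baseline, 0.40)
gain_points = (improved - baseline) * 100   # gain in percentage points

leads = 10_000           # hypothetical lead volume
extra_conversions = round(leads * improved) - round(leads * baseline)

print(f"new rate: {improved:.1%}")
print(f"absolute gain: {gain_points:.1f} percentage points")
print(f"extra conversions per {leads:,} leads: {extra_conversions}")
```

Running the same calculation with your own baseline shows quickly whether a quoted relative improvement would be material for your volume.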

4. How do I start implementing predictive analytics with limited resources?

Begin with your existing platforms. If you use Salesforce, explore Einstein Lead Scoring. HubSpot offers predictive scoring in higher tiers. Marketo and Pardot provide similar capabilities. These built-in tools require less technical investment than custom solutions while delivering meaningful improvement.

Focus first on data foundation: ensure leads connect to outcomes, standardize definitions, clean historical data. The best model fails without quality data. Start with simple conversion prediction rather than sophisticated applications. Measure baselines before implementation so you can quantify improvement. Expand sophistication as you demonstrate value and justify additional investment.

5. What are the most common reasons predictive analytics implementations fail?

Data quality issues top the list. Missing outcome data prevents learning. Inconsistent definitions produce unreliable predictions. Small sample sizes lack statistical power. Without quality data, even sophisticated models fail.

Organizational adoption failures rank second. Sales teams resist algorithmic recommendations. Processes don’t incorporate predictions. Accountability gaps mean nobody drives adoption. The best model delivers zero value if nobody uses it.

Technical underestimation ranks third. Organizations build models without planning for deployment, monitoring, and maintenance. Building the model is 20% of the work; operationalizing it is 80%. Model drift degrades accuracy over time, requiring ongoing attention most organizations don’t budget.

6. How does predictive analytics handle fraud detection in lead generation?

Predictive fraud detection identifies patterns that rule-based systems miss. Machine learning models analyze mouse movement anomalies, keystroke dynamics, form completion velocity, and behavioral sequences to distinguish bots from humans. Synthetic identity detection identifies fabricated identities through cross-reference patterns: phone numbers ported recently, addresses not matching public records, email domains created within 24 hours.

Litigator identification flags phone numbers associated with TCPA litigation before contact occurs. Duplicate detection uses fuzzy matching and entity resolution to identify “John Smith” at “123 Main St” and “J Smith” at “123 Main Street” as the same person. The ROI is direct: every fraudulent lead prevented saves the lead cost plus downstream costs of returns, chargebacks, and potential litigation.
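The duplicate detection example can be sketched with the standard library's `difflib`. This is a deliberately simple illustration, not a full entity resolution pipeline: the abbreviation map, the field names, and both similarity thresholds are assumptions chosen so that the “John Smith” / “J Smith” pair above is treated as a match.

```python
import re
from difflib import SequenceMatcher

# Hypothetical abbreviation map; note "st" can also mean "saint".
ABBREVIATIONS = {"st": "street", "ave": "avenue", "rd": "road", "dr": "drive"}

def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and expand common address abbreviations."""
    tokens = re.sub(r"[^a-z0-9\s]", " ", text.lower()).split()
    return " ".join(ABBREVIATIONS.get(tok, tok) for tok in tokens)

def similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] between two normalized strings."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def is_likely_duplicate(lead_a, lead_b, name_min=0.8, address_min=0.9) -> bool:
    """Flag two leads as likely duplicates when name and address both match closely."""
    return (similarity(lead_a["name"], lead_b["name"]) >= name_min
            and similarity(lead_a["address"], lead_b["address"]) >= address_min)

first = {"name": "John Smith", "address": "123 Main St"}
second = {"name": "J Smith", "address": "123 Main Street"}
print(is_likely_duplicate(first, second))
```

Requiring both fields to clear a threshold keeps two different people at the same address, or two people with the same name, from being merged.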

7. How often do predictive models need to be retrained?

Models degrade as conditions change – a phenomenon called model drift. Market shifts, product changes, process modifications, and evolving buyer profiles all affect prediction accuracy. Most operations should plan for quarterly model evaluation and at least annual retraining.

More sophisticated operations implement continuous learning: models update incrementally as new outcome data becomes available. This approach reduces drift impact but requires more sophisticated technical infrastructure. Regardless of approach, predictions should be validated against actual outcomes regularly. When conversion rates among high-scored leads decline meaningfully, the model needs attention.
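A simple version of that validation check compares the conversion rate among high-scored leads in a recent window with the rate in a baseline window. In this sketch the 0.7 score cut-off and the 20% relative-drop trigger are hypothetical, and leads are represented as `(score, converted)` pairs for brevity.

```python
def high_score_conversion_rate(leads, threshold=0.7):
    """Conversion rate among leads scored at or above `threshold`.

    `leads` is an iterable of (score, converted) pairs, where `converted`
    is True or False. Returns None when no lead clears the threshold.
    """
    outcomes = [converted for score, converted in leads if score >= threshold]
    if not outcomes:
        return None
    return sum(outcomes) / len(outcomes)

def needs_attention(baseline_leads, recent_leads,
                    threshold=0.7, max_relative_drop=0.2):
    """True when the high-score conversion rate fell by more than the allowed share."""
    base = high_score_conversion_rate(baseline_leads, threshold)
    recent = high_score_conversion_rate(recent_leads, threshold)
    if base is None or recent is None or base == 0:
        return False  # not enough information to judge
    return (base - recent) / base > max_relative_drop

# Hypothetical windows: 30% vs. 18% conversion among high-scored leads.
last_year = [(0.9, True)] * 30 + [(0.9, False)] * 70 + [(0.2, False)] * 100
last_month = [(0.9, True)] * 18 + [(0.9, False)] * 82
print(needs_attention(last_year, last_month))
```

Because recent leads may not have had time to convert, the recent window should only include leads old enough to have a known outcome.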

8. Can predictive analytics work with limited third-party data?

Predictive analytics becomes more challenging as third-party data sources diminish and privacy regulations expand. However, several approaches maintain effectiveness.

First-party data focus concentrates on signals you collect directly: on-site behavior, email engagement, form responses, and declared preferences. These signals often prove more predictive than third-party data anyway.

Intent data providers (6sense, Bombora, TrustRadius) aggregate buying signals across the web, providing supplemental signals while maintaining privacy compliance. Server-side tracking recovers signals lost to client-side blocking, maintaining data availability despite browser restrictions.

The operations investing in first-party data infrastructure now will maintain predictive capability as privacy restrictions tighten.

9. How does predictive analytics integrate with AI-powered automation?

Predictive analytics provides the intelligence layer that AI automation acts upon. Predictive scores determine which leads receive human attention versus automated nurture. Predicted conversion probability triggers dynamic routing, content personalization, and offer customization.

Chatbots and conversational AI use predictive scores to prioritize engagement and customize conversation flows. A high-score lead receives immediate connection to sales; a low-score lead enters qualification sequences. Bid management systems adjust advertising bids based on predicted lead value, concentrating spend on high-probability segments.

The integration multiplies impact: prediction without automation remains insight without action; automation without prediction treats all leads equally.

10. How will agentic commerce change predictive analytics requirements?

As AI agents increasingly shop on behalf of consumers, predictive analytics must evolve. When agents bypass forms to query APIs directly, the behavioral signals that current models depend on disappear. Predictions must operate on structured parameters rather than observed behavior.

Agent behavior prediction differs from human behavior prediction. The signals that matter are data structure quality, response completeness, and verification credentials, not the psychological triggers that influence humans. Algorithmic trust signals become relevant: what makes a provider appealing to an algorithm differs from what makes them appealing to a human.

The operators building predictive capabilities now are best positioned to adapt as agent-mediated commerce scales. The data infrastructure, modeling expertise, and operational integration remain valuable even as specific applications evolve.

Key Takeaways

- Predictive analytics transforms lead generation from assumption-based scoring to data-driven conversion probability, with companies reporting 40% improvement in lead-to-purchase conversion rates.

- Effective implementation requires connected data spanning lead attributes, behavioral signals, and outcome data – most failures trace to data quality issues rather than modeling sophistication.

- Start with platform-native scoring tools before investing in custom solutions; the integration advantages often outweigh modeling limitations for initial implementation.

- Fraud detection through predictive analytics delivers direct ROI by identifying bot traffic, synthetic identities, and professional litigators before they consume resources or create liability.

- Model drift degrades prediction accuracy over time; plan for quarterly evaluation and at least annual retraining as market conditions, products, and processes evolve.

- Sales adoption determines actual impact; the best model delivers zero value if nobody uses it. A/B testing to demonstrate wins builds trust.

- Implementation follows logical progression: data foundation first (months 1-3), basic scoring second (months 3-6), optimization applications third (months 6-12), advanced applications thereafter.

- As privacy restrictions tighten and agentic commerce emerges, first-party data infrastructure and API-first architectures become essential for maintaining predictive capability.

- Predictive analytics is additive, not replacement – predictions inform decisions but don’t eliminate the need for human judgment on complex opportunities and strategic questions.

Statistics and projections current as of December 2025. Predictive analytics capabilities and best practices continue to evolve rapidly.