How Model Context Protocol became the universal connector for enterprise AI – and why it matters for every organization building with large language models

The N×M Problem

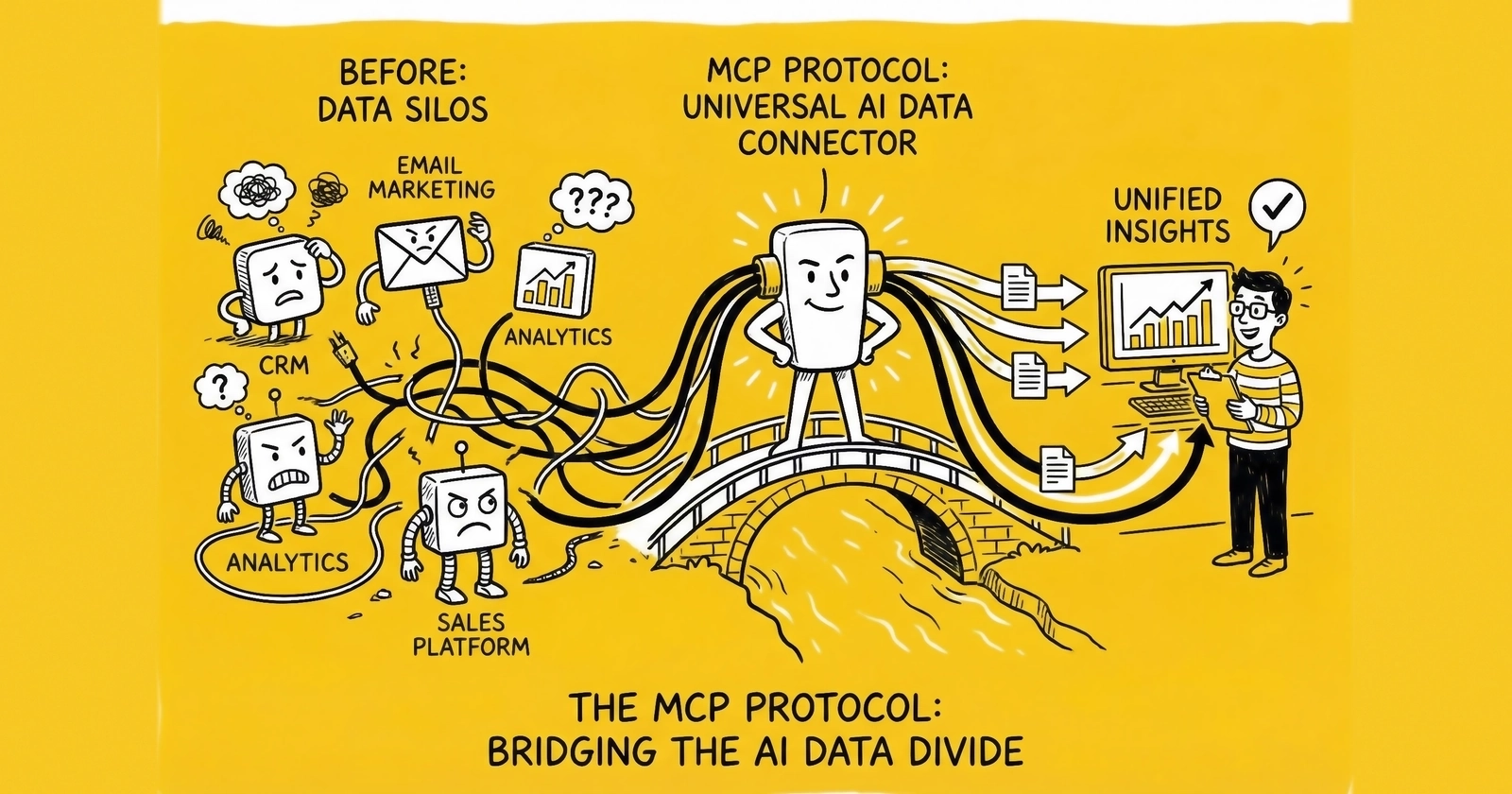

Every enterprise building AI applications faced the same nightmare.

You want your AI assistant to access Salesforce. That’s one custom integration. Now add Google Drive. That’s two. Slack makes three. Your internal database, four. The company wiki, five. Each integration requires unique authentication, custom code, specific API handling, and ongoing maintenance.

When any of those systems updates their API, your integration breaks. When you switch AI providers – from Claude to GPT to Gemini – you rebuild everything from scratch.

This was the N×M problem: N different AI models needing to connect to M different data sources, resulting in N×M custom integrations. Scale multiplied complexity. Every new capability required exponential engineering effort.

Then Anthropic released Model Context Protocol.

One year later, MCP has achieved what few technology standards accomplish: genuine industry-wide adoption from competing giants. OpenAI, Google DeepMind, Microsoft, AWS, Block, Bloomberg – organizations that compete fiercely in AI have united around a single protocol for connecting AI to enterprise systems.

The numbers tell the story:

- 97M+ monthly SDK downloads as of late 2025

- 5,800+ MCP servers and 300+ MCP clients available

- 8 million server downloads in the first six months (up from ~100,000 at launch)

- Major deployments at Fortune 500 companies across every industry

Jensen Huang, CEO of NVIDIA, captured the significance: “The work on MCP has completely revolutionized the AI landscape.”

This isn’t hyperbole. MCP transforms how AI applications are built, deployed, and scaled. Understanding the protocol is no longer optional for organizations serious about enterprise AI – it’s foundational to context engineering, the discipline that determines whether AI pilots succeed.

Part I: What MCP Actually Is

The Universal Connector Concept

MCP is an open standard that defines how AI applications connect to external tools, data sources, and systems. Think of it as USB-C for AI – a universal interface that replaces fragmented, custom connections with a single, standardized protocol.

Before MCP, connecting an AI model to a database required:

- Understanding the database’s specific API

- Building authentication handling

- Implementing error management

- Creating data transformation logic

- Maintaining the integration as either system evolved

Multiply that work by every system the AI needs to access. The result was either massive engineering overhead or severely limited AI capability – a core reason why enterprise data fragmentation undermines AI initiatives.

MCP replaces this fragmentation with a common protocol. An AI application that supports MCP can connect to any MCP-compliant data source without custom integration code. The protocol handles communication, capability discovery, and data exchange through standardized interfaces.

The analogy to USB is precise. Before USB, connecting a printer required understanding parallel ports; a keyboard needed PS/2 connectors; external drives used SCSI cables. Each device type required different knowledge. USB unified these connections – any USB device works with any USB port.

MCP does the same for AI. Any MCP-compliant model works with any MCP-compliant data source. Build once, connect anywhere.

Architecture: Clients, Servers, and Hosts

MCP uses a client-server architecture inspired by the Language Server Protocol (LSP) – the standard that enables code editors to support any programming language. The architecture comprises three components working together.

The host application is the user-facing AI application – Claude Desktop, ChatGPT, an AI-enhanced IDE, or a custom enterprise application where users interact with AI. Embedded within the host, the MCP client translates user requests into MCP protocol messages and manages connections to servers; each host typically runs multiple clients, one for each connected server. MCP servers are lightweight programs that expose specific capabilities – access to a database, a code repository, a business tool, a file system – defining what data and functions they make available.

The communication flows:

- Host application receives user request

- MCP client determines which server capabilities are needed

- Client sends standardized MCP messages to relevant servers

- Servers execute requests and return results

- Client aggregates responses for the host application

- Host incorporates results into AI response

This architecture decouples AI applications from data sources. Change your AI provider? The servers still work. Add a new data source? Just deploy another server – no changes to existing infrastructure.

The Three Primitives

MCP defines three core primitives that servers can expose, each serving a distinct purpose in enterprise integration.

Resources represent data that AI applications can read – documents, database records, API responses, file contents. A Google Drive MCP server, for example, might expose documents as resources, allowing AI to read spreadsheets, presentations, and text files. Tools represent functions that AI applications can invoke – actions with side effects that go beyond reading data. A calendar MCP server might expose tools like “create_event” or “reschedule_meeting” that modify the user’s calendar. Prompts provide reusable templates for common interactions, predefined patterns that guide how AI should handle specific scenarios. A customer service MCP server might expose prompts for handling refund requests, with templated structures for gathering required information.

These primitives cover the vast majority of enterprise integration needs: reading information (Resources), taking action (Tools), and applying patterns (Prompts).

JSON-RPC Transport

Under the hood, MCP messages travel via JSON-RPC 2.0 – a lightweight remote procedure call protocol that’s well-understood, widely supported, and transport-agnostic.

This means MCP can work over:

- Standard I/O (stdio): For local server processes

- HTTP with Server-Sent Events (SSE): For web-based servers

- WebSockets: For persistent, bidirectional connections

The transport flexibility matters for enterprise deployment. Organizations can run MCP servers wherever makes sense – locally on developer machines, in corporate data centers, or in cloud environments – using whatever transport fits their infrastructure.

Part II: Why MCP Won

The Standards Paradox

Technology history is littered with failed standards. The classic XKCD comic about standards captures the usual pattern: fourteen competing standards exist, someone creates a fifteenth to unify them, and now there are fifteen competing standards. MCP broke this pattern. Within eighteen months of launch, it achieved adoption from every major AI provider and platform. How did it succeed where others failed?

Several factors converged at exactly the right moment. MCP launched precisely when the pain of fragmented integrations became acute – organizations had moved past AI experimentation into production deployment, where integration complexity became the dominant obstacle. The protocol arrived as a solution to a problem everyone was actively experiencing, not a theoretical improvement.

Technical elegance accelerated adoption. The protocol is simple enough to implement in days, yet powerful enough to handle complex scenarios. The LSP-inspired architecture was already familiar to developers. JSON-RPC is a known quantity. The primitives (Resources, Tools, Prompts) map naturally to enterprise needs. Anthropic reinforced this accessibility by releasing MCP as an open-source project from day one, with clear specifications, reference implementations, and SDKs in multiple languages. This wasn’t a proprietary standard disguised as open – it was genuinely community-owned infrastructure.

Early adoption patterns sealed the outcome. When Block (formerly Square), Apollo, Sourcegraph, and other respected engineering organizations adopted MCP immediately, it sent a credibility signal that accelerated broader adoption. Then came the tipping point: once OpenAI adopted MCP in March 2025, other AI providers faced a stark choice – adopt the emerging standard or maintain incompatible alternatives. Google DeepMind and Microsoft followed. The network effects became self-reinforcing.

The Linux Foundation Donation

In December 2025, Anthropic donated MCP to the Agentic AI Foundation (AAIF), a directed fund under the Linux Foundation co-founded by Anthropic, Block, and OpenAI.

This governance transition matters for enterprise adoption. Organizations that hesitate to build on technology controlled by a single vendor can now commit to MCP with confidence. Vendor neutrality means no single company controls the standard’s evolution. Linux Foundation governance provides institutional continuity for the long term. Specification Enhancement Proposals (SEPs) enable collaborative, community-driven development. And the Linux Foundation’s track record with Kubernetes, Node.js, and other critical infrastructure provides enterprise credibility.

The governance model mirrors what made Kubernetes successful: strong initial design from a single company (Google), followed by transition to neutral governance that enabled competitor adoption and community evolution.

Adoption Metrics

The numbers validate the standard’s momentum:

| Metric | November 2024 | December 2025 |

|---|---|---|

| Monthly SDK downloads | ~100,000 | 97M+ |

| MCP servers available | ~50 | 5,800+ |

| MCP clients | ~5 | 300+ |

| Enterprise deployments | Pilot only | Fortune 500 production |

Market projections estimate the MCP ecosystem growing from $1.2 billion (2022) to $4.5 billion (2025), with some analysts suggesting 90% of organizations will use MCP by end of 2025.

This adoption velocity exceeds most technology standards. HTTP took years to displace alternatives. REST evolved over a decade. MCP achieved critical mass in twelve months.

Part III: Enterprise Implementation

Building Your First MCP Server

For developers, MCP implementation starts with servers. A basic server requires:

-

Define Capabilities: What resources, tools, and prompts will this server expose?

-

Implement Handlers: Code that executes when clients request each capability

-

Configure Transport: How will clients connect (stdio, HTTP, WebSocket)?

-

Deploy: Run the server where AI applications can reach it

The MCP SDKs (available in TypeScript, Python, and other languages) handle protocol details. Developers focus on business logic – what data to expose and what actions to enable.

A minimal Python server exposing a database query tool might be implemented in under 100 lines of code. The SDK handles JSON-RPC serialization, capability negotiation, and error handling.

Enterprise Architecture Patterns

Production MCP deployments typically follow several architectural patterns. The gateway pattern aggregates multiple backend systems behind a single interface – rather than exposing individual servers for CRM, ERP, and database access, a gateway provides unified access with centralized authentication, logging, and rate limiting. The sidecar pattern takes a different approach: MCP servers run alongside existing services, exposing their capabilities without modifying the services themselves. A sidecar MCP server can wrap a legacy API, translating between MCP protocol and the original interface.

For more complex scenarios, two additional patterns apply. The mesh pattern coordinates multiple MCP servers through an orchestration layer, supporting scenarios where AI actions require multiple systems – for example, a purchase approval that touches inventory, accounting, and notification systems. The registry pattern addresses organizations with many MCP servers by implementing registries for discovery and governance. Clients query the registry to find servers with needed capabilities, rather than maintaining static configurations.

Security Architecture

Security represents MCP’s most critical enterprise consideration. The November 2025 specification update addressed multiple security requirements through four key mechanisms. Authentication extensions now support OAuth 2.0 client credentials for machine-to-machine authorization and enterprise IdP policy controls for cross-application access, enabling MCP to integrate with existing enterprise identity infrastructure. Client security requirements provide guidelines for local server installation that prevent common attack vectors like command injection through malicious servers.

The specification also addresses compliance and containment. Audit and traceability features provide protocol support for logging all context interactions, enabling compliance with governance requirements. Sandboxed execution patterns isolate MCP servers from sensitive systems, limiting blast radius if a server is compromised.

However, significant security challenges remain. Security researchers have identified multiple attack vectors: tool poisoning, where MCP tools with malicious descriptions manipulate AI behavior; prompt injection via tools, where crafted tool outputs hijack AI responses; cross-server shadowing, where malicious servers intercept requests meant for legitimate servers; and package supply chain compromises through malicious MCP packages in npm or PyPI registries.

These risks are not theoretical. The November 2025 CVE-2025-6514 in the mcp-remote package – affecting 437,000+ developer environments through shell command injection – demonstrated exactly how dangerous unvetted MCP infrastructure can become.

Enterprise deployments require layered security: vetting servers before deployment, monitoring for behavioral anomalies, implementing network segmentation, and maintaining update processes for security patches.

Governance Framework

Effective MCP governance addresses five interconnected domains. Server registration determines which MCP servers are approved for enterprise use, who can deploy new servers, and how servers are validated before deployment. Capability boundaries define what resources and tools each server should expose and how to prevent overly permissive access. Access control establishes which users and applications can connect to which servers and how to implement least-privilege principles.

The final two domains complete the picture. Monitoring answers what telemetry to collect from MCP interactions and how to detect anomalous behavior. Incident response defines what processes engage when an MCP-related security event occurs and how to contain and remediate the impact.

Organizations establishing MCP governance should start with clear policies before deployment rather than retrofitting governance onto existing infrastructure.

Part IV: The Ecosystem

Commercial MCP Servers

Major platforms now offer official MCP servers:

| Platform | Capability |

|---|---|

| Google Drive | Document access and search |

| Slack | Channel reading and messaging |

| GitHub | Repository access and code operations |

| Salesforce | CRM data and record operations |

| PostgreSQL | Database query and manipulation |

| Notion | Workspace and page access |

| Jira | Issue tracking and project management |

| Microsoft 365 | Email, calendar, and document access |

These commercial servers accelerate enterprise deployment – organizations can connect AI to major systems without building custom integrations.

Developer Tools Integration

MCP has become particularly prevalent in AI-assisted development, with adoption spanning the entire toolchain. IDEs like Cursor and Zed integrate MCP for codebase access, while code intelligence platforms like Sourcegraph and Codeium use MCP to provide AI with repository context. Deployment platforms such as Replit integrate MCP for project awareness, and Context7 addresses AI code generation accuracy by providing version-specific documentation through MCP.

The developer tools ecosystem demonstrates MCP’s value proposition clearly: AI coding assistants become dramatically more useful when they can access actual project context rather than relying solely on training data.

Security and Governance Vendors

A growing ecosystem addresses enterprise security needs. MCP gateways from SGNL, Pomerium, and ArchGW provide security layers for MCP traffic. New Relic launched MCP-specific observability (though capabilities remain limited). Services like MCPTotal scan servers for vulnerabilities, and various tools enable implementing governance policies across MCP deployments.

This security ecosystem remains immature compared to other enterprise infrastructure. Organizations deploying MCP at scale often need to build custom security controls.

The Agent-to-Agent Future

MCP’s roadmap extends beyond human-AI interaction to agent-to-agent communication. As autonomous agents proliferate, they need standardized ways to:

- Discover capabilities of other agents

- Request services across organizational boundaries

- Negotiate and execute transactions

- Maintain context across multi-step workflows

The Agent-to-Agent (A2A) protocol – a related standard emerging alongside MCP – addresses machine-to-machine scenarios. Together, MCP and A2A form the communication infrastructure for the agentic enterprise.

Gartner’s projection that 90% of B2B buying will be agent-intermediated by 2028 depends on this infrastructure. Procurement agents will negotiate with sales agents. Supply chain agents will coordinate with logistics agents. The protocols enabling this communication are being established now.

Part V: Implementation Strategy

Phase 1: Foundation (Months 1-3)

Assess Current State

- Inventory existing AI applications and their integration needs

- Identify high-value data sources not currently accessible to AI

- Evaluate security and compliance requirements

- Assess developer familiarity with MCP concepts

Establish Governance

- Define MCP server approval process

- Establish security requirements for server deployment

- Create monitoring and incident response procedures

- Assign ownership for MCP infrastructure

Pilot Implementation

- Select 2-3 low-risk, high-value use cases

- Deploy official MCP servers for common platforms (Google Drive, Slack, etc.)

- Build one custom server for internal system

- Document lessons learned

Phase 2: Expansion (Months 4-9)

Scale Server Deployment

- Deploy servers for additional enterprise systems

- Implement gateway pattern for unified access

- Build internal server registry

- Establish deployment automation

Enhance Security

- Implement network segmentation for MCP traffic

- Deploy monitoring and alerting

- Integrate with existing SIEM/SOC infrastructure

- Conduct security assessments of deployed servers

Enable Developers

- Provide MCP training and documentation

- Create templates for common server patterns

- Establish code review requirements for MCP components

- Build internal MCP server library

Phase 3: Maturity (Months 10-18)

Advanced Patterns

- Implement multi-agent workflows using MCP

- Deploy cross-organizational MCP connections (with partners/vendors)

- Build sophisticated orchestration capabilities

- Enable self-service server deployment with governance guardrails

Optimize Operations

- Implement comprehensive observability

- Automate security scanning and compliance validation

- Establish SLAs for MCP infrastructure

- Build operational runbooks for common scenarios

Strategic Integration

- Integrate MCP into enterprise architecture standards

- Include MCP considerations in vendor evaluations

- Develop MCP competency as organizational capability

- Plan for emerging protocols (A2A, etc.)

Common Pitfalls

Organizations implementing MCP frequently encounter predictable obstacles. The most dangerous is underestimating security – treating MCP servers like internal tools rather than security-critical infrastructure, when every MCP server represents a potential attack surface. Closely related is over-permissive access: exposing more capabilities than necessary through MCP rather than starting with minimal permissions and expanding based on demonstrated need.

Operational failures are equally common. Neglecting monitoring means deploying servers without visibility into usage patterns, errors, or anomalies – and monitoring gaps inevitably become security gaps. Many organizations also waste resources by custom building when unnecessary, writing servers when official or community alternatives exist. Finally, ignoring governance by allowing ad-hoc server deployment without approval processes creates shadow MCP servers and unmanaged risk.

Part VI: The Competitive Landscape

MCP vs. Alternatives

MCP isn’t the only approach to AI integration, but it has emerged as the dominant standard. OpenAI Function Calling predates MCP and enables AI to invoke functions, but lacks standardization across providers – function calling remains useful but doesn’t address the N×M problem. ChatGPT Plugins, OpenAI’s earlier approach to extending ChatGPT, required ChatGPT-specific implementation without cross-platform compatibility. OpenAI’s own adoption of MCP signals their recognition of the standard’s advantages.

Alternative approaches persist. Custom APIs offer maximum control but don’t benefit from ecosystem effects and require ongoing maintenance. Framework-specific integrations like LangChain and LlamaIndex work well within their ecosystems but don’t provide cross-framework standardization.

MCP’s advantage is network effects: the more servers and clients exist, the more valuable the protocol becomes. An MCP server works with any compliant client; custom integrations work only with specific implementations.

The Emerging Stack

The “agentic AI mesh” architecture emerging in 2025-2026 positions MCP as foundational infrastructure:

┌─────────────────────────────────────────────────┐

│ AI Applications │

│ (Claude, ChatGPT, Custom Applications) │

├─────────────────────────────────────────────────┤

│ MCP Clients │

│ (Protocol handling, server management) │

├─────────────────────────────────────────────────┤

│ MCP Gateway / Mesh │

│ (Security, routing, observability) │

├─────────────────────────────────────────────────┤

│ MCP Servers │

│ (Resources, Tools, Prompts) │

├─────────────────────────────────────────────────┤

│ Enterprise Systems │

│ (Databases, APIs, Services) │

└─────────────────────────────────────────────────┘This architecture enables organizations to evolve AI applications independently from data sources, swap AI providers without rebuilding integrations, and implement security centrally rather than per-integration.

Building Competitive Advantage

Organizations can leverage MCP for competitive advantage through several reinforcing mechanisms. Speed to capability matters most immediately – standard integration means faster deployment of AI capabilities, connecting in days while competitors build custom integrations. Ecosystem access amplifies this advantage, as the growing MCP server ecosystem provides capabilities that would require significant development effort to build internally.

Provider flexibility provides strategic optionality. MCP-based architectures can switch AI providers based on capability and cost without integration rework, improving negotiating position and adaptation speed. Finally, talent efficiency compounds over time – developers learning MCP apply that knowledge across projects, while custom integration skills don’t transfer.

Key Takeaways

-

MCP solves the N×M integration problem by providing a universal standard that transforms multiplicative complexity into additive complexity – any AI model can connect to any data source through one protocol.

-

Unprecedented industry adoption occurred within 12 months, with Anthropic, OpenAI, Google DeepMind, Microsoft, AWS, and Bloomberg all joining the Agentic AI Foundation under Linux Foundation governance.

-

97 million monthly SDK downloads and 5,800+ servers demonstrate MCP has achieved critical mass faster than comparable standards like HTTP or REST.

-

Three primitives – Resources, Tools, Prompts – cover the vast majority of enterprise integration needs: reading data, taking action, and applying patterns.

-

Security remains the critical challenge with documented vulnerabilities including tool poisoning, prompt injection, and supply chain attacks. Enterprise deployments require layered security controls.

-

Buy before build applies to MCP servers – commercial servers for Google Drive, Slack, GitHub, Salesforce, and other major platforms enable rapid deployment without custom development.

-

Governance before scaling prevents shadow MCP servers and unmanaged risk. Server registration, capability boundaries, access control, and monitoring should precede enterprise rollout.

-

Implementation spans 12-18 months across foundation (months 1-3), expansion (months 4-9), and maturity (months 10-18) phases for comprehensive enterprise adoption.

-

The Agent-to-Agent (A2A) protocol extends MCP into machine-to-machine communication, preparing infrastructure for Gartner’s prediction that 90% of B2B buying will be agent-intermediated by 2028.

-

Competitive advantage accrues to early adopters through speed to capability, ecosystem access, provider flexibility, and talent efficiency. Organizations building MCP architecture now position for the agentic AI transformation.

MCP specifications, ecosystem statistics, and security considerations current as of late 2025. Organizations should review current protocol documentation and security advisories before deployment.