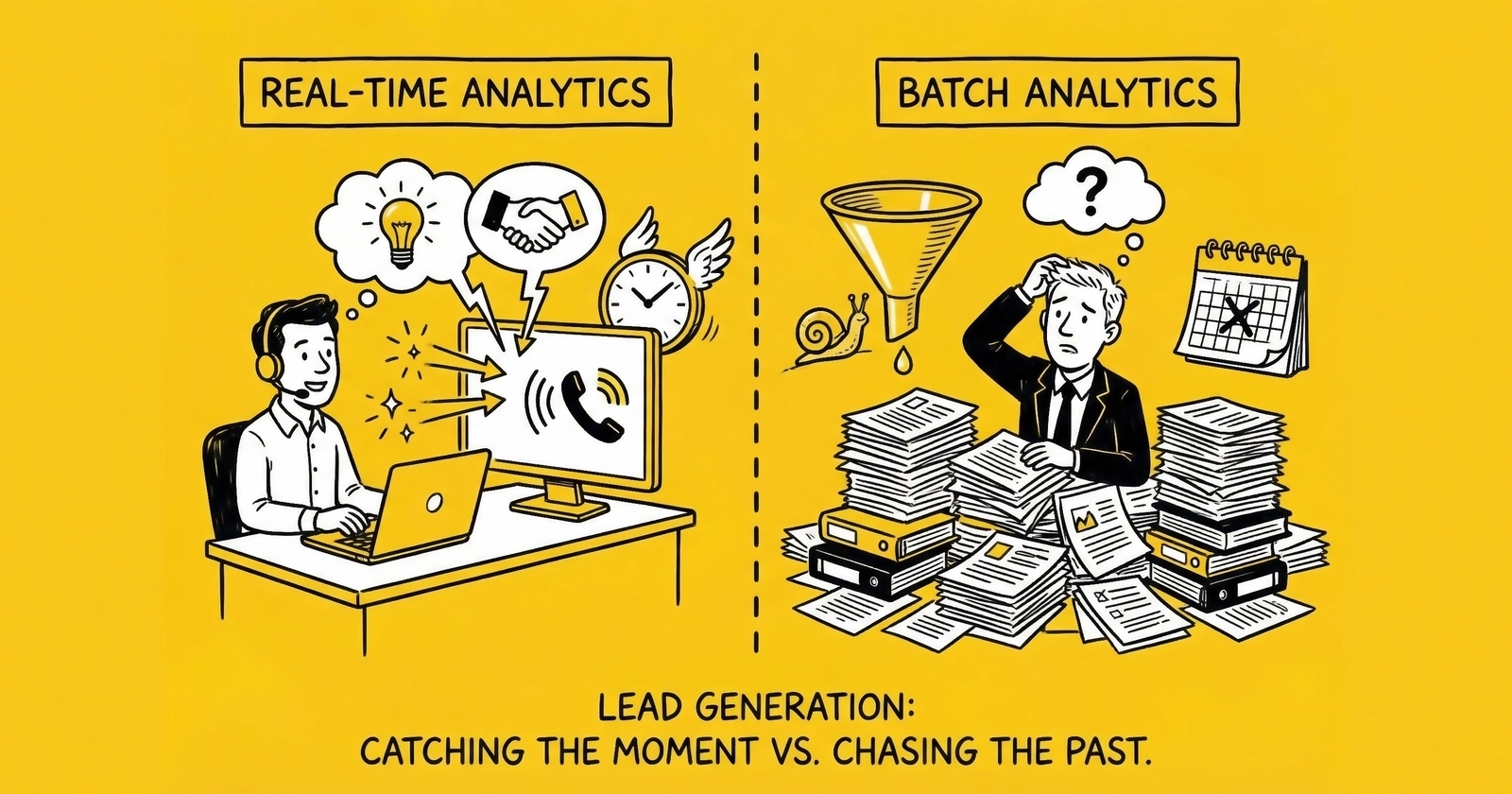

The distinction between real-time and batch analytics determines whether you can respond to problems as they happen or only discover them after the damage is done. Understanding when each approach serves your operation – and when forcing real-time creates more problems than it solves – separates mature analytics operations from expensive infrastructure projects that deliver little value.

The lead generation operator watching their dashboard at 10:47 AM sees something concerning: conversion rates dropped 23% over the past two hours. The campaign is still spending. Every minute that passes burns budget on traffic that is not converting.

The operator immediately pauses the underperforming campaign, investigates the cause, and discovers a landing page error introduced during an overnight deployment. Within fifteen minutes of detection, the problem is resolved. Total waste: approximately $800 in inefficient spend. Without real-time visibility, that same error would have kept burning budget through the rest of the day and night, potentially wasting $5,000 or more before the next morning’s batch report revealed the issue.

This is the promise of real-time analytics – catching problems before they compound. And in this scenario, real-time visibility saved thousands of dollars by enabling immediate intervention.

But consider another scenario. A different operator invests $15,000 monthly in real-time infrastructure to monitor metrics that update every 30 seconds. The problem: the decisions those metrics inform happen weekly during budget allocation meetings. The real-time capability provides psychological comfort – watching numbers move – without changing any business outcome. The same decisions would emerge from data refreshed every four hours.

The question is not whether real-time analytics are valuable. They are, in specific contexts. The question is where real-time creates genuine business value versus where it creates infrastructure cost and operational complexity without proportional return.

This guide provides a framework for deciding when real-time reporting justifies its investment, when batch analytics serve equally well at lower cost, and how hybrid approaches capture the benefits of both without the worst trade-offs of either.

Understanding the Fundamental Distinction

Before evaluating trade-offs, establish clear definitions. The terminology in analytics gets muddy, and that muddiness leads to poor infrastructure decisions.

What Real-Time Actually Means

Real-time analytics processes and displays data with minimal latency between event occurrence and visibility. The practical definition varies by context:

Sub-second real-time (true streaming): Data appears within milliseconds of the event. Examples include stock trading systems, fraud detection during transaction processing, and industrial sensor monitoring. Few lead generation use cases require this level of immediacy, though certain fraud prevention scenarios – detecting coordinated bot attacks or identifying suspicious submission patterns – benefit from millisecond-level awareness.

Near-real-time (micro-batch): Data appears within seconds to minutes of the event. Most “real-time” lead generation dashboards operate here – processing events in small batches every 15-60 seconds. This level captures the practical benefits of real-time for most marketing and sales operations. When operators describe their system as “real-time,” they typically mean near-real-time with minute-level granularity rather than true streaming infrastructure.

Operational real-time (hourly refresh): Data appears within the current hour. For many business decisions, hourly refresh provides sufficient timeliness while dramatically reducing infrastructure complexity. This tier represents the sweet spot for many lead generation operations – fresh enough to catch developing problems, infrequent enough to avoid the operational burden of true streaming.

What Batch Analytics Means

Batch analytics processes accumulated data at scheduled intervals. The batch runs on a schedule – hourly, daily, weekly – transforming raw data into analytics-ready form and updating dashboards or reports.

Hourly batch: Processes the previous hour’s data on a regular schedule. Provides reasonable timeliness for operational decisions while simplifying infrastructure.

Daily batch: The most common approach for business analytics. Data from the previous day becomes available for analysis, typically by early morning. Sufficient for strategic decisions and trend analysis.

Weekly or monthly batch: Appropriate for financial reconciliation, long-term trend analysis, and executive reporting where recency matters less than accuracy and completeness.

The Latency Spectrum

Rather than a binary real-time/batch distinction, analytics exists on a latency spectrum:

| Latency Level | Data Freshness | Typical Use Cases |
|---|---|---|
| Sub-second | Milliseconds | Fraud detection, programmatic bidding |
| Near-real-time | 1-60 seconds | System monitoring, queue management |
| Operational | 1-4 hours | Campaign optimization, traffic routing |
| Daily | 24 hours | Budget allocation, performance review |
| Weekly+ | Days to weeks | Financial analysis, strategic planning |

The infrastructure cost and complexity increase dramatically as you move toward lower latency. A daily batch pipeline might cost $200/month to operate. A true streaming pipeline processing the same data in real-time might cost $2,000-5,000/month – ten to twenty-five times more for the same underlying data.

The cost curve is not linear. Moving from daily batch to hourly refresh might cost an additional $100-200 monthly in compute and orchestration. Moving from hourly to near-real-time adds $1,500-3,000 in streaming infrastructure. The incremental value of each latency improvement must justify its incremental cost – and often, the last mile of latency reduction provides the least business value at the highest marginal cost.

When Real-Time Reporting Creates Genuine Value

Real-time analytics justify their cost when three conditions converge: high velocity of change, high cost of delayed response, and ability to act on the information immediately.

Condition 1: High Velocity of Change

The underlying reality must change fast enough that hourly or daily snapshots miss actionable variation.

High Velocity Scenarios

Several lead generation metrics genuinely move fast enough to warrant real-time attention. Traffic source performance during campaign launch exemplifies this – conversion rates can shift 50% within hours as audience segments exhaust or competitive dynamics change. Buyer capacity during high-volume periods presents similar urgency, as caps fill mid-day and routing decisions become stale. System performance during peak traffic demands immediate visibility because latency spikes directly affect lead quality and buyer experience. Fraud attacks represent perhaps the most time-sensitive scenario; patterns emerge and damage operations within hours if not detected and stopped. Click fraud prevention requires this kind of immediate visibility.

Low Velocity Scenarios

Contrast these with metrics that genuinely do not benefit from real-time visibility. Overall monthly revenue trends rarely shift dramatically within hours – the day-to-day variation is noise, not signal. Buyer payment patterns change over weeks and months, not minutes, driven by invoicing cycles and cash flow decisions that no amount of real-time monitoring accelerates. Lead return rates emerge over days and remain unmeasurable until return windows close – real-time visibility of incomplete return data creates false confidence.

If the underlying metric genuinely does not change on the timescale of your refresh rate, real-time infrastructure provides no additional insight – just higher costs.

Condition 2: High Cost of Delayed Response

The financial impact of discovering a problem later must justify the infrastructure investment to discover it sooner.

Calculate the cost of delayed detection:

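Cost with faster detection = (Problem Duration in Hours) x (Hourly Impact) - (Detection Time Reduction in Hours) x (Hourly Impact)

The second term, (Detection Time Reduction in Hours) x (Hourly Impact), is the saving attributable to faster detection.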

Example calculation:

- A traffic source starts delivering 40% lower quality leads

- The hourly spend on that source is $500

- Daily batch analytics would catch this the next morning (14 hours later)

- Real-time would catch this in 2 hours

- Detection time reduction: 12 hours

- If lower quality costs 50% of spend in waste: 12 hours x $250 = $3,000 saved per incident

If this scenario occurs monthly, the annual value of real-time detection is $36,000 – easily justifying infrastructure investment.
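The arithmetic above can be sketched as a small helper. This is a minimal illustration rather than a prescribed model: the function name `detection_savings` and its parameter names are hypothetical, and the inputs are the figures from the example.

```python
def detection_savings(hourly_spend, waste_fraction,
                      slow_detection_hours, fast_detection_hours,
                      incidents_per_year=1):
    """Estimate spend saved by detecting a problem sooner.

    All names here are illustrative assumptions, not part of any tool.
    Returns (savings per incident, savings per year).
    """
    if fast_detection_hours > slow_detection_hours:
        raise ValueError("fast detection must not be slower than slow detection")
    hourly_impact = hourly_spend * waste_fraction        # dollars wasted per hour
    hours_saved = slow_detection_hours - fast_detection_hours
    per_incident = hours_saved * hourly_impact
    return per_incident, per_incident * incidents_per_year


per_incident, annual = detection_savings(
    hourly_spend=500, waste_fraction=0.5,
    slow_detection_hours=14, fast_detection_hours=2,
    incidents_per_year=12,
)
print(f"Saved per incident: ${per_incident:,.0f}; per year: ${annual:,.0f}")
```

With the example inputs this reproduces the $3,000 per incident and $36,000 per year figures. Changing `waste_fraction` or the detection times shows how sensitive the business case is to each assumption.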

But reverse the calculation for a different scenario:

- Monthly financial reconciliation timing

- Delay in detection has no cost because the data is not actionable until month-end

- Real-time infrastructure cost: $3,000/month

- Value of real-time: zero dollars

The math must work. Emotionally preferring fresher data does not justify economically irrational infrastructure spending.

Condition 3: Ability to Act Immediately

Real-time data only creates value when someone or something can respond to it in real-time.

When Immediate Action Is Possible

Some operational scenarios genuinely support real-time response. Automated systems that pause campaigns when KPIs breach thresholds can act within seconds – no human approval required. On-call operators monitoring dashboards during business hours can investigate and adjust within minutes. API integrations that adjust bidding or routing based on live data respond algorithmically to changing conditions. In these scenarios, faster data translates directly to faster response.
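As a rough sketch of the automated case, the check below decides whether a campaign should be paused when its conversion rate falls too far below baseline. Everything here is an assumption for illustration: the `CampaignStats` fields, the 20% drop threshold, and the minimum-traffic guard are hypothetical, and the actual pause call to an ad platform is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    campaign_id: str
    baseline_conversion_rate: float  # e.g. trailing average, as a fraction
    visits: int                      # visits in the current monitoring window
    conversions: int                 # conversions in the same window


def should_pause(stats, max_drop=0.20, min_visits=200):
    """Return True when conversion rate has dropped by max_drop or more.

    min_visits guards against reacting to tiny, noisy samples.
    Thresholds are illustrative assumptions, not recommendations.
    """
    if stats.visits < min_visits or stats.baseline_conversion_rate <= 0:
        return False
    current_rate = stats.conversions / stats.visits
    drop = 1 - current_rate / stats.baseline_conversion_rate
    return drop >= max_drop


# A 23% drop against a 5% baseline, as in the opening scenario.
dropped = CampaignStats("cmp-1", baseline_conversion_rate=0.05,
                        visits=10_000, conversions=385)
print(should_pause(dropped))
```

The minimum-traffic guard matters as much as the threshold: without it, an automated rule would pause campaigns on the strength of a handful of visits.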

When Immediate Action Is Not Possible

Many business decisions cannot happen in real-time regardless of data availability. Strategy decisions requiring executive approval move at the speed of calendars and meetings, not dashboards. Budget allocation changes with weekly approval cycles cannot accelerate beyond those cycles. Vendor negotiations based on performance data unfold over conversations and contracts. Compliance investigations require documentation review that inherently takes time.

A real-time dashboard showing a problem at 2 AM provides no value if no one can act until 9 AM. The same insight from a 6 AM batch job arrives in time for the same response window – at lower cost.

High-Value Real-Time Use Cases in Lead Generation

Based on these criteria, several lead generation scenarios typically justify real-time analytics investment.

Campaign Launch Monitoring

New campaigns carry high uncertainty. Conversion rates, lead quality, and traffic patterns are unknown until tested. Real-time visibility during the first 24-48 hours enables rapid adjustment before significant budget waste. A campaign burning $500/hour on traffic that converts at half the expected rate wastes $6,000 if the problem is not caught until the next morning’s batch report. Real-time detection within two hours limits that waste to $1,000. Early detection of poor performance can prevent tens of thousands of dollars in wasted spend during launch phases.

System Health and Performance

Processing latency, API response times, queue depths, and error rates require immediate visibility. A system degradation that increases processing time from 2 seconds to 30 seconds destroys speed-to-lead and damages buyer relationships. Buyers expect leads delivered within seconds of capture; when processing backlogs, lead value decays and SLA violations accumulate. The five-minute rule documents how quickly contact rates decay with delayed response. Minutes of system downtime during peak hours can cost thousands in lost leads and buyer SLA penalties – making system health the most universally justified real-time use case.

Fraud Detection and Prevention

Fraud patterns emerge quickly. A sophisticated bot might generate hundreds of fake leads before pattern detection catches up. Real-time monitoring with automated response limits damage by catching anomalies (sudden volume spikes from single IP ranges, impossible form completion speeds, or coordinated submission patterns) before they compound. Fraudulent leads waste processing resources, damage buyer relationships, and create compliance risk. Early detection limits all three.
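Two of the anomaly signals mentioned above can be sketched with simple rules. This is a minimal illustration under stated assumptions: leads are plain dictionaries with hypothetical `id`, `ip`, and `fill_seconds` fields, IP addresses are IPv4 dotted strings grouped by their first three octets, and both thresholds are placeholders rather than tuned values.

```python
from collections import Counter


def ip_prefix(ip):
    """Group IPv4 addresses by their first three octets (assumed /24 range)."""
    return ".".join(ip.split(".")[:3])


def flag_suspicious(leads, max_per_prefix=20, min_fill_seconds=3.0):
    """Return (lead id, reasons) for leads matching simple fraud heuristics."""
    per_prefix = Counter(ip_prefix(lead["ip"]) for lead in leads)
    flagged = []
    for lead in leads:
        reasons = []
        if per_prefix[ip_prefix(lead["ip"])] > max_per_prefix:
            reasons.append("volume_spike")   # too many leads from one IP range
        if lead["fill_seconds"] < min_fill_seconds:
            reasons.append("too_fast")       # form completed implausibly quickly
        if reasons:
            flagged.append((lead["id"], reasons))
    return flagged


window = [{"id": f"a{i}", "ip": f"10.0.0.{i}", "fill_seconds": 30.0}
          for i in range(25)]
window.append({"id": "b1", "ip": "192.168.1.5", "fill_seconds": 1.0})
window.append({"id": "c1", "ip": "172.16.0.9", "fill_seconds": 40.0})
flagged = flag_suspicious(window)
print(len(flagged))
```

Rules like these only pay off in real time if they run over a short rolling window of recent leads; run nightly, they document the attack instead of stopping it.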

Capacity and Threshold Management

Daily buyer caps, budget limits, and inventory constraints require real-time awareness to optimize allocation. Discovering at 6 PM that a buyer’s cap filled at 2 PM means four hours of leads were routed to inferior alternatives – perhaps sold for $20 instead of $35, or worse, rejected entirely. Optimal routing increases revenue per lead by ensuring leads reach highest-paying available buyers at all times, not just when batch reports reveal capacity changes.
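Cap-aware routing can be sketched as choosing the highest-paying buyer that still has capacity. This is an illustrative sketch only: the buyer dictionaries and their `name`, `price`, `daily_cap`, and `sold_today` fields are hypothetical, and real routing typically weighs more than price.

```python
def route_lead(buyers):
    """Return the name of the highest-paying buyer with remaining capacity.

    Returns None when every buyer has reached its daily cap.
    """
    available = [b for b in buyers if b["sold_today"] < b["daily_cap"]]
    if not available:
        return None
    return max(available, key=lambda b: b["price"])["name"]


buyers = [
    {"name": "premium", "price": 35, "daily_cap": 100, "sold_today": 100},
    {"name": "fallback", "price": 20, "daily_cap": 500, "sold_today": 40},
]
print(route_lead(buyers))  # premium is capped, so the lead goes to fallback
```

The routing decision is only as good as `sold_today`. If that counter is hours stale, the function either keeps sending leads to a buyer that will reject them or skips a buyer whose cap has reset.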

Competitive Bidding Environments

In ping/post marketplaces and real-time bidding scenarios, split-second awareness of market conditions affects yield. Operators need visibility into win rates, bid distributions, and price dynamics as they happen. When a competitor adjusts bid strategy mid-day, operators without real-time visibility lose auctions at prices they would have willingly paid, had they known. Pricing optimization in competitive markets can improve margins 5-15% compared with operating on delayed information.

When Batch Analytics Serves Equally Well

Batch analytics often provides the same business value as real-time at a fraction of the cost. Recognizing these scenarios prevents over-engineering.

Strategic Decision Making

Strategic decisions (budget allocation, vendor selection, market entry, team expansion) happen on weekly, monthly, or quarterly cycles. Receiving data 23 hours sooner than a daily batch would deliver it adds no value if the decision meeting happens once per week.

For strategic analytics, daily batch refresh is almost always sufficient. Data completeness and accuracy matter more than freshness because strategic decisions depend on understanding patterns, not reacting to moments. Historical trends provide more insight than momentary snapshots – knowing that a source has declined 15% over four weeks matters more than knowing its conversion rate changed 0.3% in the past hour.

Financial Analysis and Reconciliation

Financial truth emerges over time. Revenue recognition, return rates, payment collection, and margin calculation all require data that only becomes complete after time passes.

Consider return rate calculation. A lead sold today might be returned anytime in the 7-14 day return window – meaning “real-time return rate” is meaningless because returns have not happened yet. Understanding lead return rate benchmarks requires this time-lagged perspective. Accurate return rates require waiting for the return window to close completely. Daily batch processing on 14-day-lagged data provides actionable insight; real-time processing on incomplete data provides misleading numbers that encourage poor decisions.

The same logic applies to lifetime value calculations, which require months of downstream conversion and revenue data. Payment collection rates require aging analysis – knowing what percentage of invoices paid within 30, 60, or 90 days demands waiting for those windows to close. True profitability by source requires fully-loaded cost allocation that only becomes available after accounting periods close. Rushing these calculations to real-time does not improve accuracy – it degrades it.

Trend Analysis and Pattern Recognition

Trends emerge over time. A single hour’s data is noise; a week’s data starts to show patterns; a month’s data reveals trends.

For trend analytics, daily or weekly aggregation smooths noise and reveals underlying patterns. Statistical significance requires sample size – a conversion rate calculated from 50 leads carries enormous confidence intervals, while the same rate from 5,000 leads provides actionable precision. Real-time trend monitoring creates anxiety about variation that has no statistical meaning.
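The sample-size point can be made concrete with a confidence interval. The sketch below uses the Wilson score interval, which is one common choice for proportions and is my choice here rather than something the text prescribes. The 10% conversion rate is a hypothetical figure picked so both samples show the same observed rate.

```python
import math


def wilson_interval(conversions, leads, z=1.96):
    """Wilson score interval for a conversion rate (z=1.96 is roughly 95%)."""
    if leads <= 0:
        raise ValueError("leads must be positive")
    p = conversions / leads
    z2 = z * z
    denom = 1 + z2 / leads
    center = (p + z2 / (2 * leads)) / denom
    half_width = z * math.sqrt(p * (1 - p) / leads + z2 / (4 * leads ** 2)) / denom
    return center - half_width, center + half_width


small = wilson_interval(5, 50)        # 10% observed from 50 leads
large = wilson_interval(500, 5000)    # 10% observed from 5,000 leads
print(f"50 leads:    {small[0]:.1%} to {small[1]:.1%}")
print(f"5,000 leads: {large[0]:.1%} to {large[1]:.1%}")
```

The interval from 50 leads is roughly ten times wider than the one from 5,000, which is why an hourly rate computed on a few dozen leads rarely supports a decision on its own.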

Consider an operator watching real-time conversion rates bounce between 2.1% and 2.7% throughout the day. Each fluctuation triggers concern. Should we pause the campaign? Is something wrong with the landing page? Daily analysis reveals a different story: conversion rate is steady at 2.4% with normal hourly variation well within expected bounds. Real-time creates false signals; batch creates clarity.

External Data Integration

Many valuable data sources only update on batch schedules regardless of your infrastructure choices. Google Ads API data can lag 3-24 hours depending on which metrics you query – conversion data particularly lags as it awaits attribution windows. CRM downstream conversion data flows on sales cycles, not real-time; knowing whether a lead eventually purchased requires waiting for sales teams to update records. Financial system data typically batches overnight as accounting systems close daily books. Third-party data enrichment processes in batches, often daily, as providers aggregate and verify information.

Building real-time infrastructure that then waits for daily external data creates the worst of both worlds: high cost and delayed insight. The analytics are only as fresh as the slowest data source.

Compliance and Documentation

Compliance reporting requires complete, accurate, verified data – not fast data. TCPA documentation, consent records, and regulatory reporting need audit trails and validation that batch processing provides.

Real-time compliance dashboards might show current consent capture rates, but compliance verification requires more. Complete documentation assembly means gathering consent records, call recordings, and form submissions into coherent packages. Cross-system reconciliation ensures lead counts match across capture, validation, and distribution systems. Audit trail verification confirms the chain of custody for every data point. Manual review for edge cases – partial consents, amended records, disputed contacts – requires human judgment that cannot be automated.

These processes are inherently batch-oriented. Attempting to force them into real-time creates incomplete compliance pictures that provide false reassurance.

Infrastructure Requirements and Cost Comparison

The decision between real-time and batch is not purely strategic – it carries significant infrastructure and operational cost implications.

Batch Analytics Infrastructure

A well-designed batch analytics stack for lead generation typically includes four core components that work together predictably.

Data Warehouse

Cloud warehouses like BigQuery, Snowflake, or Redshift store historical data and handle analytical queries. For lead generation workloads processing hundreds of thousands to millions of leads monthly, typical costs run $200-500/month. These platforms scale predictably with data volume – you can forecast costs based on storage and query patterns.

ETL/ELT Tooling

Scheduled data movement from source systems to the warehouse requires extraction and transformation tooling. Options range from managed services like Fivetran and Stitch to open-source alternatives like Airbyte, with dbt Cloud handling transformation logic. Custom scripts remain viable for simpler needs. Costs typically run $100-500/month depending on connector count and data volume.

BI Platform

The visualization layer reads from the warehouse and presents insights to users. Options span the cost spectrum: Looker Studio is free, Power BI runs $10/user/month, and Tableau commands $70/user/month. Total cost depends on platform choice and user count, typically ranging from $0-500/month for small to mid-sized operations.

Orchestration

Job scheduling and monitoring ensures data pipelines run reliably. Options include Airflow, Dagster, dbt Cloud’s built-in scheduling, and cloud-native schedulers from AWS, GCP, or Azure. Costs range from free (self-managed open source) to $200/month for managed services.

Total batch infrastructure cost: $300-1,500/month for typical lead generation operations.

Real-Time Analytics Infrastructure

Real-time processing adds components and complexity beyond the batch foundation.

Stream Processing

Event ingestion and processing requires streaming infrastructure to capture, buffer, and route events as they occur. Options include Apache Kafka (the industry standard), Amazon Kinesis, Google Pub/Sub, and Confluent Cloud (managed Kafka). Costs run $500-2,000/month depending on throughput volume and retention requirements.

Real-Time Processing Layer

Stream transformation and aggregation handles the logic that converts raw events into meaningful metrics. Apache Flink leads in capability, with Spark Streaming, Kafka Streams, and Materialize offering alternatives at different complexity and cost points. Managed services typically cost $500-3,000/month, with self-managed options requiring equivalent engineering time.

Time-Series Database

Storing and querying real-time metrics demands specialized databases optimized for time-stamped data and recent queries. InfluxDB, TimescaleDB, ClickHouse, and Prometheus serve different use cases and scale profiles. Costs range from $200-1,000/month depending on data volume and query complexity.

Real-Time Dashboarding

Low-latency visualization requires tooling designed for sub-minute refresh. Grafana dominates this space for operational metrics, with custom builds and specialized real-time BI tools serving specific needs. Costs run $100-500/month.

Increased Compute Overhead

Real-time requires always-on processing – systems must run continuously rather than spinning up for scheduled jobs. This creates a typical 2-3x compute cost premium compared to equivalent batch workloads.

Total real-time infrastructure cost: $2,000-8,000/month for comprehensive real-time capability.

The cost differential – 5x to 10x higher for real-time – must be justified by proportionally higher business value.

Operational Complexity Considerations

Beyond infrastructure cost, real-time systems require more operational attention that translates to ongoing labor expense.

On-call requirements illustrate the difference starkly. Real-time systems failing at 2 AM require immediate response – someone must be available to restore data flow before the gap becomes noticeable. Batch failures can wait until business hours because the next scheduled run accommodates minor delays.

Data quality monitoring becomes more urgent in real-time environments. Quality issues propagate instantly to dashboards and downstream systems. Batch processing provides opportunities for validation before publication – catching anomalies before users see misleading numbers.

Schema evolution presents unique challenges for streaming systems. Changing data structures in batch pipelines means updating the next scheduled job. Changing structures in streaming requires careful coordination to avoid breaking in-flight events and corrupting downstream state.

Debugging difficulty increases substantially. Troubleshooting streaming systems requires understanding distributed state, replay mechanics, and timing issues. Batch job debugging is more straightforward – rerun with logging enabled and examine the output.

Team capability requirements follow from all the above. Real-time infrastructure requires data engineering expertise that batch analytics may not demand. Finding and retaining engineers with streaming experience commands premium compensation.

These operational burdens compound over time. An organization choosing real-time should plan for ongoing operational investment, not just initial build cost.

Accuracy Trade-offs Between Approaches

Data freshness and data accuracy often trade against each other. Understanding these trade-offs prevents poor decisions based on fast-but-wrong numbers.

The Latency-Accuracy Trade-off

Real-time data is, by definition, incomplete. Consider how a single lead’s data completeness evolves in a ping/post distribution scenario.

At T+0 (real-time), the lead has been captured and that fact is known. Validation may or may not be complete – some validators run asynchronously. The lead has probably been posted to buyers, but acceptance remains unknown as responses are still pending. Revenue is unknown, and return status cannot even begin measurement since the return window has not started.

At T+1 hour, the picture clarifies. All validation is complete. Buyer acceptance is mostly known, though some slow responders may still be pending. Revenue has been booked to initial estimates.

At T+14 days, the data achieves completeness. The return window has closed, revealing which leads were actually kept versus returned. Actual revenue is confirmed – not estimated, but real. Data accuracy reaches its peak because every downstream event has resolved.

The operator monitoring “real-time CPL” at T+0 is monitoring a fantasy number. Actual CPL requires complete return data that only exists after the return window closes.
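One way to see the gap is to compute cost per lead before and after returns are known. This sketch assumes one particular reading of "actual CPL", namely spend divided by leads that were sold and not returned. The function name and the sample figures are hypothetical.

```python
def cost_per_kept_lead(spend, leads_sold, leads_returned=0):
    """Spend divided by leads sold and not returned (an assumed definition)."""
    kept = leads_sold - leads_returned
    if kept <= 0:
        raise ValueError("no kept leads to divide by")
    return spend / kept


# Hypothetical day: $10,000 spend, 500 leads sold, 60 returned within the window.
at_t0 = cost_per_kept_lead(10_000, 500)             # returns not yet known
after_window = cost_per_kept_lead(10_000, 500, 60)  # return window closed
print(f"T+0: ${at_t0:.2f}  after return window: ${after_window:.2f}")
```

In this hypothetical the T+0 figure understates the eventual cost by more than 10%, and nothing available at T+0 reveals by how much.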

Dealing with Late-Arriving Data

In distributed systems, data arrives out of order and late. A conversion that happened at 9:00 AM might not appear in tracking systems until 9:47 AM due to client-side buffering and batch transmission; network latency and retry delays; backend processing queues; and third-party system batch exports. The 47-minute gap between reality and data is not unusual; it is the norm.

Real-time dashboards must decide how to handle late arrivals, and each option carries trade-offs.

Ignoring Late Data

The simplest approach ignores late data until the next reporting period. Implementation is straightforward, but early periods are chronically understated. The period in which the conversion actually happened looks low because the 9:47 arrival is counted toward a later period, if it is counted at all. Over time, this creates reconciliation headaches as real-time reports never match eventual truth.

Backfilling Historical Periods

The more sophisticated approach backfills and restates historical periods as late data arrives. Accuracy improves over time, but this creates a “moving target” problem. The 9 AM number changes at 9:47, then again if more late data arrives. Users see different numbers at different times for the same period, creating confusion about which number to trust.

Watermarking with Known Lag

A compromise approach shows data with explicit lag acknowledgment – “Data complete through 15 minutes ago.” This is honest about incompleteness but still cannot solve the fundamental problem that certain metrics require time to become measurable.
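The watermark idea can be sketched by bucketing events by when they happened, counting only those that have arrived, and marking a bucket complete once its end is older than the allowed lag. This is a simplified illustration and not how any particular stream processor implements watermarks: the event dictionaries, field names, and the 15-minute lag are assumptions.

```python
from collections import Counter
from datetime import datetime, timedelta


def hourly_counts(events, now, allowed_lag=timedelta(minutes=15)):
    """Count arrived events per event-time hour and flag complete hours."""
    counts = Counter(
        e["event_time"].replace(minute=0, second=0, microsecond=0)
        for e in events
        if e["arrival_time"] <= now          # only data that has arrived so far
    )
    watermark = now - allowed_lag            # "data complete through" this time
    return {
        hour: {"count": n, "complete": hour + timedelta(hours=1) <= watermark}
        for hour, n in counts.items()
    }


def at(hour, minute):
    return datetime(2024, 1, 2, hour, minute)  # arbitrary example date


events = [
    {"event_time": at(9, 0), "arrival_time": at(9, 47)},   # late arrival
    {"event_time": at(9, 30), "arrival_time": at(9, 31)},
    {"event_time": at(10, 5), "arrival_time": at(10, 6)},
]
early = hourly_counts(events, now=at(9, 40))
later = hourly_counts(events, now=at(10, 20))
print(early[at(9, 0)], later[at(9, 0)])
```

The 9 AM bucket reports one event at 9:40 and two at 10:20, which is the restatement behavior described above. The `complete` flag is only as trustworthy as the lag assumption, since an event arriving later than the allowed lag would still change a bucket already marked complete.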

Batch analytics sidesteps all of this by processing complete time windows. “Yesterday’s data” means all events from yesterday, including late arrivals, fully processed and reconciled. The number will not change tomorrow.

Data Quality in Real-Time vs Batch

Batch processing enables data quality checks that real-time cannot easily provide.

Cross-system reconciliation becomes possible when you can compare complete datasets. Verifying that lead counts match across source, validation, and distribution systems requires seeing all three datasets in their entirety. Real-time shows each system’s numbers before reconciliation – the source shows 1,247 leads, validation shows 1,243, distribution shows 1,239, and users wonder which number is correct. Batch waits until all systems agree on the day’s final count.
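A batch reconciliation check of this kind can be sketched in a few lines. The function name, the choice of the highest count as the reference, and the tolerance parameter are illustrative assumptions. The counts are the ones from the example above.

```python
def reconcile_counts(counts, tolerance=0):
    """Compare lead counts across systems against the highest count.

    Returns whether all systems agree within tolerance, plus each
    system's shortfall relative to the reference.
    """
    if not counts:
        raise ValueError("no systems to reconcile")
    reference = max(counts.values())
    gaps = {system: reference - n for system, n in counts.items()}
    return {"matched": max(gaps.values()) <= tolerance, "gaps": gaps}


result = reconcile_counts({"source": 1247, "validation": 1243, "distribution": 1239})
print(result)
```

Run mid-day, a check like this fails almost constantly because leads are still in flight between systems. Run after the day closes, a non-zero gap points to leads that were genuinely dropped or rejected.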

Anomaly detection works better on complete datasets. Statistical outlier detection requires establishing baselines and confidence intervals. Real-time anomaly detection has higher false positive rates because incomplete data looks anomalous simply because it is incomplete. A source that typically delivers 500 leads by noon might show only 200 at 10 AM – is that an anomaly or just Tuesday’s slower morning pattern?

Manual verification for edge cases becomes possible in batch workflows. Unusual patterns – a source suddenly delivering 5x normal volume, a buyer accepting at unusual rates – can be investigated before appearing in reports. Real-time publishes first and explains later, potentially causing incorrect decisions based on data that would have been flagged in review.

Deduplication across time windows requires seeing complete windows. Detecting duplicates that span hours or days – the same lead submitted at 9 AM and again at 3 PM – requires looking at complete time periods. Real-time might count duplicates twice if they arrive in different processing windows.
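Cross-window deduplication can be sketched as a pass over a complete day of leads. This is a minimal illustration: matching on a normalized email address, the 24-hour lookback, and the lead field names are all assumptions, and production matching usually combines several identifiers.

```python
from datetime import datetime, timedelta


def find_duplicates(leads, window=timedelta(days=1)):
    """Return (earlier id, later id) pairs for repeat submissions within window."""
    last_seen = {}
    duplicates = []
    for lead in sorted(leads, key=lambda l: l["submitted_at"]):
        key = lead["email"].strip().lower()   # assumed matching key
        previous = last_seen.get(key)
        if previous and lead["submitted_at"] - previous["submitted_at"] <= window:
            duplicates.append((previous["id"], lead["id"]))
        last_seen[key] = lead
    return duplicates


day = [
    {"id": "L1", "email": "pat@example.com", "submitted_at": datetime(2024, 1, 2, 9, 0)},
    {"id": "L2", "email": "sam@example.com", "submitted_at": datetime(2024, 1, 2, 11, 0)},
    {"id": "L3", "email": " Pat@Example.com", "submitted_at": datetime(2024, 1, 2, 15, 0)},
]
print(find_duplicates(day))
```

A streaming job that only sees a 60-second micro-batch would treat L1 and L3 as unrelated leads unless it keeps hours of keyed state, which is one of the places streaming complexity comes from.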

For metrics where accuracy matters more than speed – margin calculation, return rates, lifetime value – batch processing produces more reliable numbers.

Hybrid Approaches: Capturing Both Benefits

The most effective analytics architectures combine real-time and batch processing, applying each where it creates value.

The Lambda Architecture

Lambda architecture processes the same data through two parallel paths, combining their outputs to deliver both speed and accuracy.

Speed Layer

The speed layer processes incoming events with minimal latency, providing approximate but fast results. Each new event updates the current picture, overwriting previous calculations. This layer serves queries requiring recency – what is happening right now, even if the answer is imprecise.

Batch Layer

The batch layer processes complete datasets on schedule, providing accurate but delayed results. It creates the authoritative historical record that will not change. This layer serves queries requiring accuracy – what actually happened yesterday, with full confidence in the numbers.

Serving Layer

The serving layer combines speed and batch outputs into a unified view. Recent periods draw from real-time data; historical periods draw from batch-verified data. Users query a single interface that transparently selects the appropriate source.

In lead generation practice, the speed layer shows today’s lead volume and conversion rate updating continuously. The batch layer provides accurate yesterday and prior metrics, updated each morning. The dashboard displays both, clearly labeled so users understand which numbers are estimates versus confirmed actuals.
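The serving layer's selection logic can be sketched as a lookup that prefers batch-verified values for closed days and falls back to the speed layer otherwise. The two dictionaries standing in for the batch and speed views, and the `status` labels, are hypothetical simplifications of what would normally be tables or services.

```python
from datetime import date


def serve_metric(day, batch_view, speed_view, today):
    """Return a metric value labeled as confirmed (batch) or estimate (speed)."""
    if day < today and day in batch_view:
        return {"value": batch_view[day], "status": "confirmed"}
    if day in speed_view:
        return {"value": speed_view[day], "status": "estimate"}
    return None  # no data for that day in either layer


today = date(2024, 1, 3)
batch_view = {date(2024, 1, 2): 1239}                          # reconciled overnight
speed_view = {date(2024, 1, 2): 1247, date(2024, 1, 3): 412}   # running counts

print(serve_metric(date(2024, 1, 2), batch_view, speed_view, today))
print(serve_metric(date(2024, 1, 3), batch_view, speed_view, today))
```

Returning the status alongside the value is what lets a dashboard label estimates and confirmed actuals differently.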

Lambda architecture complexity is its primary drawback. Maintaining two parallel processing paths doubles code and operational burden. Every transformation must be implemented twice, in streaming and batch frameworks that may use different languages and paradigms.

The Kappa Architecture

Kappa architecture simplifies by processing everything as streams while materializing batch-style views from the stream itself. All events flow through a single stream processing system. Historical queries replay from the stream’s persistent log. Batch-style results derive from the stream rather than a separate pipeline.

This approach reduces the duplication inherent in Lambda – one codebase, one processing paradigm – but requires more sophisticated stream processing infrastructure. Kappa works best when the organization has strong streaming expertise and can handle the operational complexity of stream replay for historical queries. For most lead generation operations without dedicated data engineering teams, Kappa represents over-engineering.

Practical Hybrid Patterns for Lead Generation

Most lead generation operations benefit from a pragmatic hybrid that avoids the complexity of formal Lambda or Kappa architectures while capturing their benefits.

Real-Time Tier

The real-time tier operates on 1-5 minute refresh cycles for metrics requiring immediate visibility. System health metrics – latency, error rates, queue depth – belong here because degradation demands immediate response. Processing volumes (leads in, leads out) provide operational awareness. Immediate quality alerts catch validation failure spikes before they compound. Buyer capacity and cap status enable optimal routing decisions throughout the day.

Operational Tier

The operational tier refreshes hourly for metrics that inform same-day decisions. Campaign performance metrics like CPL and conversion rate by source update frequently enough to catch problems but not so frequently as to create noise. Buyer acceptance rates reveal relationship health. Traffic distribution and routing efficiency help operators adjust mid-day. Intra-day pacing against targets enables budget management.

Strategic Tier

The strategic tier refreshes daily for metrics that inform weekly and monthly decisions. Complete daily performance summaries provide the authoritative record. Trend analysis across days and weeks reveals patterns invisible in shorter timeframes. Budget utilization and allocation guide strategic spending. Reconciled financial metrics ensure accuracy over speed.

Financial Tier

The financial tier operates weekly or monthly for metrics requiring complete data. Return rate analysis only becomes meaningful after return windows close. Actual revenue versus booked revenue reconciliation reveals the difference between estimates and reality. Margin analysis with full cost allocation requires accounting period closure. Lifetime value and downstream conversion calculations need months of follow-on data.

This tiered approach puts real-time investment where it creates value – system health, immediate quality – while using efficient batch processing for metrics that do not benefit from minute-level freshness.
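One way to make the tiers concrete is a small configuration that assigns each metric a refresh interval. The sketch below is illustrative: the metric names are hypothetical, the intervals use the upper end of each range described above, and the financial tier is shown as weekly even though some operations will run it monthly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    refresh_minutes: int   # longest acceptable gap between refreshes
    metrics: tuple


# Hypothetical metric names; the tier assignments follow the text above.
TIERS = (
    Tier("real_time", 5, ("latency", "error_rate", "queue_depth",
                          "lead_volume", "validation_failure_rate",
                          "buyer_cap_status")),
    Tier("operational", 60, ("cpl_by_source", "conversion_rate_by_source",
                             "buyer_acceptance_rate", "routing_efficiency",
                             "intraday_pacing")),
    Tier("strategic", 24 * 60, ("daily_summary", "trend_analysis",
                                "budget_utilization")),
    Tier("financial", 7 * 24 * 60, ("return_rate", "revenue_reconciliation",
                                    "margin", "lifetime_value")),
)


def tier_for(metric: str) -> Tier:
    """Look up which tier a metric has been assigned to."""
    for tier in TIERS:
        if metric in tier.metrics:
            return tier
    raise KeyError(f"{metric!r} has no tier assignment yet")
```

Keeping the assignment in one place makes it easy to review which metrics have been promoted to a faster tier and whether they still belong there.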

Implementation Pattern: Start Batch, Add Real-Time Selectively

For operations building analytics capability, a phased approach prevents over-investment while capturing genuine value.

Phase 1: Comprehensive Batch Foundation

Begin with comprehensive batch analytics covering all metrics with daily refresh. Establish complete data quality and reconciliation processes. Build full historical visibility so trends and patterns become visible. This foundation typically costs $300-1,000/month and provides the accuracy baseline against which real-time additions will be measured.

Phase 2: Real-Time Candidate Identification

Before investing in real-time infrastructure, rigorously evaluate candidates. Which metrics genuinely inform hourly decisions? What is the calculated cost of delayed detection for each? Who specifically will act on real-time data, and do they have authority to respond? Document the business case for each potential real-time metric – not in generalities, but in specific dollar terms.

Phase 3: Selective Real-Time Addition

Add real-time capability only for validated use cases, starting with highest-value, lowest-complexity metrics. System health and monitoring typically justify real-time first because the business case is clearest and the infrastructure simplest. Campaign performance during launch periods often merits real-time second. Each addition costs $500-2,000/month in incremental infrastructure.

Phase 4: Continuous Evaluation

Measure actual value captured from real-time visibility against the documented business cases. Expand to additional metrics only where realized value exceeds cost. Regularly prune metrics that moved to real-time but proved unnecessary – real-time capability that no one acts upon is waste.

This incremental approach prevents over-investment while capturing real-time value where it genuinely exists.

Organizational Considerations

Technology choices affect – and are affected by – organizational structure, skills, and culture.

Skill Requirements

Batch analytics requires SQL proficiency, BI tool familiarity with platforms like Tableau, Power BI, or Looker, basic data modeling understanding, and scheduling and orchestration basics. These skills are common and relatively easy to hire or train. Many marketing analysts and operations specialists bring these capabilities, and internal training can develop them in months.

Real-time analytics demands a different skill set. Streaming platforms and stream processing frameworks like Kafka, Kinesis, and Flink require specialized knowledge. Event-driven architecture design differs fundamentally from batch ETL thinking. Time-series database management involves concepts unfamiliar to traditional database administrators. On-call operations experience means comfort with 2 AM alerts and rapid incident response. Distributed systems debugging requires understanding failure modes that simply do not exist in batch processing.

These skills are specialized and command premium compensation. A lead generation operation with a $100K/year analytics budget might afford an analyst comfortable with batch tools. The same budget does not stretch to real-time infrastructure expertise – engineers with streaming experience command $150-200K salaries in most markets.

Decision-Making Culture

Real-time data only creates value when organizational culture supports real-time decision-making.

Organizations that benefit from real-time share common characteristics. Enabled operators can adjust campaigns without seeking approval – they see a problem and fix it. Clear thresholds and automated response protocols define what action to take when metrics breach boundaries. A culture of experimentation and rapid iteration means changes happen daily, not quarterly. On-call coverage during operating hours ensures someone can actually respond to what the data reveals.

Organizations where real-time provides limited value exhibit different patterns. Hierarchical approval requirements for spend changes mean even urgent adjustments wait for sign-off. Weekly or monthly budget allocation cycles constrain response speed regardless of data availability. Risk-averse cultures requiring thorough analysis before action cannot leverage real-time visibility. Limited operator empowerment means the people watching dashboards cannot act on what they see.

Implementing real-time infrastructure for an organization that only makes weekly decisions creates expensive, unused capability. Sometimes the bottleneck is not data freshness – it is decision-making speed.

Change Management for Real-Time Adoption

Moving from batch to real-time reporting changes how people work, and organizations must prepare for this shift.

Training Requirements

Users need new skills to interpret real-time data correctly. Understanding incompleteness is fundamental – today’s numbers are estimates, not facts. Avoiding over-reaction to normal variance prevents wasted effort investigating non-problems. Distinguishing signal from noise at shorter timescales requires statistical intuition that batch reports did not demand. Using appropriate urgency for different metrics means knowing which alerts warrant immediate action versus which can wait for tomorrow’s batch confirmation.

Process Changes

Workflows must adapt to real-time visibility. Establishing escalation thresholds defines what level of deviation triggers escalation versus routine monitoring. Defining who monitors during which hours ensures coverage without burnout. Creating playbooks for common real-time alerts speeds response and ensures consistency. Integrating real-time visibility into decision workflows means connecting dashboards to action – not just displaying numbers.

Cultural Shifts

Mindset changes accompany technical changes. Teams must accept that real-time numbers will change as data completes – the dashboard showing $47 CPL at noon might show $52 CPL tomorrow morning, and both numbers are correct for their context. Trusting operators to make quick decisions requires giving up some control in exchange for speed. Avoiding analysis paralysis from constant data streams means knowing when to stop watching and start acting.

Organizations should budget time and attention for these changes alongside infrastructure investment.

Common Implementation Pitfalls and How to Avoid Them

Organizations implementing analytics infrastructure frequently encounter predictable obstacles that derail projects or limit value realization. Understanding these pitfalls in advance enables proactive mitigation rather than reactive firefighting.

Pitfall 1: Over-Engineering Initial Deployments

Many organizations approach analytics infrastructure with aspirations exceeding immediate needs. They build for anticipated scale that may never materialize, implement features demanded by stakeholders who will never use them, and create architectural complexity that becomes maintenance burden.

The symptom appears as projects that stretch across quarters without delivering usable dashboards. Engineering teams spend months on infrastructure while business teams continue making decisions with spreadsheets. By the time the system launches, requirements have shifted and the carefully designed architecture no longer matches actual needs.

The solution involves starting with minimum viable analytics that answer specific, documented business questions. Build the simplest system capable of answering “what is our CPL by source this week” before attempting real-time fraud detection. Add capability incrementally as proven value justifies investment. A working dashboard that updates daily is far more valuable than a sophisticated streaming system that remains perpetually under development.

Pitfall 2: Dashboard Proliferation Without Governance

Organizations often create dashboards in response to each stakeholder request without considering the broader ecosystem. The result is dozens of dashboards with overlapping metrics calculated differently, no single source of truth, and users who cannot determine which number to trust.

The symptom manifests as meetings derailed by debates about whose dashboard shows the correct number. Finance shows CPL at $47; Marketing shows $42; Operations shows $51. Each dashboard uses slightly different definitions, time ranges, or inclusion criteria. Decisions stall while teams reconcile conflicting data.

The solution requires establishing metric definitions before dashboard creation. Document how each metric is calculated, what data sources it uses, what filters apply, and who owns the definition. Create a central metric catalog that serves as the authoritative reference. When stakeholders request new dashboards, verify whether existing definitions satisfy their needs or whether genuinely new metrics are required.
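A metric catalog does not need special tooling to start. As a rough sketch, assuming a simple in-process registry (`MetricDefinition`, `CATALOG`, and `register` are hypothetical names, and the example definition is illustrative), the catalog can refuse a second definition of the same metric so that conflicts surface at design time rather than in a meeting:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    formula: str     # how the metric is calculated
    sources: tuple   # data sources it reads from
    filters: tuple   # inclusion and exclusion criteria
    owner: str       # who owns the definition


CATALOG = {}


def register(defn: MetricDefinition) -> None:
    """Add a definition, rejecting a second definition of the same metric."""
    if defn.name in CATALOG:
        owner = CATALOG[defn.name].owner
        raise ValueError(f"{defn.name!r} is already defined (owner: {owner})")
    CATALOG[defn.name] = defn


# Illustrative entry; the formula and sources are assumptions.
register(MetricDefinition(
    name="cpl",
    formula="total media cost / accepted leads",
    sources=("ad_platform_costs", "lead_events"),
    filters=("exclude test leads", "accepted leads only"),
    owner="finance",
))
```

A shared document or a table in the warehouse serves the same purpose; what matters is that each metric has exactly one definition and a named owner.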

Pitfall 3: Ignoring Data Quality in Pursuit of Speed

Real-time dashboards can display inaccurate data just as quickly as accurate data. Organizations that prioritize latency without investing in data quality create systems that confidently display wrong numbers – potentially driving poor decisions faster than batch alternatives.

The symptom appears as dashboards showing numbers that fail to reconcile with source systems. The real-time CPL shows $32 while the nightly batch report shows $38. Investigation reveals the real-time system misses certain cost categories, excludes returns that have not yet processed, or double-counts leads split across multiple buyers. Users lose trust in the system and revert to manual reporting.

The solution involves building data quality checks into the pipeline regardless of latency requirements. Implement reconciliation logic that flags discrepancies between real-time aggregates and source totals. Display confidence indicators that communicate data completeness. Accept that some metrics cannot be calculated accurately in real-time and communicate that limitation rather than displaying misleading numbers.
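A reconciliation check can be as simple as comparing the real-time figure with the source-of-truth figure and flagging drift beyond a tolerance. The sketch below assumes a 2% tolerance, which is an arbitrary illustrative value, and uses the $32 versus $38 CPL discrepancy from the example above; `reconcile` is a hypothetical helper.

```python
def reconcile(realtime_value: float, source_value: float,
              tolerance: float = 0.02) -> dict:
    """Compare a real-time aggregate with the source-of-truth value.

    Returns the relative drift and whether it exceeds the tolerance.
    """
    if source_value == 0:
        drift = 0.0 if realtime_value == 0 else float("inf")
    else:
        drift = abs(realtime_value - source_value) / abs(source_value)
    return {"drift": drift, "flagged": drift > tolerance}


# Real-time CPL of $32 against a nightly batch CPL of $38.
check = reconcile(32.0, 38.0)
```

Here the drift is roughly 16%, well past the tolerance, so the metric would be flagged for investigation instead of being displayed as if it were trustworthy.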

Pitfall 4: Underestimating Ongoing Maintenance Requirements

Analytics infrastructure requires ongoing attention that organizations frequently underestimate. Schema changes in source systems break pipelines. Business logic changes require dashboard updates. New data sources need integration. User questions reveal edge cases requiring investigation.

The symptom presents as systems that work initially but degrade over time. Dashboards stop updating because a source table was renamed. Metrics drift from reality as business processes evolve without corresponding analytics updates. Users discover bugs through incorrect decisions rather than through systematic monitoring.

The solution demands budgeting ongoing engineering time for analytics maintenance – typically 20-30% of initial build effort annually for mature systems, higher for systems still evolving. Implement monitoring that alerts when data stops flowing, aggregates deviate from expected ranges, or reconciliation checks fail. Treat analytics infrastructure as a product requiring continuous investment rather than a project with an endpoint.
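Two of the monitoring checks named above, data that stops flowing and aggregates outside expected ranges, can be sketched with the standard library alone. The function names and the 1.5x grace factor are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def is_stale(last_loaded_at: datetime, expected_interval: timedelta,
             now: datetime = None, grace: float = 1.5) -> bool:
    """True when the last load is older than the expected interval plus slack."""
    now = now or datetime.now(timezone.utc)
    return (now - last_loaded_at) > expected_interval * grace


def out_of_range(value: float, low: float, high: float) -> bool:
    """True when an aggregate falls outside its expected range."""
    return not (low <= value <= high)


# A daily pipeline whose last successful load was 40 hours ago.
now = datetime(2024, 1, 3, 16, 0, tzinfo=timezone.utc)
last_load = now - timedelta(hours=40)
stale = is_stale(last_load, timedelta(hours=24), now=now)
```

With a 24-hour interval and a 1.5x grace factor the staleness threshold is 36 hours, so the 40-hour-old load is reported as stale.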

Pitfall 5: Selecting Technology Before Defining Requirements

Organizations sometimes select analytics platforms based on marketing materials, conference presentations, or engineer enthusiasm rather than documented requirements. The chosen tool may excel at capabilities the organization does not need while lacking features that turn out to be essential.

The symptom emerges when implementation reveals gaps between tool capability and business need. The streaming platform handles event processing beautifully but cannot join historical tables required for LTV analysis. The business intelligence tool provides gorgeous visualizations but cannot handle the row-level security requirements for multi-tenant operations. Migration to alternative tools consumes budget that should have funded analytics development.

The solution requires documenting specific requirements before evaluating tools. What questions must the system answer? What data sources must it integrate? What latency is required for each metric? Who needs access and with what permissions? What is the budget for infrastructure and the budget for engineering time? Evaluate tools against documented requirements rather than general capability claims.

Cost-Benefit Decision Framework

This section brings the analysis together into a practical decision framework.

The Four-Question Filter

For each metric or analytics capability, answer four questions that determine appropriate refresh rate.

Question 1: How Fast Does Reality Change?

Metrics that change hourly or faster are real-time candidates – system performance, buyer capacity, active campaign performance during launches. Metrics that change daily merit operational refresh every 1-4 hours – campaign performance during steady state, routing efficiency. Metrics that change weekly or slower work best with daily batch – trends, strategic KPIs, financial summaries.

Question 2: What Is the Cost of Delayed Detection?

Calculate explicitly: hours of delay multiplied by hourly impact, per incident. Compare that cost to the additional real-time infrastructure cost over the same period. If the cost of delayed detection exceeds the infrastructure cost, consider real-time. If the infrastructure cost exceeds the cost of delayed detection, accept the delay. A $500/hour problem caught 12 hours earlier saves $6,000 per incident – at even one incident a month, that easily justifies $2,000/month in infrastructure. A $50/hour problem with the same detection improvement saves $600 per incident – not enough to justify the same infrastructure unless such incidents occur more than three times a month.
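The comparison can be written down as a small function. This sketch assumes one qualifying incident per month unless stated otherwise; the function names are hypothetical.

```python
def delayed_detection_savings(hourly_impact: float, hours_earlier: float,
                              incidents_per_month: float = 1.0) -> float:
    """Monthly savings from catching problems earlier."""
    return hourly_impact * hours_earlier * incidents_per_month


def justifies_realtime(hourly_impact: float, hours_earlier: float,
                       monthly_infra_cost: float,
                       incidents_per_month: float = 1.0) -> bool:
    """True when monthly savings exceed monthly infrastructure cost."""
    savings = delayed_detection_savings(
        hourly_impact, hours_earlier, incidents_per_month)
    return savings > monthly_infra_cost


high = delayed_detection_savings(500, 12)   # $500/hour, caught 12 hours earlier
low = delayed_detection_savings(50, 12)     # $50/hour, same improvement
```

The result is highly sensitive to the incident frequency assumption, so that input deserves the most scrutiny.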

Question 3: Can Anyone Act on Real-Time Information?

Automated response systems represent the strongest real-time case – algorithms can pause campaigns, adjust routing, and block fraudulent patterns without human involvement. Operators available during business hours moderate the case – problems can be caught and addressed same-day, but overnight issues wait for morning. Actions requiring approval or process make real-time pointless – the data arrives faster than anyone can respond to it.

Question 4: Is the Data Complete Enough to Be Useful?

Data available immediately and fully – like lead capture events – is appropriate for real-time display. Data requiring aggregation or enrichment – like conversion rates that need validation completion – suits operational refresh. Data that remains incomplete until time passes – returns, payments, downstream conversions – requires batch processing regardless of infrastructure capability.

Decision Matrix

| Velocity | Detection Cost | Action Capacity | Data Complete | Recommendation |
|---|---|---|---|---|
| High | High | Yes | Yes | Real-time |
| High | High | Yes | No | Operational (hourly) |
| High | High | No | Any | Daily batch |
| High | Low | Any | Any | Daily batch |
| Low | Any | Any | Any | Daily or weekly batch |
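The matrix translates directly into a lookup function. The sketch below reduces each of the four questions to a yes/no input, which is a simplification; `recommend_refresh` is a hypothetical name.

```python
def recommend_refresh(high_velocity: bool, high_detection_cost: bool,
                      can_act: bool, data_complete: bool) -> str:
    """Return the decision matrix recommendation for one metric."""
    if not high_velocity:
        return "Daily or weekly batch"
    if not high_detection_cost:
        return "Daily batch"
    if not can_act:
        return "Daily batch"
    if not data_complete:
        return "Operational (hourly)"
    return "Real-time"


# System health: changes fast, costly to miss, operators can act, data complete.
system_health = recommend_refresh(True, True, True, True)

# Return rate: data stays incomplete until return windows close.
return_rate = recommend_refresh(False, True, True, False)
```

The order of the checks mirrors the table: any single "no" on velocity, detection cost, or action capacity rules out real-time before data completeness is even considered.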

ROI Calculation Template

For real-time investment decisions, calculate expected return explicitly.

Start with annual cost of real-time infrastructure. Technology costs include streaming platforms, real-time databases, and additional compute – typically $24,000-96,000 annually at the $2,000-8,000/month range. Operational labor for on-call coverage, maintenance, and incident response adds to the total. Sum these for total annual cost.

Then estimate annual value of real-time detection. Identify expected incidents per year where faster detection would matter – quality issues, capacity problems, system degradations. Estimate average hours of faster detection compared to batch alternatives. Calculate average cost per hour of delay based on spend rates and impact severity. Annual value equals incidents multiplied by hours saved multiplied by hourly cost.

The decision follows from comparison. If annual value exceeds annual cost, proceed with real-time. If annual cost exceeds annual value, use batch and invest the savings elsewhere – perhaps in better batch analytics, more sources, or improved conversion rates.

Be honest in this calculation. Operators often overestimate incident frequency and underestimate infrastructure costs when emotionally committed to real-time visibility. Challenge assumptions. Review historical incidents to ground estimates in reality rather than fear.
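As a sketch of the template, the function below takes the inputs named above and returns the comparison. The sample figures are illustrative assumptions, not benchmarks: $2,000/month infrastructure, a hypothetical $20,000 per year in operational labor, and ten incidents a year each caught 12 hours sooner at $500 per hour.

```python
def realtime_roi(monthly_infra_cost: float, annual_labor_cost: float,
                 incidents_per_year: float, hours_saved_per_incident: float,
                 cost_per_hour_of_delay: float) -> dict:
    """Compare annual real-time cost with the annual value of faster detection."""
    annual_cost = monthly_infra_cost * 12 + annual_labor_cost
    annual_value = (incidents_per_year * hours_saved_per_incident
                    * cost_per_hour_of_delay)
    return {
        "annual_cost": annual_cost,
        "annual_value": annual_value,
        "net": annual_value - annual_cost,
        "proceed": annual_value > annual_cost,
    }


result = realtime_roi(
    monthly_infra_cost=2_000,
    annual_labor_cost=20_000,      # hypothetical on-call and maintenance cost
    incidents_per_year=10,
    hours_saved_per_incident=12,
    cost_per_hour_of_delay=500,
)
```

With these inputs the annual cost is $44,000 against $60,000 in value. Halving the incident count to five drops the value to $30,000 and reverses the decision, which is why incident frequency should come from historical records.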

Frequently Asked Questions

What is the difference between real-time analytics and batch analytics?

Real-time analytics processes and displays data with minimal delay between when events occur and when insights become available – typically seconds to minutes. Batch analytics processes accumulated data on scheduled intervals – hourly, daily, or weekly. The key distinction is latency: real-time prioritizes speed of insight while batch prioritizes completeness and accuracy. Real-time infrastructure costs 5-10x more than batch processing for equivalent data volumes. The choice depends on whether decisions benefit from fresher data and whether the organization can act on real-time information.

When should lead generation operations invest in real-time analytics?

Invest in real-time when three conditions converge: the underlying metrics change fast enough to matter (hourly or faster), delayed detection carries significant cost (thousands of dollars per hour of delay), and someone or something can act on the information immediately (automated systems or operators with authority to adjust). Common high-value real-time use cases include system health monitoring, campaign launch performance, fraud detection, and buyer capacity management. For most strategic and financial metrics, batch analytics provides equivalent value at lower cost.

How much does real-time analytics infrastructure cost compared to batch?

Batch analytics infrastructure for lead generation typically costs $300-1,500 per month, including data warehouse, ETL tooling, BI platform, and orchestration. Real-time analytics adds stream processing, real-time databases, specialized dashboarding, and higher compute costs, typically reaching $2,000-8,000 per month for comprehensive real-time capability. The 5-10x cost premium requires proportional business value justification. Beyond infrastructure, real-time systems require more operational attention, on-call coverage, and specialized engineering skills – all additional cost factors.

Can I have both real-time and batch analytics?

Yes, and this hybrid approach often provides the best results. The Lambda architecture processes data through parallel real-time and batch paths, using real-time for recent data and batch for accurate historical data. Practical hybrid implementations tier metrics by appropriate refresh rate: real-time for system health and immediate alerts (1-5 minute refresh), operational tier for campaign performance (hourly), strategic tier for trends and planning (daily), and financial tier for reconciled metrics (weekly/monthly). Start with comprehensive batch analytics, then selectively add real-time for metrics where the business case justifies the additional investment.

What metrics should be tracked in real-time versus batch?

Real-time candidates include system health metrics (latency, error rates, queue depth), processing volumes, immediate quality alerts, buyer capacity status, and fraud indicators. Batch is appropriate for financial metrics (revenue, margin, true CPL), return rate analysis (requires waiting for return windows to close), trend analysis (requires historical comparison), lifetime value calculations, and any metrics requiring cross-system reconciliation. The determining factors are: how fast the metric changes, cost of delayed detection, ability to act immediately, and whether the data is complete enough to be meaningful in real-time.

How does real-time analytics handle late-arriving data?

Late-arriving data is a fundamental challenge in real-time systems. Events might appear minutes or hours after they occurred due to buffering, network delays, or third-party system batch exports. Real-time systems handle this in one of three ways: ignoring late data until the next period (simple but understates early periods), backfilling and restating historical periods (more accurate but creates a “moving target” where numbers change), or using watermarking that explicitly shows data completeness (“complete through 15 minutes ago”). Batch analytics sidesteps this by processing complete time windows after all data has arrived, producing more stable and accurate numbers.
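The backfilling and watermarking options can be combined in a short sketch. This is a simplified illustration, not how any particular stream processor implements watermarks: the watermark is taken as the latest event time seen minus a fixed allowed lateness, and `WatermarkedCounter` with its 5-minute windows and 15-minute lateness are assumptions.

```python
from collections import defaultdict


class WatermarkedCounter:
    """Counts events per time window and labels windows complete or provisional."""

    def __init__(self, window_seconds: int = 300, allowed_lateness: int = 900):
        self.window_seconds = window_seconds
        self.allowed_lateness = allowed_lateness
        self.counts = defaultdict(int)
        self.max_event_time = 0

    def add(self, event_time: int) -> None:
        # A late event is backfilled into the window where it actually occurred.
        window_start = event_time - event_time % self.window_seconds
        self.counts[window_start] += 1
        self.max_event_time = max(self.max_event_time, event_time)

    @property
    def watermark(self) -> int:
        # Data is treated as complete up to this event time.
        return self.max_event_time - self.allowed_lateness

    def report(self) -> list:
        """Return (window_start, count, is_complete) tuples in time order."""
        return [
            (start, n, start + self.window_seconds <= self.watermark)
            for start, n in sorted(self.counts.items())
        ]


counter = WatermarkedCounter()
for t in (10, 320, 1300, 350):   # the event at t=350 arrives out of order
    counter.add(t)
report = counter.report()
```

A dashboard built on this report can show the first window as final and the later windows as provisional, which is the "complete through 15 minutes ago" message in practice.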

What skills does my team need for real-time versus batch analytics?

Batch analytics requires SQL proficiency, BI tool familiarity (Tableau, Power BI, Looker), basic data modeling, and scheduling basics – skills that are common and relatively accessible. Real-time analytics requires stream processing expertise (Kafka, Flink, Kinesis), event-driven architecture design, time-series database management, on-call operations experience, and distributed systems debugging – specialized skills commanding premium compensation. Operations should honestly assess team capability before committing to real-time infrastructure. Sometimes the real bottleneck is team skill rather than technology choice, and the better investment is upskilling or hiring.

How do I avoid over-reacting to real-time data variance?

Normal variance looks like problems when viewed at short timescales. A conversion rate bouncing between 2.1% and 2.7% hour-to-hour might be completely normal statistical variation, not a signal requiring intervention. Mitigation strategies include: using longer moving averages that smooth noise (7-day rolling average instead of hourly spot checks), establishing statistically-informed thresholds (only alert when deviation exceeds 2 standard deviations), displaying confidence intervals alongside point estimates, training operators to understand expected variance, and using batch-confirmed data for strategic decisions while reserving real-time for operational alerts.
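The two-standard-deviation rule can be sketched with the standard library. The history values below are illustrative hourly conversion rates in the 2.1% to 2.7% range from the example; `should_alert` is a hypothetical helper.

```python
from statistics import mean, stdev


def should_alert(history: list, current: float, k: float = 2.0) -> bool:
    """Alert only when current deviates more than k standard deviations."""
    if len(history) < 2:
        return False          # not enough data to estimate variance
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) > k * sigma


# Illustrative hourly conversion rates (percent).
history = [2.1, 2.7, 2.4, 2.3, 2.6, 2.2, 2.5]

normal_hour = should_alert(history, 2.7)   # top of the usual range
bad_hour = should_alert(history, 1.8)      # well below the usual range
```

The 2.7% reading stays inside the band and raises no alert, while the 1.8% reading falls outside it. A longer history gives a steadier estimate of normal variance, and the threshold multiplier can be raised for metrics where false alarms are costly.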

What is the accuracy trade-off between real-time and batch?

Real-time data is inherently incomplete. A lead captured one minute ago might not have completed validation, received buyer acceptance, or booked revenue – let alone return status, which only emerges after the return window closes. “Real-time CPL” is an estimate based on incomplete data; “true CPL” requires waiting for all returns. Batch processing enables data quality checks, cross-system reconciliation, and deduplication across time windows that real-time cannot provide. For metrics where accuracy matters more than speed – margin calculation, return rates, lifetime value – batch processing produces more reliable numbers. For metrics where directional awareness matters more than precision – system health, volume monitoring – real-time provides appropriate visibility.

How should I phase in analytics capability?

Start with comprehensive batch analytics: daily refresh for all metrics, complete data quality processes, and full historical visibility. This creates a solid foundation at modest cost ($300-1,000/month). Next, identify real-time candidates by documenting which metrics inform hourly decisions, calculating the cost of delayed detection, and determining who will act on real-time data. Add real-time for validated use cases only, typically starting with system health monitoring and campaign launch performance. Evaluate actual value captured before expanding. This incremental approach prevents over-investment while capturing real-time value where it genuinely exists.

What organizational changes accompany real-time analytics adoption?

Real-time data changes how people work. Training needs include interpreting incomplete data correctly, avoiding over-reaction to normal variance, and using appropriate urgency for different metrics. Process changes include establishing escalation thresholds, defining monitoring coverage by hour, creating playbooks for common alerts, and integrating real-time visibility into decision workflows. Cultural shifts include accepting that real-time numbers will change as data completes, trusting operators to make quick decisions, and avoiding analysis paralysis from constant data streams. Organizations should budget time for these changes alongside infrastructure investment – technology without process change produces expensive unused capability.

Analytics Maturity: A Progressive Framework

Lead generation operations progress through predictable maturity stages as analytics capabilities develop. Understanding your current position and the path forward helps prioritize investments and avoid capability gaps that constrain growth.

Stage 1: Reactive Reporting

Most operations begin here. Analytics consists of spreadsheets, platform-native reporting, and manual data collection. Questions like “what happened last week” require hours of data gathering. Trends are identified retrospectively, often too late for intervention.

Characteristics of this stage include reliance on platform exports such as Google Ads and Facebook reports, spreadsheet-based aggregation and analysis, weekly or monthly reporting cadence, limited ability to correlate data across systems, and decisions based on gut feel supplemented by delayed data.

The path forward involves centralizing data into a single repository. Even a simple data warehouse loaded nightly transforms analytical capability. The first priority is answering basic questions quickly rather than attempting sophisticated analysis.

Stage 2: Operational Visibility

Operations at this stage have centralized data and can answer operational questions within hours rather than days. Daily dashboards provide current performance visibility. Basic alerting notifies operators of significant deviations.

Characteristics include a data warehouse with daily refresh, standard dashboards for key metrics, the ability to slice performance by source, buyer, and geography, threshold-based alerts for major deviations, and weekly business reviews supported by reliable data.

The path forward involves identifying specific high-value use cases where faster data would change decisions. Implement targeted real-time capability for those use cases rather than attempting comprehensive real-time coverage.

Stage 3: Proactive Operations

Organizations at this stage combine batch analytics for strategic decisions with real-time visibility for operational response. Automated systems respond to routine conditions. Human attention focuses on exceptions and strategic direction.

Characteristics include a hybrid real-time and batch architecture, automated campaign management based on performance thresholds, real-time system health monitoring, anomaly detection that surfaces unusual patterns, and a data-driven decision culture with clear metrics ownership.

The path forward involves investing in predictive capability – using historical patterns to anticipate future states. Machine learning models begin supplementing rule-based automation.

Stage 4: Predictive Intelligence

Mature operations use historical data to predict future outcomes and optimize proactively. Lead scoring predicts conversion probability. Budget allocation algorithms optimize spend across channels. Quality models identify high-risk leads before delivery.

Characteristics include predictive models integrated into operational workflows, continuous experimentation with systematic learning, real-time optimization based on predicted outcomes, sophisticated attribution modeling, and analytics as a competitive advantage rather than a cost center.

Few lead generation operations reach this stage. Those that do typically have dedicated data science resources, significant historical data, and organizational commitment to data-driven operations.

Key Takeaways

- Real-time and batch analytics exist on a latency spectrum, not as binary options. True streaming (sub-second), near-real-time (seconds to minutes), operational (hours), and batch (daily or longer) each serve different purposes. Understanding where on this spectrum each metric belongs prevents over-engineering infrastructure and under-serving genuine needs.

- Real-time analytics justifies investment only when three conditions converge. The underlying data must change fast enough to matter (hourly or faster), delayed detection must carry significant cost, and someone or something must be able to act immediately. When any condition is absent, batch analytics serves equally well at a fraction of the cost.

- Real-time infrastructure costs 5-10x more than batch processing. A comprehensive batch analytics stack runs $300-1,500 per month while real-time adds streaming infrastructure, real-time databases, and higher compute costs reaching $2,000-8,000 per month. Operational burden compounds this: real-time requires on-call coverage, specialized skills, and more complex debugging.

- Faster data is not always more accurate data. Real-time metrics are inherently incomplete. Return rates, payment collection, and margin calculation require data that only exists after time passes. Rushing these calculations to real-time degrades accuracy. Use real-time for directional awareness, batch for precision metrics.

- Hybrid architectures capture benefits of both approaches. Process system health and immediate alerts in real-time, campaign performance hourly, strategic metrics daily, and financial reconciliation weekly. This tiered approach puts real-time investment where it creates value while using efficient batch processing elsewhere.

- Late-arriving data complicates real-time accuracy. Events arrive out of order due to buffering, network delays, and third-party batch exports. Real-time systems must choose between ignoring late data (understating early periods), backfilling (creating “moving target” numbers), or watermarking (honest about completeness). Batch sidesteps this by processing complete windows.

- Organizational readiness determines real-time value. Real-time data only creates value when decision-making culture supports rapid response. Hierarchical approval requirements, weekly budget cycles, and risk-averse cultures limit real-time value regardless of technical capability. Sometimes the bottleneck is not data freshness but decision-making speed.

- Start with batch, add real-time selectively. Build comprehensive batch analytics first. Identify real-time candidates based on specific business case calculations. Add real-time only where documented value exceeds cost. Evaluate and prune regularly. This incremental approach prevents expensive unused capability.

- Calculate ROI explicitly before committing to real-time. Estimate annual infrastructure and operational cost of real-time. Estimate value from faster detection: incident frequency times hours saved times hourly cost of delay. If value exceeds cost, proceed. If not, invest the difference elsewhere. Be honest – operators often overestimate incident frequency when emotionally committed to real-time.

- Different metrics belong at different tiers. System health metrics warrant real-time monitoring. Campaign performance during launch deserves hourly attention. Budget allocation decisions need daily batch data. Financial reconciliation requires weekly or monthly batch with complete return window data. Match refresh rate to decision cycle, not to technical capability.

The most sophisticated analytics operations recognize that data freshness is a means, not an end. The goal is not to see data faster but to make better decisions. Sometimes better decisions come from faster data. Often they come from more accurate data, better visualization, or clearer analysis of the data already available. Invest in real-time where it creates measurable value. Invest in analytics quality everywhere.